Aikeke AI frontier picks (2.22)

2022-06-21 15:17:00 【Zhiyuan community】

LG - Machine Learning, CV - Computer Vision, CL - Computation and Language, AS - Audio and Speech, RO - Robotics, AI - Artificial Intelligence, SD - Sound

Reposted from 爱可可-爱生活

1、[AI] Axiomatizing consciousness, with applications

H Barendregt, A Raffone

[Radboud University & Sapienza University]

Axiomatizing consciousness, with applications. Drawing on Buddhist insight meditation and psychology, logic in computer science, and cognitive neuroscience, the paper introduces consciousness axiomatically as a stream of configurations that is compound, discrete, and (non-deterministically) computable. Within this framework, notions such as self, concentration, mindfulness, and various forms of suffering can be defined. As an application, the paper shows how a combined development of concentration and mindfulness can attenuate and eventually eradicate some forms of suffering.

Consciousness will be introduced axiomatically, inspired by Buddhist insight meditation and psychology, logic in computer science, and cognitive neuroscience, as consisting of a stream of configurations that is compound, discrete, and (non-deterministically) computable. Within this context the notions of self, concentration, mindfulness, and various forms of suffering can be defined. As an application of this set up, it will be shown how a combined development of concentration and mindfulness can attenuate and eventually eradicate some of the forms of suffering.

2、[CL] SGPT: GPT Sentence Embeddings for Semantic Search

N Muennighoff

[Peking University]

SGPT: GPT sentence embeddings for semantic search. GPT Transformers are the largest language models available, yet semantic search is dominated by BERT Transformers. This paper presents SGPT-BE and SGPT-CE, which apply GPT models as Bi-Encoders or Cross-Encoders to symmetric or asymmetric search. SGPT-BE produces semantically meaningful sentence embeddings through contrastive fine-tuning of only the bias tensors, combined with a novel pooling method. A 5.8-billion-parameter SGPT-BE outperforms the best available sentence embeddings by 6%, setting a new state of the art on BEIR. It also outperforms the concurrently proposed OpenAI Embeddings of the 175B Davinci endpoint, which fine-tunes 250,000 times more parameters. SGPT-CE uses log probabilities from GPT models without any fine-tuning. A 6.1-billion-parameter SGPT-CE sets an unsupervised state of the art on BEIR, beating the supervised state of the art on 7 datasets but losing significantly on the others. The paper shows how this can be alleviated by adapting the prompt. SGPT-BE and SGPT-CE performance scales with model size; however, the increased latency, storage, and compute costs must be considered.

GPT transformers are the largest language models available, yet semantic search is dominated by BERT transformers. We present SGPT-BE and SGPT-CE for applying GPT models as Bi-Encoders or Cross-Encoders to symmetric or asymmetric search. SGPT-BE produces semantically meaningful sentence embeddings by contrastive fine-tuning of only bias tensors and a novel pooling method. A 5.8 billion parameter SGPT-BE outperforms the best available sentence embeddings by 6%, setting a new state-of-the-art on BEIR. It outperforms the concurrently proposed OpenAI Embeddings of the 175B Davinci endpoint, which fine-tunes 250,000 times more parameters. SGPT-CE uses log probabilities from GPT models without any fine-tuning. A 6.1 billion parameter SGPT-CE sets an unsupervised state-of-the-art on BEIR. It beats the supervised state-of-the-art on 7 datasets, but significantly loses on other datasets. We show how this can be alleviated by adapting the prompt. SGPT-BE and SGPT-CE performance scales with model size. Yet, increased latency, storage and compute costs should be considered. Code, models and result files are freely available at https://github.com/Muennighoff/sgpt.
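The abstract only says that SGPT-BE uses "a novel pooling method". Our reading of the SGPT paper is that this is position-weighted mean pooling: in a causal GPT decoder, later tokens have attended to more of the input, so token i of S (1-based) gets weight i / (1 + 2 + ... + S). The minimal sketch below assumes that reading. It uses plain Python lists as stand-ins for the per-token hidden states a real GPT model would produce and then ranks documents by cosine similarity, as a bi-encoder would for search. The function names are hypothetical and are not the API of the linked repository.

```python
import math


def position_weighted_mean(hidden_states):
    """Pool a non-empty list of per-token vectors into one sentence vector.

    Token i (1-based) gets weight i / (1 + 2 + ... + S), so later tokens,
    which a causal decoder has let attend to more of the sentence, count more.
    """
    S = len(hidden_states)
    total = S * (S + 1) / 2  # sum of positions 1..S, so the weights sum to 1
    pooled = [0.0] * len(hidden_states[0])
    for i, vec in enumerate(hidden_states, start=1):
        w = i / total
        for j, x in enumerate(vec):
            pooled[j] += w * x
    return pooled


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def rank(query_vec, doc_vecs):
    """Return document indices sorted by cosine similarity to the query, best first."""
    scores = [cosine(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


query = position_weighted_mean([[1.0, 0.0], [0.0, 1.0]])
print(query, rank(query, [[0.0, 1.0], [1.0, 0.0]]))
```

For the two-token input above, the weights are 1/3 and 2/3, so the pooled vector leans toward the second token. In a bi-encoder setup, every document is pooled once offline and only the cheap similarity step runs at query time, which is what separates SGPT-BE from the per-pair scoring of a cross-encoder like SGPT-CE.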

3、[LG] Jury Learning: Integrating Dissenting Voices into Machine Learning Models

M L. Gordon, M S. Lam, J S Park, K Patel, J T. Hancock, T Hashimoto, M S. Bernstein

[Stanford University & Apple Inc]

Jury learning: integrating dissenting voices into machine learning models. Whose labels should a machine learning (ML) algorithm learn to emulate? For ML tasks ranging from online comment toxicity to misinformation detection to medical diagnosis, different groups in society may have irreconcilable disagreements about ground-truth labels. Such disagreements are common in user-facing tasks, so a choice has to be made. Supervised ML today resolves them implicitly by majority vote, which overrides minority groups' labels. This paper proposes jury learning, a supervised ML approach that resolves these disagreements explicitly through the metaphor of a jury: defining which people or groups, in what proportion, determine the classifier's prediction. For example, a jury learning model for online toxicity might centrally feature women and Black jurors, who are commonly targets of online harassment. To enable jury learning, the authors design a deep learning architecture that models every annotator in the dataset, samples from the annotator models to populate the jury, and then runs inference to classify. The architecture lets juries dynamically adapt their composition, explore counterfactuals, and visualize dissent.

Whose labels should a machine learning (ML) algorithm learn to emulate? For ML tasks ranging from online comment toxicity to misinformation detection to medical diagnosis, different groups in society may have irreconcilable disagreements about ground truth labels. Supervised ML today resolves these label disagreements implicitly using majority vote, which overrides minority groups' labels. We introduce jury learning, a supervised ML approach that resolves these disagreements explicitly through the metaphor of a jury: defining which people or groups, in what proportion, determine the classifier's prediction. For example, a jury learning model for online toxicity might centrally feature women and Black jurors, who are commonly targets of online harassment. To enable jury learning, we contribute a deep learning architecture that models every annotator in a dataset, samples from annotators' models to populate the jury, then runs inference to classify. Our architecture enables juries that dynamically adapt their composition, explore counterfactuals, and visualize dissent.
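The inference loop described above (model every annotator, sample a jury, run inference) can be sketched as follows. This is an illustration under stated assumptions, not the authors' architecture: `predict` is a hypothetical stand-in for the learned per-annotator model, a jury's verdict is taken as the mean of its jurors' predicted labels, and the final output is the median verdict over many randomly resampled juries with the same composition, which smooths out the luck of any single draw.

```python
import random
import statistics


def sample_jury(annotators, composition, rng):
    """Draw jurors for each group without replacement.

    annotators:  dict mapping group name -> list of annotator ids
    composition: dict mapping group name -> number of seats for that group
    """
    jury = []
    for group, seats in composition.items():
        jury.extend(rng.sample(annotators[group], seats))
    return jury


def jury_verdict(predict, item, annotators, composition, trials=100, seed=0):
    """Median, over resampled juries, of each jury's mean predicted label.

    predict(annotator_id, item) is a hypothetical per-annotator model
    returning that annotator's predicted label (e.g. a toxicity score).
    """
    rng = random.Random(seed)
    verdicts = []
    for _ in range(trials):
        jury = sample_jury(annotators, composition, rng)
        verdicts.append(statistics.mean(predict(a, item) for a in jury))
    return statistics.median(verdicts)


# Toy data: group A annotators always predict 1.0, group B always 0.0.
annotators = {"A": ["a1", "a2", "a3"], "B": ["b1", "b2", "b3"]}
predict = lambda annotator, item: 1.0 if annotator.startswith("a") else 0.0
print(jury_verdict(predict, "comment", annotators, {"A": 2, "B": 1}))
```

Changing only the `composition` dict is how this sketch expresses a counterfactual jury: the per-annotator models stay fixed, and the same item can be re-judged by a jury with a different mix of groups.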

4、[LG] Learned Turbulence Modelling with Differentiable Fluid Solvers

B List, L Chen, N Thuerey

[Technical University of Munich]

Learned turbulence modelling with differentiable fluid solvers. This paper trains turbulence models based on convolutional neural networks. These learned models improve under-resolved, low-resolution solutions to the incompressible Navier-Stokes equations at simulation time. The method involves developing a differentiable numerical solver that supports propagating optimization gradients through multiple solver steps. The importance of this property is shown by the superior stability and accuracy of models that used more unrolled steps during training. The approach is applied to three two-dimensional turbulent flow cases: homogeneous decaying turbulence, a temporally evolving mixing layer, and a spatially evolving mixing layer. Compared with no-model simulations, the method achieves significant improvements in long-term a-posteriori statistics, without these statistics being directly included in the learning targets. At inference time, the method also achieves substantial performance gains over similarly accurate, purely numerical methods.

In this paper, we train turbulence models based on convolutional neural networks. These learned turbulence models improve under-resolved low resolution solutions to the incompressible Navier-Stokes equations at simulation time. Our method involves the development of a differentiable numerical solver that supports the propagation of optimisation gradients through multiple solver steps. We showcase the significance of this property by demonstrating the superior stability and accuracy of those models that featured a higher number of unrolled steps during training. This approach is applied to three two-dimensional turbulence flow scenarios, a homogeneous decaying turbulence case, a temporally evolving mixing layer and a spatially evolving mixing layer. Our method achieves significant improvements of long-term a-posteriori statistics when compared to no-model simulations, without requiring these statistics to be directly included in the learning targets. At inference time, our proposed method also gains substantial performance improvements over similarly accurate, purely numerical methods.
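The key ingredient above, propagating a loss gradient through several unrolled solver steps into a learned correction, can be illustrated on a far simpler problem. The sketch below is a toy of our own, not the authors' CNN or Navier-Stokes solver: a coarse explicit-Euler solver for du/dt = -k*u gets a learned per-step correction `theta * u`, and the derivative du_n/dtheta is carried forward through every step by the chain rule (forward-mode differentiation), so the loss over all unrolled steps has an exact gradient. All names and parameter values are hypothetical.

```python
import math


def rollout(theta, u0, k, dt, steps):
    """Unroll a corrected coarse solver for du/dt = -k*u.

    Returns the states u_1..u_steps and their derivatives du_n/dtheta,
    which are carried forward through every step by the chain rule.
    """
    u, s = u0, 0.0  # current state and d(state)/d(theta)
    states, sens = [], []
    for _ in range(steps):
        g = 1.0 - k * dt + theta  # explicit Euler factor plus learned correction
        # d(g*u)/dtheta = u + g * du/dtheta; the right side uses the old u and s.
        u, s = g * u, u + g * s
        states.append(u)
        sens.append(s)
    return states, sens


def loss_and_grad(theta, u0, k, dt, steps):
    """Mean squared error against the exact solution over all unrolled steps."""
    states, sens = rollout(theta, u0, k, dt, steps)
    loss, grad = 0.0, 0.0
    for n, (u, s) in enumerate(zip(states, sens), start=1):
        err = u - u0 * math.exp(-k * dt * n)  # exact solution at t = n*dt
        loss += err * err / steps
        grad += 2.0 * err * s / steps
    return loss, grad


def train(steps, u0=1.0, k=1.0, dt=0.1, lr=0.05, iters=500):
    """Plain gradient descent on theta, starting from no correction."""
    theta = 0.0
    for _ in range(iters):
        _, grad = loss_and_grad(theta, u0, k, dt, steps)
        theta -= lr * grad
    return theta


theta = train(steps=10)
print(theta, math.exp(-0.1) - 0.9)  # learned vs. exact per-step correction
```

Because the coarse step has a single scalar error here, the learned `theta` should approach the exact correction exp(-k*dt) - (1 - k*dt), about 0.0048 for these settings. A real implementation would replace `theta` with a neural network and the scalar recurrence with a differentiable flow solver, and would more likely rely on reverse-mode automatic differentiation than on this hand-written forward-mode chain rule.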

5、[SD] Deep Performer: Score-to-Audio Music Performance Synthesis

H Dong, C Zhou, T Berg-Kirkpatrick, J McAuley

[Dolby Laboratories & University of California San Diego]

Deep Performer: score-to-audio music performance synthesis. Music performance synthesis aims to synthesize a musical score into a natural performance. Borrowing recent advances in text-to-speech synthesis, this paper presents Deep Performer, a novel system for score-to-audio music performance synthesis. Unlike speech, music often contains polyphony and long notes. The paper therefore proposes two new techniques for handling polyphonic inputs and providing fine-grained conditioning in a Transformer encoder-decoder model. To train the system, the authors present a new violin dataset of paired recordings and scores, along with estimated alignments between them. The proposed model can synthesize music with clear polyphony and harmonic structures. In a listening test, it achieves quality competitive with the baseline model (a conditional generative audio model) in pitch accuracy, timbre, and noise level. Moreover, it significantly outperforms the baseline in overall quality on an existing piano dataset.

Music performance synthesis aims to synthesize a musical score into a natural performance. In this paper, we borrow recent advances in text-to-speech synthesis and present the Deep Performer, a novel system for score-to-audio music performance synthesis. Unlike speech, music often contains polyphony and long notes. Hence, we propose two new techniques for handling polyphonic inputs and providing a fine-grained conditioning in a transformer encoder-decoder model. To train our proposed system, we present a new violin dataset consisting of paired recordings and scores along with estimated alignments between them. We show that our proposed model can synthesize music with clear polyphony and harmonic structures. In a listening test, we achieve competitive quality against the baseline model, a conditional generative audio model, in terms of pitch accuracy, timbre and noise level. Moreover, our proposed model significantly outperforms the baseline on an existing piano dataset in overall quality.
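The abstract does not spell out its two techniques for polyphonic input and fine-grained conditioning. As a rough illustration only, the sketch below shows one way note-level encoder outputs could be turned into frame-level conditioning for a decoder, assuming each note comes with estimated onset and offset frames (the abstract says the violin dataset provides estimated score-to-recording alignments). Each note's embedding is copied to every frame it spans, and the embeddings of notes that sound together are summed. A second helper records, for each frame, how far that frame lies into each active note, the kind of within-note position signal that matters for long notes. Both helper names are hypothetical.

```python
def mix_polyphonic(notes, num_frames, dim):
    """Expand note-level embeddings to frame-level features.

    notes: list of (onset_frame, offset_frame, embedding), offset exclusive.
    Frames where several notes sound get the sum of their embeddings;
    frames with no active note stay all-zero.
    """
    frames = [[0.0] * dim for _ in range(num_frames)]
    for onset, offset, emb in notes:
        for t in range(max(onset, 0), min(offset, num_frames)):
            for j in range(dim):
                frames[t][j] += emb[j]
    return frames


def note_positions(notes, num_frames):
    """For each frame, map active note index -> relative position in [0, 1)."""
    positions = [{} for _ in range(num_frames)]
    for idx, (onset, offset, _) in enumerate(notes):
        length = offset - onset  # never zero inside the loop below
        for t in range(max(onset, 0), min(offset, num_frames)):
            positions[t][idx] = (t - onset) / length
    return positions


# Two overlapping notes: frames 2-3 are polyphonic.
notes = [(0, 4, [1.0, 0.0]), (2, 6, [0.0, 1.0])]
print(mix_polyphonic(notes, 6, 2)[2], note_positions(notes, 6)[2])
```

Summing keeps the frame-level input a fixed size no matter how many notes overlap, at the cost of mixing their identities; the per-note positions are kept separately so that a frame near the end of a long note can still be told apart from one near its onset.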

Several other papers worth noting:

[LG] Synthetic Control As Online Linear Regression

J Chen

[Harvard University]

[LG] Provable Regret Bounds for Deep Online Learning and Control

X Chen, E Minasyan, J D. Lee, E Hazan

[Princeton University]

[LG] What Does it Mean for a Language Model to Preserve Privacy?

H Brown, K Lee, F Mireshghallah, R Shokri, F Tramèr

[National University of Singapore & Cornell University & University of California San Diego & Google]

[LG] Towards Battery-Free Machine Learning and Inference in Underwater Environments

Y Zhao, S S Afzal, W Akbar, O Rodriguez, F Mo, D Boyle, F Adib, H Haddadi

[Imperial College London & MIT]

边栏推荐

- JS written test question: array

- Kubeneters installation problems Collection

- Computer shortcuts During sorting, fill in as needed

- ARP interaction process

- Redis introduction and Practice (with source code)

- 2022 latest MySQL interview questions

- 模拟设计磁盘文件的链接存储结构

- JS interview question: regular expression, to be updated

- Mysql5.7 setup password and remote connection

- Word thesis typesetting tutorial

猜你喜欢

Phantom star VR product details 34: Happy pitching

Operator Tour (I)

Browser evaluation: a free, simple and magical super browser - xiangtian browser

Application GDB debugging

Leetcode hot topic Hot 100, to be updated

What is a good product for children's serious illness insurance? Please recommend it to a 3-year-old child

DP question brushing record

Usage of SED (replacement, deletion of text content, etc.)

Fluent encapsulates an immersive navigation bar with customizable styles NavigationBar

![The whole process of Netease cloud music API installation and deployment [details of local running projects and remote deployment]](/img/3b/678fdf93cf6cc39caaec8e753af169.jpg)

The whole process of Netease cloud music API installation and deployment [details of local running projects and remote deployment]

随机推荐

Shell uses arrays

‘maxflow‘ has no attribute ‘Graph‘

[font multi line display ellipsis] and [dialog box] implementation ----- case explanation, executable code

100% troubleshooting and analysis of Alibaba cloud hard disk

2022 Hunan latest fire facility operator simulation test question bank and answers

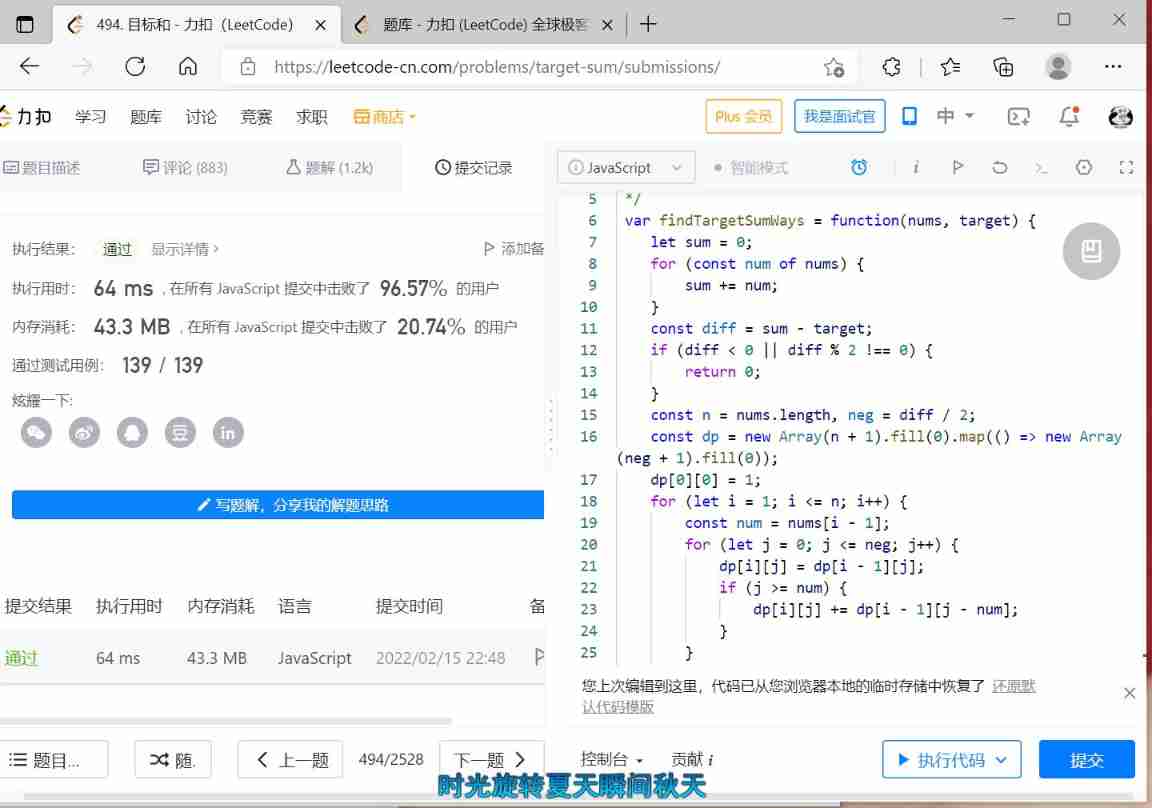

DP question brushing record

JS written test question: array

MNIST model training (with code)

There is a PPP protocol between routers. How can there be an ARP broadcast protocol

Selection (041) - what is the output of the following code?

Summary of the most basic methods of numpy

My debug Path 1.0

How to write a rotation chart

Operator Tour (I)

2020-11-12 meter skipping

Word thesis typesetting tutorial

Nmap scan port tool

Design of LVDS interface based on FPGA

Niuke - real exercise-01

Algorithm question: interview question 32 - I. print binary tree from top to bottom (title + idea + code + comments) sequence traversal time and space 1ms to beat 97.84% of users once AC