
Spark Business Environment Practice: Analysis of the Underlying Architecture of the Spark Storage System

2022-06-29 20:19:00 【Full stack programmer webmaster】

This blog series draws cases from real business environments, summarizes and shares them, and provides Spark source code interpretation and guidance for business practice. Please keep following this blog. Copyright notice: this series of Spark source code interpretation and business practice articles belongs to the author (Qin Kaixin). Reproduction is prohibited; you are welcome to learn from it.

Spark Advanced Series: Business Environment Practice and Optimization

- Spark Business Environment Practice: Spark's built-in RPC communication mechanism and the RpcEnv infrastructure

- Spark Business Environment Practice: analysis of the Spark event listener bus process

- Spark Business Environment Practice: analysis of the underlying architecture of the Spark storage system

- Spark Business Environment Practice: analysis of how the many MessageLoop threads at the bottom of Spark execute

- Spark Business Environment Practice: Spark's two-level scheduling system, the Stage partitioning algorithm, and optimal task scheduling in detail

- Spark Business Environment Practice: Spark task delay scheduling and the scheduling Pool architecture

- Spark Business Environment Practice: detailed analysis of AppendOnlyMap, the task-granularity cache, aggregation, and sorting structure

- Spark Business Environment Practice: the design ideas behind ExternalSorter in the Spark Shuffle process

- Spark Business Environment Practice: the StreamingContext startup process and DStream template source code analysis

- Spark Business Environment Practice: analysis of the data stream receiving process in ReceiverTracker and BlockGenerator

1. Relationships among the Spark storage system components

BlockInfoManager mainly provides read/write lock control and sits directly below BlockManager. A Spark read or write usually goes to BlockManager first, which consults BlockInfoManager to check for lock contention and then calls DiskStore or MemoryStore. DiskStore in turn calls DiskBlockManager to determine the mapping between data and its location, while MemoryStore calls MemoryManager to request memory and to adjust the soft boundary between the memory pools.
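To make the call order concrete, here is a minimal sketch under stated assumptions: the names `BlockLocks`, `SimpleBlockManager`, and `getLocalBytes` are hypothetical, and plain maps stand in for MemoryStore and DiskStore. It only shows the layering (take a read lock, try memory first, fall back to disk, always release the lock); it is not Spark's actual BlockManager code.

```scala
import scala.collection.mutable

// Hypothetical stand-in for the lock manager (the role BlockInfoManager plays).
trait BlockLocks {
  def lockForReading(blockId: String): Boolean
  def unlock(blockId: String): Unit
}

// Hypothetical, simplified BlockManager: the two maps stand in for
// MemoryStore and DiskStore; names are illustrative, not Spark's API.
class SimpleBlockManager(
    locks: BlockLocks,
    memoryStore: mutable.Map[String, Array[Byte]],
    diskStore: mutable.Map[String, Array[Byte]]) {

  // Returns the block's bytes together with the store that served them.
  def getLocalBytes(blockId: String): Option[(String, Array[Byte])] = {
    if (!locks.lockForReading(blockId)) None // unknown block or lock not granted
    else try {
      memoryStore.get(blockId).map(b => ("memory", b)) // memory first
        .orElse(diskStore.get(blockId).map(b => ("disk", b))) // then disk
    } finally locks.unlock(blockId) // always release the read lock
  }
}
```

Checking memory before disk reflects the cost difference between the two stores; the `finally` guarantees that a lookup never leaks a lock.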

1.1 Relationships among Driver, Executor, SparkEnv, and BlockManager:

The Driver and each Executor have their own SparkEnv execution environment, and every SparkEnv contains a BlockManager that provides storage services. As a high-level abstraction, BlockManagers communicate with one another through RpcEnv, ShuffleClient, and BlockTransferService.

1.2 Shared (read) and exclusive (write) lock control between BlockInfoManager and BlockInfo:

BlockInfo carries the block's read/write lock state. In particular, its writerTask field records which task, if any, holds the write lock, and this is used to decide whether a write may proceed:

```scala
// In object BlockInfo: sentinel values for the writerTask field
val NO_WRITER: Long = -1

val NON_TASK_WRITER: Long = -1024

// In class BlockInfo
/**
 * The task attempt id of the task which currently holds the write lock for this block, or
 * [[BlockInfo.NON_TASK_WRITER]] if the write lock is held by non-task code, or
 * [[BlockInfo.NO_WRITER]] if this block is not locked for writing.
 */
def writerTask: Long = _writerTask

def writerTask_=(t: Long): Unit = {
  _writerTask = t
  checkInvariants()
}
```

BlockInfoManager holds the mapping from BlockId to BlockInfo, as well as the lock mappings from each task attempt ID to the BlockIds it has locked:

```scala
// In class BlockInfoManager: metadata (including lock state) for each block
private[this] val infos = new mutable.HashMap[BlockId, BlockInfo]

/**
 * Tracks the set of blocks that each task has locked for writing.
 */
private[this] val writeLocksByTask =
  new mutable.HashMap[TaskAttemptId, mutable.Set[BlockId]]
    with mutable.MultiMap[TaskAttemptId, BlockId]

/**
 * Tracks the set of blocks that each task has locked for reading, along with the number of times
 * that a block has been locked (since our read locks are re-entrant).
 */
// ConcurrentHashMultiset is Guava's counting multiset
private[this] val readLocksByTask =
  new mutable.HashMap[TaskAttemptId, ConcurrentHashMultiset[BlockId]]
```
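The lock semantics behind these fields can be illustrated with a small sketch. It is an assumption-laden simplification: `SketchBlockInfo` and `SketchLockManager` are hypothetical names, the locks are non-blocking "try" locks, and only the `NO_WRITER` sentinel is taken from the excerpt above. It shows re-entrant shared read locks counted per task and an exclusive write lock that requires no readers and no writer.

```scala
import scala.collection.mutable

// Hypothetical sketch; only the NO_WRITER idea comes from Spark's BlockInfo.
object SketchBlockInfo { val NO_WRITER: Long = -1L }

final class SketchBlockInfo {
  var readerCount: Int = 0
  var writerTask: Long = SketchBlockInfo.NO_WRITER
}

class SketchLockManager {
  private val infos = mutable.HashMap.empty[String, SketchBlockInfo]
  // Per-task read-lock counts (read locks are re-entrant) and write-lock sets.
  private val readLocksByTask = mutable.HashMap.empty[Long, mutable.Map[String, Int]]
  private val writeLocksByTask = mutable.HashMap.empty[Long, mutable.Set[String]]

  def register(blockId: String): Unit = synchronized {
    infos.getOrElseUpdate(blockId, new SketchBlockInfo)
    ()
  }

  def readerCount(blockId: String): Int = synchronized {
    infos.get(blockId).map(_.readerCount).getOrElse(0)
  }

  // Shared lock: granted whenever no task holds the write lock.
  def tryLockForReading(task: Long, blockId: String): Boolean = synchronized {
    infos.get(blockId) match {
      case Some(info) if info.writerTask == SketchBlockInfo.NO_WRITER =>
        info.readerCount += 1
        val counts = readLocksByTask.getOrElseUpdate(task, mutable.Map.empty[String, Int])
        counts(blockId) = counts.getOrElse(blockId, 0) + 1
        true
      case _ => false
    }
  }

  // Exclusive lock: granted only when there are no readers and no writer.
  def tryLockForWriting(task: Long, blockId: String): Boolean = synchronized {
    infos.get(blockId) match {
      case Some(info) if info.writerTask == SketchBlockInfo.NO_WRITER && info.readerCount == 0 =>
        info.writerTask = task
        writeLocksByTask.getOrElseUpdate(task, mutable.Set.empty[String]) += blockId
        true
      case _ => false
    }
  }

  // Releases the write lock if `task` holds it, otherwise one of its read locks.
  def unlock(task: Long, blockId: String): Unit = synchronized {
    infos.get(blockId).foreach { info =>
      if (info.writerTask == task) {
        info.writerTask = SketchBlockInfo.NO_WRITER
        writeLocksByTask.get(task).foreach(_ -= blockId)
      } else {
        readLocksByTask.get(task).foreach { counts =>
          val c = counts.getOrElse(blockId, 0)
          if (c > 0) {
            info.readerCount -= 1
            if (c == 1) counts.remove(blockId) else counts(blockId) = c - 1
          }
        }
      }
    }
  }
}
```

Keeping per-task bookkeeping next to the per-block state is what lets a lock manager release everything a task holds when that task finishes; in this sketch that would be a loop over `readLocksByTask(task)` and `writeLocksByTask(task)`.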

1.3 Relationships between DiskBlockManager and DiskStore:

Internally, DiskStore calls DiskBlockManager to determine where a Block is read from and written to.
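The division of labor can be sketched as follows. This is a simplified illustration, not Spark's code: `SimpleDiskBlockManager` and `SimpleDiskStore` are hypothetical names, and the 64 sub-directories per local directory are an assumption chosen to resemble Spark's default. The idea it mirrors is that the block's file name is hashed to pick a local directory and then a sub-directory, so the same block always maps to the same file, while the store itself only moves bytes.

```scala
import java.io.File
import java.nio.file.Files

// Hypothetical DiskBlockManager: decides *where* a block lives on disk.
class SimpleDiskBlockManager(val localDirs: Array[File], subDirsPerLocalDir: Int = 64) {
  // Non-negative hash of the file name (guards against Int.MinValue).
  private def nonNegativeHash(s: String): Int = {
    val h = s.hashCode
    if (h != Int.MinValue) math.abs(h) else 0
  }

  // Maps a block name to a stable file, creating the sub-directory if needed.
  def getFile(name: String): File = {
    val hash = nonNegativeHash(name)
    val dirId = hash % localDirs.length
    val subDirId = (hash / localDirs.length) % subDirsPerLocalDir
    val subDir = new File(localDirs(dirId), "%02x".format(subDirId))
    subDir.mkdirs()
    new File(subDir, name)
  }
}

// Hypothetical DiskStore: reads and writes bytes, delegating placement.
class SimpleDiskStore(diskManager: SimpleDiskBlockManager) {
  def putBytes(blockName: String, bytes: Array[Byte]): Unit =
    Files.write(diskManager.getFile(blockName).toPath, bytes)

  def getBytes(blockName: String): Array[Byte] =
    Files.readAllBytes(diskManager.getFile(blockName).toPath)

  def contains(blockName: String): Boolean = diskManager.getFile(blockName).exists()
}
```

Spreading files over several directories and sub-directories keeps any single directory from holding too many files and lets multiple local disks share the load.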

1.4 Relationships among MemoryManager, MemoryStore, and MemoryPool:

It is worth stressing that the first-generation big data framework, Hadoop, uses memory only as a computing resource, whereas Spark not only uses memory for computation but also brings part of it into the storage system:

- Memory pool model: memory is logically divided into on-heap and off-heap memory, and each of these (on-heap or off-heap) is further divided into a StorageMemoryPool and an ExecutionMemoryPool.

- MemoryManager is abstract: it defines the interface of a memory manager so that it is easy to extend, for example with the original StaticMemoryManager and the newer UnifiedMemoryManager.

- MemoryStore relies on UnifiedMemoryManager to request memory, to shift the soft boundary between the pools, or to release memory.

- MemoryStore is also responsible for storing the actual objects. For example, its member variable entries maps each BlockId to a MemoryEntry (the in-memory form of a Block).

- MemoryStore also "reserves a seat" in memory ahead of time while it unrolls a block; these reservations are tracked in the internal variables offHeapUnrollMemoryMap and onHeapUnrollMemoryMap.
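The "soft boundary" between the storage and execution pools can be modeled in a few lines. This is a simplified sketch of the idea behind UnifiedMemoryManager, not its implementation: `SoftBoundaryMemory` and its methods are hypothetical, there is a single on-heap pool, per-task fair sharing is ignored, and evicting cached blocks is reduced to lowering the storage usage counter. The rules it encodes are that storage may borrow free execution memory but never evicts execution, while execution may take back free storage memory and evict cached blocks, but only down to the original storage region size.

```scala
// Hypothetical model of the unified memory soft boundary (one on-heap pool).
class SoftBoundaryMemory(maxMemory: Long, storageRegionSize: Long) {
  require(storageRegionSize >= 0 && storageRegionSize <= maxMemory)

  // The pool sizes always add up to maxMemory; only the boundary moves.
  private var storagePoolSize: Long = storageRegionSize
  private var executionPoolSize: Long = maxMemory - storageRegionSize
  private var storageUsed: Long = 0L
  private var executionUsed: Long = 0L
  var evictedBytes: Long = 0L

  def storagePool: Long = storagePoolSize
  def storageFree: Long = storagePoolSize - storageUsed
  def executionFree: Long = executionPoolSize - executionUsed

  // Storage may borrow free execution memory, but cannot evict execution memory.
  def acquireStorage(numBytes: Long): Boolean = {
    if (numBytes > storageFree) {
      val borrowed = math.min(executionFree, numBytes - storageFree)
      executionPoolSize -= borrowed
      storagePoolSize += borrowed
    }
    if (numBytes <= storageFree) { storageUsed += numBytes; true } else false
  }

  // Execution reclaims free storage memory first, then evicts cached blocks,
  // but never shrinks storage below storageRegionSize. Returns bytes granted.
  def acquireExecution(numBytes: Long): Long = {
    val extraNeeded = numBytes - executionFree
    if (extraNeeded > 0) {
      val reclaimable = math.max(storageFree, storagePoolSize - storageRegionSize)
      val toReclaim = math.min(extraNeeded, reclaimable)
      val fromFree = math.min(toReclaim, storageFree)
      val fromEviction = toReclaim - fromFree
      storageUsed -= fromEviction // stands in for evicting cached blocks
      evictedBytes += fromEviction
      storagePoolSize -= toReclaim
      executionPoolSize += toReclaim
    }
    val granted = math.min(numBytes, executionFree)
    executionUsed += granted
    granted
  }
}
```

For example, with 100 bytes in total and a 50-byte storage region, caching 70 bytes borrows 20 bytes from execution; a later 50-byte execution request evicts those 20 borrowed bytes, after which neither side can grow further.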

1.5 Relationships between BlockManagerMaster and BlockManager:

- BlockManagerMaster provides unified management of the BlockManagers that live on the Driver and the Executors. It essentially acts as a proxy: it holds an endpoint reference (BlockManagerMasterEndpointRef) and uses it to communicate with BlockManagerMasterEndpoint.
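The proxy relationship can be sketched in-process. Everything here is hypothetical: the `EndpointRef` trait, the `RegisterBlock`/`GetLocations` messages, and the `InProcessMasterEndpoint` class are illustrations, not Spark's RpcEndpointRef API or its actual message set, and a real deployment sends these messages over RpcEnv instead of calling a local object. The point is that the master class keeps no state of its own and only turns method calls into messages for the endpoint, which owns the global block location table.

```scala
import scala.collection.mutable

// Hypothetical messages sent from the proxy to the endpoint.
sealed trait MasterMessage
final case class RegisterBlock(executorId: String, blockId: String) extends MasterMessage
final case class GetLocations(blockId: String) extends MasterMessage

// Hypothetical stand-in for an RPC endpoint reference.
trait EndpointRef { def askSync(msg: MasterMessage): Any }

// Plays the role of the driver-side endpoint that owns the block -> location table.
class InProcessMasterEndpoint extends EndpointRef {
  private val locations = mutable.HashMap.empty[String, mutable.Set[String]]

  def askSync(msg: MasterMessage): Any = synchronized {
    msg match {
      case RegisterBlock(exec, block) =>
        locations.getOrElseUpdate(block, mutable.Set.empty[String]) += exec
        true
      case GetLocations(block) =>
        locations.get(block).map(_.toSeq.sorted).getOrElse(Seq.empty[String])
    }
  }
}

// The proxy: no state of its own, it only forwards calls to the endpoint reference.
class SimpleBlockManagerMaster(driverEndpoint: EndpointRef) {
  def registerBlock(executorId: String, blockId: String): Boolean =
    driverEndpoint.askSync(RegisterBlock(executorId, blockId)).asInstanceOf[Boolean]

  def getLocations(blockId: String): Seq[String] =
    driverEndpoint.askSync(GetLocations(blockId)).asInstanceOf[Seq[String]]
}
```

Because every BlockManager talks to the same endpoint through such a proxy, the location table has a single owner, and swapping the in-process reference for a remote one does not change the proxy's interface.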

2. The BlockTransferService transport service of the Spark storage system

To be continued

3. Summary

The storage system is the cornerstone of Spark. I try to dissect every small piece of knowledge and, unlike most blogs, to explain it in the plainest possible language; after all, technology is just a thin sheet of window paper: once you poke through it, everything is clear.

Qin Kaixin, the morning of 2018-10-31

Publisher: Full stack programmer webmaster. Please credit the source when reprinting: https://javaforall.cn/101341.html Original link: https://javaforall.cn

边栏推荐

- XSS vulnerability

- Go: how to write a correct UDP server

- Three. JS development: drawing of thick lines

- Ovirt database modify delete node

- Dynamics CRM: 本地部署的服务器中, Sandbox, Unzip, VSS, Asynchronous还有Monitor服务的作用

- [USB flash disk test] in order to transfer the data at the bottom of the pressure box, I bought a 2T USB flash disk, and the test result is only 47g~

- Nutch2.1 distributed fetching

- 从众伤害的是自己

- Cmake开发-多目录工程

- Sword finger offer 41 Median in data stream

猜你喜欢

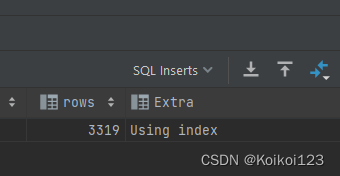

mysql中explain语句查询sql是否走索引,extra中的几种类型整理汇总

![[notes] take notes again -- learn by doing Verilog HDL – 014](/img/92/ba794253f1588ff9ad87d2571a453e.png)

[notes] take notes again -- learn by doing Verilog HDL – 014

![[compilation principle] syntax analysis](/img/9e/6b1f15e3da9997b08d11c6f091ed0e.png)

[compilation principle] syntax analysis

【Try to Hack】vulnhub narak

Bigder: Automation Test Engineer

Three. JS development: drawing of thick lines

Flume configuration 3 - interceptor filtering

Comparable comparator writing & ClassCastException class conversion exception

Comparable比较器写法&ClassCastExcption类转换异常

Flume配置2——监控之Ganglia

随机推荐

2021 CCPC 哈尔滨 E. Power and Modulo (思维题)

Command execution (RCE) vulnerability

Dynamics CRM: 本地部署的服务器中, Sandbox, Unzip, VSS, Asynchronous还有Monitor服务的作用

0/1 score planning topic

Real time tracking of bug handling progress of the project through metersphere and dataease

. NETCORE unified authentication authorization learning - run (1)

wangeditor富文本编辑器使用(详细)

Talk about the delta configuration of Eureka

The explain statement in MySQL queries whether SQL is indexed, and several types in extra collate and summarize

proxmox集群节点崩溃处理

How to use the configuration in thinkphp5

freemarker模板框架生成图片

Go: how to write a correct UDP server

Linux Installation mysql5

Introduction to the latest version 24.1.0.360 update of CorelDRAW

【编译原理】语义分析

What is a database? Database detailed notes! Take you into the database ~ you want to know everything here!

Hangfire详解

How to use filters in jfinal to monitor Druid for SQL execution?

网站压力测试工具——Webbench