当前位置:网站首页>Paper reading (54):deepfool: a simple and accurate method to four deep neural networks

Paper reading (54):deepfool: a simple and accurate method to four deep neural networks

2022-06-10 05:36:00 【Inge】

List of articles

1 introduce

1.1 subject

1.2 motivation

There is no doubt about the achievements of deep neural network in image classification . However , These architectures have been shown to be less robust to small perturbations in images , At present, there is also a lack of effective methods to accurately calculate the robustness of depth classifiers to large-scale data sets . This paper quantifies these robustness reliably .

1.3 Code

Torch:http://github.com/lts4/deepfool

1.4 Bib

@inproceedings{Moosavi:2016:25742582,

author = {Seyed-Mohsen Moosavi-Dezfooli and Alhussein Fawzi and Pascal Frossard},

title = {Deep{F}ool: A simple and accurate method to fool deep neural networks},

booktitle = {

{IEEE} Conference on Computer Vision and Pattern Recognition},

pages = {2574--2582},

year = {2016}

}

2 DeepFool

For a given classifier , Define a minimum Antagonistic disturbance r r r, It is used to change the evaluation label of the sample k ^ ( x ) \hat{k}(x) k^(x):

Δ ( x ; k ^ ) : = min r ∥ r ∥ 2 s . t . k ^ ( x + r ) ≠ k ^ ( x ) , (1) \tag{1} \Delta(x;\hat{k}):=\min_r\|r\|_2\qquad s.t. \qquad \hat{k}(x+r)\neq\hat{k}(x), Δ(x;k^):=rmin∥r∥2s.t.k^(x+r)=k^(x),(1) among x x x It's the input image . This formula is also called k ^ \hat{k} k^ At point x x x Robustness of , So the classifier k ^ \hat{k} k^ Of Robustness, Defined as :

ρ adv ( k ^ ) = E x Δ ( x ; k ^ ) ∥ x ∥ 2 , (2) \tag{2} \rho_\text{adv}(\hat{k})=\mathbb{E}_x\frac{\Delta(x;\hat{k})}{\|x\|_2}, ρadv(k^)=Ex∥x∥2Δ(x;k^),(2) among E x \mathbb{E}_x Ex Is the expectation of data set distribution .

3 DeepFool And two categories

Under the second classification problem , Yes k ^ ( x ) = sign ( f ( x ) ) \hat{k}(x)=\text{sign}(f(x)) k^(x)=sign(f(x)), among f : R n → R f:\mathbb{R}^n\to \mathbf{R} f:Rn→R Is an image classification function . Make F = Δ { x : f ( x ) = 0 } \mathcal{F}\overset{\Delta}{=}\{x:f(x)=0\} F=Δ{ x:f(x)=0} Express f f f stay 0 Situated level set. First, the linear classifier is analyzed f ( x ) = w T x + b f(x)=w^Tx+b f(x)=wTx+b The situation of , Then we derive a general algorithm that can be applied to any differentiable two classifiers .

It is easy to see the linearity f f f At point x 0 x_0 x0 Robustness at , Δ ( x ; f ) \Delta(x;f) Δ(x;f) Equivalent to x 0 x_0 x0 To the separating hyperplane F = { x : w T x + b = 0 } \mathcal{F}=\{x:w^Tx+b=0\} F={ x:wTx+b=0} Distance of ( Such as chart 2), The minimum disturbance that changes the decision of the classifier corresponds to x 0 x_0 x0 To F \mathcal{F} F The orthogonal projection of . A closed form formula to describe the process is as follows :

r ∗ ( x 0 ) : = arg min ∥ r ∥ 2 s . t . sign ( f ( x 0 + r ) ) ≠ sign ( f ( x 0 ) ) = − f ( x 0 ) ∥ w ∥ 2 2 w . (3) \tag{3} r_*(x_0):=\argmin\|r\|_2\qquad s.t. \qquad\text{sign}(f(x_0+r))\neq\text{sign}(f(x_0))=-\frac{f(x_0)}{\|w\|_2^2}w. r∗(x0):=argmin∥r∥2s.t.sign(f(x0+r))=sign(f(x0))=−∥w∥22f(x0)w.(3)

hypothesis f f f A general two class differentiable classifier , We use one Iteration strategy To assess robustness Δ ( x 0 ; f ) \Delta(x_0;f) Δ(x0;f). In each iteration , f f f Around the current point x i x_i xi Linearization , The minimum disturbance of the linear classifier is calculated as

arg min r i ∥ r i ∥ s . t . f ( x i ) + ∇ f ( x i ) T r i = 0. (4) \tag{4} \argmin_{r_i}\|r_i\|\qquad s.t.\qquad f(x_i)+\nabla f(x_i)^Tr_i=0. riargmin∥ri∥s.t.f(xi)+∇f(xi)Tri=0.(4) Disturbance r i r_i ri Pass the formula in each iteration 3 Calculation , And in the next iteration x i + 1 x_{i+1} xi+1 When the update . The algorithm will stop when the flag of the classifier changes . Algorithm 1 Sum up DeepFool Process for binary classification problems . chart 3 Is a visual result .

actually , The above algorithm can often converge to the zero level set F \mathcal{F} F The last point . To reach the other side of the classification boundary , The final disturbance r ^ \hat{r} r^ Will multiply by a constant 1 + η 1+\eta 1+η, among η ≪ 1 \eta\ll1 η≪1. Set to... In the experiment 0.02 0.02 0.02.

4 DeepFool And multiclassification

One to many is the most commonly used multi classification strategy , So we extend... Based on this strategy DeepFool To multiple categories . Under this setting , The classifier will have c c c Outputs , So the classifier is defined as f : R d → R c f:\mathbb{R}^d\to \mathbb{R}^c f:Rd→Rc And :

k ^ ( x ) = arg max k f k ( x ) , (5) \tag{5} \hat{k}(x)=\argmax_kf_k(x), k^(x)=kargmaxfk(x),(5) among f k ( x ) f_k(x) fk(x) yes f ( x ) f(x) f(x) In the c c c Class . Similar to the second classification , First, the linear case is analyzed and extended to other classifiers .

4.1 Linear multiple classifiers

Make f ( x ) = W T x + b f(x)=W^Tx+b f(x)=WTx+b Represents a linear classifier , Under the one to many strategy , The minimum disturbance of fooling the classifier is rewritten as :

arg min r ∥ r ∥ 2 s . t . ∃ k : w k T ( x 0 + r ) + b k ≥ w k ^ ( x 0 ) T ( x 0 + r ) + b k ^ ( x 0 ) , (6) \tag{6} \argmin_r\|r\|_2\qquad s.t.\qquad\exists k:w_k^T(x_0+r)+b_k\geq w_{\hat{k}(x_0)}^T(x_0+r)+b_{\hat{k}(x_0)}, rargmin∥r∥2s.t.∃k:wkT(x0+r)+bk≥wk^(x0)T(x0+r)+bk^(x0),(6) among w k w_k wk yes W W W Of the k k k Column . Geometrically , The above problem corresponds to the calculation x 0 x_0 x0 And convex polyhedron complement P P P Distance between :

P = ⋂ k = 1 c { x : f k ^ ( x o ) ( x ) ≥ f k ( x ) } , (7) \tag{7} P=\bigcap_{k=1}^c\{x:f_{\hat{k}(x_o)}(x)\geq f_k(x)\}, P=k=1⋂c{ x:fk^(xo)(x)≥fk(x)},(7) among x 0 x_0 x0 It's located in P P P In the point . We define this distance by d i s t ( x 0 , P c ) \mathbf{dist}(x_0,P^c) dist(x0,Pc). Polyhedron P P P Defined f f f Output label k ^ ( x 0 ) \hat{k}(x_0) k^(x0) The spatial area of , Such as chart 4 Shown .

The formula 6 The solution of can be calculated in closed form as follows . Make l ^ ( x 0 ) \hat{l}(x_0) l^(x0) It means to leave P P P The nearest hyperplane to the boundary of , for example chart 4 Medium l ^ ( x 0 ) = 3 \hat{l}(x_0)=3 l^(x0)=3. Formally , l ^ ( x 0 ) \hat{l}(x_0) l^(x0) It can be calculated as :

l ^ ( x 0 ) = arg min k ≠ k ^ ( x 0 ) ∣ f k ( x 0 ) − f k ^ ( x 0 ) ( x 0 ) ∣ ∥ w k − w k ^ ( x 0 ) ∥ 2 . (8) \tag{8} \hat{l}\left({x}_{0}\right)=\underset{k \neq \hat{k}\left({x}_{0}\right)}{\arg \min } \frac{\left|f_{k}\left({x}_{0}\right)-f_{\hat{k}\left({x}_{0}\right)}\left({x}_{0}\right)\right|}{\left\|{w}_{k}-{w}_{\hat{k}\left({x}_{0}\right)}\right\|_{2}}. l^(x0)=k=k^(x0)argmin∥∥∥wk−wk^(x0)∥∥∥2∣∣∣fk(x0)−fk^(x0)(x0)∣∣∣.(8) Minimum disturbance r ∗ ( x 0 ) r_*(x_0) r∗(x0) Yes, it will x 0 x_0 x0 Projected to by l ^ ( x 0 ) \hat{l}(x_0) l^(x0) Vectors on the hyperplane of the index :

r ∗ ( x 0 ) = ∣ f l ^ ( x 0 ) ( x 0 ) − f k ^ ( x 0 ) ( x 0 ) ∣ ∥ w l ^ ( x 0 ) − w k ^ ( x 0 ) ∥ 2 2 ( w l ^ ( x 0 ) − w k ^ ( x 0 ) ) . (9) \tag{9} {r}_{*}\left({x}_{0}\right)=\frac{\left|f_{\hat{l}\left({x}_{0}\right)}\left({x}_{0}\right)-f_{\hat{k}\left({x}_{0}\right)}\left({x}_{0}\right)\right|}{\left\|{w}_{\hat{l}\left({x}_{0}\right)}-{w}_{\hat{k}\left({x}_{0}\right)}\right\|_{2}^{2}}\left({w}_{\hat{l}\left({x}_{0}\right)}-{w}_{\hat{k}\left({x}_{0}\right)}\right) . r∗(x0)=∥∥∥wl^(x0)−wk^(x0)∥∥∥22∣∣∣fl^(x0)(x0)−fk^(x0)(x0)∣∣∣(wl^(x0)−wk^(x0)).(9) let me put it another way , We can find x o x_o xo stay P P P The nearest projection on the plane of .

4.2 Generalized classifier

For nonlinear classifiers , The formula 7 Describes the output label of the classifier k ^ ( x 0 ) \hat{k}(x_0) k^(x0) A collection of spatial regions P P P No longer a polyhedron . It is similar to the iterative solution under two categories , aggregate P P P Pass the first i i i Round iterated polyhedron P ~ i \tilde{P}_i P~i The approximate :

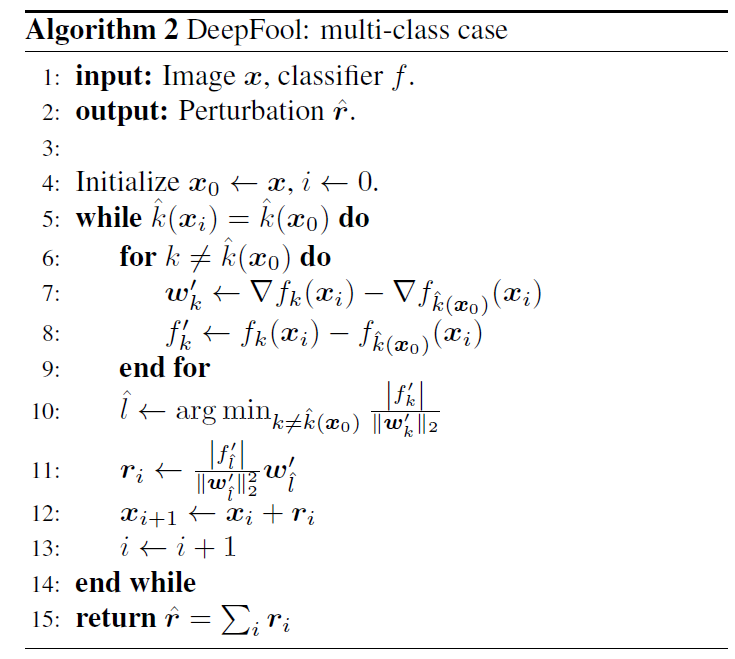

P ~ i = ⋂ k = 1 c { x : f k ( x i ) − f k ^ ( x 0 ) ( x i ) + ∇ f k ( x i ) T x − ∇ f k ^ ( x 0 ) ( x i ) T x ≤ 0 } . (10) \tag{10} \tilde{P}_i=\bigcap_{k=1}^c\left\{x:f_k(x_i)-f_{\hat{k}(x_0)}(x_i)+\nabla f_k(x_i)^Tx-\nabla f_{\hat{k}(x_0)}(x_i)^Tx\leq0\right\}. P~i=k=1⋂c{ x:fk(xi)−fk^(x0)(xi)+∇fk(xi)Tx−∇fk^(x0)(xi)Tx≤0}.(10) And then in each iteration through d i s t ( x i , P ~ i ) \mathbf{dist}(x_i,\tilde{P}_i) dist(xi,P~i) To approximate d i s t ( x i , P i ) \mathbf{dist}(x_i,P_i) dist(xi,Pi). Algorithm 2 Shows the process . It should be noted that , The proposed algorithm runs greedily , Convergence to... Is not guaranteed The formula 1 Optimal perturbation in . The observation results in practice show that the proposed algorithm can produce very small disturbance , This is considered to be a good approximation of the minimum disturbance .

4.3 ℓ p \ell_p ℓp An extended version of the norm

DeepFool The previous steps of are in ℓ 2 \ell_2 ℓ2 Proceed under , When in ℓ p , p ∈ [ 1 , ∞ ) \ell_p,p\in[1,\infty) ℓp,p∈[1,∞) When you lower the constraint , Algorithm 2 No 10、11 The line needs to be replaced with :

l ^ ← arg min k ≠ k ^ ( x 0 ) ∣ f k ′ ∣ ∥ w k ′ ∥ q , (11) \tag{11} \hat{l} \leftarrow \underset{k \neq \hat{k}\left({x}_{0}\right)}{\arg \min } \frac{\left|f_{k}^{\prime}\right|}{\left\|{w}_{k}^{\prime}\right\|_{q}}, l^←k=k^(x0)argmin∥wk′∥q∣fk′∣,(11) r i ← ∣ f ı ^ ′ ∣ ∥ w ı ^ ′ ∥ q q ∣ w ı ^ ′ ∣ q − 1 ⊙ sign ( w l ^ ′ ) , (12) \tag{12} {r}_{i} \leftarrow \frac{\left|f_{\hat{\imath}}^{\prime}\right|}{\left\|{w}_{\hat{\imath}}^{\prime}\right\|_{q}^{q}}\left|{w}_{\hat{\imath}}^{\prime}\right|^{q-1} \odot \operatorname{sign}\left({w}_{\hat{l}}^{\prime}\right), ri←∥wı^′∥qq∣fı^′∣∣wı^′∣q−1⊙sign(wl^′),(12)

among ⊙ \odot ⊙ Multiply by elements 、 q = p p − 1 ⋅ q=\frac{p}{p-1} \cdot q=p−1p⋅. Specially p = ∞ p=\infty p=∞ when , Yes :

l ^ ← arg min k ≠ k ^ ( x 0 ) ∣ f k ′ ∣ ∥ w k ′ ∥ 1 , (13) \tag{13} \hat{l} \leftarrow \underset{k \neq \hat{k}\left(\boldsymbol{x}_{0}\right)}{\arg \min } \frac{\left|f_{k}^{\prime}\right|}{\left\|\boldsymbol{w}_{k}^{\prime}\right\|_{1}}, l^←k=k^(x0)argmin∥wk′∥1∣fk′∣,(13) r i ← ∣ f l ^ ′ ∣ ∥ w l ^ ′ ∥ 1 sign ( w l ^ ′ ) . (14) \tag{14} \boldsymbol{r}_{i} \leftarrow \frac{\left|f_{\hat{l}}^{\prime}\right|}{\left\|\boldsymbol{w}_{\hat{l}}^{\prime}\right\|_{1}} \operatorname{sign}\left(\boldsymbol{w}_{\hat{l}}^{\prime}\right). ri←∥∥∥wl^′∥∥∥1∣∣∣fl^′∣∣∣sign(wl^′).(14)

边栏推荐

- midway的使用教程

- MTK基于GAT工具和SpOffineDebugSuite工具 dump 抓取和解析

- leetcode 1332. Remove palindromic subsequences

- Zen project management flow

- Curator - Create Client

- MTK based on gat tool and spoffinedebugsuite tool dump capture and parsing

- stack_ quick_ sort

- Streaming media pressure measurement st load

- MySQL uses where in under the InnoDB engine to cause lock escalation

- . Net C Foundation (7): interface - how people interact with cats

猜你喜欢

![[live dialogue] graph computing is the next frontier of science and technology](/img/23/7477ec2c81866ece291b01db9b7e4b.png)

[live dialogue] graph computing is the next frontier of science and technology

使用GAT解析Minidump(图形界面)

JS wechat games - fighting mosquitoes

Bubble Sort Bubble_ sort

全球首个金融图数据库测试基准立项,蚂蚁集团开放专利共建

Record the realization of animation effect on the page of small rocket of BiliBili (station B)

Methods of using variables in shell parameters

With the advent of the digital wave, how to achieve agile business delivery and sustainable technology governance? Uncover the ant bizstack

How to spread the whole page evenly in word

Be diligent in making money abroad and talk about the importance of tasks

随机推荐

Personnaliser le plug - in bulles JS prompt tooltips

He said it was difficult to make lead abroad

Using kubekey to build kubernetes/kubesphere environment“

Sequential search, binary search

蚂蚁集团三项技术方案入选“2021年信息技术应用创新典型解决方案”

Api 接口优化的几个技巧

Talking about thread pool with pictures and texts

MYSQL第二篇(核心技术)

Using gat to parse minidump (graphical interface)

Common English abbreviations of display

[STM32] migration of guilite based on Hal Library

R language party package ctree function builds conditional inference decision trees, and uses plot function to visualize the trained conditional inference decision trees

Installation and configuration of NPM and yarn

Three technical solutions of ant group were selected as "typical solutions for it application innovation in 2021"

Web171~180 of ctfshow - SQL injection (1)

Web89~web96---php feature of ctfshow (1)

Safari's favorites item does not appear on the home page

. Net C Foundation (7): interface - how people interact with cats

Curator - node type

Clear table selection