当前位置:网站首页>MMdet中的Resnet源码解读+Ghost模块

MMdet中的Resnet源码解读+Ghost模块

2022-06-29 10:44:00 【故乡的云和星星】

1.Ghostnet中的Ghost模块和Resnet模块源码讲解

1.1Ghostnet+MMdet+Resnet

1.1.1Ghostnet中的Ghost模块和残差块

class GhostModule(nn.Module):

def __init__(self, inp, oup, kernel_size=1, ratio=2, dw_size=3, stride=1, relu=True):

super(GhostModule, self).__init__()

self.oup = oup

init_channels = math.ceil(oup / ratio)

new_channels = init_channels*(ratio-1)

self.primary_conv = nn.Sequential(

nn.Conv2d(inp, init_channels, kernel_size, stride, kernel_size//2, bias=False),

nn.BatchNorm2d(init_channels),

nn.ReLU(inplace=True) if relu else nn.Sequential(),

)

self.cheap_operation = nn.Sequential(

nn.Conv2d(init_channels, new_channels, dw_size, 1, dw_size//2, groups=init_channels, bias=False),

nn.BatchNorm2d(new_channels),

nn.ReLU(inplace=True) if relu else nn.Sequential(),

)

def forward(self, x):

x1 = self.primary_conv(x)

x2 = self.cheap_operation(x1)

out = torch.cat([x1,x2], dim=1)

return out[:,:self.oup,:,:]

class GhostBottleneck(nn.Module):

""" Ghost bottleneck w/ optional SE"""

def __init__(self, in_chs, mid_chs, out_chs, dw_kernel_size=3,

stride=1, act_layer=nn.ReLU, se_ratio=0.):

super(GhostBottleneck, self).__init__()

has_se = se_ratio is not None and se_ratio > 0.

self.stride = stride

# Point-wise expansion

self.ghost1 = GhostModule(in_chs, mid_chs, relu=True)

# Depth-wise convolution

if self.stride > 1:

self.conv_dw = nn.Conv2d(mid_chs, mid_chs, dw_kernel_size, stride=stride,

padding=(dw_kernel_size - 1) // 2,

groups=mid_chs, bias=False)

self.bn_dw = nn.BatchNorm2d(mid_chs)

# Squeeze-and-excitation

if has_se:

self.se = SqueezeExcite(mid_chs, se_ratio=se_ratio)

else:

self.se = None

# Point-wise linear projection

self.ghost2 = GhostModule(mid_chs, out_chs, relu=False)

# shortcut

if (in_chs == out_chs and self.stride == 1):

self.shortcut = nn.Sequential()

else:

self.shortcut = nn.Sequential(

nn.Conv2d(in_chs, in_chs, dw_kernel_size, stride=stride,

padding=(dw_kernel_size - 1) // 2, groups=in_chs, bias=False),

nn.BatchNorm2d(in_chs),

nn.Conv2d(in_chs, out_chs, 1, stride=1, padding=0, bias=False),

nn.BatchNorm2d(out_chs),

)

def forward(self, x):

residual = x

# 1st ghost bottleneck

x = self.ghost1(x)

# Depth-wise convolution

if self.stride > 1:

x = self.conv_dw(x)

x = self.bn_dw(x)

# Squeeze-and-excitation

if self.se is not None:

x = self.se(x)

# 2nd ghost bottleneck

x = self.ghost2(x)

x += self.shortcut(residual)

return x1.1.2 Resnet模块源码讲解

1.1.3 各种参数讲解:

"""ResNet backbone.

Args:

depth (int): Depth of resnet, from {18, 34, 50, 101, 152}.

stem_channels (int | None): Number of stem channels. If not specified,

it will be the same as `base_channels`. Default: None.

base_channels (int): Number of base channels of res layer. Default: 64.

in_channels (int): Number of input image channels. Default: 3.

num_stages (int): Resnet stages. Default: 4.

strides (Sequence[int]): Strides of the first block of each stage.

dilations (Sequence[int]): Dilation of each stage.

out_indices (Sequence[int]): Output from which stages.

style (str): `pytorch` or `caffe`. If set to "pytorch", the stride-two

layer is the 3x3 conv layer, otherwise the stride-two layer is

the first 1x1 conv layer.

deep_stem (bool): Replace 7x7 conv in input stem with 3 3x3 conv

avg_down (bool): Use AvgPool instead of stride conv when

downsampling in the bottleneck.

frozen_stages (int): Stages to be frozen (stop grad and set eval mode).

-1 means not freezing any parameters.

norm_cfg (dict): Dictionary to construct and config norm layer.

norm_eval (bool): Whether to set norm layers to eval mode, namely,

freeze running stats (mean and var). Note: Effect on Batch Norm

and its variants only.

plugins (list[dict]): List of plugins for stages, each dict contains:

- cfg (dict, required): Cfg dict to build plugin.

- position (str, required): Position inside block to insert

plugin, options are 'after_conv1', 'after_conv2', 'after_conv3'.

- stages (tuple[bool], optional): Stages to apply plugin, length

should be same as 'num_stages'.

with_cp (bool): Use checkpoint or not. Using checkpoint will save some

memory while slowing down the training speed.

zero_init_residual (bool): Whether to use zero init for last norm layer

in resblocks to let them behave as identity.

pretrained (str, optional): model pretrained path. Default: None

init_cfg (dict or list[dict], optional): Initialization config dict.

Default: None

Example:

>>> from mmdet.models import ResNet

>>> import torch

>>> self = ResNet(depth=18)

>>> self.eval()

>>> inputs = torch.rand(1, 3, 32, 32)

>>> level_outputs = self.forward(inputs)

>>> for level_out in level_outputs:

... print(tuple(level_out.shape))

(1, 64, 8, 8)

(1, 128, 4, 4)

(1, 256, 2, 2)

(1, 512, 1, 1)

ResNet backbone.

Args:

depth: (int) ResNet 的深度, 可以是 {18, 34, 50, 101, 152}.

in_channels: (int) 输入图像的通道数(默认: 3).

stem_channels: (int) stem 的通道数(默认: 64).

base_channels: (int) ResNet 的 res layer 的基础通道数(默认: 64).

num_stages: (int) 使用 ResNet 的 stage 数量(默认: 4).

strides: (Sequence[int]) 每个 stage 的第一个 block 的 stride, 如果为 2 进行 2 倍下采样.

dilations: (Sequence[int]) 每个 stage 中所有 block 的第一个卷积层的 dilation.

out_indices: (Sequence[int]) 需要输出的 stage 的索引.

style: (str) 'pytorch' 或 'caffe'.

如果使用 'pytorch', block 中 stride 为 2 的卷积层是 3x3 conv2, stride=2

如果使用 'caffe', block 中 stride 为 2 的卷积层是 1x1 conv1, stride=2

deep_stem: (bool) 如果为 True, 将 stem 的 7x7 conv 替换为 3 个 3x3 conv.

avg_down: (bool) 在下采样的时候使用 Avg pool 2x2 stride=2 代替带步长的卷积.

frozen_stages: (int) 冻结的 stage 数(停止更新梯度, 并开启eval模式), -1 代表不冻结.

conv_cfg: (dict) 构建 conv 的 config.

norm_cfg: (dict) 构建 norm 的 config.

norm_eval: (bool) 是否设置 norm 层为 eval 模式. 即冻结参数状态(mean, var).

dcn: (dict) 构建 DCN 的 config.

stage_with_dcn: (Sequence[bool]) 需要使用 DCN 的 stage.

plugins: (list[dict]) 为 stage 提供插件.

with_cp: (bool) 是否加载 checkpoint. 使用 checkpoint 会节省一部分内存, 同时会减少训练时间.

zero_init_residual: (bool) 是否使用 0 对所有 block 中的最后一个 norm 层初始化, 使其为恒等映射.

depth (int): resnet的深度,选项为{18, 34, 50, 101, 152}

stem_channels (int | None): 当开启deep_stem为True才有用,默认为None,则等于base_channels, 表示经过stem layer后输出的channels

base_channels (int): 经过conv1后的输出channels,默认为64

in_channels (int): 输入channels,没什么好说,RGB,默认为3

num_stages (int): res layer的层数,从上面介绍的网络结构可见,默认为4

strides (Sequence[int]): 每一个res layer的第一个基本单元的stride,可见除了res layer1的stride=1不作下采样,其余都为2,所以默认为(1, 2, 2, 2)

dilations (Sequence[int]): 每一res layer的空洞卷积,其中空洞卷积只会应用在BasicBlock的第一个卷积,或者是BottlenetBlock的第2个卷积。并且padding==dilation,使得输入输出特征图大小不变。

out_indices (Sequence[int]):按out_indices指定顺序输出,其中int表示第几个res layer层的输出,从0开始计数。默认为(0, 1, 2, 3)。

style (str): `pytorch` or `caffe`. 之前介绍过

deep_stem (bool): 之前介绍过

avg_down (bool): 之前介绍过

frozen_stages (int): 冻结梯度以及设置eval模式的前n个stages。为0表示conv1、norm1(或者stem);为1表示res layer1,以此类推;-1 表示不冻结任何。

conv_cfg(dict|None):卷积的配置字典,默认为None,采用Conv2d

norm_cfg (dict): norm层的配置字典,如:dict(type='BN', requires_grad=True)

norm_eval (bool): 默认为True,这样在训练时就不会更新norm层中的均值和方差,当然norm层中的可学习参数beta等还是会更新的。这是一个迁移训练技巧,加载的预训练模型,并把norm设置为eval。

dcn(dict|None):DCN的配置文件,默认不采用deformable convolution

stage_with_dcn(Sequence[bool]):指定每一个res layer是否采用DCN,默认为(False, False, False, False)

plugins (list[dict]): 指定拓展模块,比如nonlocal。其中字典需要包括以下字段:

- position (str): 在stage的哪个卷积后插入,选项为 'after_conv1', 'after_conv2', 'after_conv3'.

- stages (tuple[bool], optional): 在哪一个stage使用这个操作

-cfg (dict): 该拓展模块的配置文件

with_cp (bool):是否采用torch.utils.checkpoint.checkpoint方法

Example:

>>> from mmdet.models import ResNet

>>> import torch

>>> self = ResNet(depth=18)

>>> self.eval()

>>> inputs = torch.rand(1, 3, 32, 32)

>>> level_outputs = self.forward(inputs)

>>> for level_out in level_outputs:

... print(tuple(level_out.shape))

(1, 64, 8, 8)

(1, 128, 4, 4)

(1, 256, 2, 2)

(1, 512, 1, 1)

"""1.1.4 Resnet模块的残差块

class BasicBlock(BaseModule):

expansion = 1 # 输出通道数为输入通道数的倍数.(输出通道数 == 输入通道数)

def __init__(self,

inplanes,

planes,

stride=1,

dilation=1,

downsample=None,

style='pytorch',

with_cp=False,

conv_cfg=None,

norm_cfg=dict(type='BN'),

dcn=None,

plugins=None,

init_cfg=None):

super(BasicBlock, self).__init__(init_cfg)

assert dcn is None, 'Not implemented yet.'

assert plugins is None, 'Not implemented yet.' # conv3x3 --> bn1 --> relu --> conv3x3 --> bn2 --> relu

# bn1, bn2对隐藏层输入的分布进行平滑,缓解随机梯度下降权重更新对后续层的负面影响

self.norm1_name, norm1 = build_norm_layer(norm_cfg, planes, postfix=1)

self.norm2_name, norm2 = build_norm_layer(norm_cfg, planes, postfix=2)

# conv1: 3x3 conv

# 当 conv 为 3x3 且 padding = dilation 时,原特征图大小只和 stride 有关

self.conv1 = build_conv_layer(

conv_cfg,

inplanes,

planes,

3,

stride=stride,

padding=dilation,

dilation=dilation,

bias=False)

self.add_module(self.norm1_name, norm1)

# conv2: 3x3 conv, stride = 1, padding = 1.

self.conv2 = build_conv_layer(

conv_cfg, planes, planes, 3, padding=1, bias=False)

self.add_module(self.norm2_name, norm2)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

self.stride = stride

self.dilation = dilation

self.with_cp = with_cp

@property

def norm1(self):

"""nn.Module: normalization layer after the first convolution layer第一卷积层之后的归一化层"""

return getattr(self, self.norm1_name)

@property

def norm2(self):

"""nn.Module: normalization layer after the second convolution layer第二卷积层之后的归一化层"""

return getattr(self, self.norm2_name)

def forward(self, x):

"""Forward function."""

def _inner_forward(x):

identity = x

out = self.conv1(x)

out = self.norm1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.norm2(out)

# 需要保证 identity 和 x 的宽高相同.

if self.downsample is not None:

identity = self.downsample(x)

# h(x) = f(x) + x

out += identity

return out

# 加载预训练模型并且需要求导的时候, 使用 checkpoint 用时间换取空间。

# 这是因为加载权重后可以训练的 epoch 数少,可以考虑时间换取空间。

if self.with_cp and x.requires_grad:

# 使用 checkpoint 不保存中间计算的激活值, 在反向传播中重新计算一次中间激活值。

# 即重新运行一次检查点部分的前向传播,这是一种以时间换空间(显存)的方法。

out = cp.checkpoint(_inner_forward, x)

# 不加载权重,从零开始训练。不使用 ckpt,因为训练慢。

else:

out = _inner_forward(x)

# 注意:f(x) + x 之后再进行 relu

out = self.relu(out)

return outclass Bottleneck(BaseModule):

expansion = 4# 输出通道数为输入通道数的倍数. (输出通道数 == 4 × 输入通道数)

# def __init__(self,

# # 网络深度

# depth,

# # 输入图像的channel数

# in_channels=3,

# # 主干卷积层的channel数,默认等于base_channels

# stem_channels=None,

# base_channels=64,

# # stage数量

# num_stages=4,

# # 每个stage第一个残差块的stride参数

# strides=(1, 2, 2, 2),

# # 膨胀(空洞)卷积参数设置

# dilations=(1, 1, 1, 1),

# # 输出特征图的索引,每个stage对应一个

# out_indices=(0, 1, 2, 3),

# # 风格设置

# style='pytorch',

# # 是否用3个3×3的卷积核代替主干上1个7×7的卷积核

# deep_stem=False,

# # 是否使用平均池化代替stride为2的卷积操作进行下采样

# avg_down=False,

# # 冻结层数,-1表示不冻结

# frozen_stages=-1,

# # 构建卷积层的配置

# conv_cfg=None,

# # 构建归一化层的配置

# norm_cfg=dict(type='BN', requires_grad=True),

# norm_eval=True,

# # 是否使用dcn(可变形卷积)

# dcn=None,

# # 指定哪个stage使用dcn

# stage_with_dcn=(False, False, False, False),

# plugins=None,

# with_cp=False,

# # 是否对残差块进行0初始化

# zero_init_residual=True,

# # 预训练模型(已弃用,若指定会自动调用init_cfg)

# pretrained=None,

# # 指定预训练模型

def __init__(self,

inplanes,

planes,#平面图

stride=1,#步长

dilation=1,#空洞卷积参数设置

downsample=None,

style='pytorch',

with_cp=False,# 是否对残差块进行0初始化

conv_cfg=None,# 构建卷积层的配置

norm_cfg=dict(type='BN'),# 构建归一化层的配置

dcn=None,# 是否使用dcn(可变形卷积)

plugins=None,

init_cfg=None):

"""Bottleneck block for ResNet.

ResNet 50, 101, 152 使用的 block

Args:

style:(str) 'pytorch' 或 'caffe'.

如果使用 'pytorch', block 中 stride 为 2 的卷积层是 3x3 conv, stride=2

如果使用 'caffe', block 中 stride 为 2 的卷积层是 1x1 conv, stride=2

If style is "pytorch", the stride-two layer is the 3x3 conv layer, if

it is "caffe", the stride-two layer is the first 1x1 conv layer.

如果样式为“pytorch”,则跨步二层为3x3转换层,如果a层为“caffe”,跨步二层为第一个1x1转换层。

"""

super(Bottleneck, self).__init__(init_cfg)

assert style in ['pytorch', 'caffe']

assert dcn is None or isinstance(dcn, dict)

assert plugins is None or isinstance(plugins, list)

if plugins is not None:

allowed_position = ['after_conv1', 'after_conv2', 'after_conv3']

assert all(p['position'] in allowed_position for p in plugins)

#初始化

self.inplanes = inplanes

self.planes = planes

self.stride = stride

self.dilation = dilation

self.style = style

self.with_cp = with_cp

self.conv_cfg = conv_cfg

self.norm_cfg = norm_cfg

self.dcn = dcn

self.with_dcn = dcn is not None

self.plugins = plugins

self.with_plugins = plugins is not None

if self.with_plugins:

# collect plugins for conv1/conv2/conv3

self.after_conv1_plugins = [

plugin['cfg'] for plugin in plugins

if plugin['position'] == 'after_conv1'

]

self.after_conv2_plugins = [

plugin['cfg'] for plugin in plugins

if plugin['position'] == 'after_conv2'

]

self.after_conv3_plugins = [

plugin['cfg'] for plugin in plugins

if plugin['position'] == 'after_conv3'

]

if self.style == 'pytorch':

self.conv1_stride = 1

self.conv2_stride = stride

else:

self.conv1_stride = stride

self.conv2_stride = 1

# conv1x1 --> bn1 --> relu

# conv3x3 --> bn2 --> relu

# conv1x1 --> bn3

self.norm1_name, norm1 = build_norm_layer(norm_cfg, planes, postfix=1)

self.norm2_name, norm2 = build_norm_layer(norm_cfg, planes, postfix=2)

self.norm3_name, norm3 = build_norm_layer(

norm_cfg, planes * self.expansion, postfix=3)

self.conv1 = build_conv_layer(

conv_cfg,

inplanes,

planes,

kernel_size=1,

stride=self.conv1_stride,

bias=False)

self.add_module(self.norm1_name, norm1)

fallback_on_stride = False

if self.with_dcn:

fallback_on_stride = dcn.pop('fallback_on_stride', False)

if not self.with_dcn or fallback_on_stride:

self.conv2 = build_conv_layer(

conv_cfg,

planes,

planes,

kernel_size=3,

stride=self.conv2_stride,

padding=dilation,

dilation=dilation,

bias=False)

else:

assert self.conv_cfg is None, 'conv_cfg must be None for DCN'

self.conv2 = build_conv_layer(

dcn,

planes,

planes,

kernel_size=3,

stride=self.conv2_stride,

padding=dilation,

dilation=dilation,

bias=False)

self.add_module(self.norm2_name, norm2)

self.conv3 = build_conv_layer(

conv_cfg,

planes,

planes * self.expansion,

kernel_size=1,

bias=False)

self.add_module(self.norm3_name, norm3)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

if self.with_plugins:

self.after_conv1_plugin_names = self.make_block_plugins(

planes, self.after_conv1_plugins)

self.after_conv2_plugin_names = self.make_block_plugins(

planes, self.after_conv2_plugins)

self.after_conv3_plugin_names = self.make_block_plugins(

planes * self.expansion, self.after_conv3_plugins)

def make_block_plugins(self, in_channels, plugins):

"""make plugins for block.

Args:

in_channels (int): Input channels of plugin.

plugins (list[dict]): List of plugins cfg to build.

Returns:

list[str]: List of the names of plugin.

"""

assert isinstance(plugins, list)

plugin_names = []

for plugin in plugins:

plugin = plugin.copy()

name, layer = build_plugin_layer(

plugin,

in_channels=in_channels,

postfix=plugin.pop('postfix', ''))

assert not hasattr(self, name), f'duplicate plugin {name}'

self.add_module(name, layer)

plugin_names.append(name)

return plugin_names

def forward_plugin(self, x, plugin_names):

out = x

for name in plugin_names:

out = getattr(self, name)(x)

return out

@property

def norm1(self):

"""nn.Module: normalization layer after the first convolution layer"""

return getattr(self, self.norm1_name)

@property

def norm2(self):

"""nn.Module: normalization layer after the second convolution layer"""

return getattr(self, self.norm2_name)

@property

def norm3(self):

"""nn.Module: normalization layer after the third convolution layer"""

return getattr(self, self.norm3_name)

def forward(self, x):

"""Forward function."""

def _inner_forward(x):

identity = x

out = self.conv1(x)

out = self.norm1(out)

out = self.relu(out)

if self.with_plugins:

out = self.forward_plugin(out, self.after_conv1_plugin_names)

out = self.conv2(out)

out = self.norm2(out)

out = self.relu(out)

if self.with_plugins:

out = self.forward_plugin(out, self.after_conv2_plugin_names)

out = self.conv3(out)

out = self.norm3(out)

if self.with_plugins:

out = self.forward_plugin(out, self.after_conv3_plugin_names)

if self.downsample is not None:

identity = self.downsample(x)

out += identity

return out

if self.with_cp and x.requires_grad:

out = cp.checkpoint(_inner_forward, x)

else:

out = _inner_forward(x)

out = self.relu(out)

return out

边栏推荐

- Is it safe to open a stock account online

- 新版CorelDRAW Technical Suite2022最新详细功能介绍

- 在日本的 IT 公司工作是怎样一番体验?

- XML外部实体注入漏洞(一)

- Modbustcp protocol WiFi wireless learning single channel infrared module (small shell version)

- [HBZ sharing] InnoDB principle of MySQL

- Qt学习15 用户界面与业务逻辑的分离

- When a technician becomes a CEO, what "bugs" should be modified?

- The first "cyborg" in the world died, and he only transformed himself to "change his life against the sky"

- Today in history: musk was born; Microsoft launches office 365; The inventor of Chua's circuit was born

猜你喜欢

![LeetCode 535 TinyURL的加密与解密[map] HERODING的LeetCode之路](/img/76/709bbbbd8eb01f32683a96c4abddb9.png)

LeetCode 535 TinyURL的加密与解密[map] HERODING的LeetCode之路

Exclusive interview with CTO: the company has deepened the product layout and accelerated the technological innovation of domestic EDA

Modbustcp protocol WiFi wireless learning single channel infrared module (small shell version)

Rebuild confidence in China's scientific research - the latest nature index 2022 released that China's research output increased the most

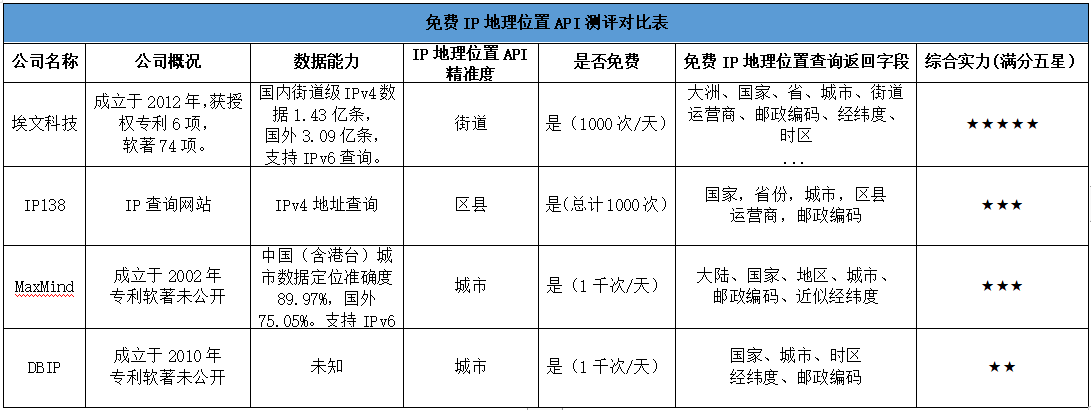

关于IP定位查询接口的测评Ⅱ

Hit the industry directly! The first model selection tool in the industry was launched by the flying propeller

Qt学习03 Qt的诞生和本质

Good news | Haitai Fangyuan has passed the cmmi-3 qualification certification, and its R & D capability has been internationally recognized

![Leetcode 535 encryption and decryption of tinyurl [map] the leetcode road of heroding](/img/76/709bbbbd8eb01f32683a96c4abddb9.png)

Leetcode 535 encryption and decryption of tinyurl [map] the leetcode road of heroding

Necessary for cloud native development: the first common codeless development platform IVX editor

随机推荐

分布式缓存之Memcached

又拍雲 Redis 的改進之路

Modbustcp protocol WiFi wireless learning single channel infrared module (round shell version)

(JS) imitate an instanceof method

Interview questions of Tencent automation software test of CSDN salary increase secret script (including answers)

Hit the industry directly! The first model selection tool in the industry was launched by the flying propeller

[various * * question series] what are OLTP and OLAP?

Unity学习笔记--Vector3怎么设置默认参数

喜报|海泰方圆通过CMMI-3资质认证,研发能力获国际认可

ETL为什么经常变成ELT甚至LET?

斐波那锲数列与冒泡排序法在C语言中的用法

Rebuild confidence in China's scientific research - the latest nature index 2022 released that China's research output increased the most

MySQL 索引失效的几种类型以及解决方式

Qt学习02 GUI程序实例分析

[daily 3 questions (3)] reformat the phone number

Qt学习15 用户界面与业务逻辑的分离

Xuetong denies that the theft of QQ number is related to it: it has been reported; IPhone 14 is ready for mass production: four models are launched simultaneously; Simple and elegant software has long

[HBZ sharing] the principle of reentrantlock realized by AQS + CAS +locksupport

Qt学习08 启航!第一个应用实例

Oracle NetSuite 助力 TCM Bio,洞悉数据变化,让业务发展更灵活