
Troubleshooting a Kubernetes problem: deleting a Rancher namespace by mistake empties the cluster's node list

2022-06-24 13:24:00 (imroc)

This article is excerpted from Kubernetes Learning Notes.

Problem description

All nodes of a cluster suddenly disappeared (`kubectl get node` returned nothing) and the cluster was paralyzed, even though the virtual machines backing those nodes were still running. Because auditing was not enabled on the cluster, it was hard to find out what had deleted the Node objects.
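With API server auditing enabled, the question "what deleted the Nodes?" would have been answerable from the audit log. A minimal sketch, assuming a self-managed control plane where you control the kube-apiserver flags `--audit-policy-file` and `--audit-log-path`: the Python below builds an `audit.k8s.io/v1` Policy that records deletions of Nodes and Namespaces at `Metadata` level and drops everything else. The choice of rules is an illustration, not a recommended production policy. JSON is valid YAML, so the printed output can be saved directly as the policy file.

```python
import json


def deletion_audit_policy():
    """Build a kube-apiserver audit Policy (audit.k8s.io/v1) as a dict.

    Rules are evaluated in order and the first match wins, so the
    catch-all "None" rule has to come last.
    """
    return {
        "apiVersion": "audit.k8s.io/v1",
        "kind": "Policy",
        "rules": [
            {
                # Record who deleted Nodes or Namespaces, and when.
                "level": "Metadata",
                "verbs": ["delete", "deletecollection"],
                "resources": [
                    {"group": "", "resources": ["nodes", "namespaces"]},
                ],
            },
            # Illustrative choice: log nothing else.
            {"level": "None"},
        ],
    }


if __name__ == "__main__":
    # Save this output as the file passed to --audit-policy-file.
    print(json.dumps(deletion_audit_policy(), indent=2))
```

In the incident above, the user recorded for the Node deletions would have pointed straight at whatever credentials Rancher's agent uses in that cluster.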

Fast recovery

Since only the Kubernetes Node objects had been deleted and the machines themselves were intact, we restarted the nodes in batches. On restart, kubelet registers its Node again, which restored the cluster.
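The article restarts whole nodes. A lighter variant, which is an assumption here rather than something the article tested, is to restart only the kubelet service, since kubelet registers its Node object with the API server on startup (`--register-node` defaults to true). The sketch below only plans the work: it splits a host list into batches and builds `ssh` / `systemctl` commands without running them. SSH access, a systemd-managed kubelet and the host names are all assumptions.

```python
def restart_plan(hosts, batch_size):
    """Split hosts into batches of kubelet-restart commands (dry run).

    Assumes SSH access and a systemd-managed kubelet; nothing is executed.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    plan = []
    for start in range(0, len(hosts), batch_size):
        batch = hosts[start:start + batch_size]
        plan.append([["ssh", host, "sudo", "systemctl", "restart", "kubelet"]
                     for host in batch])
    return plan


if __name__ == "__main__":
    # Hypothetical host names. Wait until a batch shows up in
    # `kubectl get node` again before starting the next one.
    for i, batch in enumerate(restart_plan(["node-1", "node-2", "node-3"], 2), 1):
        print(f"batch {i}:", [" ".join(cmd) for cmd in batch])
```

Batching keeps most of the fleet untouched at any moment, so a mistake in the procedure only affects one batch.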

Suspicious operation

Shortly before the nodes disappeared, there was one suspicious operation: someone noticed many oddly named namespaces (such as c-dxkxf) in another cluster, saw that no workloads were running in them, assumed they were test namespaces created by someone else, and deleted them.
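"No workloads running" is a weak signal that a namespace is unused: `kubectl get all` covers only a fixed set of built-in types, and custom resources do not appear in it unless their CRD opts into the `all` category. A hedged sketch of a more thorough pre-deletion check: given a per-namespace inventory (resource type to object count), which could be collected by running `kubectl get <type> -n <ns> -o name` for every type listed by `kubectl api-resources --verbs=list --namespaced -o name`, report the non-workload types that still hold objects. The inventory format and the workload list are assumptions made for illustration.

```python
# Built-in workload types; everything else counts as "hidden" state.
WORKLOAD_TYPES = frozenset({
    "pods",
    "deployments.apps",
    "replicasets.apps",
    "statefulsets.apps",
    "daemonsets.apps",
    "jobs.batch",
    "cronjobs.batch",
})


def hidden_objects(inventory, ignore=frozenset()):
    """Return sorted non-workload resource types that still hold objects.

    inventory: mapping of resource type (as printed by
    `kubectl api-resources -o name`) to object count in one namespace.
    ignore: types to skip, e.g. ones present in every namespace.
    """
    return sorted(
        rtype
        for rtype, count in inventory.items()
        if count > 0 and rtype not in WORKLOAD_TYPES and rtype not in ignore
    )


if __name__ == "__main__":
    # Hypothetical inventory for a namespace like c-dxkxf.
    inventory = {
        "pods": 0,
        "deployments.apps": 0,
        "serviceaccounts": 1,
        "nodes.management.cattle.io": 3,
    }
    print(hidden_objects(inventory, ignore={"serviceaccounts"}))
```

Every namespace normally contains a `default` ServiceAccount, which is why the usage example ignores `serviceaccounts`; anything left over deserves a closer look before deletion.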

Analysis

Rancher was installed in the cluster where the namespaces were deleted, so we suspected that the deleted namespaces had been created automatically by Rancher.

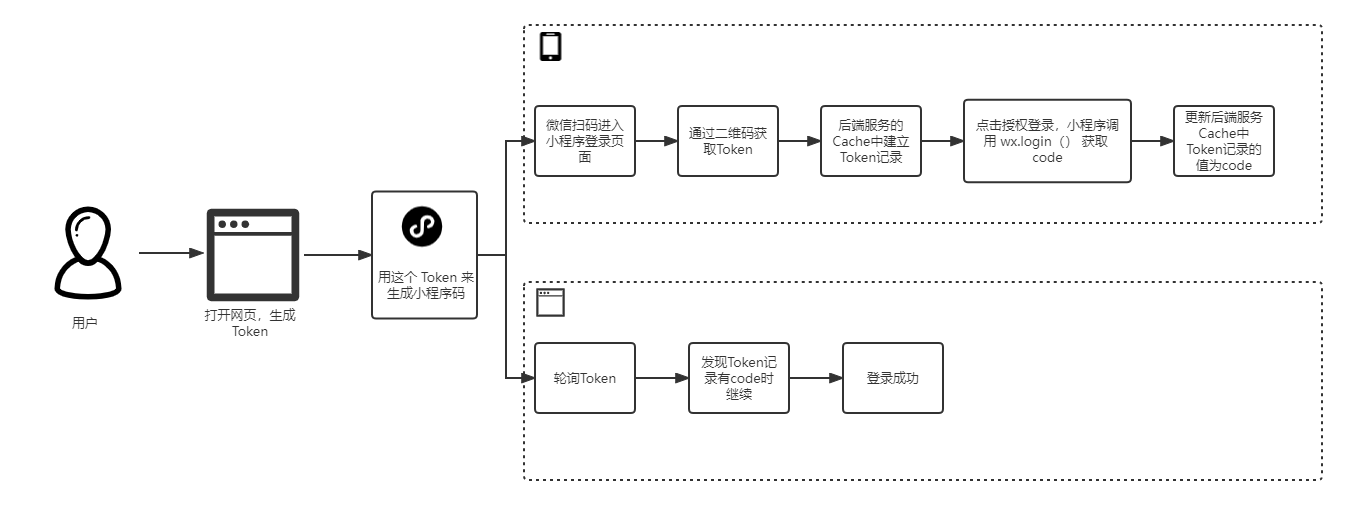

Rancher manages the other Kubernetes clusters. Architecture diagram:

Hypothesis: the deleted namespaces were created by Rancher, so deleting them also removed Rancher resources, which in turn triggered Rancher's logic for cleaning up nodes.

Reproducing the problem

To verify the hypothesis, we tried to reproduce the problem:

- Create a Kubernetes cluster to serve as Rancher's root cluster, and install Rancher in it.

- Open the Rancher web UI and create a cluster using the Import option:

- Enter the cluster name:

- A prompt appears asking you to run the following kubectl command in the other cluster to import it into Rancher:

- Create another Kubernetes cluster to be managed by Rancher, and import its kubeconfig locally so it can be operated with kubectl.

- After importing the kubeconfig and switching context, run the kubectl command provided by Rancher to import the cluster into Rancher:

The cattle-system namespace is created automatically in the managed TKE cluster, and some Rancher agents run in it:

- Switch the context to the cluster where Rancher is installed (the root cluster). After the cluster has been added, several namespaces have been created automatically: one whose name starts with `c-` and two that start with `p-`:

A reasonable guess is that Rancher uses the `c-` namespace to store information about the added cluster and the `p-` namespaces to store project-related information; Rancher's documentation also states that two projects are created automatically for every cluster:
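The naming guess above can be written down as a small classifier for namespaces in the root cluster. This is only a heuristic sketch: the patterns are derived from the names seen in this experiment (such as c-dxkxf), not from a documented Rancher naming contract, and the `p-` names in the usage example are hypothetical.

```python
import re
from collections import Counter

# Heuristic patterns based on the names observed in this experiment.
CLUSTER_NS = re.compile(r"^c-[a-z0-9]+$")
PROJECT_NS = re.compile(r"^p-[a-z0-9]+$")


def classify(namespace):
    """Guess what a root-cluster namespace holds from its name alone."""
    if CLUSTER_NS.match(namespace):
        return "cluster"
    if PROJECT_NS.match(namespace):
        return "project"
    return "other"


def summarize(namespaces):
    """Count namespaces per guessed category."""
    return Counter(classify(ns) for ns in namespaces)


if __name__ == "__main__":
    # c-dxkxf comes from the article; the p-* names are hypothetical.
    names = ["default", "kube-system", "c-dxkxf", "p-abc12", "p-def34"]
    print(summarize(names))
```

After importing one cluster, the expected pattern from the article is one `cluster` and two `project` entries.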

- Look at Rancher's CRDs. One of them, `nodes.management.cattle.io`, stands out; it is evidently used to store a cluster's node information:

- Check which namespace the node records are stored in (as expected, the namespace starting with `c-`):

- Try deleting the namespace starting with `c-`, then switch the context to the added cluster and run `kubectl get node`:

The nodes have been cleared: the problem is reproduced.
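The inspection steps of the reproduction can be scripted. A CRD's name is always `<plural>.<group>`, so `nodes.management.cattle.io` is the `nodes` resource in the `management.cattle.io` API group; the fully qualified name matters because plain `kubectl get node` returns the core Kubernetes Nodes instead. The hedged sketch below builds the kubectl arguments and groups the JSON List returned by `kubectl get nodes.management.cattle.io --all-namespaces -o json` by namespace. The sample object names are hypothetical and the sample is trimmed to the fields the code reads.

```python
import json
from collections import defaultdict


def split_crd_name(crd_name):
    """Split a CRD name of the form <plural>.<group>."""
    plural, sep, group = crd_name.partition(".")
    if not (sep and plural and group):
        raise ValueError(f"not a <plural>.<group> name: {crd_name!r}")
    return plural, group


def kubectl_get_args(resource, namespace=None):
    """kubectl arguments that list a resource in one or all namespaces."""
    scope = ["-n", namespace] if namespace else ["--all-namespaces"]
    return ["kubectl", "get", resource, *scope, "-o", "json"]


def names_by_namespace(list_json):
    """Group the items of a `kubectl get ... -o json` List by namespace."""
    grouped = defaultdict(list)
    for item in json.loads(list_json).get("items", []):
        meta = item.get("metadata", {})
        grouped[meta.get("namespace", "")].append(meta.get("name", ""))
    return {ns: sorted(names) for ns, names in grouped.items()}


if __name__ == "__main__":
    print(split_crd_name("nodes.management.cattle.io"))
    print(kubectl_get_args("nodes.management.cattle.io"))
    # Hypothetical, trimmed-down output for two node records.
    sample = json.dumps({"kind": "List", "items": [
        {"metadata": {"namespace": "c-dxkxf", "name": "m-2"}},
        {"metadata": {"namespace": "c-dxkxf", "name": "m-1"}},
    ]})
    print(names_by_namespace(sample))
```

If every node record lands in a single `c-` namespace, that namespace is the only thing standing between the managed cluster and an empty node list.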

Conclusion

The experiment shows that Rancher's `c-` namespace stores the node information of the added cluster. Deleting this namespace deletes the stored node records, and Rancher, which watches them, then automatically deletes the corresponding Kubernetes Node resources in the associated cluster.
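The mechanism in the conclusion can be illustrated with a toy model. This is a simplification written for this note, not Rancher's controller code: the root cluster keeps a set of node records per `c-` namespace, deleting the namespace deletes the records with it, and a reconcile step that treats the records as the desired state then marks every downstream Node without a record for deletion.

```python
from dataclasses import dataclass, field


@dataclass
class RootCluster:
    """Toy root cluster: namespace -> names of recorded downstream nodes."""
    node_records: dict = field(default_factory=dict)

    def delete_namespace(self, namespace):
        # Deleting a namespace also deletes every object stored in it.
        self.node_records.pop(namespace, None)


def nodes_to_delete(root, cluster_ns, downstream_nodes):
    """Downstream Nodes that have no matching record in the cluster's namespace."""
    recorded = root.node_records.get(cluster_ns, set())
    return sorted(n for n in downstream_nodes if n not in recorded)


if __name__ == "__main__":
    # Hypothetical node names for a cluster stored in c-dxkxf.
    root = RootCluster({"c-dxkxf": {"10.0.0.1", "10.0.0.2"}})
    nodes = ["10.0.0.1", "10.0.0.2"]
    print(nodes_to_delete(root, "c-dxkxf", nodes))  # nothing marked
    root.delete_namespace("c-dxkxf")
    print(nodes_to_delete(root, "c-dxkxf", nodes))  # every node marked
```

A real controller might instead react to each record's deletion event; either way, the records in the root cluster act as the source of truth for the managed cluster's nodes.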

Therefore, never casually clean up namespaces created by Rancher. Rancher stores some stateful information directly in the root cluster (as CRD resources), and deleting such a namespace can have very serious consequences.
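One way to turn this lesson into a habit is a pre-deletion guard. A minimal sketch under stated assumptions: the name patterns are heuristics taken from this experiment (`c-`/`p-` namespaces in the root cluster, `cattle-system` in managed clusters), any resource type whose API group ends in `cattle.io` (as `management.cattle.io` does) is treated as Rancher state, and the list of resource types is assumed to come from an inventory like the one described earlier.

```python
import re

# Heuristics from this experiment, not an exhaustive list of Rancher namespaces.
RANCHER_NAME = re.compile(r"^[cp]-[a-z0-9]+$")
RANCHER_FIXED = frozenset({"cattle-system"})


def deletion_blockers(namespace, resource_types):
    """Reasons not to delete a namespace; an empty list means no red flags.

    resource_types: types that still hold objects in the namespace, in
    `kubectl api-resources -o name` form (e.g. nodes.management.cattle.io).
    """
    reasons = []
    if RANCHER_NAME.match(namespace) or namespace in RANCHER_FIXED:
        reasons.append("name looks Rancher-generated")
    cattle = sorted(t for t in resource_types if t.endswith(".cattle.io"))
    if cattle:
        reasons.append("holds Rancher resources: " + ", ".join(cattle))
    return reasons


if __name__ == "__main__":
    print(deletion_blockers("c-dxkxf", ["nodes.management.cattle.io"]))
```

An empty result only means none of these heuristics fired; it does not prove that deleting the namespace is safe.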
