Text sentiment analysis with PyTorch

2022-07-23 06:14:00 【CityD】

Text sentiment analysis

This article shows how to use neural networks for sentiment analysis. The main contents are:

- Loading pre-trained word vectors

- Preparing a sentiment analysis dataset

- Training a recurrent neural network model

- Training a one-dimensional convolutional neural network model

Reference: Dive into Deep Learning (动手学深度学习)

1. Loading pre-trained GloVe word vectors

The TokenEmbedding class below loads the pre-trained word vectors and looks them up by token.

import torch
import os
import collections
from torch import nn
from d2l import torch as d2l
from torch.utils.data import TensorDataset, DataLoader

'''Load and use pre-trained GloVe word vectors'''
class TokenEmbedding:
    def __init__(self, embedding_name):
        self.idx_to_token, self.idx_to_vec = self._load_embedding(
            embedding_name)
        self.unknown_idx = 0
        self.token_to_idx = {
            token: idx for idx, token in enumerate(self.idx_to_token)}

    def _load_embedding(self, embedding_name):
        # idx_to_token holds index -> token, idx_to_vec holds index -> vector
        idx_to_token, idx_to_vec = ['<unk>'], []
        data_dir = 'F:/ Thesis data set /glove.6B'
        with open(os.path.join(data_dir, embedding_name + '.txt'), 'r', encoding='UTF-8') as f:
            for line in f:
                # Split on spaces: the first element is the token, the rest is its vector
                elems = line.rstrip().split(' ')
                token, elems = elems[0], [float(elem) for elem in elems[1:]]
                # Skip header information
                if len(elems) > 1:
                    idx_to_token.append(token)
                    idx_to_vec.append(elems)
        # Prepend an all-zero vector for <unk> at index 0
        idx_to_vec = [[0] * len(idx_to_vec[0])] + idx_to_vec
        return idx_to_token, torch.tensor(idx_to_vec)

    def __getitem__(self, tokens):
        # Map each token to its index in the pre-trained vocabulary (0 if unknown)
        indices = [self.token_to_idx.get(token, self.unknown_idx)
                   for token in tokens]
        # Return the word vectors at those indices
        vecs = self.idx_to_vec[torch.tensor(indices)]
        return vecs

    def __len__(self):
        return len(self.idx_to_token)

glove_6b50d = TokenEmbedding('glove.6b.50d')
len(glove_6b50d.idx_to_token), len(glove_6b50d.idx_to_vec)

(400002, 400002)
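To make the file format concrete, here is a minimal sketch that parses a few GloVe-style lines from an in-memory list instead of a file. The two 3-dimensional vectors and the helper names `parse_glove_lines` and `lookup` are made up for illustration and are not part of the class above.

```python
def parse_glove_lines(lines):
    """Parse 'token v1 v2 ...' lines; index 0 is reserved for '<unk>'."""
    idx_to_token, idx_to_vec = ['<unk>'], []
    for line in lines:
        elems = line.rstrip().split(' ')
        token, values = elems[0], [float(v) for v in elems[1:]]
        if len(values) > 1:  # skip header-like lines that carry no vector
            idx_to_token.append(token)
            idx_to_vec.append(values)
    # The '<unk>' vector is all zeros, with the same width as the others
    idx_to_vec = [[0.0] * len(idx_to_vec[0])] + idx_to_vec
    token_to_idx = {token: idx for idx, token in enumerate(idx_to_token)}
    return idx_to_token, idx_to_vec, token_to_idx


# Hypothetical toy data in the GloVe text layout
toy_lines = ["the 0.1 0.2 0.3", "movie 0.4 0.5 0.6"]
idx_to_token, idx_to_vec, token_to_idx = parse_glove_lines(toy_lines)


def lookup(tokens):
    # Unknown tokens fall back to index 0, i.e. the zero vector
    return [idx_to_vec[token_to_idx.get(t, 0)] for t in tokens]


print(lookup(["movie", "unseen"]))
```

A token that is missing from the embedding file gets the zero vector, which is the same fallback the `unknown_idx = 0` convention provides.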

2. Preparing the sentiment analysis dataset

Many datasets exist for sentiment analysis. This article uses the large movie review dataset (IMDb). The raw data consists of review text and labels, so it must be processed before it can be fed to a model.

def read_imdb(data_dir, is_train):
    data, labels = [], []
    for label in ('pos', 'neg'):
        folder_name = os.path.join(data_dir, 'train' if is_train else 'test', label)
        # Iterate over every file in folder_name
        for file in os.listdir(folder_name):
            with open(os.path.join(folder_name, file), 'rb') as f:
                # Read the review text
                review = f.read().decode('utf-8').replace('\n', '')
                # Store the text and its label (1 = positive, 0 = negative)
                data.append(review)
                labels.append(1 if label == 'pos' else 0)
    # Return the texts and the labels
    return data, labels

Next, load the training set to test the function above.

data_dir = 'F:/ Thesis data set /aclImdb'
train_data = read_imdb(data_dir, is_train=True)
print('Number of training examples:', len(train_data[0]))
for x, y in zip(train_data[0][:3], train_data[1][:3]):
    print('label:', y, 'review:', x[0:60])

Number of training examples: 25000

label: 1 review: Bromwell High is a cartoon comedy. It ran at the same time a

label: 1 review: Homelessness (or Houselessness as George Carlin stated) has

label: 1 review: Brilliant over-acting by Lesley Ann Warren. Best dramatic ho

The tokenize function below splits each text sequence into a list of tokens.

# Split each line of text into word tokens or character tokens
def tokenize(lines, token='word'):
    # Split into words
    if token == 'word':
        return [line.split() for line in lines]
    # Split into characters
    elif token == 'char':
        return [list(line) for line in lines]
    else:
        print('Error: unknown token type: ' + token)

The Vocab class builds the vocabulary, a one-to-one mapping between each token and its index.

# Count token frequencies; returns a Counter (a dictionary of token -> number of occurrences)
def count_corpus(tokens):
    # tokens is either a 1D list or a 2D list
    if len(tokens) == 0 or isinstance(tokens[0], list):
        # Flatten a list of token lists into a single list
        tokens = [token for line in tokens for token in line]
    # Counter counts how often each element occurs and stores the counts as key-value pairs
    return collections.Counter(tokens)

# Vocabulary for text
class Vocab:
    def __init__(self, tokens=None, min_freq=0, reserved_tokens=None):
        if tokens is None:
            tokens = []
        if reserved_tokens is None:
            reserved_tokens = []
        # Sort tokens by frequency
        counter = count_corpus(tokens)
        # counter.items(): the (token, count) pairs
        # lambda x: x[1]: sort by the count
        # reverse=True: descending order
        self._token_freqs = sorted(counter.items(), key=lambda x: x[1], reverse=True)
        # The unknown token has index 0
        # idx_to_token stores every distinct token
        self.idx_to_token = ['<unk>'] + reserved_tokens
        # token_to_idx is a dictionary from each token to its index
        self.token_to_idx = {
            token: idx for idx, token in enumerate(self.idx_to_token)}
        for token, freq in self._token_freqs:
            # min_freq is the minimum number of occurrences; rarer tokens are discarded
            if freq < min_freq:
                break
            # Add the token to the vocabulary if it is not there yet
            if token not in self.token_to_idx:
                self.idx_to_token.append(token)
                # The new token sits at the end of the list, so its index is len - 1
                self.token_to_idx[token] = len(self.idx_to_token) - 1

    # Return the size of the vocabulary
    def __len__(self):
        return len(self.idx_to_token)

    # Look up the index of a token; a list or tuple of tokens returns a list of indices
    def __getitem__(self, tokens):
        if not isinstance(tokens, (list, tuple)):
            # self.unk: return 0 if the token is not in the vocabulary
            return self.token_to_idx.get(tokens, self.unk)
        return [self.__getitem__(token) for token in tokens]

    # Look up the token for an index; a list or tuple of indices returns a list of tokens
    def to_tokens(self, indices):
        if not isinstance(indices, (list, tuple)):
            return self.idx_to_token[indices]
        return [self.idx_to_token[index] for index in indices]

    @property
    def unk(self):
        return 0

    @property
    def token_freqs(self):
        return self._token_freqs
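The frequency-based construction can be seen on a toy corpus. The following is a minimal standalone sketch of the same idea, not the class itself; the function name `build_vocab` and the two sample sentences are made up for illustration.

```python
import collections


def build_vocab(token_lists, min_freq=0, reserved_tokens=()):
    """Return (idx_to_token, token_to_idx), most frequent tokens first."""
    counter = collections.Counter(t for line in token_lists for t in line)
    token_freqs = sorted(counter.items(), key=lambda x: x[1], reverse=True)
    # Index 0 is the unknown token, followed by any reserved tokens
    idx_to_token = ['<unk>'] + list(reserved_tokens)
    token_to_idx = {t: i for i, t in enumerate(idx_to_token)}
    for token, freq in token_freqs:
        if freq < min_freq:
            break  # the list is sorted, so all remaining tokens are rarer
        if token not in token_to_idx:
            idx_to_token.append(token)
            token_to_idx[token] = len(idx_to_token) - 1
    return idx_to_token, token_to_idx


tokens = [line.split() for line in ["a good good film", "a bad film"]]
idx_to_token, token_to_idx = build_vocab(
    tokens, min_freq=2, reserved_tokens=['<pad>'])

print(idx_to_token)
# 'bad' occurs only once, so it is dropped and maps to the unknown index 0
print([token_to_idx.get(t, 0) for t in ["a", "bad", "film"]])
```

Raising `min_freq` shrinks the vocabulary and sends more rare words to the unknown index, which is the trade-off behind `min_freq=5` in the loader below.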

The load_array function creates a data iterator.

def load_array(data_arrays, batch_size, is_train=True):
    # Construct a PyTorch data iterator; shuffle only when training
    dataset = TensorDataset(*data_arrays)
    return DataLoader(dataset, batch_size, shuffle=is_train)

The truncate_pad function truncates or pads a sequence to a specified length.

def truncate_pad(line, num_steps, padding_token):
    if len(line) > num_steps:
        # Too long: keep only the first num_steps items
        return line[:num_steps]
    # Too short: append padding tokens up to num_steps
    return line + [padding_token] * (num_steps - len(line))
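A quick check of both branches, as a small sketch that repeats the function so it runs on its own; the sample index lists are arbitrary.

```python
def truncate_pad(line, num_steps, padding_token):
    if len(line) > num_steps:
        return line[:num_steps]
    return line + [padding_token] * (num_steps - len(line))


short = truncate_pad([5, 6, 7], 5, 0)
long = truncate_pad([5, 6, 7, 8, 9, 10], 5, 0)
print(short)  # [5, 6, 7, 0, 0]
print(long)   # [5, 6, 7, 8, 9]
```

Every sequence ends up with the same length, which is what allows the reviews to be stacked into a single tensor.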

Finally, combine the functions above into load_data_imdb, which returns the training and test data iterators together with the vocabulary of the IMDb review dataset.

'''Return the data iterators and the vocabulary of the IMDb review dataset'''
def load_data_imdb(batch_size, num_steps=500):
    data_dir = 'F:/ Thesis data set /aclImdb'
    train_data = read_imdb(data_dir, True)
    test_data = read_imdb(data_dir, False)
    # Tokenize each review into words
    train_tokens = tokenize(train_data[0], token='word')
    test_tokens = tokenize(test_data[0], token='word')
    # Build the vocabulary from the training tokens
    # (open question: should train_tokens and test_tokens be combined here?)
    # '<pad>' is reserved so that padding gets its own index instead of falling back to <unk>
    vocab = Vocab(train_tokens, min_freq=5, reserved_tokens=['<pad>'])
    # Convert each token to its index, then truncate or pad to a uniform length (500 by default)
    train_features = torch.tensor([truncate_pad(
        vocab[line], num_steps, vocab['<pad>']) for line in train_tokens])
    test_features = torch.tensor([truncate_pad(
        vocab[line], num_steps, vocab['<pad>']) for line in test_tokens])
    train_iter = load_array((train_features, torch.tensor(train_data[1])),
                            batch_size)
    test_iter = load_array((test_features, torch.tensor(test_data[1])),
                           batch_size,
                           is_train=False)
    return train_iter, test_iter, vocab

3. Training a recurrent neural network model

Next, build a recurrent neural network and train it on the dataset prepared above.

First, build the model: a two-layer bidirectional LSTM.

class BiRNN(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens,
                 num_layers, **kwargs):
        super(BiRNN, self).__init__(**kwargs)
        #self.embedding = nn.Embedding.from_pretrained(torch.tensor(embedding_matrix, dtype=torch.float), freeze=False)
        self.embedding = nn.Embedding(vocab_size, embed_size)
        # Set bidirectional=True to get a bidirectional recurrent network
        self.encoder = nn.LSTM(embed_size, num_hiddens, num_layers=num_layers, bidirectional=True, batch_first=True)
        self.decoder = nn.Linear(4 * num_hiddens, 2)

    def forward(self, inputs):
        # inputs has shape (batch size, time steps).
        # Because batch_first=True, the embedding output has shape
        # (batch size, time steps, word vector dimension)
        embeddings = self.embedding(inputs)
        self.encoder.flatten_parameters()
        # outputs holds the hidden states of the last LSTM layer at every time step;
        # its shape is (batch size, time steps, 2 * number of hidden units)
        outputs, _ = self.encoder(embeddings)
        # Concatenate the hidden states of the first and last time steps as the input
        # to the fully connected layer; the shape is (batch size, 4 * number of hidden units)
        encoding = torch.cat((outputs[:,0,:], outputs[:,-1,:]), dim=1)
        outs = self.decoder(encoding)
        return outs
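The factor 4 in the decoder's input size follows from the shapes. The sketch below checks them on random data; the sizes (batch of 4, 10 time steps, 8 input features, 16 hidden units) are arbitrary values chosen for illustration.

```python
import torch
from torch import nn

batch_size, num_steps, embed_size, num_hiddens = 4, 10, 8, 16

lstm = nn.LSTM(embed_size, num_hiddens, num_layers=2,
               bidirectional=True, batch_first=True)
x = torch.randn(batch_size, num_steps, embed_size)

# With batch_first=True the output is (batch, time steps, 2 * num_hiddens):
# the forward and backward hidden states are concatenated per time step
outputs, _ = lstm(x)

# First and last time steps side by side: 2 * (2 * num_hiddens) features
encoding = torch.cat((outputs[:, 0, :], outputs[:, -1, :]), dim=1)

print(tuple(outputs.shape))   # (4, 10, 32)
print(tuple(encoding.shape))  # (4, 64)
```

Each time step contributes `2 * num_hiddens` features, and two time steps are concatenated, hence `nn.Linear(4 * num_hiddens, 2)`.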

Load the dataset described in the previous section .

batch_size = 64

train_iter, test_iter, vocab = load_data_imdb(batch_size)

Next, load the pre-trained 100-dimensional GloVe embeddings and look up the word vector for each token in the vocabulary.

glove_embedding = TokenEmbedding('glove.6b.100d')

embeds = glove_embedding[vocab.idx_to_token]

embeds.shape

torch.Size([49346, 100])

def try_all_gpus():
    # Return all available GPUs, or [cpu] if there is no GPU
    devices = [torch.device(f'cuda:{i}') for i in range(torch.cuda.device_count())]
    return devices if devices else [torch.device('cpu')]

'''Compute the number of correct predictions'''
def accuracy(y_hat, y):
    # If y_hat holds one score per class, take the highest-scoring class as the prediction
    if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:
        y_hat = y_hat.argmax(axis=1)
    cmp = y_hat.type(y.dtype) == y
    return float(cmp.type(y.dtype).sum())

'''Compute the accuracy of a model on a dataset using a GPU'''
def evaluate_accuracy_gpu(net, data_iter, device=None):
    if isinstance(net, nn.Module):
        net.eval()  # Set the model to evaluation mode
        if not device:
            device = next(iter(net.parameters())).device
    # No. of correct predictions, no. of predictions
    metric = d2l.Accumulator(2)
    with torch.no_grad():
        for X, y in data_iter:
            if isinstance(X, list):
                # Required for BERT Fine-tuning (to be covered later)
                X = [x.to(device) for x in X]
            else:
                X = X.to(device)
            y = y.to(device)
            metric.add(accuracy(net(X), y), d2l.size(y))
    return metric[0] / metric[1]

'''Train on one mini-batch using multiple GPUs'''
def train_batch(net, X, y, loss, trainer, devices):
    if isinstance(X, list):
        X = [x.to(devices[0]) for x in X]
    else:
        X = X.to(devices[0])
    y = y.to(devices[0])
    net.train()
    trainer.zero_grad()
    pred = net(X)
    l = loss(pred, y)
    l.sum().backward()
    trainer.step()
    # scheduler.step()  # enable only if a learning-rate scheduler has been created
    train_loss_sum = l.sum()
    train_acc_sum = accuracy(pred, y)
    return train_loss_sum, train_acc_sum

'''Train a model using multiple GPUs'''
def train(net, train_iter, test_iter, loss, trainer, num_epochs,
          devices=try_all_gpus()):
    timer, num_batches = d2l.Timer(), len(train_iter)
    animator = d2l.Animator(xlabel='epoch', xlim=[1, num_epochs], ylim=[0, 1],
                            legend=['train loss', 'train acc', 'test acc'])
    net = nn.DataParallel(net, device_ids=devices).to(devices[0])
    for epoch in range(num_epochs):
        # Four sums: training loss, correct predictions, no. of examples, no. of predictions
        metric = d2l.Accumulator(4)
        for i, (features, labels) in enumerate(train_iter):
            timer.start()
            l, acc = train_batch(
                net, features, labels, loss, trainer, devices)
            metric.add(l, acc, labels.shape[0], labels.numel())
            timer.stop()
            if (i + 1) % (num_batches // 5) == 0 or i == num_batches - 1:
                animator.add(epoch + (i + 1) / num_batches,
                             (metric[0] / metric[2], metric[1] / metric[3],
                              None))
        test_acc = evaluate_accuracy_gpu(net, test_iter)
        animator.add(epoch + 1, (None, None, test_acc))
    print(f'loss {metric[0] / metric[2]:.3f}, train acc '
          f'{metric[1] / metric[3]:.3f}, test acc {test_acc:.3f}')
    print(f'{metric[2] * num_epochs / timer.sum():.1f} examples/sec on '
          f'{str(devices)}')

embed_size, num_hiddens, num_layers = 100, 100, 2
devices = try_all_gpus()
net = BiRNN(len(vocab), embed_size, num_hiddens, num_layers)
# Copy the pre-trained GloVe vectors into the embedding layer and freeze them
net.embedding.weight.data.copy_(embeds)
net.embedding.weight.requires_grad = False

# Initialize model parameters
def init_weights(m):
    if type(m) == nn.Linear:
        nn.init.xavier_uniform_(m.weight)
    if type(m) == nn.LSTM:
        for param in m._flat_weights_names:
            if "weight" in param:
                nn.init.xavier_uniform_(m._parameters[param])

net.apply(init_weights);

lr, num_epochs = 0.01, 5
#params = filter(lambda p: p.requires_grad, net.parameters())
trainer = torch.optim.Adam(net.parameters(), lr=lr)
loss = nn.CrossEntropyLoss(reduction="none")
train(net, train_iter, test_iter, loss, trainer, num_epochs,
      devices)

loss 0.276, train acc 0.884, test acc 0.839

505.9 examples/sec on [device(type='cuda', index=0)]
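The accuracy figures above come from counting how often the highest-scoring class equals the label. The following pure-Python sketch shows that counting step on three hand-written score rows; the function name `count_correct` and the numbers are illustrative only.

```python
def count_correct(logits, labels):
    """Count the rows whose highest-scoring class equals the label."""
    correct = 0
    for row, label in zip(logits, labels):
        predicted = max(range(len(row)), key=lambda j: row[j])  # argmax
        correct += int(predicted == label)
    return correct


# Two classes: index 0 = negative, index 1 = positive
logits = [[2.0, 0.5], [0.1, 1.2], [0.3, 0.9]]
labels = [0, 1, 0]

correct = count_correct(logits, labels)
print(correct, correct / len(labels))
```

Summing these counts over all batches and dividing by the number of examples gives the reported train and test accuracy.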

4. Training a one-dimensional convolutional neural network model

Let's first look at how one-dimensional convolution works. It is a special case of two-dimensional convolution, based on the cross-correlation operation: the kernel slides along the sequence, and at each position the overlapping elements are multiplied and summed.
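As a minimal sketch of the operation, the following pure-Python function computes a one-dimensional cross-correlation over plain lists. The name `corr1d` and the sample input and kernel are chosen for illustration.

```python
def corr1d(x, k):
    """1D cross-correlation: slide kernel k over x, multiply and sum."""
    width = len(k)
    return [sum(x[i + j] * k[j] for j in range(width))
            for i in range(len(x) - width + 1)]


result = corr1d([0, 1, 2, 3, 4, 5, 6], [1, 2])
print(result)  # [2, 5, 8, 11, 14, 17]
```

An input of length 7 and a kernel of width 2 yield 7 - 2 + 1 = 6 outputs; the first is 0 * 1 + 1 * 2 = 2.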

Build the one-dimensional convolutional model (TextCNN).

class TextCNN(nn.Module):
    def __init__(self, vocab_size, embed_size, kernel_sizes, num_channels, embedding_matrix,
                 **kwargs):
        super(TextCNN, self).__init__(**kwargs)
        # This embedding layer is fine-tuned during training
        self.embedding = nn.Embedding.from_pretrained(torch.tensor(embedding_matrix, dtype=torch.float), freeze=False)
        # This embedding layer is not trained (from_pretrained freezes the weights by default)
        self.constant_embedding = nn.Embedding.from_pretrained(torch.tensor(embedding_matrix, dtype=torch.float))
        # self.embedding = nn.Embedding(vocab_size, embed_size)
        # self.constant_embedding = nn.Embedding(vocab_size, embed_size)
        self.dropout = nn.Dropout(0.5)
        self.decoder = nn.Linear(sum(num_channels), 2)
        # Pooling layer: reduces an input tensor of shape (B, C, L) to (B, C, 1).
        # Note that AdaptiveAvgPool1d averages over time rather than taking the maximum
        self.pool = nn.AdaptiveAvgPool1d(1)
        self.relu = nn.ReLU()
        # Create multiple one-dimensional convolution layers
        self.convs = nn.ModuleList()
        for c, k in zip(num_channels, kernel_sizes):
            self.convs.append(nn.Conv1d(2 * embed_size, c, k))

    def forward(self, inputs):
        # Concatenate the two embedding layers along the vector dimension.
        # Each embedding output has shape (batch size, number of tokens, word vector dimension)
        embeddings = torch.cat((
            self.embedding(inputs), self.constant_embedding(inputs)), dim=2)
        #print(embeddings.shape)
        # Conv1d expects the channels in the second dimension, so rearrange the tensor
        embeddings = embeddings.permute(0, 2, 1)
        # Each convolution output is pooled over time, giving shape (batch size, number of channels, 1).
        # Drop the last dimension and concatenate along the channel dimension.
        # The three Conv1d layers are applied separately and their results are joined
        encoding = torch.cat([torch.squeeze(self.relu(self.pool(conv(embeddings))), dim=-1) for conv in self.convs], dim=1)
        #print(encoding.shape)
        outputs = self.decoder(self.dropout(encoding))
        return outputs
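The decoder's input width can be worked out from the shapes alone. The sketch below does the arithmetic for sequences of 500 tokens with kernel sizes 3, 4 and 5 and 100 channels each; the helper name `textcnn_shapes` is hypothetical, and it assumes `nn.Conv1d` with its default stride of 1 and no padding.

```python
def textcnn_shapes(num_steps, kernel_sizes, num_channels):
    """Return the per-kernel conv output lengths and the final encoding width."""
    # Without padding and with stride 1, a kernel of width k gives num_steps - k + 1 outputs
    conv_lengths = [num_steps - k + 1 for k in kernel_sizes]
    # Pooling over time collapses each length to 1, so only the channels remain
    encoding_width = sum(num_channels)
    return conv_lengths, encoding_width


conv_lengths, encoding_width = textcnn_shapes(500, [3, 4, 5], [100, 100, 100])
print(conv_lengths)    # [498, 497, 496]
print(encoding_width)  # 300
```

Because the pooling removes the time dimension, kernels of different widths can be concatenated even though their convolution outputs have different lengths, which is why the decoder is `nn.Linear(sum(num_channels), 2)`.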

Define the hyperparameters and train the model.

embed_size, kernel_sizes, nums_channels = 100, [3, 4, 5], [100, 100, 100]
devices = d2l.try_all_gpus()
net = TextCNN(len(vocab), embed_size, kernel_sizes, nums_channels, embeds)

def init_weights(m):
    if type(m) in (nn.Linear, nn.Conv1d):
        nn.init.xavier_uniform_(m.weight)

net.apply(init_weights);

#net.embedding.weight.data.copy_(embeds)
#net.constant_embedding.weight.data.copy_(embeds)
#net.constant_embedding.weight.requires_grad = False

lr, num_epochs = 0.001, 5
# Optimize only the parameters that require gradients (the frozen embedding is skipped)
params = filter(lambda p: p.requires_grad, net.parameters())
trainer = torch.optim.Adam(params, lr=lr)
loss = nn.CrossEntropyLoss(reduction="none")
# Optional learning-rate schedule
#scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(trainer, 10)
train(net, train_iter, test_iter, loss, trainer, num_epochs,
      devices)

loss 0.127, train acc 0.954, test acc 0.875

1072.4 examples/sec on [device(type='cuda', index=0)]

边栏推荐

- 源码编译!!

- Chapter7 循环神经网络-1

- Design and implementation of position recommendation system based on Knowledge Map

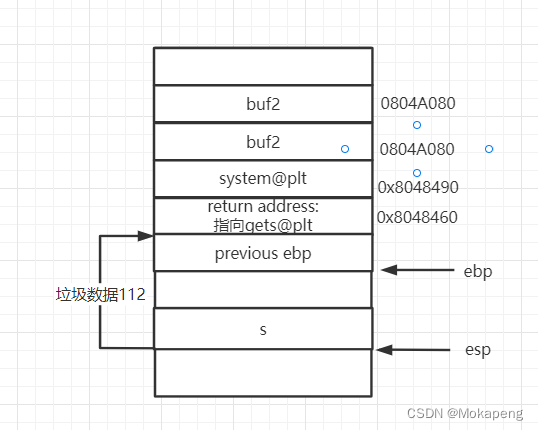

- PWN —— ret2libc1

- Logical volume management

- LC: Sword finger offer 03. repeated numbers in the array

- SQL入门学习--基本增删改查以及练习

- [强网杯 2019]随便注

- Introduction to programming 3 - seeking the maximum value

- 30出头成为复旦博导,陈思明:敲代码和写诗,我两样都要

猜你喜欢

随机推荐

初学者备战蓝桥杯历程(大学编程学习历程记录,题目思路献给需要备考蓝桥杯的同学)

Recommended system infrastructure and project introduction

Pytorch实现文本情感分析

Use of vim editor

Chapter7 循环神经网络-1

最大公约数和最小公倍数

Using "hifolw" to quickly create the information generation of College Students' return list

栈溢出基础练习题——6(字符串漏洞64位下)

栈溢出基础练习题——4(写有64和32位两种攻击方式)

LC:剑指 Offer 05. 替换空格

Zstuacm summer camp flag bearer

蓝桥杯31天冲刺之二十一day(C语言)

Transformer

3步就能制作漫画头像的机器人,想拥有一个吗?

CSDN has accompanied me for four years of undergraduate life, and I have begun to record it well

Enter two strings STR1 and STR2, and count the number of times that the string STR2 appears in STR1.

源码编译!!

C51 single chip microcomputer digital (display hours, minutes and seconds)

zy:修改主机名

STM32 learning - DHT11 temperature and humidity sensor sampling drive and reporting in cjson format

![[强网杯 2019]随便注](/img/a4/4c7f647f2dc8e535699e8e5fa25685.png)