
Sub-Category Optimization for Multi-View Multi-Pose Object Detection

2022-06-21 05:57:00 【Wanderer001】

Reference: Sub-Category Optimization for Multi-View Multi-Pose Object Detection (Tencent Cloud + Community)

Abstract

Detecting object classes with large appearance variations is a fundamental problem in computer vision. The appearance of an object category can change because of intra-class variability, viewpoint, and illumination. Object categories with large appearance variations call for sub-category-based approaches. This paper proposes a subcategory optimization method that automatically divides an object category into an appropriate number of subcategories based on its appearance variations. We do not use predefined intra-category subcategories based on domain knowledge or validation datasets. Instead, we partition the sample space by unsupervised clustering of discriminative image features. The clustering performance is then verified by subclass discriminant analysis. The optimal number of subcategories for each object category is determined from two sources: the clustering performance of the unsupervised method and the result of the subclass discriminant analysis. Extensive experimental results are reported on two standard databases and on the authors' own database. The comparison shows that our method outperforms state-of-the-art methods.

1、 Introduction

Classifying, detecting, and clustering generic object categories with large appearance variations are challenging tasks in computer vision. The image appearance of an object category changes with many factors. These include large intra-category variation (different textures and colors), different lighting conditions, orientation/viewing direction, and pose. For object categories with small intra-class variation, the cascade-structured classifier proposed by Viola and Jones is an effective solution. More diverse patterns with large appearance changes, such as multi-view cars, cows, and dogs, need a more powerful classifier model. One basic principle behind such classifiers is the divide-and-conquer approach used in [2] and [3]. In that approach, a category that cannot be modeled as a whole is divided into several subcategories, and the system learns a model for each of them. However, when appearance varies because of many factors, it is difficult to find a single dominant attribute by which to divide the samples. Manually assigning subcategory labels to training samples is difficult and time-consuming. Moreover, a subcategorization based on domain knowledge may not be optimal for the classification task.

This paper defines some simple criteria for automatically determining the optimal number of subcategories of an object category. Specifically, we propose two criteria. The first criterion does not use predefined subcategories based on domain knowledge. Instead, it partitions the sample space with an unsupervised clustering algorithm based on discriminative image features. To this end, we use the probabilistic latent semantic analysis (pLSA) model [4] to estimate object-specific cluster probabilities. Such topic models have recently been successful in object class recognition [5], [6], which has drawn considerable interest in their use for topic discovery and subcategorization. In particular, Fritz et al. [5] proposed a representation that uses a topic model to decompose, discover, and detect visual object categories. However, their approach fixes the total number of subcategories during learning, so that number is never optimized on the training dataset.

In the second criterion, the number of clusters in each category is taken as its number of subcategories. We then use the subclass discriminant analysis (SDA) technique [7] to analyze the discriminative power of that number of subcategories. The real challenge, and our goal, is to find the optimal number of subcategories for each object class. We therefore use SDA to find the number of subcategories that optimizes discriminative power. Zhu et al., by contrast, optimize classification performance on a cross-validation dataset using the same number of subcategories for every class.

2、 Subcategory optimization

In this section, we describe our subcategory optimization method, which combines clustering performance analysis with subclass discriminant analysis. Starting from the images, we first present our data representation. We then describe how the topic model is applied to this representation to generate clusters for each object category. Finally, we analyze discriminative power, taking the generated clusters as subclasses and the mixing coefficients as discriminative features.

A、 Data representation

To build the visual vocabulary of the pLSA model, we detect and describe interest points in all training images. Combining interest point detectors with invariant local descriptors has proven effective for describing images and objects. In this study, we use the Canny edge detector to construct an edge map of each image. Local interest points on the edge map are then detected in two stages. In the first stage, we use the technique proposed by He and Yung, which relies on the local and global curvature properties of edges, to reliably detect corners in the edge map. All detected corners are taken as a set of keypoints. In the second stage, the remaining keypoints are selected by uniformly sampling the object edges. Each keypoint is described by a 128-dimensional SIFT descriptor computed on a circular patch of radius r = 10. The SIFT descriptors are vector-quantized with the k-means clustering algorithm to form the visual vocabulary. In the pLSA formulation, we compute a co-occurrence table in which each object is represented by the collection of visual words it contains from the visual vocabulary.
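The vocabulary-building and co-occurrence steps above can be sketched in Python with NumPy. This minimal illustration is not the authors' code. It makes three assumptions: the 128-dimensional SIFT descriptors have already been extracted, the vocabulary is built with plain Lloyd's k-means using a hypothetical fixed iteration count, and the co-occurrence table is a count matrix n(d, w) with one row per image.

```python
import numpy as np

def build_vocabulary(descriptors, k, n_iter=20, seed=0):
    """Plain k-means (Lloyd's algorithm); returns (k, dim) visual-word centroids."""
    rng = np.random.default_rng(seed)
    centers = descriptors[rng.choice(len(descriptors), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # squared Euclidean distance from every descriptor to every centroid
        d2 = ((descriptors[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = descriptors[labels == j]
            if len(members):  # keep the previous centroid if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers

def quantize(descriptors, centers):
    """Map each descriptor to the index of its nearest visual word."""
    d2 = ((descriptors[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

def cooccurrence_table(images, centers):
    """n(d, w): how often visual word w occurs in image d (one row per image)."""
    table = np.zeros((len(images), len(centers)), dtype=int)
    for i, desc in enumerate(images):
        table[i] = np.bincount(quantize(desc, centers), minlength=len(centers))
    return table
```

Each row of the table sums to the number of keypoints in that image. This count matrix is the input the pLSA model factorizes.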

B、 Clustering performance analysis

In the learning phase, the pLSA model associates each observation of a visual word $w_j$ in an object $d_i$ with a latent cluster variable $z_k$. It uses the maximum likelihood principle to determine $P(w_j \mid z_k)$ and $P(z_k \mid d_i)$, the two factors of the model

$$P(w_j \mid d_i) = \sum_{k=1}^{K} P(w_j \mid z_k)\, P(z_k \mid d_i).$$

For all training images, the mixing coefficients $P(z_k \mid d_i)$ can be regarded as object features and used for classification.

If $D_i$ denotes the set of objects of class $i$, then $P(z_k \mid D_i)$ denotes the probability of cluster $z_k$ given $D_i$. For the $i$-th object class,

$$P(z_k \mid D_i) = \frac{1}{N_i} \sum_{d \in D_i} P(z_k \mid d), \qquad k = 1, \dots, K,$$

where $N_i$ is the total number of objects of class $i$ and $K$ is the total number of clusters. For $C$ object classes ($i = 1, \dots, C$), the total cluster probability sum can be computed as

$$S = \sum_{i=1}^{C} \sum_{k \in L_i} P(z_k \mid D_i),$$

where $L_i$ is the list of clusters assigned to category $i$. To determine the optimal number of clusters, we compute the clustering criterion for every possible number of clusters $K$ and select the $K$ with the minimum value.

However, experimental evaluation shows that this criterion alone is not always stable for more complex object categories with large appearance variations. In the next section, we therefore add a more stable optimization criterion that is also derived from the clusters and mixing coefficients described above.
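The pLSA quantities used in this subsection can be sketched with NumPy. The sketch makes several assumptions and is not the authors' implementation. `plsa` uses Hofmann's standard EM updates on the count table n(d, w). `class_cluster_probs` averages the mixing coefficients over the objects of each class, as in the formula for $P(z_k \mid D_i)$. `assign_clusters` is a hypothetical rule for building the lists $L_i$, giving each cluster to the class with the largest $P(z_k \mid D_i)$, because the text does not state how clusters are assigned.

```python
import numpy as np

def plsa(n_dw, K, n_iter=100, seed=0):
    """Fit pLSA by EM on a (D, W) count table; returns P(w|z) as (K, W) and P(z|d) as (D, K)."""
    rng = np.random.default_rng(seed)
    D, W = n_dw.shape
    p_w_z = rng.random((K, W))
    p_w_z /= p_w_z.sum(axis=1, keepdims=True)
    p_z_d = rng.random((D, K))
    p_z_d /= p_z_d.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        # E-step: P(z|d,w) proportional to P(w|z) P(z|d), shape (D, W, K)
        joint = p_z_d[:, None, :] * p_w_z.T[None, :, :]
        post = joint / np.maximum(joint.sum(axis=2, keepdims=True), 1e-12)
        # M-step: re-estimate both factors from expected counts n(d,w) P(z|d,w)
        expected = n_dw[:, :, None] * post
        p_w_z = expected.sum(axis=0).T
        p_w_z /= np.maximum(p_w_z.sum(axis=1, keepdims=True), 1e-12)
        p_z_d = expected.sum(axis=1)
        p_z_d /= np.maximum(p_z_d.sum(axis=1, keepdims=True), 1e-12)
    return p_w_z, p_z_d

def class_cluster_probs(p_z_d, labels, n_classes):
    """P(z_k | D_i) = (1 / N_i) * sum over d in D_i of P(z_k | d); shape (C, K)."""
    return np.stack([p_z_d[labels == i].mean(axis=0) for i in range(n_classes)])

def assign_clusters(p_z_D):
    """Hypothetical rule for L_i: give cluster k to the class with the largest P(z_k | D_i)."""
    owner = p_z_D.argmax(axis=0)
    return {i: np.flatnonzero(owner == i).tolist() for i in range(p_z_D.shape[0])}

def cluster_probability_sum(p_z_D, L):
    """S = sum over classes i of sum over k in L_i of P(z_k | D_i)."""
    return sum(p_z_D[i, L[i]].sum() for i in L)
```

Under the hypothetical assignment rule, S equals the sum of the column maxima of the (C, K) matrix. A different rule for $L_i$ would change S.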

C、 Subclass discriminant analysis

Once the data distribution of each cluster has been modeled with a mixture of Gaussians, the discriminant vectors that best classify the data are easily found from the following generalized eigenvalue decomposition:

$$\Sigma_B V = \Sigma_W V \Lambda \qquad (3)$$

Here $\Sigma_W$ is the within-subclass scatter matrix and $\Sigma_B$ is the between-subclass scatter matrix; both are symmetric and positive semidefinite. $V$ is a matrix whose columns are the discriminant vectors, and $\Lambda$ is a diagonal matrix of the corresponding eigenvalues. The between-subclass scatter matrix is

$$\Sigma_B = \sum_{i=1}^{C-1} \sum_{j=1}^{K_i} \sum_{k=i+1}^{C} \sum_{l=1}^{K_k} p_{ij}\, p_{kl}\, (\mu_{ij} - \mu_{kl})(\mu_{ij} - \mu_{kl})^{T},$$

where $p_{ij}$ and $\mu_{ij}$ are the prior and mean of the $j$-th subclass of category $i$. $K_i$ is the number of subcategories in category $i$, and $K = \sum_{i=1}^{C} K_i$ is the total number of subcategories, which equals the total number of clusters generated by the pLSA model. We define the within-subclass scatter matrix as

$$\Sigma_W = \frac{1}{n} \sum_{i=1}^{C} \sum_{j=1}^{K_i} \sum_{k=1}^{n_{ij}} (x_{ijk} - \mu_{ij})(x_{ijk} - \mu_{ij})^{T},$$

where $x_{ijk}$ is the $k$-th sample (a mixing-coefficient vector) of subclass $j$ of category $i$, $n_{ij}$ is the number of samples in that subclass, and $n$ is the total number of samples.
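A NumPy sketch of the two scatter matrices and of solving (3) follows. It assumes subclass labels are already known (for example, the pLSA cluster each object falls into). It also adds a small ridge `eps` to $\Sigma_W$. That ridge is our assumption: mixing-coefficient vectors sum to 1, so $\Sigma_W$ built from them is singular. The generalized problem is reduced to a standard symmetric one through a Cholesky factor, which is a common technique. `scipy.linalg.eigh(a, b)` solves the same problem directly if SciPy is available.

```python
import numpy as np

def sda_scatter(X, cls, sub):
    """Between-subclass (Sigma_B) and within-subclass (Sigma_W) scatter matrices.

    X: (n, d) samples, e.g. pLSA mixing coefficients; cls: class index i; sub: subclass index j.
    """
    n, d = X.shape
    groups = {}
    for key in sorted(set(zip(cls.tolist(), sub.tolist()))):
        mask = (cls == key[0]) & (sub == key[1])
        groups[key] = (mask.sum() / n, X[mask].mean(axis=0), X[mask])  # prior p_ij, mean mu_ij, samples
    S_B, S_W = np.zeros((d, d)), np.zeros((d, d))
    keys = list(groups)
    for a, (i, j) in enumerate(keys):
        p_ij, mu_ij, X_ij = groups[(i, j)]
        diff = X_ij - mu_ij
        S_W += diff.T @ diff / n
        for (k, l) in keys[a + 1:]:
            if k <= i:
                continue  # only pair subclasses that belong to different classes (k > i)
            p_kl, mu_kl, _ = groups[(k, l)]
            m = (mu_ij - mu_kl)[:, None]
            S_B += p_ij * p_kl * (m @ m.T)
    return S_B, S_W

def discriminant_vectors(S_B, S_W, eps=1e-6):
    """Solve S_B v = lambda (S_W + eps I) v; eigenvalues descending, vectors as columns of V."""
    L = np.linalg.cholesky(S_W + eps * np.eye(len(S_W)))
    L_inv = np.linalg.inv(L)
    M = L_inv @ S_B @ L_inv.T  # equivalent standard symmetric problem M y = lambda y
    lam, Y = np.linalg.eigh(M)
    order = np.argsort(lam)[::-1]
    return lam[order], L_inv.T @ Y[:, order]  # map back: v = L^{-T} y
```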

For subclass discriminant analysis, we must simultaneously maximize the measure given by $\Sigma_B$ and minimize the measure computed from $\Sigma_W$. In some cases, however, the two cannot be achieved together, and (3) may then lead to misclassification. Fortunately, this problem is easy to detect: when it occurs, the angle between the first eigenvectors of $\Sigma_B$ and $\Sigma_W$ is small. Formally, this can be computed as [9]

$$\mathcal{K} = \sum_{i=1}^{m} \sum_{j=1}^{i} \left(u_j^{T} w_i\right)^{2},$$

where $u_j$ and $w_i$ are the eigenvectors of $\Sigma_W$ and $\Sigma_B$ associated with the $j$-th and $i$-th largest eigenvalues, respectively, and $m < \operatorname{rank}(\Sigma_B)$. We want $\mathcal{K}$ to be as small as possible. We compute a normalized value of this measure for every possible value of $K$.
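The conflict measure $\mathcal{K}$ can be computed directly from the two scatter matrices, as in the NumPy sketch below. The text does not give the normalization, so `normalized_conflict` uses a hypothetical one. Each inner sum $\sum_{j \le i} (u_j^T w_i)^2$ is at most 1 because the $u_j$ are orthonormal, so dividing by $m$ maps the measure into $[0, 1]$.

```python
import numpy as np

def conflict_measure(S_B, S_W, m):
    """Sum over i <= m and j <= i of (u_j^T w_i)^2, with u_j, w_i eigenvectors of S_W, S_B."""
    _, U = np.linalg.eigh(S_W)
    _, W = np.linalg.eigh(S_B)
    U, W = U[:, ::-1], W[:, ::-1]  # eigh sorts eigenvalues ascending; reverse to descending
    total = 0.0
    for i in range(m):
        for j in range(i + 1):
            total += float(U[:, j] @ W[:, i]) ** 2
    return total

def normalized_conflict(S_B, S_W, m):
    """Hypothetical normalization to [0, 1]: divide by the maximum possible value m."""
    return conflict_measure(S_B, S_W, m) / m
```

When the leading eigenvectors of both matrices coincide, the measure reaches its maximum m. This is the case the criterion is meant to flag.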

To obtain a stable criterion, we take each possible number of clusters $K$ generated by the pLSA model and compute the average of the two criteria: the clustering criterion of subsection B and the normalized SDA measure defined above. We repeat this process over a range of candidate values of $K$ whose extent is set by a parameter $r$, which the user can specify. We then select the $K$ that minimizes the average.
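The final selection step can be sketched as follows. The sketch assumes both criteria have already been evaluated for the same candidate values of K and stored as hypothetical dictionaries mapping K to a value on a comparable scale. The candidate range itself (controlled by r in the text) is left to the caller.

```python
def select_num_subcategories(cluster_crit, conflict_crit):
    """Return the K minimizing the mean of two per-K criteria, plus all averages.

    cluster_crit, conflict_crit: hypothetical dicts {K: criterion value}.
    """
    common = sorted(set(cluster_crit) & set(conflict_crit))
    averages = {K: (cluster_crit[K] + conflict_crit[K]) / 2 for K in common}
    best = min(averages, key=averages.get)
    return best, averages
```

For example, if the two criteria give 0.4 and 0.3 at K = 10 and larger values at the other candidates, K = 10 is selected.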

3、 Experimental setup

A、 Subclass optimization performance

To measure classification and optimization performance, we used the ETH-80 database, which contains 3,280 images of 8 categories. Each category contains 10 objects, each photographed from 41 viewpoints. In our experiments, 10 images of the 10 instances of each category were used as the test set, and the remaining images were used as the training set. Thus, each stage used 80 test images and 3,200 training images. Because each instance has 41 views, there are likewise 41 training/testing stages. At each training stage, we use our algorithm to determine the optimal number of subcategories for each object category. Fig. 1(a) marks with circles the optimization result for one of the training stages. In this figure, the y-axis shows the conflict measure, computed as the average of the two criteria. The optimum occurs at 53 subcategories. Table I lists the number of subcategories for each object category. Fig. 2 shows several typical images from 5 of the 8 optimal subcategories of the category car (rows 1 to 5).
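The split arithmetic can be checked with a short sketch. It assumes each of the 41 stages holds out one viewpoint of every instance (8 × 10 = 80 test images). This reading is consistent with the 41 stages described above, but the exact protocol is an assumption.

```python
from itertools import product

CATEGORIES, INSTANCES, VIEWS = 8, 10, 41  # ETH-80 layout described above

def leave_one_view_out(stage):
    """Assumed split: stage s tests on view s of every instance and trains on the rest."""
    test, train = [], []
    for c, i, v in product(range(CATEGORIES), range(INSTANCES), range(VIEWS)):
        (test if v == stage else train).append((c, i, v))
    return train, test
```

Under this assumption, every image serves as a test image in exactly one of the 41 stages.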

During classification, we first extract the visual words $w$ from an image $d$. Each visual word is assigned to the cluster $z$ with the highest word-specific probability $P(z \mid w)$. The object is then classified according to the cluster supported by the largest number of visual words. Fig. 1(b) shows the average classification results for the eight object categories. Without subcategories, the recognition accuracy is only 59.5%. After subcategory optimization, the best average classification accuracy is 84.75%. This result is comparable to that of Zhu et al. [7], who obtained a recognition accuracy of 82% with a feature-based approach. Our slightly better result may come from optimizing the subcategories of each category individually, rather than dividing every object category into the same number of subcategories as in [7].
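The voting rule above can be sketched as follows. The sketch obtains $P(z \mid w)$ by Bayes' rule from the pLSA factor $P(w \mid z)$ and a cluster prior `p_z`; the prior is an assumption, since the text does not say how it is estimated. `cluster_to_class` is a hypothetical mapping from clusters to object classes, for example one derived from the lists $L_i$.

```python
import numpy as np
from collections import Counter

def classify_by_word_votes(words, p_w_z, p_z, cluster_to_class):
    """Assign each visual word to its most probable cluster, then take the majority cluster.

    words: visual-word indices from one image; p_w_z: (K, W) P(w|z); p_z: (K,) cluster prior.
    """
    # P(z|w) is proportional to P(w|z) P(z); the argmax over z does not need the normalizer
    best_cluster = np.argmax(p_w_z[:, words] * p_z[:, None], axis=0)
    winner, _ = Counter(best_cluster.tolist()).most_common(1)[0]
    return winner, cluster_to_class[winner]
```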

B、 Performance on the ETHZ shape database

In this section, we compare the performance of our method with the methods of [10] and [5] on the ETHZ shape classes. The ETHZ shape classes contain a total of 255 images: apple logos (40), bottles (48), giraffes (87), mugs (48), and swans (32). For the comparison, we use the same parameter settings as described in [10]. Using the five-fold cross-validation proposed in [10], we obtained the results in Table II.

The optimization algorithm decomposes the 5 object categories into 11 subcategories. In the recognition stage, we extract a bag of visual words (BOVW) and, using the optimized model, generate promising hypotheses with our recent method [11]. The hypothesized locations are then verified by a support vector machine classifier that uses the following combined χ² kernel over specific features:

$$K(x, y) = \alpha\, K_{\chi^2}^{\mathrm{PHOG}}(x, y) + \beta\, K_{\chi^2}^{\mathrm{BOVW}}(x, y) \qquad (5)$$

The first term on the right-hand side of (5) measures the similarity between the pyramid histogram of oriented gradients (PHOG) feature sets at pyramid level $l$. The second term measures the similarity between the BOVW feature sets. $\alpha$ and $\beta$ are the weights of the PHOG and BOVW kernels. Our method performs better than the other two methods in every class except the apple logos. Our average detection and localization performance is 4.3% higher than that of Fritz et al. [5] and 12.3% higher than that of Ferrari et al. [10].
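A combined kernel of the form (5) can be sketched with NumPy. The exponential χ² kernel used here is one common choice for histogram features and is our assumption; the text does not specify the exact form. The weights `alpha` and `beta` and the bandwidth `gamma` are hypothetical defaults.

```python
import numpy as np

def chi2_distance(x, y, eps=1e-10):
    """0.5 * sum (x - y)^2 / (x + y); eps avoids 0/0 for bins that are empty in both."""
    return 0.5 * np.sum((x - y) ** 2 / (x + y + eps))

def chi2_kernel(x, y, gamma=1.0):
    """Exponential chi^2 kernel for histograms (assumed form)."""
    return np.exp(-gamma * chi2_distance(x, y))

def combined_kernel(phog_x, phog_y, bovw_x, bovw_y, alpha=0.5, beta=0.5):
    """alpha * K_PHOG + beta * K_BOVW, with hypothetical weights alpha and beta."""
    return alpha * chi2_kernel(phog_x, phog_y) + beta * chi2_kernel(bovw_x, bovw_y)
```

A non-negative weighted sum of valid kernels is itself a valid kernel. The Gram matrix built from `combined_kernel` could therefore be passed to an SVM that accepts precomputed kernels, such as scikit-learn's `SVC(kernel="precomputed")`.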

C、 Recognition performance on the authors' database

In this section, we present the detection and localization performance on our 10-class database. It consists of everyday objects relevant to our application (service robots), captured in different environments against cluttered, real-world backgrounds. Each image in the database contains multiple objects, with ground-truth bounding boxes created for them. In total there are 809 images containing 2,138 objects. Of these, 630 objects (340 images) are used for training and 1,508 objects (469 images) for testing. During training, the optimization yields 21 subcategories for the 10 object categories. Table III shows that, without subcategorization, the average detection and localization rate (DLR) of our system is 61% at 0.64 false positives per image (FPPI). With the optimal number of subcategories, the average DLR rises to 84.5% and the FPPI drops from 0.64 to 0.61. Fig. 3 shows some detection results of our method on images with cluttered backgrounds, partial occlusion, and significant scale and viewpoint changes.
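The two reported metrics can be computed as in the sketch below. The text does not state the matching rule, so the sketch assumes a PASCAL-style criterion: a detection counts as correct when its intersection-over-union with a not-yet-matched ground-truth box is at least 0.5. Detections are matched greedily in the order given, assumed to be sorted by confidence.

```python
def iou(a, b):
    """Intersection over union of boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def dlr_and_fppi(images, thresh=0.5):
    """images: list of (detections, ground_truths); returns (DLR, FPPI)."""
    hits = false_pos = total_gt = 0
    for dets, gts in images:
        total_gt += len(gts)
        matched = [False] * len(gts)
        for d in dets:
            best, best_iou = -1, thresh
            for g_idx, g in enumerate(gts):
                o = iou(d, g)
                if not matched[g_idx] and o >= best_iou:
                    best, best_iou = g_idx, o
            if best >= 0:
                matched[best] = True  # each ground-truth object can be detected only once
                hits += 1
            else:
                false_pos += 1
    return hits / total_gt, false_pos / len(images)
```

DLR is the fraction of ground-truth objects that are correctly detected and localized. FPPI divides the unmatched detections by the number of test images.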

4、 Conclusion

In this paper, we proposed a subcategory optimization method that divides an object category into an appropriate number of subcategories. Our method can also discriminate between different object categories, combining shape and appearance features with a specific χ² combined kernel. Experimental results show that the method can learn models from images labeled with bounding boxes. It can then detect and localize the boundaries of new class instances despite heavy clutter, scale and viewpoint changes, and intra-class variability.

边栏推荐

- Picture steganography: Method 1

- [UVM basics] three commonly used phase functions: build_ phase/connect_ phase/run_ phase

- 397 linked list (206. reverse linked list & 24. exchange nodes in the linked list in pairs & 19. delete the penultimate node of the linked list & interview question 02.07. link list intersection & 142

- Connection refused : no futher information : localhost/127.0.0.1:6379

- Hardware exploration -- Design and manufacture of digital clock

- Object. Assign() object merge and object Keys() get the object name

- Fluorite Cloud Application

- 关闭SQLite3中的journal暂存档

- js 中 async/await 的用法

- 一次Namenode的RPC延迟故障排查引发的深入思考

猜你喜欢

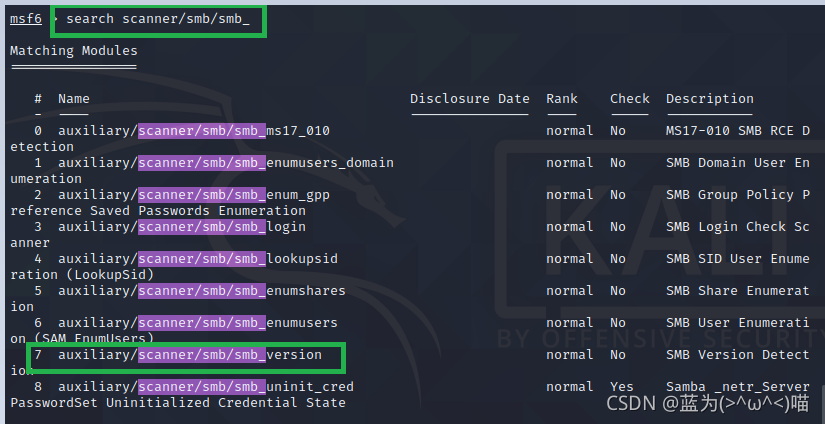

Metasploit intrusion win7

科创人·数智未来私董会第4期:转型的实证-幸存者偏差与盲人摸象

Kali shortcut keys and settings

![[open source tutorial] DIY tutorial of 2020 new version of brushless power regulation [Author: I love loli love loli] (brief introduction and circuit construction)](/img/cb/64fef3cd344ca0d0befe4b8dff11c7.jpg)

[open source tutorial] DIY tutorial of 2020 new version of brushless power regulation [Author: I love loli love loli] (brief introduction and circuit construction)

Implementation and analysis of transformer model

C语言课程设计(服装管理系统详解)

完善业务细节必填项的确定,根据返回的状态码回显错误信息时,回显的信息与预期不符

基于注意力的seq2seq模型

Excel列数字索引转字符索引

Librosa 𞓜 the most humorous explanation of Mel spectrum

随机推荐

Small program design scheme for appointment of beauty agencies

Picture steganography: Method 1

Things to think about before using mysqldump

In the lab, can the full link data governance be replicated? And I want to ask how the data source connection is handled. Can I directly connect to the local database

Research and Analysis on the current situation of LBS market in China and forecast report on its development prospect (2022)

代码生成器文件运行出错:The server time zone value ‘�й���ʱ��‘ is unrecognized or represents more than one time

NFT platform track dark horse takes advantage of the situation

实验室,全链路数据治理 不能复制吗,,以及想问数据源连接是如何处理的啊,可以直接连接当地的数据库吗

新零售品牌“三只松鼠”遇挫,摆脱困境的两大方法

Armcm3 authoritative guide notes - the impact of address misalignment in arm programming

Embedded programming complexity

Metasploit intrusion win7

397 linked list (206. reverse linked list & 24. exchange nodes in the linked list in pairs & 19. delete the penultimate node of the linked list & interview question 02.07. link list intersection & 142

应用在洗衣机触摸屏中的触摸芯片

Subtitle, key frame animation and sound processing in PR

Canvas制作经典贪吃蛇

397-链表(206.反转链表 & 24. 两两交换链表中的节点 & 19. 删除链表的倒数第 N 个结点 & 面试题 02.07. 链表相交 & 142.环形链表II)

Memorizing Normality to Detect Anomaly: Memory-augmented Deep Autoencoder for Unsupervised Anomaly D

应用在电视触摸屏中的十四通道智能触摸芯片

simple_js 攻防世界