当前位置:网站首页>Cvpr2022 𞓜 thin domain adaptation

Cvpr2022 𞓜 thin domain adaptation

2022-06-24 14:33:00 【New words of science and technology】

background

Deep neural network is usually used for offline image acquisition ( Marked source data ) Training , And then embedded into the edge device , To test the images taken from the new scene ( Unlabeled target data ). In practice , This mode reduces network performance due to domain transfer . In recent years , More and more researchers are adapting to unsupervised fields (UDA) In depth research , To solve this problem .

Vanilla UDA It aims to align source data and target data into a joint representation space , So that the model trained according to the source data can be well extended to the target data . however , There is still a gap between academic research and industrial demand : Most of the existing UDA Methods only the fixed neural structure was used for weight adaptation , But it can not effectively meet the requirements of various devices in real world applications .

In an effort to 1 The widely used application scenario shown in , under these circumstances , Ordinary UDA The method must repeatedly train a series of models with different capacities and architectures , To meet the needs of equipment with different calculation budgets , It's expensive and time consuming .

In order to solve the above problems , The author puts forward Slimmable Domain Adaption(SlimDA), That is, the model is trained only once , In this way, you can flexibly extract customized models with different capacities and architectures from them , To meet the needs of equipment with different calculation budgets .

chart 1 SlimDA

When the thin neural network satisfies the unsupervised domain adaptation , Two challenges remain :

1) Weight adaptation : How to improve the adaptive performance of all models in the model base at the same time .

2) Architecture adaptation : Given a specific calculation budget , How to search for an appropriate model on unlabeled target data .

For the first challenge , Random integrated distillation is proposed (SEED) To interact with models in the model library , In order to suppress the uncertainty of unlabeled target data adaptively in the model . surface 1 Shows SEED And traditional knowledge distillation .

surface 1 Distillation of traditional knowledge (CKD) With random integrated distillation (SEED)

For the second challenge , The author puts forward an unsupervised performance evaluation index , It can alleviate the output difference between the candidate model and the anchor model . The smaller the measure , Assume better performance .

contribution

1. Put forward SlimDA, One “ Once and for all ” Framework , To jointly adapt to the adaptive performance and calculation budget of equipment with limited resources .

2. Put forward SEED, It can improve the adaptability of all models in the model base at the same time .

3. An optimal separation of three classifiers is designed to adjust the optimization between intra model adaptation and inter model interaction .

4. An unsupervised performance evaluation index is proposed , To facilitate architectural adaptation .

Related methods

1. Unsupervised domain adaptation (UDA)

The existing UDA The method aims to improve the performance of the model on the unlabeled target domain . In the last few years , The difference based method and antagonism optimization method are proposed , This problem is solved by field alignment .SymNet A dual classifier architecture is developed , To promote category level domain confusion . lately ,Li Others are trying to learn the best architecture , To further improve the performance of the target domain , This proves that the network architecture is right UDA Importance . these UDA The approach focuses on implementing specific models with better performance on the target domain .

2. Neural architecture search (NAS)

NAS The method aims at reinforcement learning 、 Evolutionary methods 、 Gradient based methods, etc. automatically search for the optimal architecture . lately , The one-time method is very popular , Because you only need to train a super network , At the same time, multiple weight sharing subnetworks of various architectures are optimized . such , You can search for the optimal network structure from the model base . In this paper , The author emphasizes UDA about NAS It is an unnoticed but significant scene , Because they can work together unsupervised to optimize lightweight, scenario specific architectures .

3. Cross domain network compression

Chen Et al. Proposed a cross domain unstructured pruning method .Y u And so on MMD To minimize domain differences , And prune the filter in the Taylor based strategy ,Yang Et al. Focused on compressed graph neural networks .Feng Et al. Conducted antagonistic training between the channel pruning network and the full-size network . However , There is still much room for improvement in the performance of the existing methods . Besides , Their methods are not flexible enough , Many optimal models cannot be obtained under different resource constraints .

Method

1. SlimDA frame

It has been proved in the reducible neural network , With different widths ( That is, the layer channel ) Many networks can be coupled to the weight sharing model base , And optimize at the same time . Start with a baseline , In this baseline ,SymNet Directly merge with the slender neural network .

For the sake of simplicity ,SymNet The overall goal of the project is Ldc. In each training iteration , From the model library {(Fj,Csj,Ctj)}mj=1 Several models of random sampling in ∈(F,Cs,Ct), Named model batch , among m Represents the model batch size . here (F、Cs、Ct) Can be regarded as the largest model , Other models can be sampled through weight sharing .

To ensure that the model base is adequately trained , The largest and smallest models shall be sampled in each training iteration , And make it part of the model batch .

This baseline can be regarded as Eqn The two alternate processes of . To encourage interaction between models in the above baseline , The author puts forward SlimDA frame , Pictured 2 Shown . The framework consists of randomly integrated distillation (SEED) And optimize the separation of three classifiers (OSTC) Design composition .

SEED The aim is to use the complementary knowledge in the model base for multi model interaction .Cs and Ct The red arrow on the classifier indicates domain confusion training Ldc And knowledge aggregation in the model base .Ca The purple arrow on the classifier indicates seed optimization Lseed.

chart 2 SlimDA frame

2. Random integrated distillation (SEED)

SEED The aim is to use the complementary knowledge in the model base for multi model interaction . Different models in the model base can intuitively learn supplementary knowledge about unlabeled target data . Inspired by Bayesian learning with model perturbations , The author uses the models in the model base to suppress the uncertainty of unlabeled target data through Monte Carlo sampling .

Model confidence definition :

Sharpen the function to induce implicit entropy minimization during seed training :

3. Optimize the separation of three classifiers (OSTC)

The first two are used for domain obfuscation training , The last one is used to receive the knowledge of random polymerization for distillation . The distillation loss formula is as follows :

4. Unsupervised performance evaluation indicators

Unsupervised performance measures (UPEM):

边栏推荐

- 在CVS中恢复到早期版本

- 15 differences between MES in process and discrete manufacturing enterprises (Part 2)

- 测试 H5 和小程序的区别,你真的知道吗?

- R language constructs regression model diagnosis (normality is invalid), performs variable transformation, and uses powertransform function in car package to perform box Cox transform to normality on

- Detailed explanation of redis data types

- Explore cloud native databases and take a broad view of future technological development

- ES mapping之keyword;term查询添加keyword查询;更改mapping keyword类型

- GO语言并发模型-MPG模型

- ESP32系列--ESP32各个系列对比

- June training (day 23) - dictionary tree

猜你喜欢

v-if 和 v-show 的区别

box-sizing

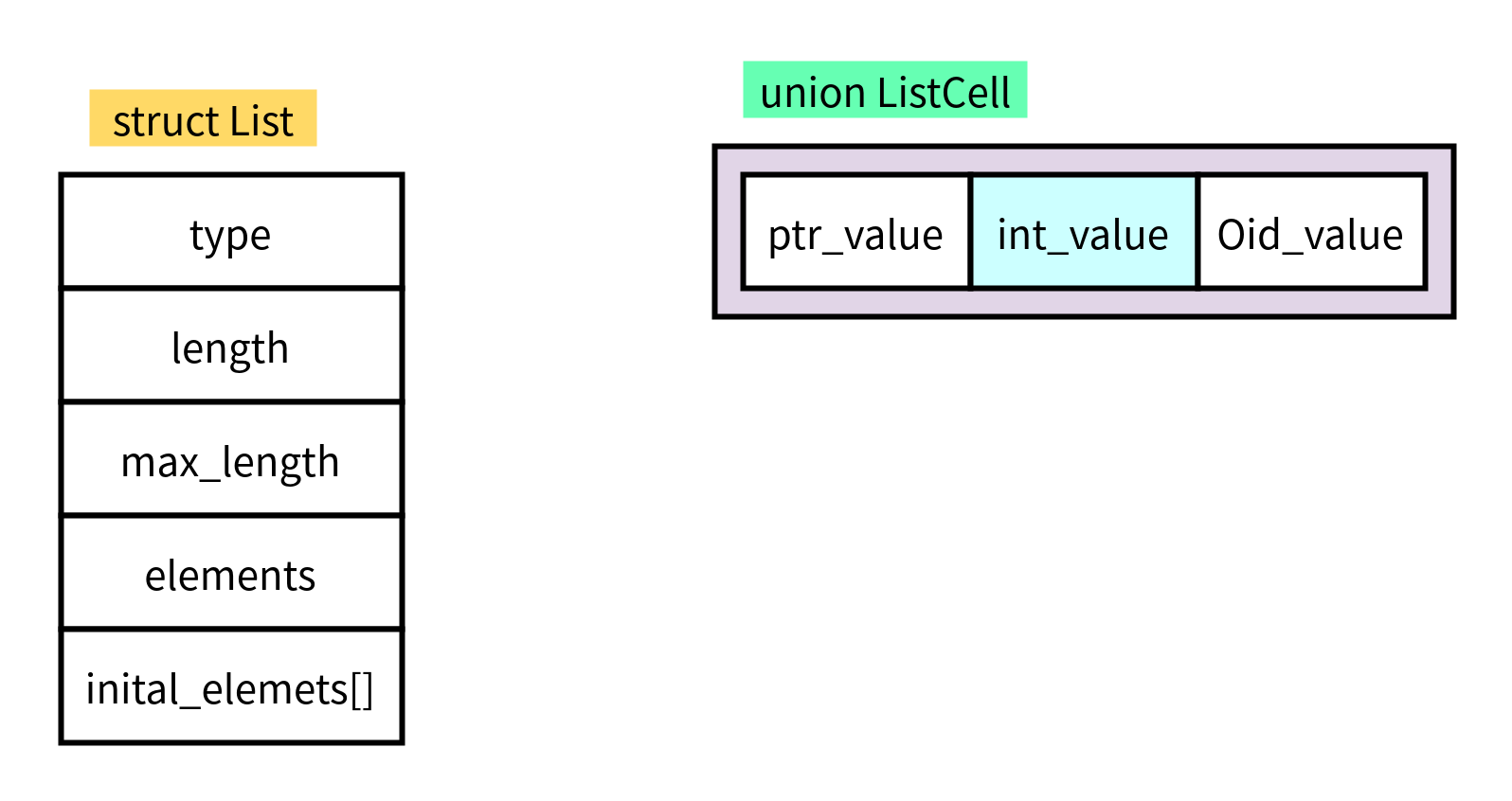

List of PostgreSQL

How to avoid placing duplicate orders

Defeat the binary tree!

In the eyes of the universe, how to correctly care about counting East and West?

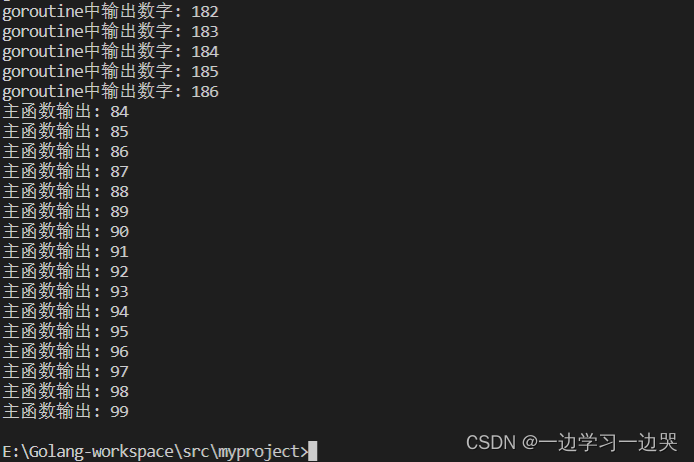

GO语言-goroutine协程的使用

Explore cloud native databases and take a broad view of future technological development

Linux 安装 CenOS7 MySQL - 8.0.26

Common sense knowledge points

随机推荐

leetcode 139. Word break word split (medium)

A common defect management tool - Zen, which teaches you from installation to using the handle

R language plot visualization: use plot to visualize the training set and test set after data division, use different shape label representation, training set, test set, and display training and test

ES mapping之keyword;term查詢添加keyword查詢;更改mapping keyword類型

ESP32系列--ESP32各个系列对比

Virtual machines on the same distributed port group but different hosts cannot communicate with each other

Overview of SAP marketing cloud functions (IV)

10_ Those high-profile personal signatures

从pair到unordered_map,理论+leetcode题目实战

时间同步业务的闭环管理——时间监测

六月集训(第23天) —— 字典树

How to avoid placing duplicate orders

【ansible问题处理】远程执行用户环境变量加载问题

【比特熊故事汇】6月MVP英雄故事|技术实践碰撞境界思维

手机注册股票开户 炒股开户安全吗

业务与技术双向结合构建银行数据安全管理体系

ssh-keygen 配置无需每次输入密码

update+catroot+c000021a+critical service failed+drivers+intelide+viaide+000000f

`Thymeleaf`模板引擎全面解析

From pair to unordered_ Map, theory +leetcode topic practice