Data storage - interview questions

2022-07-03 23:59:00 【Pet Nannan's pig】

1. Describe the HDFS read and write flows

HDFS write process:

- The client sends an upload request and establishes communication with the NameNode over RPC. The NameNode checks whether the user has upload permission and whether a file with the same name already exists in the target HDFS directory. If either check fails, it returns an error immediately; if both pass, it tells the client that the upload may proceed

- The client splits the file by size, 128 MB per block by default. Once splitting is done, it asks the NameNode which servers the first block should be uploaded to

- On receiving the request, the NameNode allocates storage according to the network topology, rack awareness, and the replication policy, and returns the addresses of the available DataNodes

- After receiving the addresses, the client contacts one node in the list, say A. This is essentially an RPC call that sets up the pipeline: A, on receiving the request, calls B, and B calls C. Once the whole pipeline is established, the confirmation is passed back step by step to the client

- The client starts sending the first block to A (the data is first read from disk into a local memory buffer), in units of packets (64 KB each). Each packet A receives is forwarded to B, and B forwards it to C. After A passes on each packet, the packet is placed in an ack queue to await a reply

- The data is split into packets that are sent in sequence along the pipeline. In the reverse direction of the pipeline, acks (acknowledgements of correct receipt) are sent back one by one, and finally the first DataNode in the pipeline, A, sends the pipeline ack to the client

- When one block has been transferred, the client asks the NameNode again in order to upload the second block, and the NameNode selects three DataNodes for the client again
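The write steps above can be sketched as a small simulation. This is a toy model, not Hadoop client code: the function names `split_into_blocks`, `packets_in_block`, and `send_block` are hypothetical, the node names A, B, C follow the text, and packets are handled one at a time, whereas a real pipeline keeps many packets in flight.

```python
import math
from collections import deque

BLOCK_SIZE = 128 * 1024 * 1024   # default block size from the text (128 MB)
PACKET_SIZE = 64 * 1024          # packet size from the text (64 KB)


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the byte length of each block for a file of file_size bytes."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def packets_in_block(block_len, packet_size=PACKET_SIZE):
    """Number of packets needed to ship one block down the pipeline."""
    return math.ceil(block_len / packet_size)


def send_block(block_len, pipeline, packet_size=PACKET_SIZE):
    """Toy model of one block travelling through the pipeline.

    Forward direction: each packet is stored on every node in order.
    Reverse direction: acks are collected from the last node back to the
    first; once every node has acked, the packet leaves the ack queue.
    Returns (packets stored per node, packets still awaiting an ack).
    """
    stored = {node: [] for node in pipeline}
    ack_queue = deque()
    for seq in range(packets_in_block(block_len, packet_size)):
        ack_queue.append(seq)                      # wait for a reply
        for node in pipeline:                      # forward: A -> B -> C
            stored[node].append(seq)
        acks = [node for node in reversed(pipeline)    # reverse: C -> B -> A
                if stored[node][-1] == seq]
        if len(acks) == len(pipeline):             # pipeline ack reaches client
            ack_queue.popleft()
    return stored, list(ack_queue)


if __name__ == "__main__":
    mib = 1024 * 1024
    print([size // mib for size in split_into_blocks(300 * mib)])  # [128, 128, 44]
    stored, pending = send_block(200 * 1024, ["A", "B", "C"])
    print(len(stored["C"]), pending)
```

A 300 MB file therefore becomes two full blocks plus one 44 MB block, and the client would go back to the NameNode once per block to get a fresh list of DataNodes.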

HDFS read process:

- The client sends an RPC request to the NameNode asking for the locations of the file's blocks

- On receiving the request, the NameNode checks the user's permissions and whether the file exists. If both checks pass, it returns part or all of the block list as appropriate. For each block, the NameNode returns the addresses of the DataNodes that hold a replica of it. These DataNode addresses are sorted by the distance between each DataNode and the client in the cluster topology, following two rules: the DataNode closest to the client in the network topology comes first, and a DataNode whose heartbeat has timed out and whose status is STALE is placed last

- The client picks the top-ranked DataNode to read the block from. If the client is itself a DataNode, it reads the data directly from local storage (the short-circuit read feature)

- Under the hood, the client opens a socket stream (FSDataInputStream) and repeatedly calls the read method of its parent class DataInputStream until the data in the block has been read

- After the blocks in the list have been read, if the file is not yet finished, the client asks the NameNode for the next batch of the block list

- Checksum verification is performed after each block is read. If an error occurs while reading from a DataNode, the client notifies the NameNode and continues reading from the next DataNode that holds a replica of the block

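- Within a single input stream, read fetches blocks one after another in file order; parallelism comes from separate clients or tasks reading different blocks at the same time. The NameNode only returns the addresses of the DataNodes that hold the requested blocks; it does not return the block data itself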

- Finally, all the blocks that were read are combined into the complete file
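The two sorting rules in the read process can be illustrated with a short sketch. The `Replica` class and `order_replicas` function are hypothetical names introduced here, and the distance values are illustrative only; this is not the NameNode's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    datanode: str
    distance: int        # topology distance to the client; 0 means same host
    stale: bool = False  # True if the DataNode's heartbeat has timed out


def order_replicas(replicas):
    """Sort replicas the way the text describes.

    Stale DataNodes go last; among the rest, the closest one comes first.
    The sort key is a tuple, and False orders before True.
    """
    return sorted(replicas, key=lambda r: (r.stale, r.distance))


if __name__ == "__main__":
    replicas = [
        Replica("dn3", distance=4),
        Replica("dn1", distance=0, stale=True),
        Replica("dn2", distance=2),
    ]
    print([r.datanode for r in order_replicas(replicas)])  # ['dn2', 'dn3', 'dn1']
```

Note that dn1 is the closest node but still ends up last because it is stale, so the client would try dn2 first.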

2. What happens if a block turns out to be corrupted while HDFS is reading a file?

After the client finishes reading a block from a DataNode, it runs checksum verification, comparing the data it read with the checksum recorded for the original block in HDFS. If the results do not match, the client notifies the NameNode and continues reading from the next DataNode that holds a replica of the block.
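A minimal sketch of this verify-then-fail-over behaviour follows. It makes several assumptions: `chunk_checksums` and `read_block` are hypothetical helpers, checksums are computed per 512-byte chunk, and Python's `zlib.crc32` stands in for whatever checksum algorithm the cluster is configured with. The expected checksums are simply passed in as an argument here.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # assumed chunk size for this sketch


def chunk_checksums(data, chunk=BYTES_PER_CHECKSUM):
    """Return one CRC32 value per chunk of the block."""
    return [zlib.crc32(data[i:i + chunk]) for i in range(0, len(data), chunk)]


def read_block(replicas, expected):
    """Read a block from the first replica whose checksums match.

    replicas: list of (datanode_name, block_bytes) in preferred order.
    expected: checksums recorded when the block was written.
    Returns (data, corrupt_datanodes); the second item lists the DataNodes
    whose copy failed verification and would be reported to the NameNode.
    """
    corrupt = []
    for name, data in replicas:
        if chunk_checksums(data) == expected:
            return data, corrupt
        corrupt.append(name)  # mismatch: fall through to the next replica
    raise IOError("no replica passed checksum verification")


if __name__ == "__main__":
    good = bytes(range(256)) * 5          # 1280 bytes, i.e. 3 chunks
    expected = chunk_checksums(good)
    damaged = bytearray(good)
    damaged[700] ^= 0xFF                  # flip one byte in the second chunk
    data, corrupt = read_block([("dn1", bytes(damaged)), ("dn2", good)], expected)
    print(data == good, corrupt)          # True ['dn1']
```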

3. What happens if a DataNode suddenly goes down while a file is being uploaded to HDFS?

When a client uploads a file, it establishes a pipeline with the DataNodes. In the forward direction of the pipeline, the client sends packets to the DataNodes; in the reverse direction, the DataNodes send ack confirmations to the client, that is, replies confirming that a packet was received correctly. If a DataNode suddenly goes down, the client no longer receives acks from it and notifies the NameNode. The NameNode finds that the block's replica count no longer meets the configured requirement, instructs DataNodes to copy the block, and takes the failed DataNode offline so that it no longer takes part in file uploads and downloads.
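The NameNode side of this recovery can be sketched as bookkeeping over a block-to-DataNode map. Everything here is a hypothetical illustration: `handle_datanode_failure`, the block IDs, and the node names are invented, and the copy target is just the first live node without a replica, whereas real placement also considers racks.

```python
REPLICATION = 3  # assumed replication factor for this sketch


def handle_datanode_failure(block_map, live_nodes, dead_node,
                            replication=REPLICATION):
    """Take dead_node offline and plan copies for under-replicated blocks.

    block_map: dict of block_id -> set of DataNode names (updated in place).
    Returns (remaining live nodes, list of (block_id, source, target) tasks).
    """
    live = [n for n in live_nodes if n != dead_node]   # node goes offline
    tasks = []
    for block_id, holders in block_map.items():
        holders.discard(dead_node)
        if not holders:
            continue                                   # no surviving replica
        source = sorted(holders)[0]                    # any surviving replica
        candidates = [n for n in live if n not in holders]
        while len(holders) < replication and candidates:
            target = candidates.pop(0)
            tasks.append((block_id, source, target))
            holders.add(target)
    return live, tasks


if __name__ == "__main__":
    block_map = {"blk_1": {"A", "B", "C"}, "blk_2": {"B", "C", "D"}}
    live, tasks = handle_datanode_failure(
        block_map, ["A", "B", "C", "D", "E"], dead_node="B")
    print(live)   # ['A', 'C', 'D', 'E']
    print(tasks)  # [('blk_1', 'A', 'D'), ('blk_2', 'C', 'A')]
```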

4. Describe the organizational structure of HDFS

- Client: the client

(1) Splits files. When a file is uploaded to HDFS, the client splits it into blocks and then stores them

(2) Interacts with the NameNode to obtain file location information

(3) Interacts with the DataNodes to read or write data

(4) Provides commands for managing HDFS, such as starting and shutting down HDFS and accessing HDFS contents

- NameNode: the name node, also called the master node. It stores the metadata about the data, not the data itself

(1) Manages the HDFS namespace

(2) Manages the mapping information for data blocks (Block)

(3) Configures the replica policy

(4) Handles client read and write requests

- DataNode: the data node, also called the slave node. The NameNode issues commands and the DataNode performs the actual operations

(1) Stores the actual data blocks

(2) Performs block read/write operations

- Secondary NameNode: not a hot standby for the NameNode. When the NameNode goes down, it cannot immediately take over and provide service

(1) Assists the NameNode and shares part of its workload

(2) Periodically merges the Fsimage and Edits files and pushes the result to the NameNode

(3) In an emergency, can help recover the NameNode
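The periodic merge of Fsimage and Edits performed by the Secondary NameNode can be pictured as replaying a log onto a snapshot. The sketch below is a toy model: the namespace is a plain dict, and the operation names (`create`, `add_block`, `rename`, `delete`) are invented for illustration and do not match the real edit log format.

```python
import copy


def apply_edit(namespace, edit):
    """Apply one logged operation to the in-memory namespace."""
    op, path, *args = edit
    if op == "create":
        namespace[path] = {"blocks": []}
    elif op == "add_block":
        namespace[path]["blocks"].append(args[0])
    elif op == "rename":
        namespace[args[0]] = namespace.pop(path)
    elif op == "delete":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown op: {op}")


def checkpoint(fsimage, edits):
    """Merge the old snapshot with the edit log.

    Returns (new_fsimage, empty_edit_log). The old snapshot is left
    untouched so it can still serve as a fallback.
    """
    merged = copy.deepcopy(fsimage)
    for edit in edits:
        apply_edit(merged, edit)
    return merged, []


if __name__ == "__main__":
    fsimage = {"/a.txt": {"blocks": ["blk_1"]}}
    edits = [
        ("create", "/b.txt"),
        ("add_block", "/b.txt", "blk_2"),
        ("rename", "/a.txt", "/c.txt"),
        ("delete", "/b.txt"),
    ]
    new_image, new_log = checkpoint(fsimage, edits)
    print(new_image)  # {'/c.txt': {'blocks': ['blk_1']}}
    print(new_log)    # []
```

Replaying a short log onto a recent snapshot is what keeps NameNode restarts fast: without checkpoints, the edit log would grow without bound and every restart would have to replay all of it.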

边栏推荐

- Solve the problem that the kaggle account registration does not display the verification code

- Fudan 961 review

- China standard gas market prospect investment and development feasibility study report 2022-2028

- [Happy Valentine's day] "I still like you very much, like sin ² a+cos ² A consistent "(white code in the attached table)

- JDBC Technology

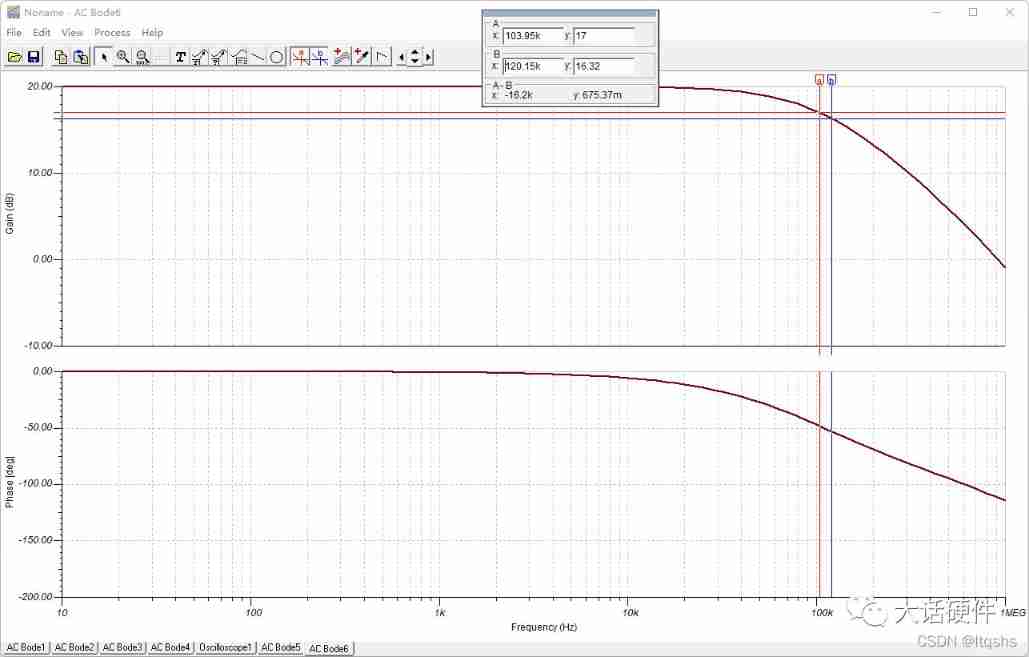

- The difference between single power amplifier and dual power amplifier

- Gossip about redis source code 74

- How to understand the gain bandwidth product operational amplifier gain

- Tencent interview: can you find the number of 1 in binary?

- 2022 Guangdong Provincial Safety Officer a certificate third batch (main person in charge) simulated examination and Guangdong Provincial Safety Officer a certificate third batch (main person in charg

猜你喜欢

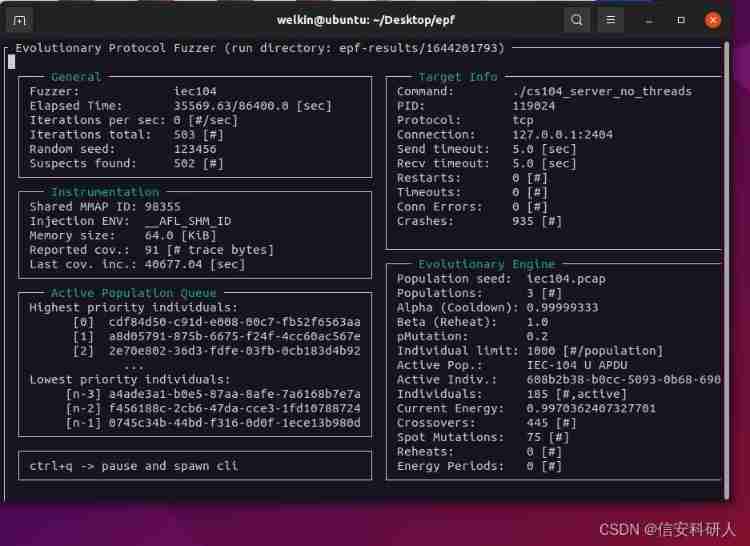

EPF: a fuzzy testing framework for network protocols based on evolution, protocol awareness and coverage guidance

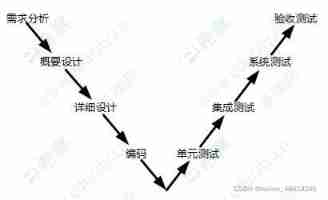

2022 system integration project management engineer examination knowledge points: software development model

Schematic diagram of crystal oscillator clock and PCB Design Guide

Ningde times and BYD have refuted rumors one after another. Why does someone always want to harm domestic brands?

![[PHP basics] cookie basics, application case code and attack and defense](/img/7c/551b79fd5dd8a411de85c800c3a034.jpg)

[PHP basics] cookie basics, application case code and attack and defense

STM32 GPIO CSDN creative punch in

NLP Chinese corpus project: large scale Chinese natural language processing corpus

Idea a method for starting multiple instances of a service

Ningde times and BYD have refuted rumors one after another. Why does someone always want to harm domestic brands?

How to understand the gain bandwidth product operational amplifier gain

随机推荐

Gossip about redis source code 74

Op amp related - link

[MySQL] classification of multi table queries

Amway by head has this project management tool to improve productivity in a straight line

STM32 GPIO CSDN creative punch in

Selenium check box

The upload experience version of uniapp wechat applet enters the blank page for the first time, and the page data can be seen only after it is refreshed again

[CSDN Q & A] experience and suggestions

AI Challenger 2018 text mining competition related solutions and code summary

Gossip about redis source code 82

"Learning notes" recursive & recursive

Kubedl hostnetwork: accelerating the efficiency of distributed training communication

A method to solve Bert long text matching

Tencent interview: can you pour water?

Solve the problem that the kaggle account registration does not display the verification code

D30:color tunnels (color tunnels, translation)

Social network analysis -social network analysis

Minimum commission for stock account opening. Stock account opening is free. Is online account opening safe

Gossip about redis source code 81

Cgb2201 preparatory class evening self-study and lecture content