Technology sharing | Introduction to Linkis parameters

2022-07-01 11:02:00 【Wechat open source】

Introduction: This article covers the Linkis parameter system, including Linkis server parameters, client parameters, and management console parameters.

Linkis parameters fall into three groups:

Linkis server parameters, which include Linkis's own parameters and Spring parameters

Client parameters submitted through the Linkis SDK, RESTful API, and other client-side calls

Linkis management console parameters

├── conf  configuration directory
│   ├── application-eureka.yml
│   ├── application-linkis.yml
│   ├── linkis-cg-engineconnmanager.properties
│   ├── linkis-cg-engineplugin.properties
│   ├── linkis-cg-entrance.properties
│   ├── linkis-cg-linkismanager.properties
│   ├── linkis-mg-gateway.properties
│   ├── linkis-ps-cs.properties
│   ├── linkis-ps-data-source-manager.properties
│   ├── linkis-ps-metadatamanager.properties
│   ├── linkis-ps-publicservice.properties
│   ├── linkis.properties
│   ├── log4j2-console.xml
│   ├── log4j2.xml

General parameters should go in the main configuration file (linkis.properties), and service-specific parameters in the configuration file of the corresponding service.

Linkis services are Spring Boot applications. Spring parameters can be set in application-linkis.yml, and they can also be set in the Linkis configuration files. When set in a Linkis configuration file, the parameter name must carry the spring. prefix, as follows:

# Spring port, default form
server.port=9102
# In a Linkis configuration file, the spring. prefix is required
spring.server.port=9102
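The prefix rule can be illustrated with a minimal sketch that reads properties in the style of a Linkis configuration file and derives the Spring keys by stripping the `spring.` prefix. This only illustrates the naming convention and is not Linkis's actual loading code; the class `SpringPrefixSketch`, the method `toSpringProperties`, and the key `wds.linkis.example.key` are hypothetical names chosen for the example.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Map;
import java.util.Properties;
import java.util.TreeMap;

// Hypothetical helper: illustrates the naming convention only.
class SpringPrefixSketch {
    private static final String PREFIX = "spring.";

    // Returns the entries whose key starts with "spring.", with the prefix removed.
    static Map<String, String> toSpringProperties(Properties linkisProps) {
        Map<String, String> result = new TreeMap<>();
        for (String key : linkisProps.stringPropertyNames()) {
            if (key.startsWith(PREFIX)) {
                result.put(key.substring(PREFIX.length()), linkisProps.getProperty(key));
            }
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        // Content as it might appear in a Linkis configuration file;
        // wds.linkis.example.key is a made-up key used only to show filtering.
        props.load(new StringReader(
                "spring.server.port=9102\nwds.linkis.example.key=value\n"));
        System.out.println(toSpringProperties(props)); // prints {server.port=9102}
    }
}
```

Only the prefixed entry is returned, which mirrors the pairing of `spring.server.port` and `server.port` shown above.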

{
    "executionContent": {"code": "show tables", "runType": "sql"},
    "params": {  // Submission parameters
        "variable": {  // Custom variables used in the code
            "k1": "v1"
        },
        "configuration": {
            "special": {  // Special configuration parameters, such as the log path and the result set path
                "k2": "v2"
            },
            "runtime": {  // Runtime parameters, such as database connection parameters for the JDBC engine and data source parameters for the Presto engine
                "k3": "v3"
            },
            "startup": {  // Startup parameters, such as EC memory parameters and Spark or Hive engine parameters
                "k4": "v4"  // For example, spark.executor.memory: 5G sets the Spark executor memory. Keys for underlying engines such as Spark and Hive match the native parameter names
            }
        }
    },
    "labels": {  // Label parameters: engine version, user, and application
        "engineType": "spark-2.4.3",
        "userCreator": "hadoop-IDE"
    }
}
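As a rough sketch of how a client might assemble the request body shown above, the following Java code builds the same nesting (`executionContent`, `params.variable`, `params.configuration.special/runtime/startup`, `labels`) from plain maps. The class `SubmitBodySketch` and its methods are hypothetical, and the small `toJson` helper only handles nested maps of strings; a real client would more likely use a JSON library or the Linkis SDK.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: assembles the request body as nested maps.
class SubmitBodySketch {

    static Map<String, Object> buildBody(String code, String runType,
                                         Map<String, Object> variable,
                                         Map<String, Object> special,
                                         Map<String, Object> runtime,
                                         Map<String, Object> startup,
                                         Map<String, Object> labels) {
        Map<String, Object> executionContent = new LinkedHashMap<>();
        executionContent.put("code", code);
        executionContent.put("runType", runType);

        Map<String, Object> configuration = new LinkedHashMap<>();
        configuration.put("special", special);
        configuration.put("runtime", runtime);
        configuration.put("startup", startup);

        Map<String, Object> params = new LinkedHashMap<>();
        params.put("variable", variable);
        params.put("configuration", configuration);

        Map<String, Object> body = new LinkedHashMap<>();
        body.put("executionContent", executionContent);
        body.put("params", params);
        body.put("labels", labels);
        return body;
    }

    // Minimal JSON rendering for nested maps of strings (no control-character escaping).
    static String toJson(Object value) {
        if (value instanceof Map) {
            StringBuilder sb = new StringBuilder("{");
            boolean first = true;
            for (Map.Entry<?, ?> e : ((Map<?, ?>) value).entrySet()) {
                if (!first) {
                    sb.append(",");
                }
                first = false;
                sb.append(quote(String.valueOf(e.getKey())))
                  .append(":")
                  .append(toJson(e.getValue()));
            }
            return sb.append("}").toString();
        }
        return quote(String.valueOf(value));
    }

    private static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    public static void main(String[] args) {
        Map<String, Object> startup = new LinkedHashMap<>();
        startup.put("spark.executor.memory", "5G");
        Map<String, Object> labels = new LinkedHashMap<>();
        labels.put("engineType", "spark-2.4.3");
        labels.put("userCreator", "hadoop-IDE");
        Map<String, Object> body = buildBody("show tables", "sql",
                new LinkedHashMap<>(), new LinkedHashMap<>(), new LinkedHashMap<>(),
                startup, labels);
        System.out.println(toJson(body));
    }
}
```

Keeping the three configuration groups as separate arguments makes it harder to put a startup parameter such as `spark.executor.memory` into the runtime group by mistake.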

Note: see the comment above each method.

JobSubmitAction jobSubmitAction = JobSubmitAction.builder()
    .addExecuteCode(code)
    // Startup parameters, such as EC memory parameters and Spark or Hive engine parameters.
    // For example, spark.executor.memory: 5G sets the Spark executor memory.
    // Keys for underlying engines such as Spark and Hive match the native parameter names.
    .setStartupParams(startupMap)
    // Runtime parameters, such as database connection parameters for the JDBC engine
    // and data source parameters for the Presto engine
    .setRuntimeParams(runTimeMap)
    // Custom variables used in the code
    .setVariableMap(varMap)
    // Label parameters: engine version, user, and application
    .setLabels(labels)
    // Submit user
    .setUser(user)
    // Execute user
    .addExecuteUser(user)
    .build();

linkis-cli -runtimeMap key1=value -runtimeMap key2=value \
    -labelMap key1=value \
    -varMap key1=value \
    -startUpMap key1=value

Map<String, Object> labels = new HashMap<String, Object>();
// Engine type and version
labels.put(LabelKeyConstant.ENGINE_TYPE_KEY, "spark-2.4.3");
// Requesting user and application name
labels.put(LabelKeyConstant.USER_CREATOR_TYPE_KEY, user + "-IDE");
// Script type to run. Spark supports sql, scala, py; Hive: hql; shell: sh; python: python; presto: psql
labels.put(LabelKeyConstant.CODE_TYPE_KEY, "sql");
// Kill the job automatically if it has not finished after running for 10s (unit: s)
labels.put(LabelKeyConstant.JOB_RUNNING_TIMEOUT_KEY, "10");
// Kill the job automatically if it has been queued for more than 10s (unit: s)
labels.put(LabelKeyConstant.JOB_QUEUING_TIMEOUT_KEY, "10");
// Wait time before retrying a job that failed for reasons such as resources (unit: ms).
// If the failure is caused by insufficient queue resources, 10 retries are initiated by default
labels.put(LabelKeyConstant.RETRY_TIMEOUT_KEY, "10000");
// Tenant label. If a tenant is specified for the task, the task is routed to a separate ECM machine
labels.put(LabelKeyConstant.TENANT_KEY, "hduser02");
// Execute-once label. Not recommended: once set, the engine is not reused and exits when the task finishes.
// Set it only for tasks with special requirements
labels.put(LabelKeyConstant.EXECUTE_ONCE_KEY, "");
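The two labels that appear in every example here follow a simple `<engine>-<version>` and `<user>-<application>` pattern. The sketch below composes and splits such values; it assumes the plain key strings `engineType` and `userCreator` from the REST example rather than the SDK constants, and `LabelSketch`, `basicLabels`, and `splitEngineType` are hypothetical names, not part of the Linkis SDK.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration; key strings follow the REST example.
class LabelSketch {

    // Builds the engineType and userCreator labels from their parts.
    static Map<String, Object> basicLabels(String engine, String version,
                                           String user, String creator) {
        Map<String, Object> labels = new LinkedHashMap<>();
        labels.put("engineType", engine + "-" + version);   // e.g. spark-2.4.3
        labels.put("userCreator", user + "-" + creator);    // e.g. hadoop-IDE
        return labels;
    }

    // Splits "spark-2.4.3" at the first '-' into the engine name and the version.
    static String[] splitEngineType(String engineType) {
        int idx = engineType.indexOf('-');
        if (idx <= 0 || idx == engineType.length() - 1) {
            throw new IllegalArgumentException(
                    "Expected <engine>-<version>, got: " + engineType);
        }
        return new String[] {engineType.substring(0, idx), engineType.substring(idx + 1)};
    }

    public static void main(String[] args) {
        System.out.println(basicLabels("spark", "2.4.3", "hadoop", "IDE"));
        String[] parts = splitEngineType("spark-2.4.3");
        System.out.println(parts[0] + " / " + parts[1]);
    }
}
```

Validating the label format on the client side gives an early error for a value such as `spark` with no version, instead of a failure after submission.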

Queue CPU usage limit [wds.linkis.rm.yarnqueue.cores.max]: at this stage, only the total queue resource usage of Spark-type tasks can be limited.

Queue memory usage limit [wds.linkis.rm.yarnqueue.memory.max]

Global memory limit per engine [wds.linkis.rm.client.memory.max]: this parameter is not a cap on the total memory that can be used overall. It sets the total memory that a specific engine of a given Creator may use. For example, IDE-SPARK tasks can be limited to 10G of memory.

Global core limit per engine [wds.linkis.rm.client.core.max]: likewise, this parameter is not a cap on the total CPU that can be used overall. It sets the total number of CPU cores that a specific engine of a given Creator may use. For example, IDE-SPARK tasks can be limited to 10 cores.

Global maximum concurrency per engine [wds.linkis.rm.instance]: this parameter has two meanings. It limits how many instances of a specific engine a Creator can start in total, and it limits how many tasks of that engine the Creator can run at the same time.
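The scoping described for wds.linkis.rm.client.memory.max (a cap per Creator and engine pair, not a global total) can be pictured with a small model. This is a hypothetical sketch of the semantics only and not how the Linkis resource manager is implemented; `CreatorEngineQuotaSketch` and `tryReserve` are invented names, and applying one cap value to every pair is a simplification.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a per-Creator, per-engine memory cap.
class CreatorEngineQuotaSketch {
    // Plays the role of wds.linkis.rm.client.memory.max, in GB.
    private final long maxMemoryGb;
    // Memory already reserved, keyed by "<creator>-<engine>", e.g. "IDE-SPARK".
    private final Map<String, Long> usedGb = new HashMap<>();

    CreatorEngineQuotaSketch(long maxMemoryGb) {
        this.maxMemoryGb = maxMemoryGb;
    }

    // Reserves memory for one Creator/engine pair if the pair stays within its cap.
    boolean tryReserve(String creator, String engine, long requestGb) {
        String key = creator + "-" + engine;
        long current = usedGb.getOrDefault(key, 0L);
        if (current + requestGb > maxMemoryGb) {
            return false;
        }
        usedGb.put(key, current + requestGb);
        return true;
    }

    public static void main(String[] args) {
        CreatorEngineQuotaSketch quota = new CreatorEngineQuotaSketch(10);
        System.out.println(quota.tryReserve("IDE", "SPARK", 6)); // true
        System.out.println(quota.tryReserve("IDE", "SPARK", 6)); // false, 12G would exceed 10G
        System.out.println(quota.tryReserve("IDE", "HIVE", 6));  // true, a separate pair
    }
}
```

The third call succeeds even though 12G is then reserved in total, which is the point of the parameter description: the cap applies to each Creator and engine combination separately.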

How to become a community contributor

1 ► Official documentation contribution. Find gaps in the documentation, improve it, and take part in the community by keeping the documents up to date. Contributing documentation is a way for developers to learn how to submit a PR and become genuinely involved in building the community. Reference guide: Step-by-step tutorial: How to become an Apache Linkis documentation contributor

2 ► Code contribution. We have compiled a list of simple, easy-to-start tasks in the community that are well suited to newcomers making their first code contributions. See the newcomer task list: https://github.com/apache/incubator-linkis/issues/1161

3 ► Content contribution. Publish content related to WeDataSphere open source components, including but not limited to installation and deployment tutorials, usage experience, and case studies. There is no restriction on form; please send your submission to the community assistant. For example:

Technical deep dive | Linkis in practice: analysis of the new engine implementation process

Community developer column | MariaCarrie: Linkis1.0.2 installation and usage guide

4 ► Community Q&A. Actively answer questions in the community, share technical knowledge, and help developers solve problems.

5 ► Other. Take part in community activities, become a community volunteer, help promote the community, and offer useful suggestions for its development.

This article is from the WeChat official account WeDataSphere (gh_273e85fce73b).

If there is any infringement , Please contact the [email protected] Delete .

Participation of this paper “OSC Source creation plan ”, You are welcome to join us , share .

边栏推荐

- 数据库实验报告(二)

- Dotnet console uses microsoft Maui. Getting started with graphics and skia

- China's cellular Internet of things users have reached 1.59 billion, and are expected to surpass mobile phone users within this year

- [encounter Django] - (II) database configuration

- 基金国际化的发展概况

- SQLAchemy 常用操作

- bash: ln: command not found

- 获取键代码

- [.NET6]使用ML.NET+ONNX预训练模型整活B站经典《华强买瓜》

- Can I choose to open an account on CICC securities? Is it safe?

猜你喜欢

Wireshark TS | confusion between fast retransmission and out of sequence

How to solve the problem of SQL?

bash: ln: command not found

JS基础--数据类型

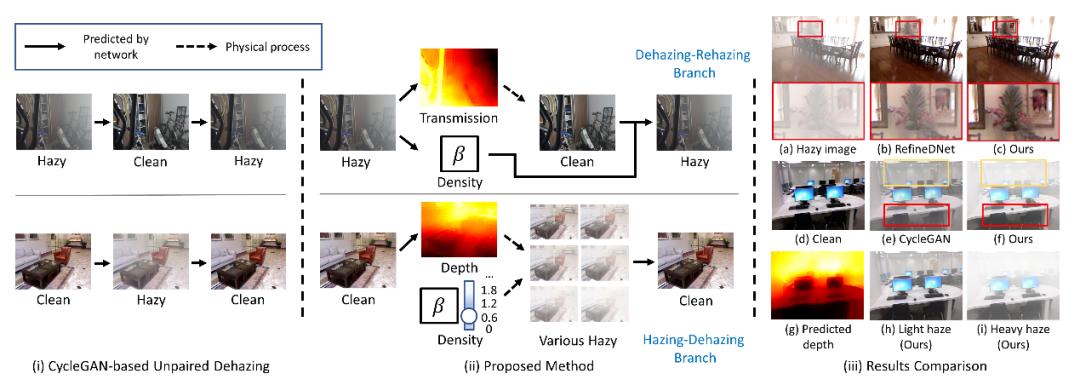

CVPR 2022 | 基于密度与深度分解的自增强非成对图像去雾

投稿开奖丨轻量应用服务器征文活动(5月)奖励公布

Design and practice of new generation cloud native database

Yoda unified data application -- Exploration and practice of fusion computing in ant risk scenarios

![[paper reading] trajectory guided control prediction for end to end autonomous driving: a simple yet strong Ba](/img/fa/f2d24ee3dbbbe6332c84a82109338e.png)

[paper reading] trajectory guided control prediction for end to end autonomous driving: a simple yet strong Ba

CVPR 2022 | Virtual Correspondence: Humans as a Cue for Extreme-View Geometry

随机推荐

PHP realizes lottery function

NC | intestinal cells and lactic acid bacteria work together to prevent Candida infection

Combination of Oracle and JSON

Huawei Equipment configure les services de base du réseau WLAN à grande échelle

Personal mall two open Xiaoyao B2C mall system source code - Commercial Version / group shopping discount seckill source code

想请教一下,我在广州,到哪里开户比较好?现在网上开户安全么?

《数据安全法》出台一周年,看哪四大变化来袭?

CRC check

China's cellular Internet of things users have reached 1.59 billion, and are expected to surpass mobile phone users within this year

Win平台下influxDB导出、导入

Mutual conversion of pictures in fluent uint8list format and pictures in file format

Intel Labs announces new progress in integrated photonics research

Dotnet console uses microsoft Maui. Getting started with graphics and skia

Half of 2022 has passed, isn't it sudden?

华为HMS Core携手超图为三维GIS注入新动能

[matytype] insert MathType inter line and intra line formulas in CSDN blog

英特尔实验室公布集成光子学研究新进展

CRC verification

Simulink simulation circuit model of open loop buck buck buck chopper circuit based on MATLAB

银行卡借给别人是否构成犯罪