当前位置:网站首页>Deep Blue Academy - 14 Lectures on Visual SLAM - Chapter 7 Homework

Deep Blue Academy - 14 Lectures on Visual SLAM - Chapter 7 Homework

2022-08-02 05:23:00 【hello689】

目录

Seventh class homework

2. Bundle Adjustment

2.1 文献阅读

为何说Bundle Adjustment is slow 是不对的?

论文page 2,原文:The claimed slowness is almost always due to the unthinking use of a general-purpose optimization routine that completely ignores the problem structure and sparseness.

在之前的文献中,BAIt has always been mistaken for being slower,Because the structure and sparsity of the problem are not taken into account.直接对 H H H求逆来计算增量方程,It consumes a lot of computing resources,It also seems slower.实际上,由于 H H Hhas a sparse structure,It can be solved using acceleration techniques.And processedBA,Usually faster than other new methods,更有效.BA What are the parameters that need to be paid attention to⽅?Pose 和Point What are the parameters of each⽅式?有何优缺点.

3D points(That is, signpostsy)、3D Rotation(That is, out-of-camera parameters(R,t) Or the pose of the camera)、相机校准(camera calibration)That is, the in-camera parameters 、Projected pixel coordinates.

Pose: 变换矩阵、欧拉角(Euler angles)、四元数(quaternions)

The advantage of Euler angles is that they are very intuitive,The disadvantage is that it is prone to gimbal lock problems

The advantage of transformation matrices is that they are easy to describe,The disadvantage is that it produces too many parameters,需要16parameters to describe the transformation process.

The advantage of quaternions is that they are easy to calculate,No gimbal lock issues,The disadvantage is that it is more difficult to understand、不直观.

Point: 三维坐标点(X,Y,Z)、逆深度

The advantage of three-dimensional coordinate points is that it is relatively simple and intuitive,The disadvantage is that infinity points cannot be described;

The advantage of inverse depth is that it can model points at infinity;在实际应用中,Inverse depth also has better numerical stability.

This topic refers to the literatureP7和博客:https://www.cnblogs.com/guoben/p/13375128.html

本⽂写于2000 年,但是⽂Much of what is mentioned in the post⾯⼗⼏Years of research has been confirmed.Which can you see⽅to follow⼯reflected in the work?请举例说明.

- Intensity-basedThe method is the direct methodBundle Adjustment;

- mentioned in the textNetwork StructureCorresponding to the more widely used graph optimization methods;

- Hsparsity can be achievedBA实时,在07年的PTAM上实现.

2.2 BAL-dataset

This question refers to the textbookP257中g2o求解BA的例子,以及博客https://blog.csdn.net/QLeelq/article/details/115497273关于g2o的使用说明.

g2oThe usage steps are roughly as follows:

- 创建一个线性求解器LinearSolver;

- 创建BlockSolver,并用上面定义的线性求解器初始化;

- 创建总求解器solver,并从GN/LM/DogLeg 中选一个作为迭代策略,再用上述块求解器BlockSolver初始化;

- 创建图优化的核心:稀疏优化器(SparseOptimizer);

- 定义图的顶点和边,并添加到SparseOptimizer中;

- 设置优化参数,开始执行优化.

BAL 的投影模型⽐There are more negative signs introduced in the textbook,Projection model part of the code:

Vector2d project(const Vector3d &point) {

//1.Convert world coordinates to pixel coordinates

Vector3d pc = _estimate.rotation * point + _estimate.translation;

//2.归一化坐标,BALThe projection model for is one more minus sign than the one presented in the textbook

pc = -pc / pc[2];

double r2 = pc.squaredNorm();

//3.Distortion model considering normalized coordinates

double distortion = 1.0 + r2 * (_estimate.k1 + _estimate.k2 * r2);

//4.Calculate the pixel coordinates according to the internal parameter model

return Vector2d(_estimate.focal * distortion * pc[0],

_estimate.focal * distortion * pc[1]);

}

Waypoint definition:

//继承并重写BaseVertex类,并实现接口

class VertexPoint : public g2o::BaseVertex<3, Vector3d> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

VertexPoint() {}

virtual void setToOriginImpl() override {

_estimate = Vector3d(0, 0, 0);

}

virtual void oplusImpl(const double *update) override {

_estimate += Vector3d(update[0], update[1], update[2]);

}

virtual bool read(istream &in) {}

virtual bool write(ostream &out) const {}

};

Definition of projected edges:

//边的定义

class EdgeProjection :

public g2o::BaseBinaryEdge<2, Vector2d, VertexPoseAndIntrinsics, VertexPoint> {

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

virtual void computeError() override {

auto v0 = (VertexPoseAndIntrinsics *) _vertices[0];

auto v1 = (VertexPoint *) _vertices[1];

auto proj = v0->project(v1->estimate());

_error = proj - _measurement;

}

// use numeric derivatives

virtual bool read(istream &in) {}

virtual bool write(ostream &out) const {}

};

Detailed code and compilation files are incode文件夹下.选择problem-52-64053-pre.txt数据.

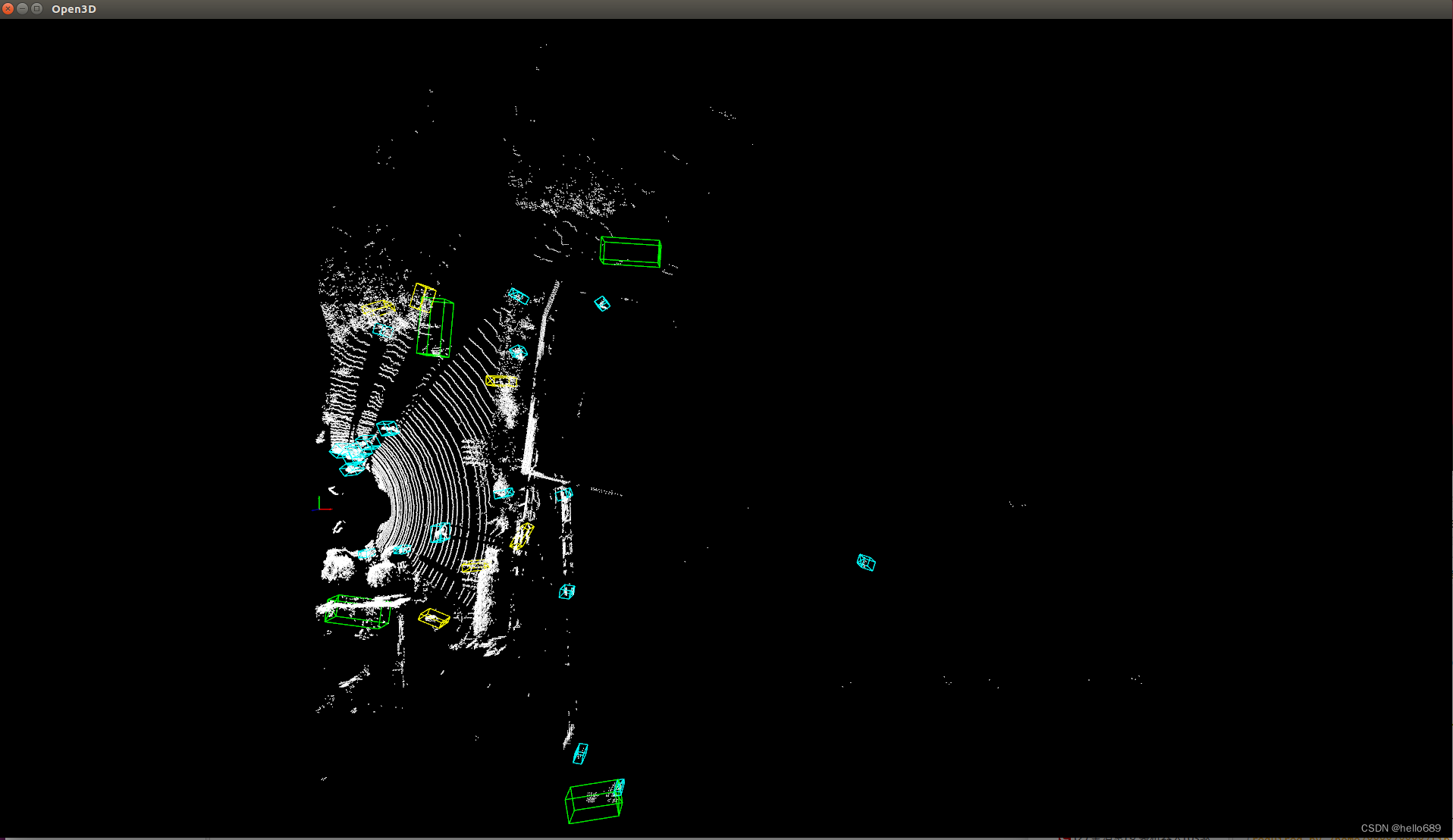

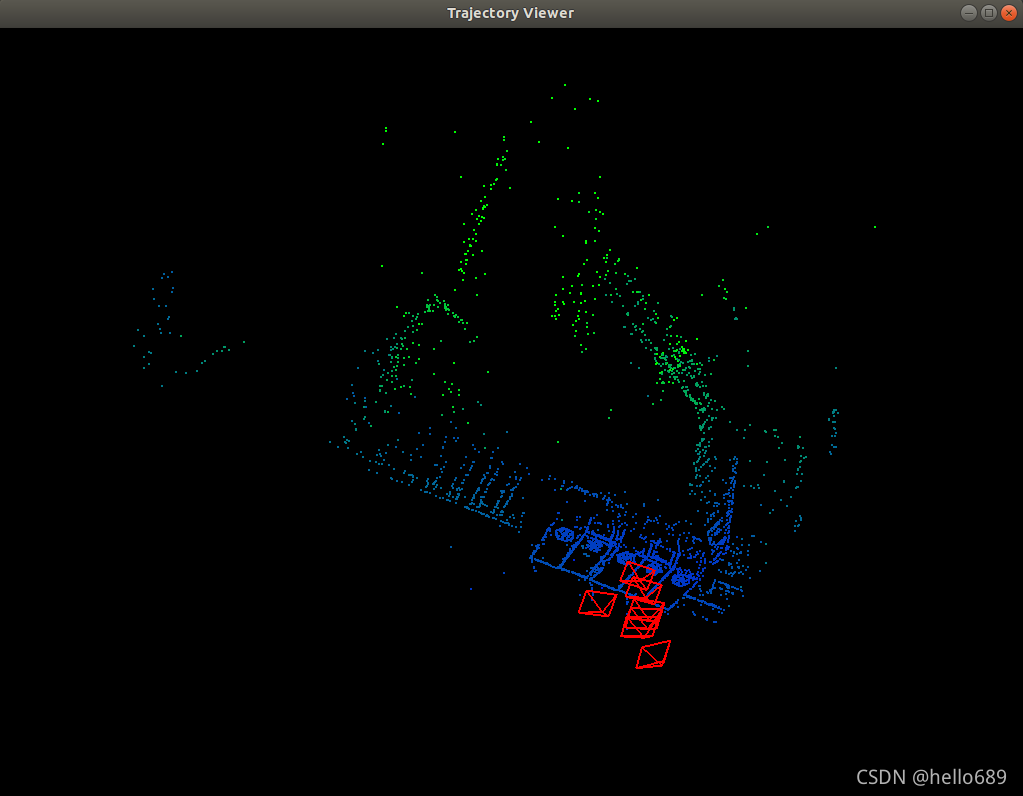

程序运行结果如下图所示,The left is the initial image,右边为BAThe optimized graph:

3. 直接法的Bundle Adjustment

3.1 数学模型

- How to describe arbitrary⼀The point is projected on arbitrary⼀formed in the imageerror?

e r r o r = I ( p i ) − I j ( π ( K T J p i ) ) error = I(p_i)-I_j(\pi(KT_Jp_i)) error=I(pi)−Ij(π(KTJpi))

每个error 关联⼏个优化变量?

Correlate two optimization variables,are the pose and landmark points, respectively.That is, the Lie algebra of the camera and the three-dimensional space coordinate pointP(x,y,z).

error Jacobs on each variable⽐是什么?

This problem is similar to the Jacobian solution of error versus variable in the direct method of the previous coursework.Second edition textbookP220.

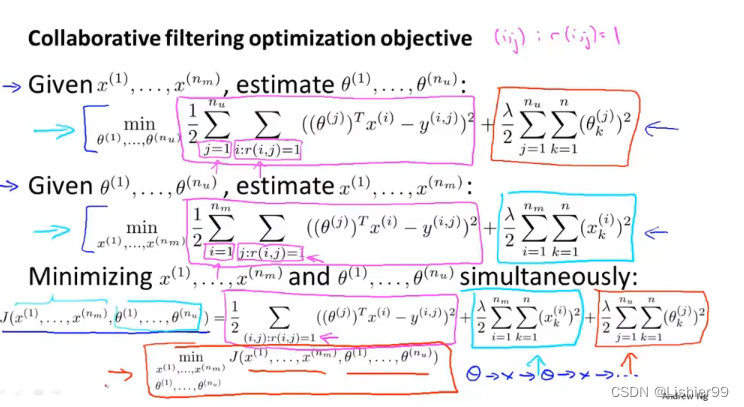

The derivative of the projection equation with respect to a 3D point in the camera coordinate system:

∂ u ∂ q = [ f x Z 0 − f x X Z 2 0 f y Z − f y Y Z 2 ] \frac{\partial u}{\partial q} = \begin{bmatrix} \frac{f_x}{Z} & 0& -\frac{f_xX}{Z^2}\\ 0& \frac{f_y}{Z}&-\frac{f_yY}{Z^2} \end{bmatrix} ∂q∂u=[Zfx00Zfy−Z2fxX−Z2fyY]

The transformed 3D point is the derivative of the transformation:

∂ q ∂ δ ξ = [ I , − q ∧ ] \frac{\partial q}{\partial \delta \xi} = \begin{bmatrix} I, & -q^{\wedge} \end{bmatrix} ∂δξ∂q=[I,−q∧]

Two of them can be combined:

∂ u ∂ q ⋅ ∂ q ∂ δ ξ = ∂ u ∂ δ ξ = [ f x Z 0 − f x X Z 2 − f x X Y Z 2 f x + f x X 2 Z 2 − f x Y Z 0 f y Z − f y Y Z 2 − f y − f y Y 2 Z 2 f y X Y Z 2 f y X Z ] \frac{\partial u}{\partial q}\cdot \frac{\partial q}{\partial \delta \xi} = \frac{\partial u}{\partial \delta \xi}= \begin{bmatrix} \frac{f_x}{Z}&0& -\frac{f_xX}{Z^2}& -\frac{f_xXY}{Z^2}& f_x+\frac{f_xX^2}{Z^2}& -\frac{f_xY}{Z}& \\ 0&\frac{f_y}{Z}& -\frac{f_yY}{Z^2}& -f_y-\frac{f_yY^2}{Z^2}& \frac{f_yXY}{Z^2}& \frac{f_yX}{Z} \end{bmatrix}\\ ∂q∂u⋅∂δξ∂q=∂δξ∂u=[Zfx00Zfy−Z2fxX−Z2fyY−Z2fxXY−fy−Z2fyY2fx+Z2fxX2Z2fyXY−ZfxYZfyX]

The error is relative to the Jacobian of the Lie algebra:

J = − ∂ I 2 ∂ u ∂ u ∂ δ ξ J = -\frac{\partial I_2}{\partial u} \frac{\partial u}{\partial \delta \xi} J=−∂u∂I2∂δξ∂u

error relative to3DThe Jacobian of the coordinate point:

J = − ∂ I 2 ∂ u ∂ u ∂ P J = -\frac{\partial I_2}{\partial u} \frac{\partial u}{\partial P} J=−∂u∂I2∂P∂u

3.2 实现

- Can you not[x; y; z]T The form parameterizes each point?

可以,You can also use the inverse depth method to parameterize each point,In this way, infinite points can be represented.

- 取4x4 的patch 好吗?change⼤的patch Well, take it⼩⼀点的patch 好?

4*4的patchShould be a moderate size,patchToo large a meeting leads to a large amount of computation,Too small will result in poor robustness.

- from this question,You see the direct method and the feature point methodBA How are the stages different?

The biggest difference is the calculation of the error,The direct method calculates the photometric error,The feature point method calculates the reprojection error.

- due to differences in images,You may need robust kernel functions,例如Huber.此时Huber how to choose the threshold?

HuberThresholds should be based on multiple experiments,Determined by experience.

计算误差:

// TODO START YOUR CODE HERE

const g2o::VertexPointXYZ *vertexPw = static_cast<const g2o::VertexPointXYZ * >(vertex(0));

const VertexSophus *vertexTcw = static_cast<const VertexSophus * >(vertex(1));

Eigen::Vector3d Pc = vertexTcw->estimate() * vertexPw->estimate();

float u = Pc[0] / Pc[2] * fx + cx;

float v = Pc[1] / Pc[2] * fy + cy;

if (u - 2 < 0 || v - 2 <0 || u+1 >= targetImg.cols || v + 1 >= targetImg.rows) {

//边界点的处理(error设为0,setLevel(1))

this->setLevel(1);

for (int n = 0; n < 16; n++)

_error[n] = 0;

} else {

for (int i = -2; i < 2; i++) {

for (int j = -2; j < 2; j++) {

int num = 4 * i + j + 10;

_error[num] = origColor[num] - GetPixelValue(targetImg, u + i, v + j);

}

}

}

// END YOUR CODE HERE

计算雅克比:

virtual void linearizeOplus() override {

if (level() == 1) {

_jacobianOplusXi = Eigen::Matrix<double, 16, 3>::Zero();

_jacobianOplusXj = Eigen::Matrix<double, 16, 6>::Zero();

return;

}

const g2o::VertexPointXYZ *vertexPw = static_cast<const g2o::VertexPointXYZ * >(vertex(0));

const VertexSophus *vertexTcw = static_cast<const VertexSophus * >(vertex(1));

Eigen::Vector3d Pc = vertexTcw->estimate() * vertexPw->estimate();

float x = Pc[0];

float y = Pc[1];

float z = Pc[2];

float inv_z = 1.0 / z;

float inv_z2 = inv_z * inv_z;

float u = x * inv_z * fx + cx;

float v = y * inv_z * fy + cy;

Eigen::Matrix<double, 2, 3> J_Puv_Pc;

J_Puv_Pc(0, 0) = fx * inv_z;

J_Puv_Pc(0, 1) = 0;

J_Puv_Pc(0, 2) = -fx * x * inv_z2;

J_Puv_Pc(1, 0) = 0;

J_Puv_Pc(1, 1) = fy * inv_z;

J_Puv_Pc(1, 2) = -fy * y * inv_z2;

Eigen::Matrix<double, 3, 6> J_Pc_kesi = Eigen::Matrix<double, 3, 6>::Zero();

J_Pc_kesi(0, 0) = 1;

J_Pc_kesi(0, 4) = z;

J_Pc_kesi(0, 5) = -y;

J_Pc_kesi(1, 1) = 1;

J_Pc_kesi(1, 3) = -z;

J_Pc_kesi(1, 5) = x;

J_Pc_kesi(2, 2) = 1;

J_Pc_kesi(2, 3) = y;

J_Pc_kesi(2, 4) = -x;

Eigen::Matrix<double, 1, 2> J_I_Puv;

for (int i = -2; i < 2; i++)

for (int j = -2; j < 2; j++) {

int num = 4 * i + j + 10;

J_I_Puv(0, 0) =

(GetPixelValue(targetImg, u + i + 1, v + j) - GetPixelValue(targetImg, u + i - 1, v + j)) / 2;

J_I_Puv(0, 1) =

(GetPixelValue(targetImg, u + i, v + j + 1) - GetPixelValue(targetImg, u + i, v + j - 1)) / 2;

_jacobianOplusXi.block<1, 3>(num, 0) = -J_I_Puv * J_Puv_Pc * vertexTcw->estimate().rotationMatrix();

_jacobianOplusXj.block<1, 6>(num, 0) = -J_I_Puv * J_Puv_Pc * J_Pc_kesi;

}

}

构建BA问题:

// build optimization problem

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6, 3>> DirectBlock; // 求解的向量是6*1的

std::unique_ptr<DirectBlock::LinearSolverType> linearSolver ( new g2o::LinearSolverDense<DirectBlock::PoseMatrixType>()); // 线性方程求解器

std::unique_ptr<DirectBlock> solver_ptr ( new DirectBlock ( std::move(linearSolver))); // 矩阵块求解器

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( std::move(solver_ptr)); //选择使用LM优化

g2o::SparseOptimizer optimizer;

optimizer.setAlgorithm(solver);

optimizer.setVerbose(true);

// TODO add vertices, edges into the graph optimizer

// START YOUR CODE HERE

for (int k = 0; k < points.size(); ++k) {

g2o::VertexPointXYZ* pPoint = new g2o::VertexPointXYZ();

pPoint->setId(k);

pPoint->setEstimate(points[k]);

pPoint->setMarginalized(true);//Manually set the vertices that need to be marginalized when using sparse optimization The optimization target is only the pose nodes,So signpost nodes need to be marginalized

optimizer.addVertex(pPoint);

}

for (int j = 0; j < poses.size(); j++) {

VertexSophus *vertexTcw = new VertexSophus();

vertexTcw->setEstimate(poses[j]);

// 两种节点的idThe order remains continuous

vertexTcw->setId(j + points.size());

optimizer.addVertex(vertexTcw);

}

for (int c = 0; c < poses.size(); c++)

for (int p = 0; p < points.size(); p++) {

EdgeDirectProjection *edge = new EdgeDirectProjection(color[p], images[c]);

// 先point后pose

edge->setVertex(0, dynamic_cast<g2o::VertexPointXYZ *>(optimizer.vertex(p)));

edge->setVertex(1, dynamic_cast<VertexSophus *>(optimizer.vertex(c + points.size())));

// The information matrix can be set directly to error_dim*error_dim 的单位阵

edge->setInformation(Eigen::Matrix<double, 16, 16>::Identity());

// 设置Huber核函数,Reduce the impact of error points,加强鲁棒性

g2o::RobustKernelHuber *rk = new g2o::RobustKernelHuber;

rk->setDelta(1.0);

edge->setRobustKernel(rk);

optimizer.addEdge(edge);

}

// END YOUR CODE HERE

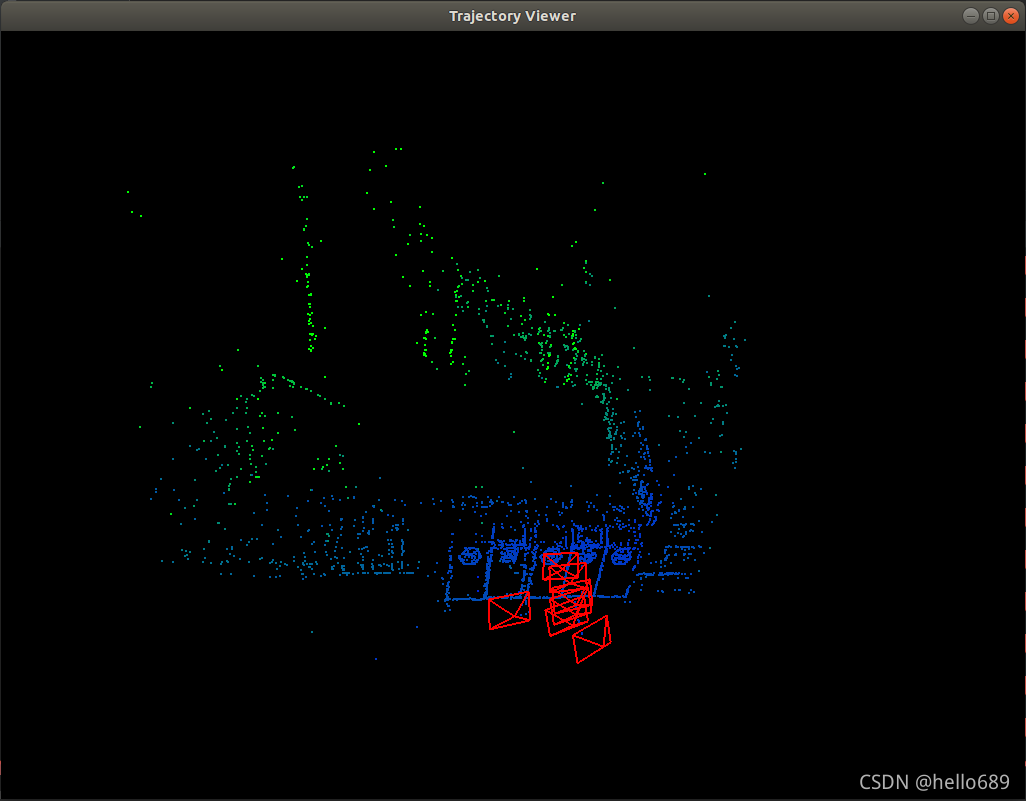

程序运行结果如下图所示:

Pose and point cloud before optimization:

The optimized pose and point cloud:

国内gitee地址:https://gitee.com/ximing689/slam-learning.git

github地址: https://github.com/ximing1998/slam-learning.git

边栏推荐

猜你喜欢

随机推荐

Plus版SBOM:流水线物料清单PBOM

深度学习基础之过拟合、欠拟合问题和正则化

允许Traceroute探测

js平层数组转树形结构(解决数据处理后源数据篡改)

未来智安XDR及核心组件产品上榜《嘶吼2022网络安全产业图谱》

深度学习基础之批量归一化(BN)

迭代器与生成器

Location、navigator和History对象

BOM学习

VisibleDeprecationWarning: Creating an ndarray from ragged nested sequences (which is a list-or-tupl

flv文件结构解析

Anconda spyder内引用OpenCV出现错误ImportError: numpy.core.multiarray failed to import

Class ‘PHPWord_Writer_Word2003‘ not found

this指向问题

微服务入门

3个最佳实践助力企业改善供应链安全

金融行业案例 | 未来智安XDR助力银行业客户优化安全运营体系,有效提高告警研判率

科研笔记(八) 深度学习及其在 WiFi 人体感知中的应用(上)

MySQL5.7的安装编译及报错的解决方法

如何将PDF中的一部分页面另存为新的PDF文件