
The role of the ResNet residual block

2022-06-11 20:34:00 【TranSad】

A brief introduction to ResNet

ResNet is a classic network proposed in 2015. Before ResNet, it was observed that once a model reached a certain depth, adding more layers no longer improved its performance. This created a bottleneck for deep learning networks: improving results by stacking more layers seemed to have reached its limit. ResNet solved this problem.

The core idea of ResNet is the residual edge (also called a skip connection): an edge added directly from the input to the output.

What is the use of this? It can be understood as follows: if the newly added layers learn poorly, the residual edge lets the network "skip" them. This is easy to achieve: just set the weight parameters of those layers to 0. In this way, however many layers the network has, the layers that help are kept and the ones that do not can be skipped. In short, newly added layers should at least not make the result worse than before, so the model can be improved steadily by increasing its depth.
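The "skip" behaviour described above can be checked with a minimal sketch. It assumes a toy residual block built from two small linear layers with a ReLU between them; the helper names `linear`, `relu` and `residual_block` are made up for illustration, and the ReLU that a real ResNet block applies after the addition is left out for simplicity.

```python
def linear(weights, x):
    """Multiply a weight matrix (list of rows) by the vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x):
    """Element-wise ReLU."""
    return [max(0.0, v) for v in x]


def residual_block(x, w1, w2):
    """Toy residual block: y = x + F(x), with F = linear -> ReLU -> linear."""
    fx = linear(w2, relu(linear(w1, x)))
    return [a + b for a, b in zip(x, fx)]


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

# With every weight of the added layers set to 0, F(x) is 0,
# so the block simply passes its input through the residual edge.
skipped = residual_block(x, zeros, zeros)
print(skipped == x)
```

With non-zero weights the same block returns the input plus whatever correction F(x) has learned, which is why the added layers only need to learn a residual.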

Another benefit of residual edges is that they help avoid the vanishing gradient problem. Thanks to this, networks with hundreds or even thousands of layers can be trained.

Why a residual network avoids vanishing gradients is also easy to understand:

Without residual edges, if the network is very deep, updating the weights of the bottom layers (those close to the input data) requires computing their gradient first. By the chain rule, this gradient is a product of many factors propagated back from the output, and as soon as some of these factors are too small the gradient becomes very small. Even multiplying such a small gradient by a large learning rate does not help.

With residual edges, the gradient can travel along an "expressway" straight to the layer whose gradient we want. However small the gradient obtained along the normal chain-rule route is, the sum of the two routes will not be small, so the weights can still be updated effectively.
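The two routes can be compared numerically with a small sketch. It assumes scalar layers whose local derivative is a fixed small number (0.1 is an arbitrary illustrative value, as is the depth of 20). For a plain layer y = f(x) the chain rule contributes the factor f'(x); for a residual layer y = x + f(x) it contributes 1 + f'(x), where the 1 is the expressway.

```python
def grad_plain(local_grads):
    """Gradient through stacked layers y = f(x): product of the local derivatives."""
    g = 1.0
    for d in local_grads:
        g *= d
    return g


def grad_residual(local_grads):
    """Gradient through stacked layers y = x + f(x): each factor becomes 1 + f'(x)."""
    g = 1.0
    for d in local_grads:
        g *= 1.0 + d
    return g


local = [0.1] * 20  # 20 layers, each with a small local derivative (assumed value)

plain = grad_plain(local)
resid = grad_residual(local)
print(f"without residual edges: {plain:.3e}")
print(f"with residual edges:    {resid:.3e}")
```

The plain product collapses toward zero, while every factor on the residual route stays at least 1 in this setting, so the gradient that reaches the bottom layer does not vanish.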

Because of these advantages, residual edges have become a standard component of many mainstream networks, which shows how far-reaching ResNet's influence is.

Some specifics of ResNet

ResNet comes in several variants according to the number of layers. Each column in the figure below corresponds to one network structure: ResNet18, ResNet34, ResNet50 and so on. Among them, ResNet152 has the most layers and is generally considered the most accurate (at the cost of more computation). Generally speaking, ResNet is a good first choice for classification tasks; if you want the highest accuracy, use ResNet152.

Take ResNet34 (framed in the figure above) as an example to explain the structure. The 34 means that it has 34 layers: a 7*7 convolution layer at the top (1 layer), followed by (3+4+6+3)=16 residual blocks with two convolutions each (16*2=32 layers), and a fully connected layer at the end (the last layer), which gives 34 layers in total.
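The layer count above is simple arithmetic and can be written out as a short sketch. The function name `count_layers` is made up for illustration; the `[2, 2, 2, 2]` configuration for ResNet18 is not given in the text and is added here from the standard ResNet18 layout.

```python
def count_layers(blocks_per_stage, convs_per_block=2):
    """Count weighted layers: first convolution + convolutions in residual blocks + final FC."""
    first_conv = 1       # the 7*7 convolution at the top
    fully_connected = 1  # the fully connected layer at the end
    return first_conv + convs_per_block * sum(blocks_per_stage) + fully_connected


resnet34 = count_layers([3, 4, 6, 3])  # 1 + 16*2 + 1
resnet18 = count_layers([2, 2, 2, 2])  # 1 + 8*2 + 1
print(resnet34, resnet18)  # prints 34 18
```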

What does a residual block look like? The part "wrapped" by a residual edge can be regarded as one residual block. I marked stage1 to stage4 in the figure. (As an aside: we usually treat each halving of the image size together with a doubling of the number of channels as one stage. These sizes cannot be seen in the figure above, which shows convolution kernel sizes and channel counts, so do not confuse the two.) Each stage uses a different residual block, and the key to ResNet is the structure inside the residual block.

We cut out the middle part of the ResNet34 structure, starting from stage1 and going down. The specific structure is shown in the figure below:

Using the stage labels to compare the two figures makes it much clearer how ResNet's residual blocks are connected. Besides the ordinary convolutions, the most striking feature is that each residual block has either a solid line or a dashed line next to it.

By repeating these residual blocks and controlling the number of layers, the convolution kernel size and the number of channels in each block, we get the different ResNets. Whichever ResNet variant it is, its network structure can be understood with the same logic.

So what is the difference between the solid and dashed residual edges in the figure? The conclusion first:

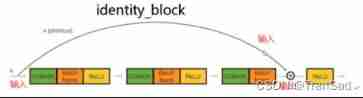

A solid residual edge connects directly to the result. It is just a plain line and corresponds to identity_block.

A dashed residual edge actually contains a 1*1 convolution that changes the number of channels. It corresponds to convolution_block.

From stage2 onward, every stage starts with one convolution_block followed by several identity_block. (See the ResNet diagram below: blue is convolution_block, red is identity_block.)
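The ordering of blocks described above can be laid out with a short sketch. It assumes the ResNet34 configuration of (3, 4, 6, 3) blocks per stage and, following "from stage2 onward", assumes that stage1 consists only of identity_block; the function name `block_layout` is made up for illustration.

```python
def block_layout(blocks_per_stage):
    """Return (stage name, list of block types) for each stage."""
    layout = []
    for stage, n in enumerate(blocks_per_stage, start=1):
        if stage == 1:
            # Assumption: stage1 keeps the channel count, so no projection is needed.
            blocks = ["identity_block"] * n
        else:
            # Later stages change the channel count once, at their first block.
            blocks = ["convolution_block"] + ["identity_block"] * (n - 1)
        layout.append((f"stage{stage}", blocks))
    return layout


layout = block_layout([3, 4, 6, 3])
for name, blocks in layout:
    n_conv = blocks.count("convolution_block")
    n_id = blocks.count("identity_block")
    print(f"{name}: {n_conv} convolution_block, {n_id} identity_block")
```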

Specifically, why does a stage need a convolution_block at the beginning? As mentioned above, the residual edge of a convolution_block is a 1*1 convolution (see the figure below).

The previous article covered what 1*1 convolution does. The function used here is expanding the number of channels. Why expand the number of channels? Consider the convolution_block that corresponds to the dashed line in the figure below:

At point a the feature map has 64 channels. After two 3*3 convolutions with 128 kernels each, the number of channels at b becomes 128. If the residual edge from a to b did nothing, a 64-channel feature map would be added directly to a 128-channel one, which is not valid. If instead the 64-channel feature map passes through a 1*1 convolution with 128 kernels, its number of channels is doubled and the addition becomes well defined. This is the role of the 1*1 convolution: it lets the residual edge be used where the number of channels doubles, and the whole block becomes a convolution_block.
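The channel mismatch and its fix can be reproduced with a small sketch in plain Python. It assumes feature maps stored as nested lists indexed `[channel][row][col]`, placeholder weights, and a tiny 2*2 spatial size that is kept fixed for simplicity (in the real block the feature map also shrinks). The helper names `conv1x1` and `add_maps` are made up for illustration.

```python
def conv1x1(feature_map, kernels):
    """1*1 convolution: at every pixel, mix the input channels linearly.

    feature_map: [C_in][H][W], kernels: [C_out][C_in] -> result: [C_out][H][W]
    """
    c_in = len(feature_map)
    h, w = len(feature_map[0]), len(feature_map[0][0])
    return [
        [[sum(k[c] * feature_map[c][i][j] for c in range(c_in)) for j in range(w)]
         for i in range(h)]
        for k in kernels
    ]


def add_maps(a, b):
    """Element-wise addition; only valid when the channel counts match."""
    if len(a) != len(b):
        raise ValueError(f"cannot add {len(a)} channels to {len(b)} channels")
    return [[[x + y for x, y in zip(ra, rb)] for ra, rb in zip(ma, mb)]
            for ma, mb in zip(a, b)]


c_in, c_out, h, w = 64, 128, 2, 2
at_a = [[[1.0] * w for _ in range(h)] for _ in range(c_in)]       # input at point a
main_path = [[[0.5] * w for _ in range(h)] for _ in range(c_out)]  # stand-in for the two 3*3 convolutions
kernels = [[0.01] * c_in for _ in range(c_out)]                   # 128 kernels of size 1*1 (placeholder weights)

projected = conv1x1(at_a, kernels)    # 64 channels -> 128 channels
at_b = add_maps(main_path, projected)  # now the addition is well defined
print(len(at_a), "->", len(projected), "channels; output has", len(at_b), "channels")
```

Calling `add_maps(main_path, at_a)` instead raises a `ValueError`, which is the 64-versus-128 mismatch that the 1*1 convolution on the residual edge removes.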

The identity_block is very simple (see the figure below): its residual edge is just a plain edge, corresponding to all the solid lines. When the size and the number of channels are the same at the input and the output, the input can be added directly to the output.

In short, throughout the ResNet structure, from input to output, the feature maps keep getting smaller while their number keeps growing. Whenever the number of feature maps increases, the residual edge needs a 1*1 convolution to expand its number of channels as well, so that its shape stays consistent with the result of the normal convolution path.

Like NiN, ResNet ends with global average pooling. Here, however, the number of channels is no longer required to equal the number of outputs so that global average pooling yields the result directly. Instead, a fully connected layer is added to produce the result. (Its number of input neurons equals the number of channels of the final convolution result, for example 512; its number of output neurons equals the number of result dimensions, for example 10.)
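The shapes involved in this final step can be traced with a minimal sketch. It uses the 512 channels and 10 outputs from the example above; the 7*7 spatial size of the last feature map, the dummy feature values and the all-zero weights are assumptions made only so the code runs, and the helper names are made up for illustration.

```python
def global_average_pool(feature_map):
    """[C][H][W] -> [C]: average every channel over all of its spatial positions."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def fully_connected(x, weights, bias):
    """[C] -> [N]: one weighted sum (plus bias) per output neuron."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


channels, outputs = 512, 10
feature_map = [[[float(c)] * 7 for _ in range(7)] for c in range(channels)]  # dummy [512][7][7] input
weights = [[0.0] * channels for _ in range(outputs)]  # placeholder [10][512] weights
bias = [0.0] * outputs

pooled = global_average_pool(feature_map)         # 512 values, one per channel
logits = fully_connected(pooled, weights, bias)  # 10 values, one per result dimension
print(len(pooled), len(logits))  # prints 512 10
```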

A brief summary :

This article sorted out the basic idea and overall structure of the ResNet network, mentioned some of its advantages, and distinguished between convolution_block and identity_block.

Finally, ResNet uses Batch Normalization in many places. It was deliberately left out of the discussion above. Batch Normalization is mainly used to address vanishing and exploding gradients, and for now it can be regarded as an operation that changes neither the size nor the number of channels. There is more to say about BN, which I will add later.

边栏推荐

- Première formation sur les largeurs modernes

- 9 r remove missing values

- Usage methods and cases of PLSQL blocks, cursors, functions, stored procedures and triggers of Oracle Database

- 7905 and TL431 negative voltage regulator circuit - regulator and floating circuit relative to the positive pole of the power supply

- In 2021, the global revenue of minoxidil will be about 1035million US dollars, and it is expected to reach 1372.6 million US dollars in 2028

- Final examination of theory and practice of socialism with Chinese characteristics 1

- Edit the project steps to run QT and opencv in the clion

- 2022-2028 current situation and future development trend of fuel cell market for cogeneration application in the world and China

- [Err] 1045 - Access denied for user ‘root‘@‘%‘ (using password: YES)

- Current situation and future development trend of global and Chinese cogeneration system market from 2022 to 2028

猜你喜欢

ICML 2022 𞓜 rethinking anomaly detection based on structured data: what kind of graph neural network do we need

27. this指向问题

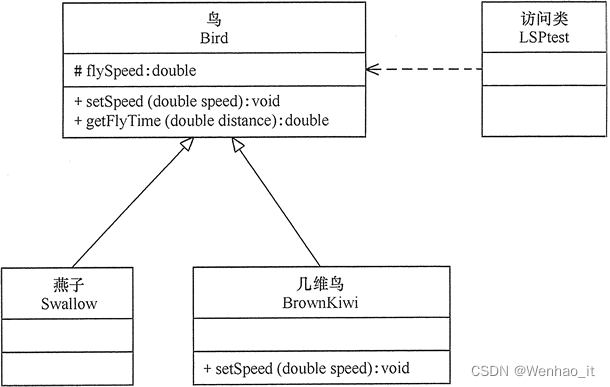

里氏替换原则

unity package manager starting server stuck(Unity啟動卡在starting server,然後報錯)

Lanqi technology joins in, and dragon dragon dragon community welcomes leading chip design manufacturers again

8 r subset

2022年最新宁夏建筑八大员(标准员)考试试题及答案

2022Redis7.0x版本持久化详细讲解

模拟Oracle锁等待与手工解锁

In 2021, the global revenue of minoxidil will be about 1035million US dollars, and it is expected to reach 1372.6 million US dollars in 2028

随机推荐

Are there any techniques for 3D modeling?

Black circle display implementation

2022-2028 current situation and future development trend of fuel cell market for cogeneration application in the world and China

Interpretation of OCP function of oceanbase Community Edition

8 r subset

Oracle case: ora-00600: internal error code, arguments: [4187]

Flutter doctor shows the solution that Xcode is not installed

A Mechanics-Informed Artificial Neural Network Approach in Data-Driven Constitutive Modeling 学习

模拟Oracle锁等待与手工解锁

STC hardware only automatic download circuit V2

sql优化之DATE_FORMAT()函数

浅谈UGUI中Canvas RectTransform的Scale

黑圆圈显示实现

Shanghai internal promotion 𞓜 Yuyang teacher's research group of Shanghai Chizhi research institute recruits full-time researchers

Two end carry output character

2022-2028 global and Chinese thermopile detector Market Status and future development trend

vectorDrawable使用报错

My favorite product management template - Lenny

These postgraduate entrance examination majors are easy to be confused. If you make a mistake, you will take the exam in vain!

10 R vector operation construction