OpenKruise v1.2: New PersistentPodState Pins Stateful Pod Topology and Enables IP Reuse

2022-06-09 11:05:00 【Alibaba cloud native】

Author: Wang Siyu (Jiuzhu)

OpenKruise, the cloud native application automation management suite and a CNCF Sandbox project, recently released v1.2.

OpenKruise [1] is a suite of enhanced capabilities for Kubernetes, focused on the deployment, upgrade, operation and maintenance, and stability protection of cloud native applications. All features are delivered through CRDs and similar extension mechanisms, and they work on any Kubernetes cluster of version 1.16 or later. A single helm command deploys Kruise, with no further configuration required.

Release highlights

In v1.2, OpenKruise adds a new CRD and controller named PersistentPodState, adds new fields to CloneSet status and to the lifecycle hook, and makes several optimizations to PodUnavailableBudget.

1. New CRD and controller: PersistentPodState

As cloud native adoption grows, more and more companies are deploying stateful services (such as Etcd and MQ) on Kubernetes. K8s StatefulSet is the workload for managing stateful services, and it accounts for their deployment characteristics in many ways. However, StatefulSet preserves only limited Pod state, such as ordered, stable Pod names and persistent PVCs. It does not cover other state that Pods may need to keep, for example fixed-IP scheduling or preferring the Node a Pod was previously deployed on. Typical cases are:

Service discovery middleware is extremely sensitive to the IP of a deployed Pod and requires that the IP not change arbitrarily.

Database services persist data to the host disk, so changing the Node a Pod belongs to causes data loss.

To address these cases, Kruise introduces the PersistentPodState CRD, which preserves other Pod state such as "fixed-IP scheduling".

The YAML of a PersistentPodState resource object looks like this:

    apiVersion: apps.kruise.io/v1alpha1
    kind: PersistentPodState
    metadata:
      name: echoserver
      namespace: echoserver
    spec:
      targetRef:
        # Native k8s or kruise StatefulSet
        # Only the StatefulSet type is supported
        apiVersion: apps.kruise.io/v1beta1
        kind: StatefulSet
        name: echoserver
      # Required node affinity: after a rebuild, the Pod is forcibly scheduled to the same zone
      requiredPersistentTopology:
        nodeTopologyKeys:
          failure-domain.beta.kubernetes.io/zone[,other node labels]
      # Preferred node affinity: after a rebuild, the Pod is preferentially scheduled to the same Node
      preferredPersistentTopology:
        - preference:
            nodeTopologyKeys:
              kubernetes.io/hostname[,other node labels]
          # int, [1 - 100]
          weight: 100

"Fixed-IP scheduling" is a common requirement when deploying stateful services on K8s. It does not mean "deploy with a specified Pod IP". It means that after a Pod's first deployment, routine operations such as business releases or machine eviction do not change the Pod IP. To achieve this, the K8s network component must first be able to retain the Pod IP and keep it unchanged where possible. For this article, the host-local plugin in the flannel network component was modified so that a Pod keeps the same IP on the same Node. The underlying principles are not covered here; see host-local [2].

If network component support is enough for "fixed-IP scheduling", what does PersistentPodState have to do with it? The answer is that a network component can keep the Pod IP unchanged only under certain restrictions. For example, flannel can keep the Pod IP unchanged only on the same Node. The defining trait of K8s scheduling, however, is its uncertainty, so "how to ensure a rebuilt Pod is scheduled to the same Node" is exactly the problem PersistentPodState solves.

In addition, you can add the following annotations to a StatefulSet or Advanced StatefulSet so that Kruise automatically creates a PersistentPodState object for it, which saves you from creating every PersistentPodState by hand.
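The idea of "remember where the Pod ran, then constrain where it may be rebuilt" can be illustrated with a small Go sketch. This is a conceptual illustration only, not Kruise's controller code: the helper names `recordTopology` and `matches` and the node label values are hypothetical, and the real controller works through node affinity rather than direct label comparison.

```go
package main

import "fmt"

const (
	hostnameKey = "kubernetes.io/hostname"
	zoneKey     = "failure-domain.beta.kubernetes.io/zone"
)

// recordTopology copies the values of the given topology keys from the labels
// of the Node a Pod currently runs on. Keys missing from the Node are skipped.
// (Hypothetical helper for illustration.)
func recordTopology(nodeLabels map[string]string, keys []string) map[string]string {
	recorded := make(map[string]string, len(keys))
	for _, k := range keys {
		if v, ok := nodeLabels[k]; ok {
			recorded[k] = v
		}
	}
	return recorded
}

// matches reports whether a candidate Node carries every recorded label value.
// (Hypothetical helper for illustration.)
func matches(recorded, candidateLabels map[string]string) bool {
	for k, v := range recorded {
		got, ok := candidateLabels[k]
		if !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	// Hypothetical Nodes: node-a hosted the Pod before it was rebuilt.
	nodeA := map[string]string{hostnameKey: "node-a", zoneKey: "zone-1"}
	nodeB := map[string]string{hostnameKey: "node-b", zoneKey: "zone-1"}
	nodeC := map[string]string{hostnameKey: "node-c", zoneKey: "zone-2"}

	required := recordTopology(nodeA, []string{zoneKey})
	preferred := recordTopology(nodeA, []string{hostnameKey})

	for name, labels := range map[string]map[string]string{"node-a": nodeA, "node-b": nodeB, "node-c": nodeC} {
		fmt.Printf("%s: required=%v preferred=%v\n", name, matches(required, labels), matches(preferred, labels))
	}
}
```

In this sketch node-b satisfies the required zone but not the preferred hostname, so it remains a valid fallback, while node-c is ruled out entirely.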

    apiVersion: apps.kruise.io/v1alpha1
    kind: StatefulSet
    metadata:
      annotations:
        # Automatically generate the PersistentPodState object
        kruise.io/auto-generate-persistent-pod-state: "true"
        # Preferred node affinity: after a rebuild, the Pod is preferentially scheduled to the same Node
        kruise.io/preferred-persistent-topology: kubernetes.io/hostname[,other node labels]
        # Required node affinity: after a rebuild, the Pod is forcibly scheduled to the same zone
        kruise.io/required-persistent-topology: failure-domain.beta.kubernetes.io/zone[,other node labels]

2. CloneSet: changed calculation logic for percentage partition, and a new status field

In the past, CloneSet calculated its partition count by rounding up (when partition is a percentage). This means that even if you set partition to a percentage below 100%, CloneSet might not upgrade any Pod to the new version. For example, for a CloneSet with replicas=8 and partition=90%, the calculated partition value is 8 (8 * 90% rounded up), so it performs no upgrade for the time being. This sometimes confuses users, especially when rollout components such as Kruise Rollout or Argo are involved.

Therefore, starting from v1.2, CloneSet guarantees that when partition is a percentage below 100%, at least 1 Pod is upgraded, unless the CloneSet has replicas <= 1.

However, this makes the calculation logic harder for users to understand, while during a partition upgrade they still need to know the expected number of upgraded Pods in order to judge whether the batch is complete.

So we also added the expectedUpdatedReplicas field to CloneSet status, which directly shows how many Pods are expected to be upgraded under the current partition. Users only need to compare status.updatedReplicas >= status.expectedUpdatedReplicas, together with updatedReadyReplicas, to determine whether the current release phase is complete.

    apiVersion: apps.kruise.io/v1alpha1
    kind: CloneSet
    spec:
      replicas: 8
      updateStrategy:
        partition: 90%
    status:
      replicas: 8
      expectedUpdatedReplicas: 1
      updatedReplicas: 1
      updatedReadyReplicas: 1
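The partition rule described above can be sketched in Go as follows. This is a minimal illustration of the arithmetic, not Kruise's actual implementation: the function names and the trimmed-down `CloneSetStatus` struct are hypothetical, the percentage is assumed to be an integer in [0, 100], and the readiness comparison in `batchComplete` is an assumption about how updatedReadyReplicas would typically be checked.

```go
package main

import "fmt"

// CloneSetStatus holds only the status fields used in this sketch.
type CloneSetStatus struct {
	Replicas                int
	ExpectedUpdatedReplicas int
	UpdatedReplicas         int
	UpdatedReadyReplicas    int
}

// expectedUpdated returns how many Pods are expected to be upgraded for a
// percentage partition, following the v1.2 rule described in the text.
func expectedUpdated(replicas, partitionPercent int) int {
	// Round up: ceil(replicas * partitionPercent / 100).
	partition := (replicas*partitionPercent + 99) / 100
	// v1.2 rule: a percentage below 100% upgrades at least one Pod,
	// unless replicas <= 1.
	if partitionPercent < 100 && replicas > 1 && partition >= replicas {
		partition = replicas - 1
	}
	return replicas - partition
}

// batchComplete reports whether the current release phase looks finished.
// Requiring the expected number of updated Pods to also be ready is an
// assumption made for this sketch.
func batchComplete(s CloneSetStatus) bool {
	return s.UpdatedReplicas >= s.ExpectedUpdatedReplicas &&
		s.UpdatedReadyReplicas >= s.ExpectedUpdatedReplicas
}

func main() {
	status := CloneSetStatus{
		Replicas:                8,
		ExpectedUpdatedReplicas: expectedUpdated(8, 90),
		UpdatedReplicas:         1,
		UpdatedReadyReplicas:    1,
	}
	fmt.Println(status.ExpectedUpdatedReplicas, batchComplete(status))
}
```

For replicas=8 and partition=90%, plain rounding up would give a partition of 8 and upgrade nothing. The adjustment lowers it to 7, so one Pod is expected to be upgraded, which matches the status shown above.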

3. Setting the Pod to not-ready during the lifecycle hook stage

Kruise introduced the lifecycle hook feature in an earlier version. CloneSet and Advanced StatefulSet both support the PreDelete and InPlaceUpdate hooks, while Advanced DaemonSet currently supports only the PreDelete hook.

In the past, these hooks only blocked the current operation and let users run custom actions before and after a Pod is deleted or upgraded in place (for example, removing the Pod from the service endpoints). However, the Pod is likely still in the Ready state during these stages, and removing it from a custom service implementation at that point goes somewhat against Kubernetes convention: generally, only Pods in the NotReady state are removed from the service endpoints.

Therefore, in this version we added the markPodNotReady field to the lifecycle hook. It controls whether the Pod is forced into the NotReady state while it is in the hook stage.

    type LifecycleStateType string

    // Lifecycle contains the hooks for Pod lifecycle.
    type Lifecycle struct {
        // PreDelete is the hook before Pod to be deleted.
        PreDelete *LifecycleHook `json:"preDelete,omitempty"`
        // InPlaceUpdate is the hook before Pod to update and after Pod has been updated.
        InPlaceUpdate *LifecycleHook `json:"inPlaceUpdate,omitempty"`
    }

    type LifecycleHook struct {
        LabelsHandler     map[string]string `json:"labelsHandler,omitempty"`
        FinalizersHandler []string          `json:"finalizersHandler,omitempty"`

        /********************** FEATURE STATE: 1.2.0 ************************/
        // MarkPodNotReady = true means:
        // - Pod will be set to 'NotReady' at preparingDelete/preparingUpdate state.
        // - Pod will be restored to 'Ready' at Updated state if it was set to 'NotReady' at preparingUpdate state.
        // Default to false.
        MarkPodNotReady bool `json:"markPodNotReady,omitempty"`
        /*********************************************************************/
    }

For a PreDelete hook configured with markPodNotReady: true, the Pod is set to NotReady in the PreparingDelete stage, and such a Pod cannot return to the normal state even if the replicas value is scaled up again.

For an InPlaceUpdate hook configured with markPodNotReady: true, the Pod is set to NotReady in the PreparingUpdate stage, and the forced NotReady state is removed in the Updated stage.
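To show how the new field appears in a serialized hook, the following Go sketch copies the two structs from the definition above and marshals a PreDelete hook with markPodNotReady enabled. The finalizer name `example.io/unregister` and the helper `renderLifecycle` are hypothetical and exist only for this illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// LifecycleHook mirrors the struct shown in the text.
type LifecycleHook struct {
	LabelsHandler     map[string]string `json:"labelsHandler,omitempty"`
	FinalizersHandler []string          `json:"finalizersHandler,omitempty"`
	MarkPodNotReady   bool              `json:"markPodNotReady,omitempty"`
}

// Lifecycle mirrors the struct shown in the text.
type Lifecycle struct {
	PreDelete     *LifecycleHook `json:"preDelete,omitempty"`
	InPlaceUpdate *LifecycleHook `json:"inPlaceUpdate,omitempty"`
}

// renderLifecycle serializes the lifecycle configuration to JSON.
// (Hypothetical helper for illustration.)
func renderLifecycle(l Lifecycle) (string, error) {
	out, err := json.Marshal(l)
	if err != nil {
		return "", err
	}
	return string(out), nil
}

func main() {
	l := Lifecycle{
		PreDelete: &LifecycleHook{
			// Hypothetical finalizer that blocks deletion until it is removed.
			FinalizersHandler: []string{"example.io/unregister"},
			// Force the Pod to NotReady while the hook is pending.
			MarkPodNotReady: true,
		},
	}
	s, err := renderLifecycle(l)
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
}
```

Because the field is tagged `omitempty` and defaults to false, existing hook configurations serialize exactly as before unless markPodNotReady is explicitly set.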

4. PodUnavailableBudget: support for custom workloads and performance optimization

Kubernetes itself provides PodDisruptionBudget to help users protect highly available applications, but it only covers the eviction scenario. PodUnavailableBudget protects application high availability and SLA more comprehensively across a wide range of disruptive operations: besides Pod eviction, it also covers other operations that make Pods unavailable, such as deletion and in-place upgrade.

In the past, PodUnavailableBudget supported only certain specific workloads, such as CloneSet and Deployment, and did not recognize unknown user-defined workloads.

Starting from v1.2, PodUnavailableBudget can protect the Pods of any custom workload, as long as the workload declares the scale subresource.

In a CRD, the scale subresource is declared as follows:

    subresources:
      scale:
        labelSelectorPath: .status.labelSelector
        specReplicasPath: .spec.replicas
        statusReplicasPath: .status.replicas

However, if your project is generated with kubebuilder or operator-sdk, you only need to add one comment line to your workload's type definition and re-run make manifests:

// +kubebuilder:subresource:scale:specpath=.spec.replicas,statuspath=.status.replicas,selectorpath=.status.labelSelector
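As a rough illustration of where that marker sits, the sketch below defines a hypothetical `MyWorkload` type whose fields line up with the three paths in the marker. It is simplified to standard-library types so it runs on its own; a real kubebuilder type would also embed `metav1.TypeMeta` and `metav1.ObjectMeta`, and all type names and values here are assumptions.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// MyWorkloadSpec is a hypothetical spec; .spec.replicas is the specpath.
type MyWorkloadSpec struct {
	Replicas *int32 `json:"replicas,omitempty"`
}

// MyWorkloadStatus is a hypothetical status; .status.replicas is the
// statuspath and .status.labelSelector (a serialized selector string)
// is the selectorpath.
type MyWorkloadStatus struct {
	Replicas      int32  `json:"replicas"`
	LabelSelector string `json:"labelSelector,omitempty"`
}

// +kubebuilder:subresource:scale:specpath=.spec.replicas,statuspath=.status.replicas,selectorpath=.status.labelSelector

// MyWorkload is a hypothetical custom workload. Real kubebuilder types also
// embed metav1.TypeMeta and metav1.ObjectMeta, omitted here for brevity.
type MyWorkload struct {
	Spec   MyWorkloadSpec   `json:"spec,omitempty"`
	Status MyWorkloadStatus `json:"status,omitempty"`
}

// render serializes the workload so the JSON paths used by the marker are visible.
func render(w MyWorkload) (string, error) {
	out, err := json.Marshal(w)
	if err != nil {
		return "", err
	}
	return string(out), nil
}

func main() {
	replicas := int32(3)
	w := MyWorkload{
		Spec:   MyWorkloadSpec{Replicas: &replicas},
		Status: MyWorkloadStatus{Replicas: 3, LabelSelector: "app=demo"},
	}
	s, err := render(w)
	if err != nil {
		panic(err)
	}
	fmt.Println(s)
}
```

The printed JSON contains `spec.replicas`, `status.replicas`, and `status.labelSelector`, which are the three paths the scale subresource declaration points at.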

In addition, PodUnavailableBudget improves runtime performance in large-scale clusters by disabling the default DeepCopy operation on client list calls.

5. Other changes

You can visit the GitHub release [3] page to see more changes, along with their authors and commit records.

Community participation

You are very welcome to join us through GitHub, Slack, DingTalk, or WeChat and take part in the OpenKruise open source community. Do you already have something you want to discuss with the community? You can share it at our biweekly community meeting (https://shimo.im/docs/gXqmeQOYBehZ4vqo), or join the discussion through the following channels:

Join the community Slack channel (English): https://kubernetes.slack.com/?redir=%2Farchives%2Fopenkruise

Join the community DingTalk group: search for group number 23330762 (Chinese)

Join the community WeChat group (new): add the user openkruise and let the bot pull you into the group (Chinese)

Reference links:

[1] OpenKruise:

[2] host-local: https://github.com/openkruise/samples

[3] GitHub release: https://github.com/openkruise/kruise/releases

stamp here , see OpenKruise project github Home page !!

边栏推荐

- 33 - nodejs simple proxy pool (it's estimated that it's over) the painful experience of using proxy by SuperAgent and the need to be careful

- Web3 的“中国特色”

- Lua call principle demonstration (Lua stack)

- 复杂嵌套的对象池(2)——管理单个实例对象的对象池

- 多线程中编译错误

- NFT market has entered the era of aggregation, and okaleido has become the first aggregation platform on BNB chain

- 线程池的实现

- 字符串切割 group by

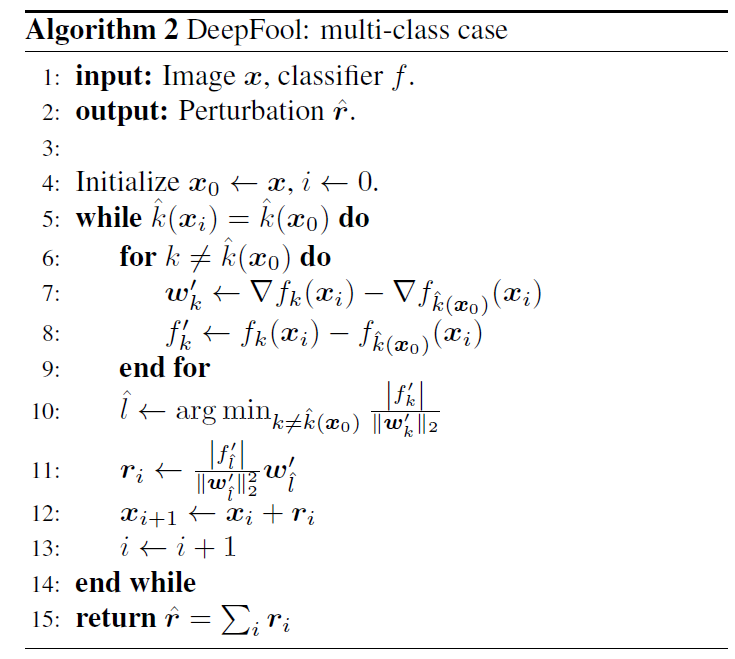

- 论文阅读 (54):DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks

- 基于任务调度的企业级分布式批处理方案

猜你喜欢

Cyclodextrin metal organic framework loaded low molecular weight heparin and adriamycin (MOF metal organic framework loaded biological macromolecular drugs)

C# 图片验证码简单例子

全局组织结构控制之抢滩登陆

![[image enhancement] image enhancement based on sparse representation and regularization with matlab code](/img/0c/3755a74a68ae7ac8232774881d618a.png)

[image enhancement] image enhancement based on sparse representation and regularization with matlab code

肆拾伍- 正则表达式 (?=pattern) 以及 (?!pattern)

论文阅读 (54):DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks

AI candidates scored 48 points in challenging the composition of the college entrance examination; IBM announced its withdrawal from the Russian market and has suspended all business in Russia; Opencv

Forty five - regular expressions (? =pattern) and (?! pattern)

字符串切割 group by

excel条件格式使用详细步骤

随机推荐

Network planning | units of each layer in OSI model

Learning fuzzy from SQL injection to bypass the latest safe dog WAF

Is it safe for the securities company with the lowest fees to open an account

Stop watch today

web开发交流,web开发实例教程

Qt-Char实现动态波形显示

From "no one cares" to comprehensive competition, the first 10 years of domestic industrial control safety

error NU1202: Package Volo.Abp.Cli 5.2.1 is not compatible with netcoreapp3.1

Is Huatai Securities safe? I want to open an account

三拾壹- NodeJS簡單代理池(合) 之 MongoDB 鏈接數爆炸了

MOS管从入门到精通

How much do you know, deep analysis, worth collecting

How to pass the MySQL database header song training task stored procedure?

Thirty nine - SQL segment / group summary of data content

Mathematical formula display

Cyclodextrin metal organic framework loaded low molecular weight heparin and adriamycin (MOF metal organic framework loaded biological macromolecular drugs)

Configurationmanager pose flash

Two Sum

Cyclodextrin metal organic framework( β- Cd-mof) loaded with dimercaptosuccinic acid( β- CD-MOF/DMSA) β- Drug loading mechanism of cyclodextrin metal organic framework

字符串切割 group by