当前位置:网站首页>高考录取分数线爬虫

高考录取分数线爬虫

2022-07-02 12:08:00 【jidawanghao】

# -*- coding: utf-8 -*-

'''

作者 : dy

开发时间 : 2021/6/15 17:15

'''

import aiohttp

import asyncio

import pandas as pd

from pathlib import Path

from tqdm import tqdm

import time

current_path = Path.cwd()

def get_url_list(max_id):

url = 'https://static-data.eol.cn/www/2.0/school/%d/info.json'

not_crawled = set(range(max_id))

if Path.exists(Path(current_path, 'college_info.csv')):

df = pd.read_csv(Path(current_path, 'college_info.csv'))

not_crawled -= set(df['学校id'].unique())

return [url%id for id in not_crawled]

async def get_json_data(url, semaphore):

async with semaphore:

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36',

}

async with aiohttp.ClientSession(connector=aiohttp.TCPConnector(ssl=False), trust_env=True) as session:

try:

async with session.get(url=url, headers=headers, timeout=6) as response:

# 更改相应数据的编码格式

response.encoding = 'utf-8'

# 遇到IO请求挂起当前任务,等IO操作完成执行之后的代码,当协程挂起时,事件循环可以去执行其他任务。

json_data = await response.json()

if json_data != '':

# print(f"{url} collection succeeded!")

return save_to_csv(json_data['data'])

except:

return None

def save_to_csv(json_info):

save_info = {}

save_info['学校id'] = json_info['school_id'] # 学校id

save_info['学校名称'] = json_info['name'] # 学校名字

level = ""

if json_info['f985'] == '1' and json_info['f211'] == '1':

level += "985 211"

elif json_info['f211'] == '1':

level += "211"

else:

level += json_info['level_name']

save_info['学校层次'] = level # 学校层次

save_info['软科排名'] = json_info['rank']['ruanke_rank'] # 软科排名

save_info['校友会排名'] = json_info['rank']['xyh_rank'] # 校友会排名

save_info['武书连排名'] = json_info['rank']['wsl_rank'] # 武书连排名

save_info['QS世界排名'] = json_info['rank']['qs_world'] # QS世界排名

save_info['US世界排名'] = json_info['rank']['us_rank'] # US世界排名

save_info['学校类型'] = json_info['type_name'] # 学校类型

save_info['省份'] = json_info['province_name'] # 省份

save_info['城市'] = json_info['city_name'] # 城市名称

save_info['所处地区'] = json_info['town_name'] # 所处地区

save_info['招生办电话'] = json_info['phone'] # 招生办电话

save_info['招生办官网'] = json_info['site'] # 招生办官网

df = pd.DataFrame(save_info, index=[0])

header = False if Path.exists(Path(current_path, 'college_info.csv')) else True

df.to_csv(Path(current_path, 'college_info.csv'), index=False, mode='a', header=header)

async def main(loop):

# 获取url列表

url_list = get_url_list(5000)

# 限制并发量

semaphore = asyncio.Semaphore(500)

# 创建任务对象并添加到任务列表中

tasks = [loop.create_task(get_json_data(url, semaphore)) for url in url_list]

# 挂起任务列表

for t in tqdm(asyncio.as_completed(tasks), total=len(tasks)):

await t

if __name__ == '__main__':

start = time.time()

# 修改事件循环的策略

asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())

# 创建事件循环对象

loop = asyncio.get_event_loop()

# 将任务添加到事件循环中并运行循环直至完成

loop.run_until_complete(main(loop))

# 关闭事件循环对象

loop.close()

df = pd.read_csv(Path(current_path, 'college_info.csv'))

df.drop_duplicates(keep='first', inplace=True)

df.reset_index(drop=True, inplace=True)

df.sort_values('学校id', inplace=True)

df.loc[df['软科排名'] == 0, '软科排名'] = 999

df.to_csv(Path(current_path, 'college_info.csv'), index=False)

print(f'采集完成,共耗时:{round(time.time() - start, 2) } 秒')+680边栏推荐

- Implementation of n queen in C language

- 你不知道的Set集合

- 搭载TI AM62x处理器,飞凌FET6254-C核心板首发上市!

- 03.golang初步使用

- 15_ Redis_ Redis. Conf detailed explanation

- SQL stored procedure

- PHP method to get the index value of the array item with the largest key value in the array

- How to solve the problem of database content output

- 6.12 企业内部upp平台(Unified Process Platform)的关键一刻

- 13_ Redis_ affair

猜你喜欢

Base64 coding can be understood this way

18_Redis_Redis主从复制&&集群搭建

Mavn builds nexus private server

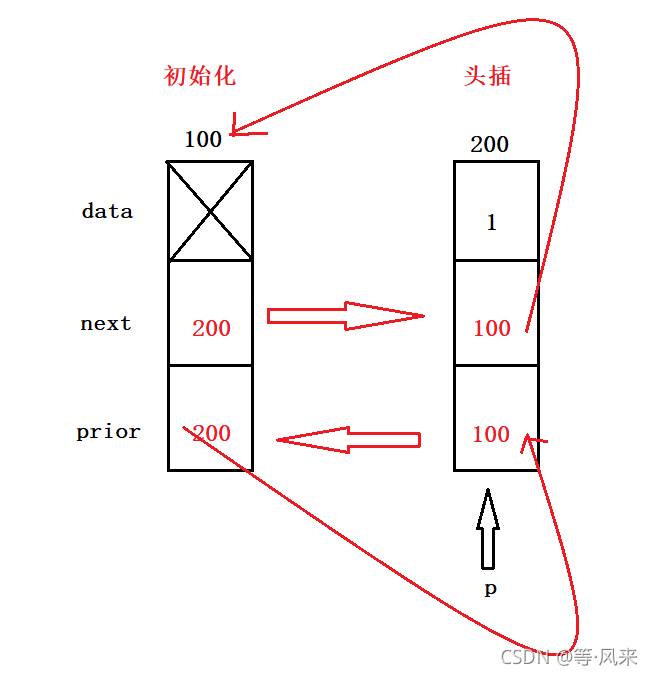

03_线性表_链表

LeetCode刷题——去除重复字母#316#Medium

14_ Redis_ Optimistic lock

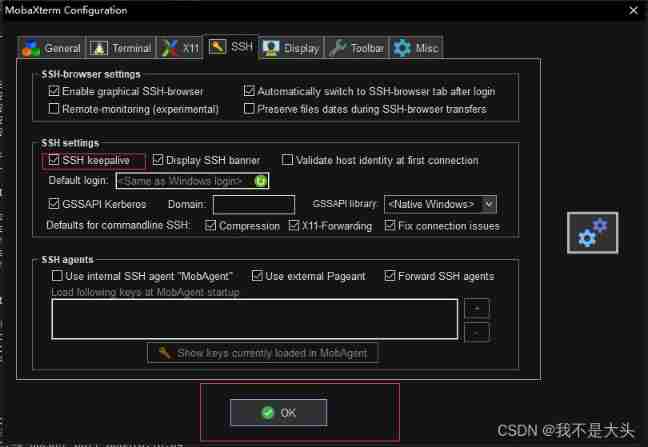

Solve the problem of frequent interruption of mobaxterm remote connection

How to choose a third-party software testing organization for automated acceptance testing of mobile applications

YOLOV5 代码复现以及搭载服务器运行

XML配置文件

随机推荐

Guangzhou Emergency Management Bureau issued a high temperature and high humidity chemical safety reminder in July

How to find a sense of career direction

TiDB混合部署拓扑

Leetcode skimming -- sum of two integers 371 medium

08_ strand

JVM architecture, classloader, parental delegation mechanism

Map介绍

Leetcode skimming -- verifying the preorder serialization of binary tree # 331 # medium

N皇后问题的解决

. Solution to the problem of Chinese garbled code when net core reads files

04. Some thoughts on enterprise application construction after entering cloud native

MySQL calculate n-day retention rate

Huffman tree: (1) input each character and its weight (2) construct Huffman tree (3) carry out Huffman coding (4) find hc[i], and get the Huffman coding of each character

Data analysis thinking analysis methods and business knowledge - business indicators

YOLOV5 代码复现以及搭载服务器运行

20_ Redis_ Sentinel mode

Engineer evaluation | rk3568 development board hands-on test

03_线性表_链表

百变大7座,五菱佳辰产品力出众,人性化大空间,关键价格真香

18_ Redis_ Redis master-slave replication & cluster building