
Long article review: entropy, free energy, symmetry and dynamics in the brain

2022-07-04 00:51:00 [Zhiyuan Community]

Paper title:

Entropy, Free Energy, Symmetry and Dynamics in the Brain

Paper link: https://iopscience.iop.org/article/10.1088/2632-072X/ac4bec/meta

Abstract:

Neuroscience is home to concepts and theories rooted in various fields, including information theory, dynamical systems theory, and cognitive psychology. Not all of these fields can be coherently connected: some concepts are incommensurable, and domain-specific terminology creates obstacles to integration. Nevertheless, conceptual integration remains a form of understanding that provides intuition and consolidation; without it, progress lacks direction. This paper focuses on integrating deterministic and stochastic processes within an information-theoretic framework, thereby linking information entropy and free energy to the mechanisms of emergent dynamics and self-organization in brain networks. We identify basic properties of neuronal populations that give rise to an equivariant matrix in the network; in such a network, complex behaviors can be represented by structured flows on manifolds, which establish the internal models relevant to theories of brain function. We propose a neural mechanism that generates internal models through symmetry breaking in brain network connectivity. The emerging picture illustrates how free energy relates to internal models and how these arise in the neural substrate.
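The notion of an equivariant connectivity matrix can be illustrated with a toy example. The sketch below assumes a hypothetical ring of identical nodes with distance-dependent coupling (the functions `ring_connectivity` and `cyclic_shift` are illustrative, not the paper's construction). Equivariance here means the connectivity matrix commutes with the symmetry operation, a cyclic shift of the nodes; strengthening a single connection breaks that symmetry.

```python
import numpy as np

def cyclic_shift(n: int) -> np.ndarray:
    """Permutation matrix P that moves the value at node i to node i+1 (mod n)."""
    return np.roll(np.eye(n), 1, axis=0)

def ring_connectivity(n: int, w_near: float = 1.0, w_far: float = 0.2) -> np.ndarray:
    """Hypothetical ring network: each node couples to its two neighbours (w_near)
    and to the nodes two steps away (w_far). Every row is a shifted copy of the
    first, so the matrix is circulant."""
    first_row = np.zeros(n)
    first_row[[1, -1]] = w_near
    first_row[[2, -2]] = w_far
    return np.array([np.roll(first_row, i) for i in range(n)])

def is_equivariant(W: np.ndarray, P: np.ndarray, tol: float = 1e-12) -> bool:
    """W is equivariant under P if applying P before or after W gives the same result."""
    return np.allclose(P @ W, W @ P, atol=tol)

n = 8
P = cyclic_shift(n)
W = ring_connectivity(n)
print(is_equivariant(W, P))          # True: the ring symmetry is intact

W_broken = W.copy()
W_broken[0, 1] += 0.5                # strengthen a single connection
print(is_equivariant(W_broken, P))   # False: the symmetry is broken
```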

Introduction

Predictive coding is one of the most influential contemporary theories of brain function [1-3]. It rests on the intuition that the brain operates as a Bayesian inference system in which an internal generative model predicts the external world. These predictions are continuously compared with sensory input, producing prediction errors that update the internal model (see Figure 1). From a theoretical point of view, expressing brain function in terms of predictive coding is attractive because it touches on many abstract concepts from different fields, and thus offers an opportunity to link them into an integrated framework: dynamics, deterministic and stochastic influences, emergence, self-organization [4-6], information, entropy, free energy [7,8], steady states, and so on. Such cross-domain integration supports an intuitive understanding of these complex concepts, although, for the sake of tractability, the different levels of complexity of a given concept are usually not described on an equal footing. For example, predictive coding theories use simple internal models (in decision making, for instance, the dynamics are reduced to transitions between states), but such simple models are difficult to generalize to more complex behaviors. This simplification is justified when the focus is on the inference part of the process, but that is not our aim. Instead, we emphasize the neural basis of internal models in brain activity and the emergence of complex behavior. However, given the importance of information-theoretic concepts in predictive coding (especially entropy and free energy), such an attempt requires integrating them with the probability distribution functions and the associated deterministic and stochastic influences present in contemporary brain network models (for similar views, see [9-11]).

Figure 1: Illustration of the inference process under the Bayesian brain hypothesis. The generative model on the left represents internal neural dynamics; it exchanges information with the outside world through perception and action (right), which update the model.
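The prediction-error loop described above can be sketched for the simplest possible case. This is a minimal sketch, assuming a hypothetical linear Gaussian generative model in which a hidden cause mu produces a sensory sample s ~ N(theta*mu, var_s) with prior mu ~ N(mu_prior, var_p); all parameter names and values are illustrative. The internal estimate is updated by gradient steps driven by the sensory and prior prediction errors, and for this model it converges to the exact Bayesian posterior mean.

```python
def predictive_coding_estimate(s, theta=2.0, mu_prior=0.0, var_s=1.0, var_p=1.0,
                               lr=0.05, steps=500):
    """Infer a hidden cause mu from one sensory sample s by descending the
    prediction errors of a hypothetical linear Gaussian generative model."""
    mu = mu_prior                                  # start from the prior belief
    for _ in range(steps):
        eps_s = (s - theta * mu) / var_s           # sensory prediction error
        eps_p = (mu - mu_prior) / var_p            # prior prediction error
        mu += lr * (theta * eps_s - eps_p)         # update the internal estimate
    return mu

s = 3.0
mu_hat = predictive_coding_estimate(s)

# Closed-form posterior mean of the same linear Gaussian model, for comparison
theta, var_s, var_p, mu_prior = 2.0, 1.0, 1.0, 0.0
mu_exact = (theta * s / var_s + mu_prior / var_p) / (theta**2 / var_s + 1 / var_p)
print(round(mu_hat, 6), round(mu_exact, 6))   # both 1.2
```

Real predictive coding models use nonlinear, hierarchical generative models; the linear case is chosen only because its fixed point can be checked against a closed-form answer.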

Karl Friston first proposed free energy as a principle of brain function [12-14], describing mathematically how adaptive, self-organizing systems resist the natural (thermodynamic) tendency toward disorder. Over time, the free-energy principle evolved from the notion of free energy used in the Helmholtz machine, first to explain cortical responses in the context of predictive coding, and then into a general principle for agents, also known as active inference [15]. Both Bayesian inference and the maximum information principle can in fact be restated as free-energy minimization problems. Although free energy appears in these two related frameworks, the two notions are not fully equivalent. The ambiguity may stem from the fact that both share the general form of the thermodynamic Helmholtz free energy, yet arise from two different lines of reasoning (see [15]). The first is the so-called "free energy from constraints", which corresponds to the minimum free energy under the maximum information principle and represents the trade-off between deterministic constraints and stochastic influences [7]. This type of free energy and its constraints are our main concern. The second is the variational free energy, which is associated with the Bayesian brain hypothesis. This notion of free energy stems from a restatement of Bayes' rule as an optimization problem: finding the probability distribution that minimizes the relative entropy (KL divergence), which measures the deviation from the exact Bayesian posterior.
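The variational reading of free energy can be checked numerically on a small discrete model. This sketch assumes a hypothetical model with three hidden states x and a single observation y (the prior and likelihood values are made up). It evaluates F[q] = sum_x q(x)[ln q(x) - ln p(x, y)], which equals KL(q || p(x|y)) - ln p(y), so F is minimized, with value -ln p(y), exactly when q is the Bayesian posterior.

```python
import numpy as np

def variational_free_energy(q, joint):
    """F[q] = sum_x q(x) [ln q(x) - ln p(x, y)] for a fixed observation y.
    `joint` holds p(x, y) over the hidden states x; q must be strictly positive."""
    q = np.asarray(q, dtype=float)
    return float(np.sum(q * (np.log(q) - np.log(joint))))

# Hypothetical discrete model: three hidden states x, one observed y
prior = np.array([0.5, 0.3, 0.2])        # p(x)
likelihood = np.array([0.1, 0.6, 0.3])   # p(y | x) for the observed y
joint = prior * likelihood               # p(x, y)
evidence = joint.sum()                   # p(y)
posterior = joint / evidence             # exact Bayesian posterior p(x | y)

F_post = variational_free_energy(posterior, joint)
F_other = variational_free_energy([1 / 3, 1 / 3, 1 / 3], joint)

# F = KL(q || p(x|y)) - ln p(y): its minimum, -ln p(y), is reached at the posterior
print(np.isclose(F_post, -np.log(evidence)))   # True
print(F_other > F_post)                          # True
```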

The deterministic constraints are expressed dynamically within the framework of structured flows on manifolds (SFMs) [16,17]. Structured flows are low-dimensional dynamical systems that emerge in the network and are therefore prime candidates for representing internal models in theories of the brain. Correlations of empirical functions (such as firing rate, energy, and variance) connect free energy and SFMs through the mediation of the probability distribution, which is shaped by the interplay of deterministic and stochastic influences in the system. Ilya Prigogine elaborated on the close relationship between these functions when explaining the meaning of entropy. In his account, time goes beyond the notions of repetition and degradation and reaches the notion of constructive irreversibility, as exemplified by living systems, which perpetuate themselves by exchanging entropy with their environment. Biology is thought to require irreversible time to be inscribed in matter. In the context of neuroscience, this recalls Ingvar's hypothesis that the brain, through its temporally polarized structure, can simulate "memories of the future" [20], and that it maintains and navigates past, present, and future experiences distributed across different brain regions.
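To give a concrete feel for a structured flow on a manifold, here is a toy two-variable system. It is a hypothetical example, not the paper's model: a fast variable v is attracted on time scale tau onto the manifold v = u**2, and the slow variable u then flows along that manifold according to the bistable law du/dt = u - u**3. After a short transient, the trajectory lies on the manifold and the low-dimensional flow on it alone determines the behavior.

```python
import numpy as np

def simulate_sfm(u0=0.1, v0=2.0, tau=0.01, dt=1e-3, t_end=20.0):
    """Toy structured flow on a manifold (hypothetical example).
    Fast variable v collapses onto the manifold v = u**2 on time scale tau;
    slow variable u flows along it with du/dt = u - u**3, a bistable flow
    with stable fixed points at u = +1 and u = -1."""
    n = int(t_end / dt)
    u, v = u0, v0
    traj = np.empty((n, 2))
    for i in range(n):
        du = u - u**3                    # slow flow on the manifold
        dv = -(v - u**2) / tau           # fast attraction to the manifold
        u, v = u + dt * du, v + dt * dv  # explicit Euler step (dt/tau = 0.1 is stable)
        traj[i] = u, v
    return traj

traj = simulate_sfm()
u_end, v_end = traj[-1]
print(abs(v_end - u_end**2) < 1e-3)   # True: the state lies on the manifold
print(abs(u_end - 1.0) < 1e-3)        # True: it has settled on a stable fixed point
```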

Although the mathematical formula for entropy first appeared in the context of classical thermodynamics (involving macroscopic quantities such as heat, temperature, and energy exchange), statistical mechanics later expressed entropy as a function of the logarithms of the probabilities of the system's possible microstates. The latter has the same functional form as Shannon's information entropy, where the probabilities are those of the possible values of a variable (see Section 2.2). As Edwin T. Jaynes noted in 1957, at a deeper level the two concepts of entropy are closely linked as measures of the uncertainty represented by a probability distribution; in both cases, when the task is viewed as predicting a probability distribution constrained by observations, the distribution with maximum entropy is the only unbiased choice [7].
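As a worked illustration of the shared functional form, assume W equally likely microstates and identify Shannon's positive constant K with Boltzmann's constant k_B. The entropy of the uniform distribution then reduces to Boltzmann's formula:

$$
S = -k_B \sum_{i=1}^{W} p_i \ln p_i, \qquad p_i = \frac{1}{W}
\;\;\Longrightarrow\;\;
S = -k_B \sum_{i=1}^{W} \frac{1}{W} \ln \frac{1}{W} = k_B \ln W .
$$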

The brief discussion of the meaning of entropy above finds a theoretical framework in Hermann Haken's synergetics, which formally integrates the mathematics of the emergence of dissipative structures in far-from-equilibrium systems. Nonlinearity and instability give rise to emergence and complexity, while entropy and fluctuations give rise to irreversibility and unpredictability. The nature of these dynamics leads naturally to the concept of probability, which compensates for our inability to trace the unique trajectory of the system precisely. Synergetics has long been a driving force in applying these principles to other fields, especially the life sciences and neuroscience. Clarifying the duality of deterministic and stochastic influences and elaborating how they arise in the brain sets the stage for our vision. The goal of this article is therefore to disentangle these complex relationships and thereby unify seemingly unrelated frameworks for modeling brain dynamics.

Given the complementarity of these concepts, and for the convenience of readers from different backgrounds who are interested in modeling macroscopic brain dynamics, let us take a step back and review some basic concepts from probability theory and information theory.

The information-theoretic framework of the brain: modeling system evolution

Edwin T. Jaynes emphasized that the major conceptual advance of information theory lies in a well-defined quantity, the information entropy, which measures the amount of uncertainty represented by a probability distribution. It agrees with the intuition that a broad distribution represents more uncertainty than a sharply peaked one, while satisfying all other conditions consistent with this intuition [7]. In the absence of any information, the corresponding probability distribution is completely uninformative, that is, its information entropy is maximal. Given some deterministic constraints, such as the measured mean of a physical observable, the method of Lagrange multipliers (Lagrange's equations of the first kind) yields the probability distribution that maximizes the information entropy under these constraints (equivalent to minimizing the free energy [7,12]). In this sense, considerations of entropy take precedence over the discussion of deterministic influences and lead to the conclusion that the maximum entropy distribution should be adopted: it agrees with the outcomes of all deterministic influences, yet beyond that it remains maximally noncommittal with regard to missing information. Once entropy is taken as the primary concept, the relationships between free energy, probability distribution functions, SFMs, and other related quantities follow naturally. These relationships manifest in the real world as correlations that are physically accessible by measuring functions of the system's state variables, and in principle they allow all parameters of the system to be estimated systematically.
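The intuition that broad distributions carry more uncertainty than peaked ones can be checked directly. This minimal sketch uses three made-up distributions over six states and computes their information entropy in nats (K = 1); with no constraint beyond normalization, the uniform distribution attains the largest possible value, ln 6.

```python
import numpy as np

def shannon_entropy(p, K=1.0):
    """Information entropy H = -K * sum_i p_i ln p_i (in nats for K = 1).
    Zero-probability states contribute nothing, since p ln p -> 0 as p -> 0."""
    p = np.asarray(p, dtype=float)
    nz = p > 0
    return float(-K * np.sum(p[nz] * np.log(p[nz])))

n = 6
uniform = np.full(n, 1 / n)                                  # no information at all
broad = np.array([0.10, 0.15, 0.25, 0.25, 0.15, 0.10])      # wide distribution
peaked = np.array([0.01, 0.02, 0.90, 0.04, 0.02, 0.01])     # sharply peaked

for name, p in [("uniform", uniform), ("broad", broad), ("peaked", peaked)]:
    print(f"{name:8s} H = {shannon_entropy(p):.3f} nats")

# With no constraints beyond normalization, the uniform distribution has the
# largest entropy, ln(n)
assert np.isclose(shannon_entropy(uniform), np.log(n))
```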

2.1. Predictive coding and its modeling framework

Predictive coding and its related concepts rest essentially on three core equations:

$$p(x,y) = p(y \mid x)\, p(x)$$

$$\frac{dQ}{dt} = f(Q,k) + v(t)$$

$$Z(t) = h\big(Q(t)\big) + w(t)$$

The first equation establishes a simplified form of Bayes' theorem in terms of the probability distribution function p, where p(x,y) is the joint probability of the state variables x and y, and p(y|x) is the conditional probability of y given x. In the Bayesian framework, parameters and state variables have a similar status in a certain sense: both can be described by distributions and enter the probability function p as arguments. For example, the joint probability of the states x and y given the parameters k can be written as p(x,y|k), which specifies how likely it is to obtain a data set (x,y) for a given set of parameter values k, where the prior distribution of k is p(k). The prior represents our knowledge about the model and its initial values.
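The role of the prior p(k) and the likelihood can be made concrete with a grid-based posterior. This is a sketch under hypothetical assumptions: a single parameter k in [0, 1] is the probability that a binary state y equals 1, the prior is flat on a grid, and the observations are made up. Bayes' rule multiplies likelihood and prior and renormalizes.

```python
import numpy as np

# Hypothetical example: parameter k in [0, 1] is the probability that a binary
# state y equals 1. Discretize k on a grid.
k_grid = np.linspace(0.0, 1.0, 101)
prior = np.ones_like(k_grid) / k_grid.size      # p(k): flat prior on the grid

data = np.array([1, 1, 0, 1, 1, 0, 1, 1])       # hypothetical observations of y
n_ones = data.sum()
n_zeros = data.size - n_ones

likelihood = k_grid**n_ones * (1 - k_grid)**n_zeros   # p(data | k)
joint = likelihood * prior                             # p(data, k) = p(data | k) p(k)
posterior = joint / joint.sum()                        # p(k | data) via Bayes' rule

k_map = k_grid[np.argmax(posterior)]
print(k_map)   # close to 6/8 = 0.75 (6 ones out of 8 observations)
```

With a flat prior the posterior mode coincides with the maximum-likelihood estimate; a non-flat prior would pull it toward the prior's mass.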

The second equation, the Langevin equation, determines the generative model (technically more precise formulations in terms of stochastic calculus can be found, for example, in [4]; here we assume Itô calculus). Brain activity at the level of neural sources is described by the N-dimensional state vector $Q = (x, y, \dots) \in \mathbb{R}^N$. The term f(Q,k) represents the deterministic influences, expressed as a flow vector $f \in \mathbb{R}^N$ that depends on the state Q and the parameters k (or a set of parameters {k}). The term $v \in \mathbb{R}^N$ captures the fluctuating influences and is usually assumed to be Gaussian white noise with $\langle v_i(t)\, v_j(t') \rangle = c\,\delta_{ij}\,\delta(t-t')$, where $\delta_{ij}$ is the Kronecker delta and $\delta(t-t')$ is the Dirac delta function. More general formulations of noise effects (including multiplicative or colored noise) exist; we refer the reader to the relevant literature.
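A Langevin equation of this form can be integrated numerically with the Euler–Maruyama scheme, which is consistent with the Itô interpretation. This sketch assumes a hypothetical linear flow f(Q, k) = -kQ (an Ornstein–Uhlenbeck process) in two dimensions; the noise correlation c enters as a Gaussian increment of variance c*dt per step. For this flow the stationary variance is c/(2k), which the simulation should approximately reproduce.

```python
import numpy as np

def euler_maruyama(f, q0, k, c, dt, n_steps, rng):
    """Integrate dQ/dt = f(Q, k) + v(t) in the Ito sense, where v is Gaussian
    white noise with <v_i(t) v_j(t')> = c delta_ij delta(t - t').
    Over a step dt the noise contributes a Gaussian increment of variance c*dt."""
    q = np.array(q0, dtype=float)
    out = np.empty((n_steps, q.size))
    for t in range(n_steps):
        noise = rng.normal(0.0, np.sqrt(c * dt), size=q.size)
        q = q + f(q, k) * dt + noise      # deterministic drift + stochastic kick
        out[t] = q
    return out

def linear_relaxation(q, k):
    """Hypothetical flow f(Q, k) = -k Q (an Ornstein-Uhlenbeck process)."""
    return -k * q

rng = np.random.default_rng(0)
k, c, dt = 1.0, 0.5, 1e-2
traj = euler_maruyama(linear_relaxation, np.zeros(2), k, c, dt, 100_000, rng)

# Discard the initial transient; the variance should be close to c / (2k) = 0.25
var = traj[1000:].var(axis=0)
print(var)
```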

The third equation establishes the observation model, which links the source activity Q(t) to the experimentally recorded sensor signals Z(t) through the forward model h(Q) and the measurement noise w. For EEG, h is the gain matrix determined by Maxwell's equations; for fMRI, h is given by neurovascular coupling and the Balloon–Windkessel model of hemodynamics. In the present context the observation model plays no role, but it is very important in real-world applications and is usually the main confounding factor in model inversion and parameter estimation. We mention these engineering problems for completeness, but for simplicity we assume that h is the identity and that the measurement noise is zero, so that Z = Q.
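The observation model can be sketched as a function that maps source time series to sensor signals. This assumes a hypothetical random linear forward model `G` standing in for an EEG gain (lead-field) matrix, plus Gaussian measurement noise; it also shows the simplification adopted in the text, where h is the identity and w = 0, so the sensors reproduce the sources exactly.

```python
import numpy as np

def observe(Q, h, noise_std, rng):
    """Observation model Z(t) = h(Q(t)) + w(t): map source activity to sensors
    through a forward model h and add Gaussian measurement noise w."""
    Z = h(Q)
    return Z + rng.normal(0.0, noise_std, size=Z.shape) if noise_std > 0 else Z

rng = np.random.default_rng(1)
n_sources, n_sensors, n_times = 4, 6, 200
Q = rng.standard_normal((n_times, n_sources))        # source time series (rows = time)

# Hypothetical linear forward model, standing in for an EEG gain (lead-field) matrix
G = rng.standard_normal((n_sensors, n_sources))
Z_eeg = observe(Q, lambda q: q @ G.T, noise_std=0.1, rng=rng)

# Simplification used in the text: h is the identity and w = 0, so Z = Q
Z_ident = observe(Q, lambda q: q, noise_std=0.0, rng=rng)
print(Z_eeg.shape, np.array_equal(Z_ident, Q))       # (200, 6) True
```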

Predictive coding connects to a major research field in behavioral neuroscience [21,22], in particular ecological psychology and dynamical systems research on perception and action [23,24]. Here, James J. Gibson emphasized the importance of the environment [25], especially how an organism's environment offers it possibilities for various behaviors. This perception-action cycle closely matches the cycle of prediction and updating of the internal generative model in predictive coding. Ecological psychology, however, emphasizes a nuance: it is ecologically available information, as opposed to exteroceptive or interoceptive sensation, that gives rise to perception-action dynamics. Scott Kelso and colleagues contributed greatly to formalizing this framework and developed experimental paradigms for testing the dynamical properties of the internal models underlying the coordination between organism and environment [26-28]. These paradigms were theoretically inspired by Hermann Haken's synergetics [4], which has long been a foundation of self-organization theory. This led to a large body of research on transitions between perception-action states, including the modeling of bisensory and multisensory motor coordination [29-32] and systematic experimental tests [33-44]. These approaches were subsequently extended to a wider range of paradigms [45-53], aimed at extracting the main features of the interaction between organism and environment [22]. The substantial evidence produced by these works supports a dynamical description of behavior, and it can be accommodated within the SFM framework as functional perception-action variables in behavior [48] and in the brain [54-56].

2.2. Maximum information principle

To understand the deterministic and stochastic processes that determine the discrete values $x_i$ of a state variable x, we compute the corresponding probability distribution $p_i$. Shannon proved the remarkable fact that there exists a quantity $H(p_1, \dots, p_n)$ that uniquely measures the uncertainty represented by such a probability distribution [8]. In his original proof, he showed that requiring three basic conditions, in particular the composition law for events and their probabilities, naturally leads to the expression

$$H(p_1, \dots, p_n) = -K \sum_{i=1}^{n} p_i \ln p_i .$$

Here K is a positive constant. The measure H corresponds directly to the expression for entropy in statistical mechanics and is called the information entropy [7]. Its properties are usually discussed in terms of the probabilities assigned on the basis of the information available to the observer. In the absence of any distinguishing information, Laplace's principle of insufficient reason assigns equal probabilities to two events. This subjective school of thought holds that probability is an expression of human ignorance, that is, of our expectation about whether an event will occur. This view underlies predictive coding theory and its account of the brain's generative cognitive processes. The objective school of thought, rooted in physics, regards probability as an objective property of events, which can in principle always be measured as the frequency of the events in random experiments. By studying deterministic and stochastic processes under predictive coding, we hope to remain neutral on this point. References to deterministic and stochastic influences usually belong to the language of the objective school, whereas their subsequent interpretation within the predictive coding framework belongs to the subjective school. The generative model in predictive coding represents both types of influence through the Langevin equation and thereby forms a probability function; this gives us a way to access this information through empirical measure functions $\langle g(x) \rangle$, where the angle brackets denote expectation values.
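The composition law Shannon required can be verified numerically for a concrete case. This sketch uses made-up probabilities and checks the grouping property: choosing among three outcomes in one step carries the same entropy as first choosing between {x1} and {x2, x3} and then, with probability p2 + p3, choosing within the group. A related consequence, additivity of entropy for independent variables, is checked as well.

```python
import numpy as np

def H(p, K=1.0):
    """Shannon information entropy H = -K sum_i p_i ln p_i."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]                       # 0 ln 0 is taken as 0
    return float(-K * np.sum(p * np.log(p)))

# Composition (grouping) law: a three-way choice equals a two-stage choice,
# weighting the second stage by the probability of reaching it.
p1, p2, p3 = 0.5, 0.3, 0.2
g = p2 + p3
lhs = H([p1, p2, p3])
rhs = H([p1, g]) + g * H([p2 / g, p3 / g])
print(np.isclose(lhs, rhs))            # True

# Related property: entropies of independent variables add, H(p x q) = H(p) + H(q)
p = np.array([0.7, 0.3])
q = np.array([0.2, 0.5, 0.3])
print(np.isclose(H(np.outer(p, q).ravel()), H(p) + H(q)))   # True
```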

The correlations $\langle g(x) \rangle$ and the normalization requirement $\sum_i p_i = 1$ express the constraints imposed by deterministic and stochastic influences, under which the information entropy attains its maximum. Any assignment other than the one that maximizes the information entropy would introduce additional deterministic biases or arbitrary assumptions that we have not made. This insight is the essence of Edwin T. Jaynes' maximum information principle [7]. Therefore, following the method commonly used in classical mechanics and introducing Lagrange parameters, we maximize the information entropy H (taking K = 1) and obtain

$$p_i = e^{-\lambda_0 - \lambda_1 g(x_i)},$$

where $\lambda_0$ enforces normalization and $\lambda_1$ enforces the constraint on $\langle g(x) \rangle$.

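Generalizing to several measure functions $g_j(x_i)$ of the events $x_i$, the distribution becomes

$$p_i = \exp\Big[-\lambda_0 - \sum_{j=1}^{m} \lambda_j\, g_j(x_i)\Big],$$

and the maximized entropy S of this distribution is

$$S_{\max} = \lambda_0 + \sum_{j=1}^{m} \lambda_j \langle g_j(x) \rangle .$$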

The relationship between the empirical measures and the maximum information entropy is determined by the partition function Z (the "sum over states", Zustandssumme):

$$Z(\lambda_1, \dots, \lambda_m) = \sum_i \exp\Big[-\sum_{j=1}^{m} \lambda_j\, g_j(x_i)\Big].$$

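These quantities are related by

$$\lambda_0 = \ln Z, \qquad \langle g_j(x) \rangle = -\frac{\partial \ln Z}{\partial \lambda_j} .$$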

Through these equations, the correlations obtained from the empirical measures $\langle g_j(x) \rangle$ are related to the stationary probability distribution function and, further, to the generative model expressed by the Langevin equation (that is, to the deterministic influences).
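The maximum-entropy relations can be made concrete with a small numerical sketch for a discrete variable. It assumes a hypothetical six-state variable (the faces of a die) with a single measure function g(x) = x and a hypothetical measured mean of 4.5 instead of the unconstrained 3.5. The Lagrange parameter is found by bisection, which works because the mean of g decreases monotonically in lambda; the script then checks the partition-function relations numerically.

```python
import numpy as np

x = np.arange(1, 7, dtype=float)   # faces of a die: the discrete states x_i
g = x                              # single measure function g(x) = x (hypothetical)

def log_partition(lam):
    """ln Z(lambda) = ln sum_i exp(-lambda g(x_i))."""
    return float(np.log(np.sum(np.exp(-lam * g))))

def maxent_distribution(lam):
    """p_i = exp(-lambda_0 - lambda g(x_i)) with lambda_0 = ln Z."""
    return np.exp(-log_partition(lam) - lam * g)

def solve_lambda(target_mean, lo=-10.0, hi=10.0, tol=1e-12):
    """Find lambda such that <g> = target_mean by bisection.
    <g> decreases monotonically in lambda because d<g>/d lambda = -Var(g) < 0."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if maxent_distribution(mid) @ g > target_mean:
            lo = mid                      # mean too large: increase lambda
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical constraint: the measured average face value is 4.5
lam = solve_lambda(4.5)
p = maxent_distribution(lam)
mean_g = p @ g
S = -np.sum(p * np.log(p))

# Check <g> = -d ln Z / d lambda (central difference) and S_max = ln Z + lambda <g>
h = 1e-6
dlnZ = (log_partition(lam + h) - log_partition(lam - h)) / (2 * h)
print(np.round(p, 4))
print(np.isclose(mean_g, 4.5), np.isclose(mean_g, -dlnZ),
      np.isclose(S, log_partition(lam) + lam * mean_g))   # True True True
```

For continuous states the same logic applies. For example, for a one-dimensional gradient flow f(Q) = -U'(Q) with additive white noise of intensity c, the stationary solution of the Fokker–Planck equation is p(Q) proportional to exp(-2U(Q)/c), which has exactly the maximum-entropy form with g = U and lambda = 2/c.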

边栏推荐

- 挖财帮个人开的证券账户安全吗?是不是有套路

- The difference between fetchtype lazy and eagle in JPA

- Pair

- Beijing invites reporters and media

- GUI application: socket network chat room

- 【.NET+MQTT】.NET6 环境下实现MQTT通信,以及服务端、客户端的双边消息订阅与发布的代码演示

- 功能:将主函数中输入的字符串反序存放。例如:输入字符串“abcdefg”,则应输出“gfedcba”。

- MySQL winter vacation self-study 2022 12 (1)

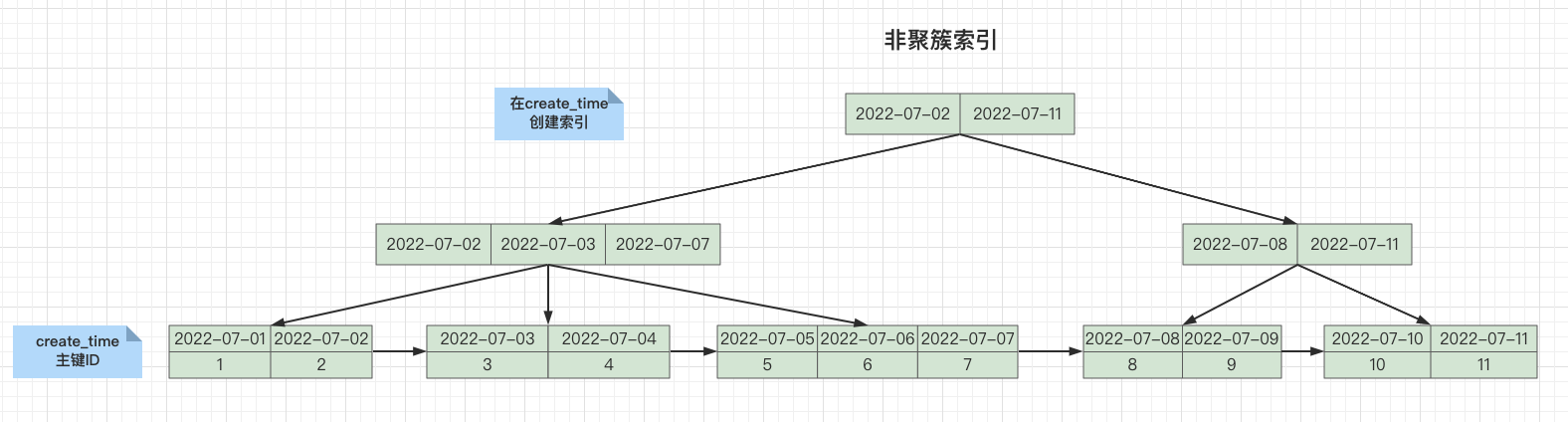

- 查询效率提升10倍!3种优化方案,帮你解决MySQL深分页问题

- 数据库表外键的设计

猜你喜欢

Query efficiency increased by 10 times! Three optimization schemes to help you solve the deep paging problem of MySQL

![[complimentary ppt] kubemeet Chengdu review: make the delivery and management of cloud native applications easier!](/img/3f/75b3125f8779e6cf9467a30fd7eeb4.jpg)

[complimentary ppt] kubemeet Chengdu review: make the delivery and management of cloud native applications easier!

【.NET+MQTT】.NET6 环境下实现MQTT通信,以及服务端、客户端的双边消息订阅与发布的代码演示

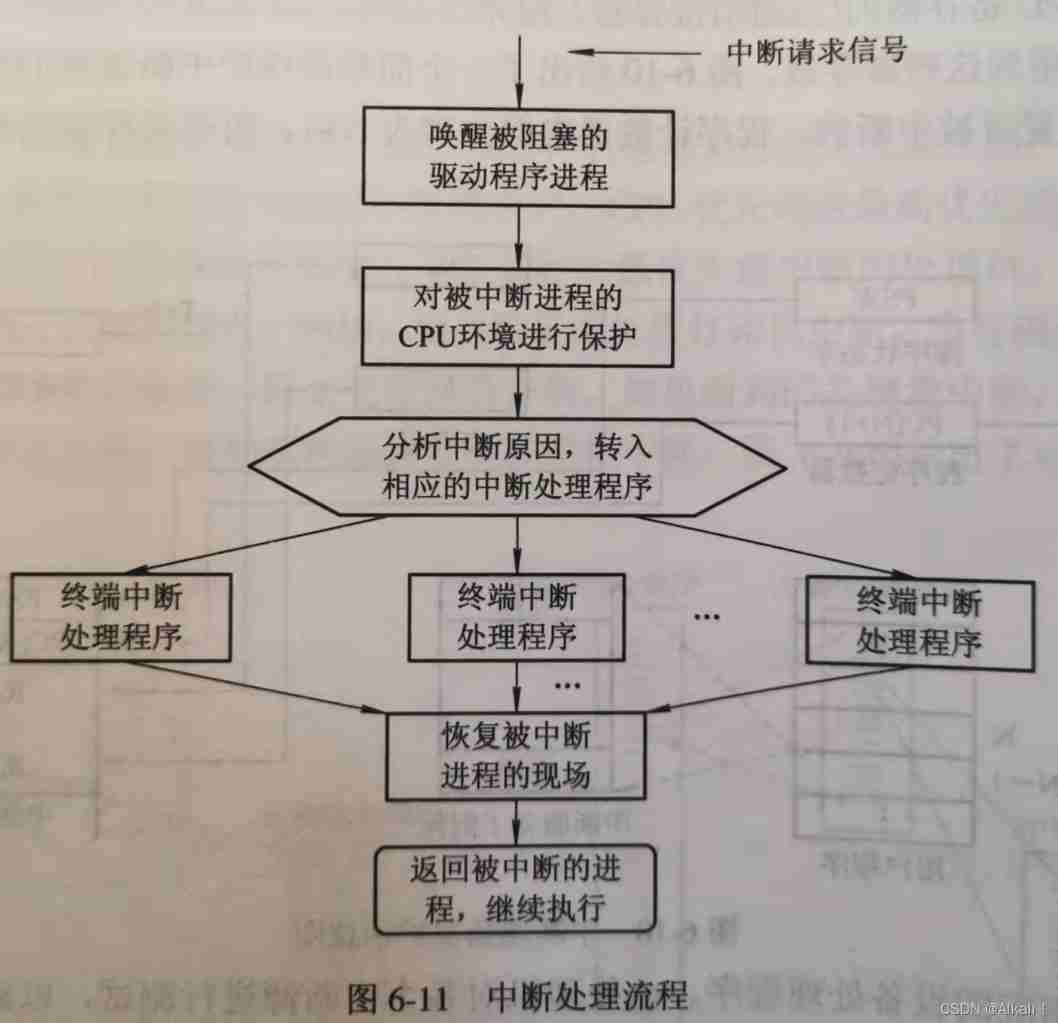

OS interrupt mechanism and interrupt handler

功能:将主函数中输入的字符串反序存放。例如:输入字符串“abcdefg”,则应输出“gfedcba”。

@EnableAsync @Async

1-Redis架构设计到使用场景-四种部署运行模式(上)

![[prefix and notes] prefix and introduction and use](/img/a6/a75e287ac481559d8f733e6ca3e59c.jpg)

[prefix and notes] prefix and introduction and use

![[common error] custom IP instantiation error](/img/de/d3f90cd224274d87fcf153bb9244d7.jpg)

[common error] custom IP instantiation error

![[error record] configure NDK header file path in Visual Studio](/img/9f/89f68c037dcf68a31a2de064dd8471.jpg)

[error record] configure NDK header file path in Visual Studio

随机推荐

Pytest unit test framework: simple and easy to use parameterization and multiple operation modes

Global and Chinese market of glossometer 2022-2028: Research Report on technology, participants, trends, market size and share

swagger中响应参数为Boolean或是integer如何设置响应描述信息

Wechat official account and synchronization assistant

Introduction to unity shader essentials reading notes Chapter III unity shader Foundation

On covariance of array and wildcard of generic type

On the day when 28K joined Huawei testing post, I cried: everything I have done in these five months is worth it

Thinkphp6 integrated JWT method and detailed explanation of generation, removal and destruction

[complimentary ppt] kubemeet Chengdu review: make the delivery and management of cloud native applications easier!

Is it really possible that the monthly salary is 3K and the monthly salary is 15K?

It's OK to have hands-on 8 - project construction details 3-jenkins' parametric construction

我管你什么okr还是kpi,PPT轻松交给你

1-redis architecture design to use scenarios - four deployment and operation modes (Part 1)

Function: write function fun to find s=1^k+2^k +3^k ++ The value of n^k, (the cumulative sum of the K power of 1 to the K power of n).

Oracle database knowledge points (IV)

PMP 考试常见工具与技术点总结

[GNN] hard core! This paper combs the classical graph network model

基于.NetCore开发博客项目 StarBlog - (14) 实现主题切换功能

What is the potential of pocket network, which is favored by well-known investors?

Sequence list and linked list