
Elementary analysis of graph convolution neural network (GCN)

2022-07-23 06:14:00 【CityD】

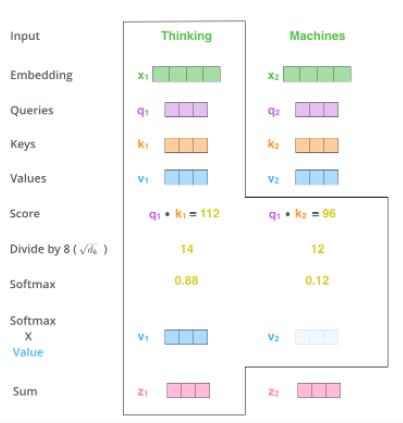

The layer-wise propagation rule of a multilayer graph convolutional network (GCN) is:

$$H^{(l+1)}=\sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}\right)$$

Here $\tilde{A}=A+I_N$, where $A$ is the adjacency matrix of the graph and $I_N$ is the identity matrix. $\tilde{D}$ is the diagonal degree matrix of $\tilde{A}$, with $\tilde{D}_{ii}=\sum_j\tilde{A}_{ij}$. $H^{(l)}$ is the matrix of node features at layer $l$, with $H^{(0)}=X$. $W^{(l)}$ is the trainable weight matrix of layer $l$, and $\sigma(\cdot)$ is an activation function.

The graph in the following figure is used as an example to show how these quantities are computed.

Its adjacency matrix $A$ is:

$$A=\begin{bmatrix}0&1&1&0\\1&0&1&0\\1&1&0&1\\0&0&1&0\end{bmatrix}$$

$\tilde{A}=A+I_N$ is:

$$\tilde{A}=\begin{bmatrix}1&1&1&0\\1&1&1&0\\1&1&1&1\\0&0&1&1\end{bmatrix}$$

$\tilde{D}$, with $\tilde{D}_{ii}=\sum_j\tilde{A}_{ij}$ (the degree matrix of $\tilde{A}$), is:

$$\tilde{D}=\begin{bmatrix}3&0&0&0\\0&3&0&0\\0&0&4&0\\0&0&0&2\end{bmatrix}$$

$\tilde{D}^{-\frac{1}{2}}$ is:

$$\tilde{D}^{-\frac{1}{2}}=\begin{bmatrix}0.57735027&0&0&0\\0&0.57735027&0&0\\0&0&0.5&0\\0&0&0&0.70710678\end{bmatrix}$$
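The matrices above can be reproduced with a few lines of NumPy. This is only a minimal sketch for the four-node example; the variable names (`A_tilde`, `deg`, `D_inv_sqrt`) are chosen here for illustration and do not come from the original code.

```python
import numpy as np

# Adjacency matrix of the four-node example graph
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)

# Add self-loops: A~ = A + I
A_tilde = A + np.eye(4)

# Degrees of A~ are its row sums; D~ is the diagonal matrix of these degrees
deg = A_tilde.sum(axis=1)
D_tilde = np.diag(deg)

# D~^(-1/2): raise each diagonal entry to the power -1/2
D_inv_sqrt = np.diag(deg ** -0.5)

print(D_tilde)
print(D_inv_sqrt.round(8))
```

Because $\tilde{D}$ is diagonal, its inverse square root only needs the elementwise power of the degree vector, which is why no general matrix function is used.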

Take the following simple undirected graph to illustrate what the computation means.

The formula is:

$$H^{(l+1)}=\sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}\right)$$

Ignore $\tilde{D}^{-\frac{1}{2}}$ for now, that is, consider only $H^{(l+1)}=\sigma\left(\tilde{A} H^{(l)} W^{(l)}\right)$.

The adjacency matrix $A$ and $\tilde{A}$ are:

$$A=\begin{bmatrix}0&1&1\\1&0&0\\1&0&0\end{bmatrix},\quad \tilde{A}=\begin{bmatrix}1&1&1\\1&1&0\\1&0&1\end{bmatrix}$$

The feature matrix formed by the feature vectors of the nodes in the graph is:

$$X=\begin{bmatrix}0.1&0.4\\0.2&0.3\\0.1&0.2\end{bmatrix}$$

When only the adjacency matrix is used, the formula is $H^{(l+1)}=\sigma\left(A H^{(l)} W^{(l)}\right)$. Leaving out the multiplication by the weights, the computation is:

$$\begin{bmatrix}0&1&1\\1&0&0\\1&0&0\end{bmatrix}\begin{bmatrix}0.1&0.4\\0.2&0.3\\0.1&0.2\end{bmatrix}=\begin{bmatrix}0.2+0.1&0.3+0.2\\0.1&0.4\\0.1&0.4\end{bmatrix}$$

The feature vector of each node becomes the sum of the feature vectors of all its neighbors. For example, the feature vector of the first node becomes [0.2+0.1, 0.3+0.2], which is the sum of the features of nodes 2 and 3. Note that the node's own features are not included in this sum.

Now consider the adjacency matrix plus the identity matrix. The formula is $H^{(l+1)}=\sigma\left(\tilde{A} H^{(l)} W^{(l)}\right)$, and the computation (again without the weights) is:

$$\begin{bmatrix}1&1&1\\1&1&0\\1&0&1\end{bmatrix}\begin{bmatrix}0.1&0.4\\0.2&0.3\\0.1&0.2\end{bmatrix}=\begin{bmatrix}0.2+0.1+0.1&0.4+0.3+0.2\\0.1+0.2&0.4+0.3\\0.1+0.1&0.4+0.2\end{bmatrix}$$

After the identity matrix is added to the adjacency matrix, the feature vector of each node becomes the sum of its own features and the features of all its neighbors.
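The two aggregation steps can be checked numerically. The sketch below uses the three-node example and omits the weights and the activation, as in the text; the names `neighbors_only` and `with_self` are illustrative.

```python
import numpy as np

# Three-node example: node 1 is connected to nodes 2 and 3
A = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 0, 0]], dtype=float)
X = np.array([[0.1, 0.4],
              [0.2, 0.3],
              [0.1, 0.2]])

# A @ X: each row is the sum of the neighbors' feature vectors
neighbors_only = A @ X

# (A + I) @ X: each row also includes the node's own feature vector
with_self = (A + np.eye(3)) @ X

print(neighbors_only)
print(with_self)
```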

If a node has many neighbors, the summed features become very large, so the result needs to be normalized. Multiplying by $\tilde{D}^{-\frac{1}{2}}$ on both sides provides this normalization, which gives the GCN layer-wise propagation rule $H^{(l+1)}=\sigma\left(\tilde{D}^{-\frac{1}{2}} \tilde{A} \tilde{D}^{-\frac{1}{2}} H^{(l)} W^{(l)}\right)$.
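As a small illustration of the effect of the normalization, the sketch below applies $\tilde{D}^{-\frac{1}{2}}\tilde{A}\tilde{D}^{-\frac{1}{2}}$ to the three-node example. Each entry of the normalized matrix equals $\tilde{A}_{ij}/\sqrt{\tilde{D}_{ii}\tilde{D}_{jj}}$, so contributions from high-degree nodes are scaled down. The variable names are assumptions made for this example.

```python
import numpy as np

A = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 0, 0]], dtype=float)
X = np.array([[0.1, 0.4],
              [0.2, 0.3],
              [0.1, 0.2]])

A_tilde = A + np.eye(3)
deg = A_tilde.sum(axis=1)              # degrees of A~: 3, 2, 2
D_inv_sqrt = np.diag(deg ** -0.5)

# Symmetrically normalized adjacency matrix
A_hat = D_inv_sqrt @ A_tilde @ D_inv_sqrt

unnormalized = A_tilde @ X             # plain sums, grow with the degree
normalized = A_hat @ X                 # degree-scaled sums

print(A_hat.round(4))
print(normalized.round(4))
```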

The following figure is a schematic diagram of a GCN. The input is a graph whose nodes have C-dimensional features. After passing through the GCN, a new graph is obtained in which the feature dimension of each node has changed (from C to F), while the structure of the graph remains unchanged.

The graph produced by the last GCN layer is used for the specific task. In a classification task, each node of the graph is usually classified, so there are two options:

- Set the number of output features of the last GCN layer equal to the number of classes, and feed them directly to softmax to output probabilities.

- After the F-dimensional features are output, add a fully connected layer that maps them to a dimension equal to the number of classes.

To sum up

Take a two-layer GCN for semi-supervised node classification on a graph with a symmetric adjacency matrix $A$ as an example. First compute $\hat{A}=\tilde{D}^{-\frac{1}{2}}\tilde{A}\tilde{D}^{-\frac{1}{2}}$ in a preprocessing step. The forward computation of the model is then:

$$Z=f(X, A)=\operatorname{softmax}\left(\hat{A} \operatorname{ReLU}\left(\hat{A} X W^{(0)}\right) W^{(1)}\right)$$

$W^{(0)}\in \mathbb{R}^{C \times H}$ is the input-to-hidden weight matrix for a hidden layer with $H$ feature dimensions. $W^{(1)}\in \mathbb{R}^{H\times F}$ is the hidden-to-output weight matrix. The softmax activation function is applied row-wise. For classification tasks, the cross-entropy loss is used, summed over the set $\mathcal{Y}_{L}$ of labeled nodes:

$$\mathcal{L}=-\sum_{l \in \mathcal{Y}_{L}} \sum_{f=1}^{F} Y_{l f} \ln Z_{l f}$$
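The forward pass and the loss can be traced on the three-node example with plain NumPy. This is a sketch under stated assumptions: the hidden size, the number of classes, the random weights, the one-hot labels and the set of labeled nodes are all made up for illustration.

```python
import numpy as np

def softmax(x):
    # Row-wise softmax with the usual max-shift for numerical stability
    e = np.exp(x - x.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)

A = np.array([[0, 1, 1], [1, 0, 0], [1, 0, 0]], dtype=float)
X = np.array([[0.1, 0.4], [0.2, 0.3], [0.1, 0.2]])

# Preprocessing step: A_hat = D~^(-1/2) A~ D~^(-1/2)
A_tilde = A + np.eye(3)
deg = A_tilde.sum(axis=1)
A_hat = A_tilde / np.sqrt(np.outer(deg, deg))

# Hypothetical sizes: C input features, H hidden units, F classes
C, H, F = 2, 4, 3
W0 = rng.normal(size=(C, H))
W1 = rng.normal(size=(H, F))

# Z = softmax(A_hat ReLU(A_hat X W0) W1)
hidden = np.maximum(A_hat @ X @ W0, 0.0)
Z = softmax(A_hat @ hidden @ W1)

# Hypothetical one-hot labels; only nodes 0 and 1 are treated as labeled
Y = np.eye(F)[[0, 2, 1]]
labeled = [0, 1]
loss = -np.sum(Y[labeled] * np.log(Z[labeled]))

print(Z.round(4))
print(loss)
```

Only the rows in `labeled` enter the loss, which is what makes the setup semi-supervised: the unlabeled node still influences the result through $\hat{A}$.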

Code section

First import the packages used.

```python
import scipy.sparse as sp
import numpy as np
import torch
import math
from torch.nn.parameter import Parameter
import torch.nn as nn
import torch.nn.functional as F
import time
import argparse
import torch.optim as optim
from visdom import Visdom
```

Code address: https://github.com/tkipf/pygcn

1. Data processing

The Cora dataset consists of machine learning papers. Each paper belongs to one of the following seven categories:

- Case_Based

- Genetic_Algorithms

- Neural_Networks

- Probabilistic_Methods

- Reinforcement_Learning

- Rule_Learning

- Theory

The Cora dataset includes two files:

- `cora.content` contains the information of all nodes. Each line consists of the node ID, the feature vector, and the category.

- `cora.cites` contains the edge information. Each line has the form `node 1, node 2`, meaning that these two nodes are connected by an edge.
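To make the two file layouts concrete, the sketch below parses a miniature, made-up version of both files from in-memory strings. The node IDs, the four-dimensional feature vectors and the whitespace-separated layout are assumptions for illustration only; the real files are far larger.

```python
import numpy as np

# Hypothetical miniature "content" file: node ID, features, category
content_lines = [
    "31336 0 1 0 1 Neural_Networks",
    "1061127 1 0 0 0 Rule_Learning",
    "1106406 0 0 1 1 Theory",
]
# Hypothetical miniature "cites" file: one edge per line
cites_lines = ["31336 1061127", "1106406 31336"]

rows = [line.split() for line in content_lines]
ids = [int(r[0]) for r in rows]                          # first column
features = np.array([r[1:-1] for r in rows], dtype=np.float32)
labels = [r[-1] for r in rows]                           # last column

# Map the original node IDs to consecutive row indices 0..N-1
idx_map = {node_id: i for i, node_id in enumerate(ids)}
edges = np.array([[idx_map[int(a)], idx_map[int(b)]]
                  for a, b in (line.split() for line in cites_lines)])

print(features.shape)
print(edges)
```

Remapping the IDs is needed because the IDs in the files are arbitrary integers, while the adjacency matrix is indexed by row position.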

The data processing code mainly does the following:

- Build the adjacency matrix from the edge information and normalize it. The paper uses the normalization $\hat{A}=\tilde{D}^{-\frac{1}{2}}\tilde{A}\tilde{D}^{-\frac{1}{2}}$, while the code uses the simpler row normalization $\hat{A}=\tilde{D}^{-1}\tilde{A}$.

- Process the feature vectors and normalize them (this normalization is not essential).

- Split the data into a training set, a validation set, and a test set.
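The difference between the two normalizations mentioned in the list above can be seen on the three-node example. In this sketch, `A_sym` and `A_row` are illustrative names: row normalization turns each row into an average (rows sum to 1) but is generally not symmetric, while the symmetric form keeps the matrix symmetric.

```python
import numpy as np

A = np.array([[0, 1, 1], [1, 0, 0], [1, 0, 0]], dtype=float)
A_tilde = A + np.eye(3)
deg = A_tilde.sum(axis=1)

# Symmetric normalization: D~^(-1/2) A~ D~^(-1/2)
A_sym = np.diag(deg ** -0.5) @ A_tilde @ np.diag(deg ** -0.5)

# Row normalization: D~^(-1) A~
A_row = np.diag(1.0 / deg) @ A_tilde

print(A_sym.round(4))
print(A_row.round(4))
```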

The code is as follows.

```python
def encode_onehot(labels):
    """Convert the labels to one-hot encoding."""
    # Collect the distinct classes; a set is unordered and has no duplicates
    classes = set(labels)
    # Assign a one-hot vector to each class: row i of the n x n identity matrix
    # (ones on the main diagonal, zeros elsewhere)
    classes_dict = {c: np.identity(len(classes))[i, :] for i, c in enumerate(classes)}
    # Replace every original label with its one-hot vector
    labels_onehot = np.array(list(map(classes_dict.get, labels)), dtype=np.int32)
    return labels_onehot


def normalize(mx):
    """Row-normalize a sparse matrix."""
    # Sum of each row
    rowsum = np.array(mx.sum(1))
    # Reciprocal of the row sums
    r_inv = np.power(rowsum, -1).flatten()
    # Rows that sum to zero give inf; set those entries to 0
    r_inv[np.isinf(r_inv)] = 0.
    # Build the diagonal matrix D^(-1)
    r_mat_inv = sp.diags(r_inv)
    # Compute D^(-1) * A. The formula in the paper is D^(-1/2) * A * D^(-1/2);
    # this is a simplified normalization
    mx = r_mat_inv.dot(mx)
    return mx


def sparse_mx_to_torch_sparse_tensor(sparse_mx):
    """Convert a scipy sparse matrix to a torch sparse tensor."""
    sparse_mx = sparse_mx.tocoo().astype(np.float32)
    indices = torch.from_numpy(
        np.vstack((sparse_mx.row, sparse_mx.col)).astype(np.int64))
    values = torch.from_numpy(sparse_mx.data)
    shape = torch.Size(sparse_mx.shape)
    # torch.sparse_coo_tensor replaces the legacy torch.sparse.FloatTensor constructor
    return torch.sparse_coo_tensor(indices, values, shape)


def load_data(path="./data/cora/", dataset="cora"):
    print('Loading {} dataset...'.format(dataset))
    # genfromtxt() loads data from a text file and parses it at the same time.
    # Shape of idx_features_labels: (number of nodes, ID + features + category) = (2708, 1435)
    idx_features_labels = np.genfromtxt("{}{}.content".format(path, dataset), dtype=np.dtype(str))
    # Take the features of each node and store them as a sparse matrix
    # (call .toarray() to view the values)
    features = sp.csr_matrix(idx_features_labels[:, 1:-1], dtype=np.float32)
    # Convert the labels to one-hot encoding
    labels = encode_onehot(idx_features_labels[:, -1])
    # Node IDs
    idx = np.array(idx_features_labels[:, 0], dtype=np.int32)
    # Dictionary from the original node ID (key) to its row index 0..N-1 (value)
    idx_map = {j: i for i, j in enumerate(idx)}
    # Read the edge information; each row holds two node IDs that form an edge
    edges_unordered = np.genfromtxt("{}{}.cites".format(path, dataset), dtype=np.int32)
    # Renumber the nodes of every edge; flatten() collapses the array to 1-D
    edges = np.array(list(map(idx_map.get, edges_unordered.flatten())),
                     dtype=np.int32).reshape(edges_unordered.shape)
    # Build the adjacency matrix; coo_matrix stores a matrix in coordinate format
    adj = sp.coo_matrix((np.ones(edges.shape[0]), (edges[:, 0], edges[:, 1])),
                        shape=(labels.shape[0], labels.shape[0]),
                        dtype=np.float32)
    # Make the adjacency matrix symmetric (undirected graph)
    adj = adj + adj.T.multiply(adj.T > adj) - adj.multiply(adj.T > adj)
    # Normalize the features (not strictly necessary)
    features = normalize(features)
    # Normalize A + I
    adj = normalize(adj + sp.eye(adj.shape[0]))
    # Split into training, validation, and test samples
    idx_train = range(140)
    idx_val = range(200, 500)
    idx_test = range(500, 1500)
    # Convert the numpy data to torch tensors
    features = torch.FloatTensor(np.array(features.todense()))
    labels = torch.LongTensor(np.where(labels)[1])
    adj = sparse_mx_to_torch_sparse_tensor(adj)
    idx_train = torch.LongTensor(idx_train)
    idx_val = torch.LongTensor(idx_val)
    idx_test = torch.LongTensor(idx_test)
    return adj, features, labels, idx_train, idx_val, idx_test
```
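The symmetrization line in `load_data` is compact, so here is a dense NumPy analogue of it on a made-up directed edge list. For a 0/1 matrix the expression is equivalent to taking the elementwise maximum of the matrix and its transpose. The dense `*` stands in for the sparse `.multiply` call, and the edge list is hypothetical.

```python
import numpy as np

# Hypothetical directed edges: 0 -> 1, 2 -> 1, 2 -> 0
adj = np.zeros((3, 3))
for src, dst in [(0, 1), (2, 1), (2, 0)]:
    adj[src, dst] = 1.0

# Positions where only the reverse edge exists
mask = adj.T > adj

# Add the missing reverse edges; positions already set are left unchanged
sym = adj + adj.T * mask - adj * mask

print(sym)
```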

2. GCN computation

The layer computes its output from the processed adjacency matrix, the weights, and the feature matrix. The operation is $\hat{A} X W$. The code is as follows:

```python
class GraphConvolution(nn.Module):
    """Simple GCN layer, similar to https://arxiv.org/abs/1609.02907"""

    def __init__(self, in_features, out_features, bias=True):
        super(GraphConvolution, self).__init__()
        # Input feature dimension
        self.in_features = in_features
        # Output feature dimension
        self.out_features = out_features
        # Weight parameters
        self.weight = Parameter(torch.FloatTensor(in_features, out_features))
        # Bias
        if bias:
            self.bias = Parameter(torch.FloatTensor(out_features))
        else:
            self.register_parameter('bias', None)
        self.reset_parameters()

    # Initialize the parameters
    def reset_parameters(self):
        stdv = 1. / math.sqrt(self.weight.size(1))
        self.weight.data.uniform_(-stdv, stdv)
        if self.bias is not None:
            self.bias.data.uniform_(-stdv, stdv)

    def forward(self, input, adj):
        # Multiply the feature matrix by the weights
        support = torch.mm(input, self.weight)
        # Then multiply by the processed (sparse) adjacency matrix
        output = torch.spmm(adj, support)
        # Add the bias if there is one
        if self.bias is not None:
            return output + self.bias
        else:
            return output

    # Used when printing the layer
    def __repr__(self):
        return self.__class__.__name__ + ' (' \
            + str(self.in_features) + ' -> ' \
            + str(self.out_features) + ')'
```
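The `forward` method computes $XW$ first and only then multiplies by the adjacency matrix. Since matrix multiplication is associative, the result is the same as $(\hat{A}X)W$. The NumPy sketch below checks this on random dense matrices; the sizes are made up, and the remark about cost is a general observation rather than a statement about the author's intent.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 5 nodes, 8 input features, 2 output features
n_nodes, in_features, out_features = 5, 8, 2
A_hat = rng.random((n_nodes, n_nodes))
X = rng.random((n_nodes, in_features))
W = rng.random((in_features, out_features))

# Order used in forward(): first X W, then A_hat (X W)
support = X @ W
output = A_hat @ support

# Alternative order: first A_hat X, then (A_hat X) W
alternative = (A_hat @ X) @ W

print(output.shape)
print(np.allclose(output, alternative))
```

When the output dimension is smaller than the input dimension, multiplying by the weights first means the adjacency product acts on a narrower matrix.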

3. Build a two-layer GCN network

Using the GCN layer above, a two-layer GCN network can be built. It also uses dropout. The formula is $\operatorname{softmax}\left(\hat{A} \operatorname{ReLU}\left(\hat{A} X W^{(0)}\right) W^{(1)}\right)$. The code, which returns the logarithm of the softmax output, is as follows:

```python
class GCN(nn.Module):
    def __init__(self, nfeat, nhid, nclass, dropout):
        super(GCN, self).__init__()
        self.gc1 = GraphConvolution(nfeat, nhid)
        self.gc2 = GraphConvolution(nhid, nclass)
        self.dropout = dropout

    def forward(self, x, adj):
        x = F.relu(self.gc1(x, adj))
        x = F.dropout(x, self.dropout, training=self.training)
        x = self.gc2(x, adj)
        return F.log_softmax(x, dim=1)
```
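The network returns log-probabilities rather than probabilities because the training code pairs them with the negative log-likelihood loss. Together the two are equivalent to the cross-entropy loss from the formula section. The NumPy sketch below checks this on made-up logits and targets; it mirrors the default mean reduction of `F.nll_loss`.

```python
import numpy as np

def log_softmax(x):
    # Row-wise log-softmax, shifted by the row maximum for stability
    shifted = x - x.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

# Hypothetical logits for two nodes and three classes, with their true classes
logits = np.array([[2.0, 0.5, -1.0],
                   [0.1, 0.2, 3.0]])
targets = np.array([0, 2])

# Negative log-likelihood: pick the log-probability of the true class
log_probs = log_softmax(logits)
nll = -log_probs[np.arange(len(targets)), targets].mean()

# Cross-entropy written with one-hot labels, as in the loss formula
probs = np.exp(log_probs)
one_hot = np.eye(3)[targets]
cross_entropy = -(one_hot * np.log(probs)).sum(axis=1).mean()

print(nll, cross_entropy)
```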

4. Model training

Define a function that computes the accuracy.

```python
def accuracy(output, labels):
    preds = output.max(1)[1].type_as(labels)
    correct = preds.eq(labels).double()
    correct = correct.sum()
    return correct / len(labels)
```

The following is the code for training the model.

```python
# Set the training parameters
parser = argparse.ArgumentParser()
parser.add_argument('--no-cuda', action='store_true', default=False,
                    help='Disables CUDA training.')
parser.add_argument('--fastmode', action='store_true', default=False,
                    help='Validate during training pass.')
parser.add_argument('--seed', type=int, default=42, help='Random seed.')
parser.add_argument('--epochs', type=int, default=200,
                    help='Number of epochs to train.')
parser.add_argument('--lr', type=float, default=0.01,
                    help='Initial learning rate.')
parser.add_argument('--weight_decay', type=float, default=5e-4,
                    help='Weight decay (L2 loss on parameters).')
parser.add_argument('--hidden', type=int, default=16,
                    help='Number of hidden units.')
parser.add_argument('--dropout', type=float, default=0.5,
                    help='Dropout rate (1 - keep probability).')

# parse_known_args() tolerates unrecognized arguments, unlike parse_args()
# args = parser.parse_args()
args = parser.parse_known_args()[0]
args.cuda = not args.no_cuda and torch.cuda.is_available()

# Set the random seeds
np.random.seed(args.seed)
torch.manual_seed(args.seed)
if args.cuda:
    torch.cuda.manual_seed(args.seed)

# Load the data
adj, features, labels, idx_train, idx_val, idx_test = load_data()

# Create the model and the optimizer
model = GCN(nfeat=features.shape[1],
            nhid=args.hidden,
            nclass=labels.max().item() + 1,
            dropout=args.dropout)
optimizer = optim.Adam(model.parameters(),
                       lr=args.lr, weight_decay=args.weight_decay)

# If a GPU is used, move the model and the data to the GPU.
# (The check must be args.cuda; model.cuda() would always try to use the GPU.)
if args.cuda:
    model.cuda()
    features = features.cuda()
    adj = adj.cuda()
    labels = labels.cuda()
    idx_train = idx_train.cuda()
    idx_val = idx_val.cuda()
    idx_test = idx_test.cuda()


# Training function
def train(epoch, viz):
    t = time.time()
    model.train()
    optimizer.zero_grad()
    output = model(features, adj)
    # Training loss and accuracy
    loss_train = F.nll_loss(output[idx_train], labels[idx_train])
    acc_train = accuracy(output[idx_train], labels[idx_train])
    loss_train.backward()
    optimizer.step()

    if not args.fastmode:
        # Evaluate validation performance in a separate pass with dropout disabled
        model.eval()
        output = model(features, adj)

    # Validation loss and accuracy
    loss_val = F.nll_loss(output[idx_val], labels[idx_val])
    acc_val = accuracy(output[idx_val], labels[idx_val])

    # Plot the accuracy curves
    viz.line(Y=np.column_stack((acc_train.cpu(), acc_val.cpu())),
             X=np.column_stack((epoch, epoch)),
             win='line1',
             opts=dict(legend=['acc_train', 'acc_val'],
                       title='acc',
                       xlabel='epoch',
                       ylabel='acc'),
             update=None if epoch == 0 else 'append'
             )
    # Plot the loss curves
    viz.line(Y=np.column_stack((loss_train.detach().cpu().numpy(), loss_val.detach().cpu().numpy())),
             X=np.column_stack((epoch, epoch)),
             win='line2',
             opts=dict(legend=['loss_train', 'loss_val'],
                       title='loss',
                       xlabel='epoch',
                       ylabel='loss'),
             update=None if epoch == 0 else 'append'
             )
    '''
    print('Epoch: {:04d}'.format(epoch + 1),
          'loss_train: {:.4f}'.format(loss_train.item()),
          'acc_train: {:.4f}'.format(acc_train.item()),
          'loss_val: {:.4f}'.format(loss_val.item()),
          'acc_val: {:.4f}'.format(acc_val.item()),
          'time: {:.4f}s'.format(time.time() - t))
    '''


# Test function
def test():
    model.eval()
    output = model(features, adj)
    loss_test = F.nll_loss(output[idx_test], labels[idx_test])
    acc_test = accuracy(output[idx_test], labels[idx_test])
    print("Test set results:",
          "loss= {:.4f}".format(loss_test.item()),
          "accuracy= {:.4f}".format(acc_test.item()))
```

Start training:

```python
t_total = time.time()
viz = Visdom(env='test1')
for epoch in range(args.epochs):
    train(epoch, viz)
print('Optimization Finished!')
print("Total time elapsed: {:.4f}s".format(time.time() - t_total))
# Testing
test()
```

```
Optimization Finished!
Total time elapsed: 8.1138s
Test set results: loss= 0.7548 accuracy= 0.8260
```

The loss curve during training is:

The accuracy curve during training is:
