当前位置:网站首页>TFIDF and BM25

TFIDF and BM25

2022-06-25 15:41:00 【xieyan0811】

TFIDF

Just to review tfidf,tf It's word frequency , That is, a word i stay article j Frequency of occurrence in . The denominator is the number of words in the passage , The denominator is a word i Number of occurrences .tf The higher it is, the more important it is , For short text matching , Each word usually appears only once ,tf The size of depends on the denominator , The length of the article .

t f i , j = n i , j ∑ k n k , j tf_{i,j}=\frac{n_{i,j}}{\sum_kn_{k,j}} tfi,j=∑knk,jni,j

idf It's reverse document frequency , Calculate the frequency of the word in all articles , here , The denominator contains the keyword i The number of articles , The numerator is the number of all articles N. use log Equivalent to the same trend , The value becomes smaller . The more the word appears , The bigger the molecule ,idf The smaller the value. , such as :“ Of ” Often appear , So it's not a keyword . Dang Ci i stay article j It doesn't appear at all , The denominator is 0, So add... To the denominator 1.

i d f i = l o g N d f i + 1 idf_i=log\frac{N}{df_i+1} idfi=logdfi+1N

tf and idf The product of is the word i In the article j Importance of .

t f i d f i , j = t f i , j × i d f i tfidf_{i,j}=tf_{i,j} \times idf_i tfidfi,j=tfi,j×idfi

In search , Calculate multiple keywords in the search string And article j The similarity : Combine the tfidf Add up :

s i m i l a r i t y = ∑ i t f i d f i , j similarity = \sum_{i} tfidf_{i,j} similarity=i∑tfidfi,j

Search before , You need to know the distribution of each word in a given article set .

BM25

BM25 Is based on TF-IDF The improved algorithm ,BM yes Best Match Acronym for best match ,25 It means the 25 Second algorithm iteration .

idf Only minor adjustments have been made in the part :

i d f i = l o g N − d f i + 0.5 d f i + 0.5 idf_i=log\frac{N-df_i+0.5}{df_i+0.5} idfi=logdfi+0.5N−dfi+0.5

The denominator part subtracts from all the articles that contain i The article ,0.5 For smoothing .

Next , Right again tf The following adjustments have been made :

t f s c o r e = ( k + 1 ) × t f k × ( 1 − b + b × L d L a v g ) + t f tfscore= \frac {(k + 1) \times tf} { k \times (1 - b + b \times \frac{L_d}{L_{avg}}) + tf} tfscore=k×(1−b+b×LavgLd)+tf(k+1)×tf

Here we introduce a super parameter k and b.

First look at the parentheses in the denominator ,Ld Is the length of the article ,Lavg Is the average length of all articles , When the article length is consistent with the average length , The value in brackets is 1, Equivalent to the UN multiplied coefficient ; When the article is shorter than the average length , The value in brackets is less than 1, The smaller the denominator , The greater the result of the above formula , That is to say, the shorter the article , The more important each word is , This is also consistent with intuition . in addition , The influence of length is related to b of ,b The bigger it is , The greater the impact ,b The value is 0-1 Between , When b by 0 when , The effect of length is completely disregarded ,b The general value is 0.75.

k The range used to standardize word frequency , take tf Value compressed to 0~k+1 Between , The function curve is as follows :

t f s c o r e = ( k + 1 ) × t f k + t f tfscore = \frac{(k + 1) \times tf}{k + tf} tfscore=k+tf(k+1)×tf

Its horizontal axis is tf, The vertical axis is tfscore, Respectively for k=0,1,2,3,4 drawing . When k=0 when ,tfscore by 1, Do not consider the influence of word frequency , and k The greater the word frequency, the closer it is to the original word frequency . therefore , If the article contains only short text , Or you don't need to pay attention to how many times the word appears , You can set it to k=0.

Sometimes the word i Frequency in search text , The above formula is expanded to :

∑ t ∈ q l o g [ N − d f i + 0.5 d f i + 0.5 ] × ( k 1 + 1 ) t f t d k 1 ( 1 − b + b × L d L a v g ) + t f t d × ( k 2 + 1 ) t f t q k 2 + t f t q \sum_{t \in q} log[\frac{N-df_i+0.5}{df_i+0.5}] \times \frac{(k_1+1)tf_{td}}{k_1(1-b+b \times \frac{L_d}{L_{avg}})+tf_{td}} \times \frac{(k_2+1)tf_{tq}}{k_2+tf_{tq}} t∈q∑log[dfi+0.5N−dfi+0.5]×k1(1−b+b×LavgLd)+tftd(k1+1)tftd×k2+tftq(k2+1)tftq

among td Refers to the searched text ,tq Refers to search text .

In this way, we can refine the control tf The proportion of , And the length of the article , To adapt to the search and matching tasks in different situations . Pay attention to setting parameters k and b.

Previous BM25 Algorithms are integrated in gensim in , The latest version is gone , If you want to use , It can be extracted from the old version .

边栏推荐

- Is it safe to open a stock account through the account opening link of the account manager?

- JMeter reading and writing excel requires jxl jar

- Shared memory synchronous encapsulation

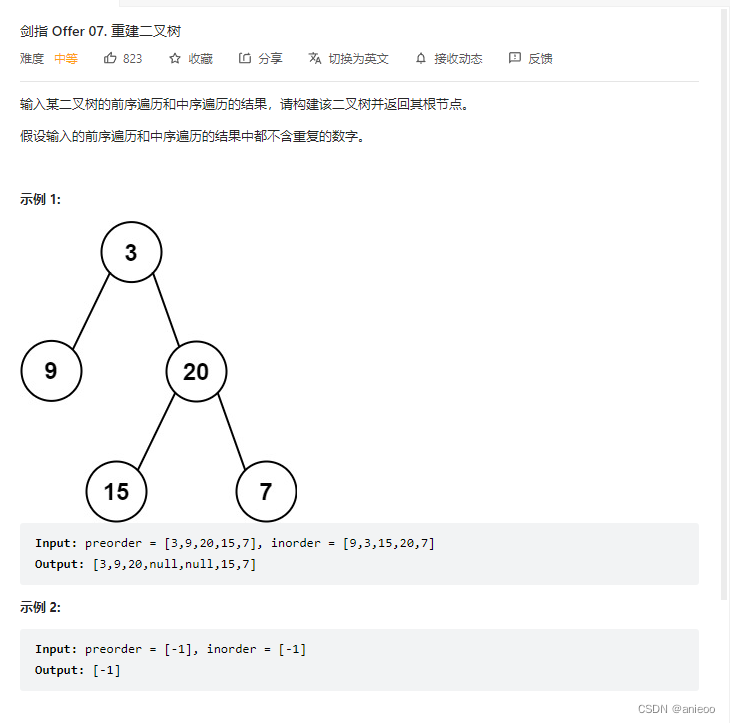

- 剑指 Offer 07. 重建二叉树

- Install Kali extension 1: (kali resolution problem)

- Markdown learning

- How to convert a recorded DOM to a video file

- TFIDF与BM25

- Error com mysql. cj. jdbc. exceptions. Communicationsexception: solutions to communications link failure

- Thread - learning notes

猜你喜欢

Postman usage notes, interface framework notes

解析数仓lazyagg查询重写优化

Afterword of Parl intensive learning 7-day punch in camp

04. binary tree

Record the time to read the file (the system cannot find the specified path)

Why is it said that restarting can solve 90% of the problems

QT pattern prompt box implementation

(1) Introduction

剑指 Offer 07. 重建二叉树

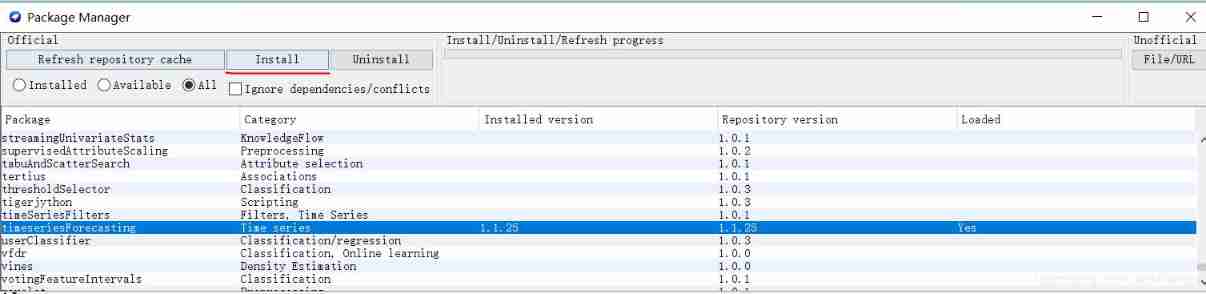

How to download and install Weka package

随机推荐

Summary of regularization methods

剑指 Offer 06. 从尾到头打印链表

Source code analysis of nine routing strategies for distributed task scheduling platform XXL job

MySQL field truncation principle and source code analysis

Distributed token

Day01: learning notes

Completabilefuture of asynchronous tools for concurrent programming

MySQL modifier l'instruction de champ

通俗讲跨域

Several solutions to the distributed lock problem in partial Internet companies

Globally unique key generation strategy - implementation principle of the sender

Start using markdown

Afterword of Parl intensive learning 7-day punch in camp

Internal class learning notes

Using R language in jupyter notebook

中国高校首次!全球唯一!同济学子斩获国际大奖

Shared memory synchronous encapsulation

JMeter reading and writing excel requires jxl jar

Is it safe to open a stock account through the account opening link of the account manager?

Detailed description of crontab command format and summary of common writing methods