Can deep learning solve the parameters of a specific function?

2022-07-31 00:49:00 【Wanli Pengcheng in a blink of an eye】

Deep learning, currently one of the most popular technologies, can solve many industrial problems, such as sentiment analysis, fault prediction, face recognition, stock trend forecasting, and weather forecasting. These applications demonstrate its powerful capabilities. So can deep learning techniques be applied to solving the parameters of a specific function?

The short answer is no. Deep learning in its conventional form cannot be applied to the parameter-solving problem of a specific function: although a deep network can fit any function, it cannot give a specific functional form. ResNet and DenseNet, for example, solve the ImageNet classification problem very well, yet they cannot give an explicit functional form for what they have learned.

1. How to solve the parameters of a specific function

The next question, then, is which techniques from the field of deep learning can be used to solve the parameters of a specific function. This takes deep learning back to the level of neural networks. The essence of deep learning is to solve complex problems by increasing the depth of the model, while the essence of neural network training and parameter tuning is gradient descent and backpropagation. Through gradient descent and backpropagation, the parameters of a function of any differentiable form can be solved.
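To make this concrete, here is a minimal sketch of gradient descent applied directly to a function of known form. The target `y = a * exp(b * x)`, the "true" values `a = 2.0` and `b = -1.5`, the learning rate, and the helper name `fit_exponential` are all assumptions chosen for illustration; they do not come from the original question.

```python
import math

# Hypothetical target: y = a * exp(b * x) with "true" parameters a=2.0, b=-1.5.
TRUE_A, TRUE_B = 2.0, -1.5
xs = [i / 49 for i in range(50)]                   # 50 points in [0, 1]
ys = [TRUE_A * math.exp(TRUE_B * x) for x in xs]   # noiseless samples


def fit_exponential(xs, ys, a=1.0, b=0.0, lr=0.2, steps=5000):
    """Fit y = a * exp(b * x) by plain gradient descent on the mean squared error."""
    n = len(xs)
    for _ in range(steps):
        grad_a = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            e = math.exp(b * x)
            residual = a * e - y
            # Chain rule: d(pred)/da = exp(b*x), d(pred)/db = a * x * exp(b*x)
            grad_a += 2.0 * residual * e / n
            grad_b += 2.0 * residual * a * x * e / n
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


a_hat, b_hat = fit_exponential(xs, ys)
print(f"a = {a_hat:.4f}, b = {b_hat:.4f}")
```

Because the functional form is fixed in advance, the two numbers that come out are the parameters themselves rather than opaque network weights.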

2. How to construct a neural network for a specific problem

The idea for this blog post comes from the following question: https://ask.csdn.net/questions/7764283

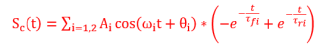

The asker wants to use a neural network to solve the parameters of a given function (shown in the question).

In response, the blogger's answer is as follows. A neural network can fit any function, but you cannot specify the functional form of the fit. In other words, a fully connected neural network built by conventional methods cannot solve the parameters of a specified function, because such a network is nothing more than matrix multiplications and activation functions, and the parameters it learns are not the coefficients of a cos function or an exponential function. Only by implementing custom layers for these functions (a cos layer, an exponential layer) can you constrain the parameters learned by the network to be the ones you want.

Supplementary Note 1: If a fully connected neural network has no activation function, it can only fit linear functions and cannot fit nonlinear ones. This is because a stack of fully connected layers is essentially a chain of matrix multiplications, which can be simplified to a single fully connected layer.
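The collapse described in Supplementary Note 1 can be checked numerically. The sketch below uses made-up weights for a 2 -> 3 -> 1 network without activation functions; the helper names `matvec`, `matmul`, and `affine` are hypothetical and written only for this example.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def matmul(A, B):
    """Multiply matrices A (m x k) and B (k x n), both stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def affine(W, b, x):
    """One fully connected layer without activation: W x + b."""
    return [s + bi for s, bi in zip(matvec(W, x), b)]


# Hypothetical weights for a 2 -> 3 -> 1 network with no activation function.
W1, b1 = [[1.0, -2.0], [0.5, 3.0], [-1.0, 4.0]], [0.5, -1.0, 2.0]
W2, b2 = [[2.0, -1.0, 0.5]], [3.0]

# Collapse: W2 (W1 x + b1) + b2 = (W2 W1) x + (W2 b1 + b2)
W = matmul(W2, W1)
b = [s + bi for s, bi in zip(matvec(W2, b1), b2)]

x = [0.25, -1.5]
two_layers = affine(W2, b2, affine(W1, b1, x))
one_layer = affine(W, b, x)
print(two_layers, one_layer)  # prints [13.75] [13.75]
```

The two-layer network and the single collapsed layer give the same output for any input, which is why the nonlinearity has to come from an activation function or from a custom layer.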

Supplementary Note 2: With custom layers, solving the parameters of a specific function constrains the form of the function, so the initialization of the parameters has an extremely strong impact on training. By contrast, in a fully connected neural network the parameters only need to be initialized from a suitable distribution.

Supplementary Note 3: Following Supplementary Note 2, when solving the parameters of a specific function, several differently initialized copies of the function should be trained in parallel. During this multi-model training, the parameters of the models can be weighted and aggregated every fixed number of rounds to speed up convergence.
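A minimal sketch of the multi-initialization idea follows. It trains several copies of the same function from different starting points and keeps the one with the lowest loss; the periodic weighted aggregation mentioned above is not implemented here. The target `y = cos(b * x)`, the "true" frequency `b = 3.0`, the list of starting points, and the learning rate are assumptions for illustration only.

```python
import math

# Hypothetical target: y = cos(b * x) with "true" frequency b = 3.0.
TRUE_B = 3.0
xs = [5.0 * i / 49 for i in range(50)]     # 50 points in [0, 5]
ys = [math.cos(TRUE_B * x) for x in xs]


def loss(b):
    """Mean squared error of cos(b * x) against the samples."""
    return sum((math.cos(b * x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(b, lr=0.01, steps=1000):
    """Gradient descent on the single parameter b, starting from the given value."""
    n = len(xs)
    for _ in range(steps):
        # d/db (cos(b*x) - y)^2 = 2 * (cos(b*x) - y) * (-x * sin(b*x))
        grad = sum(2.0 * (math.cos(b * x) - y) * (-x * math.sin(b * x))
                   for x, y in zip(xs, ys)) / n
        b -= lr * grad
    return b


starts = [0.5, 1.5, 2.5, 3.5, 4.5]          # several initializations
results = []
for b0 in starts:
    b_final = train(b0)
    results.append((loss(b_final), b_final))
    print(f"start {b0:.1f} -> b = {b_final:.4f}, loss = {results[-1][0]:.6f}")

best_loss, best_b = min(results)            # keep the run with the lowest loss
print(f"best b = {best_b:.4f}")
```

With a periodic function like this, starts that are far from the true frequency may stall in a local minimum, which is the sensitivity to initialization that Supplementary Note 2 describes.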

3. Problems in constructing a specific layer

Deep learning frameworks such as TensorFlow, Paddle, and PyTorch all support custom layers, and any specified function can be implemented by stacking multiple custom layers. Three things have to be worked out for each layer: its parameters, its forward propagation, and the way its gradient is computed.

The parameters of the layer: these are the parameters of the function to be solved, for example the coefficients of the cos function or of the exponential function.

The forward propagation of the layer: this is the specific form of the specified function, such as the cos form or the exponential form.

The gradient computation of the layer: because the forward propagation fixes a specific functional form, the framework's built-in automatic differentiation may not be available, for example when the forward pass uses operations the framework cannot differentiate. In that case you need to provide the derivative computation for the layer yourself.
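The three ingredients above (parameters, forward propagation, gradient computation) can be sketched without any framework. The class `CosLayer`, its functional form `y = a * cos(b * x + c)`, and the helper `numeric_grads` are hypothetical names invented for this example; a real framework has its own interface for custom layers. The hand-written gradient is compared against a finite-difference estimate, which is a common way to check a manual derivative.

```python
import math


class CosLayer:
    """Custom layer y = a * cos(b * x + c) with a hand-written gradient."""

    def __init__(self, a, b, c):
        # Parameters of the layer: the coefficients we want to solve for.
        self.a, self.b, self.c = a, b, c

    def forward(self, x):
        # Forward propagation: the specified functional form.
        return self.a * math.cos(self.b * x + self.c)

    def backward(self, x, grad_out=1.0):
        """Return (dL/da, dL/db, dL/dc) given grad_out = dL/dy."""
        phase = self.b * x + self.c
        d_a = math.cos(phase)
        d_b = -self.a * x * math.sin(phase)
        d_c = -self.a * math.sin(phase)
        return (grad_out * d_a, grad_out * d_b, grad_out * d_c)


def numeric_grads(layer, x, eps=1e-6):
    """Central finite differences of forward(x) with respect to a, b, c."""
    grads = []
    for name in ("a", "b", "c"):
        original = getattr(layer, name)
        setattr(layer, name, original + eps)
        up = layer.forward(x)
        setattr(layer, name, original - eps)
        down = layer.forward(x)
        setattr(layer, name, original)      # restore the parameter
        grads.append((up - down) / (2 * eps))
    return tuple(grads)


layer = CosLayer(a=1.2, b=0.7, c=-0.3)
analytic = layer.backward(x=2.0)
numeric = numeric_grads(layer, x=2.0)
print(analytic)
print(numeric)
```

If the two tuples agree to several decimal places, the manual derivative is very likely correct and can be used in a gradient descent update.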

边栏推荐

- API 网关 APISIX 在Google Cloud T2A 和 T2D 的性能测试

- background has no effect on child elements of float

- WMware Tools installation failed segmentation fault solution

- [In-depth and easy-to-follow FPGA learning 14----------Test case design 2]

- Strict Mode for Databases

- 【深入浅出玩转FPGA学习14----------测试用例设计2】

- 【深入浅出玩转FPGA学习13-----------测试用例设计1】

- Bypass of xss

- [In-depth and easy-to-follow FPGA learning 15---------- Timing analysis basics]

- Unity2D horizontal version game tutorial 4 - item collection and physical materials

猜你喜欢

![[Tang Yudi Deep Learning-3D Point Cloud Combat Series] Study Notes](/img/52/88ad349eca136048acd0f328d4f33c.png)

[Tang Yudi Deep Learning-3D Point Cloud Combat Series] Study Notes

IOT跨平台组件设计方案

Shell programming of conditional statements

IOT cross-platform component design scheme

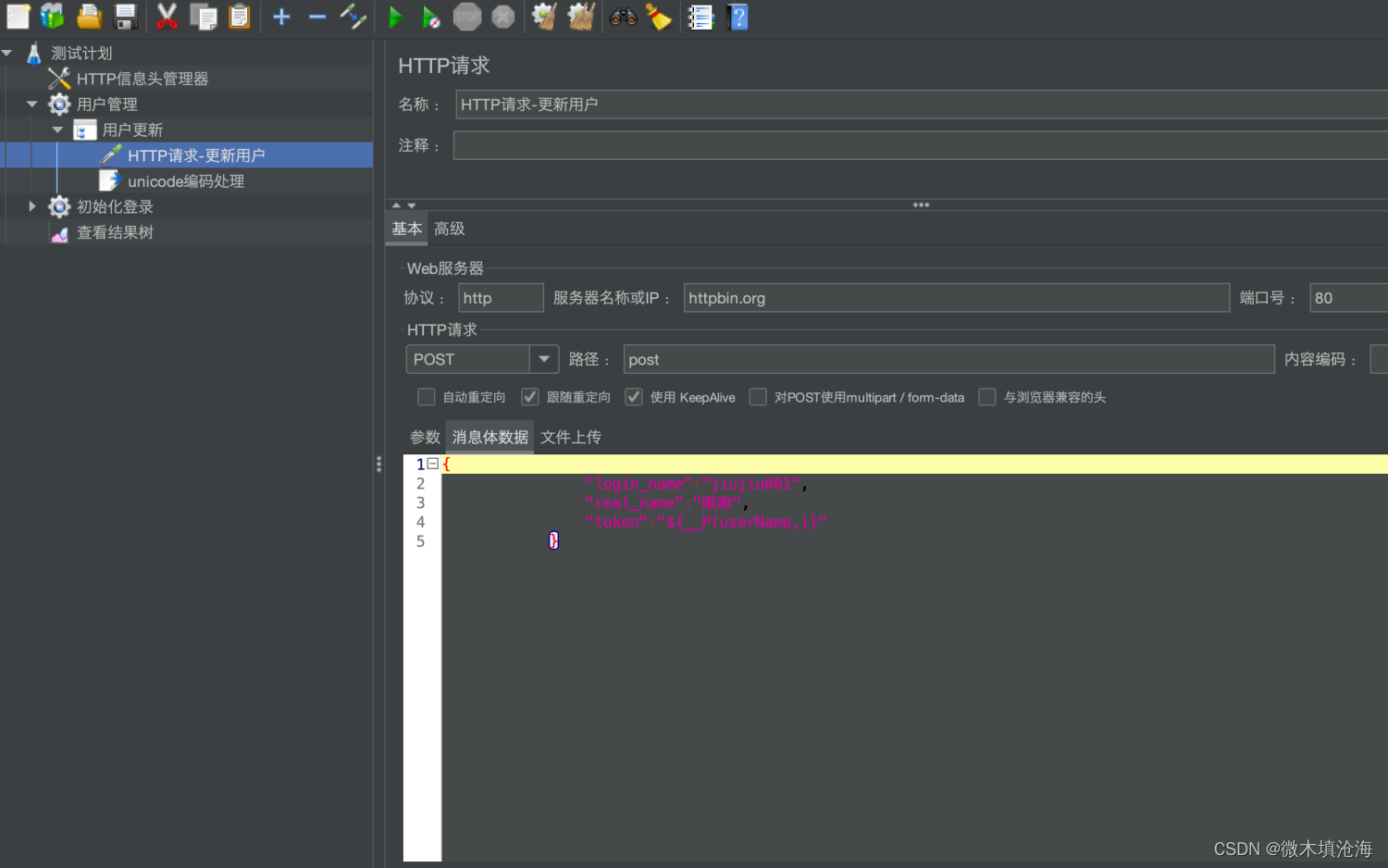

Jmeter parameter transfer method (token transfer, interface association, etc.)

Preparations for web vulnerabilities

typescript15- (specify both parameter and return value types)

DOM系列之动画函数封装

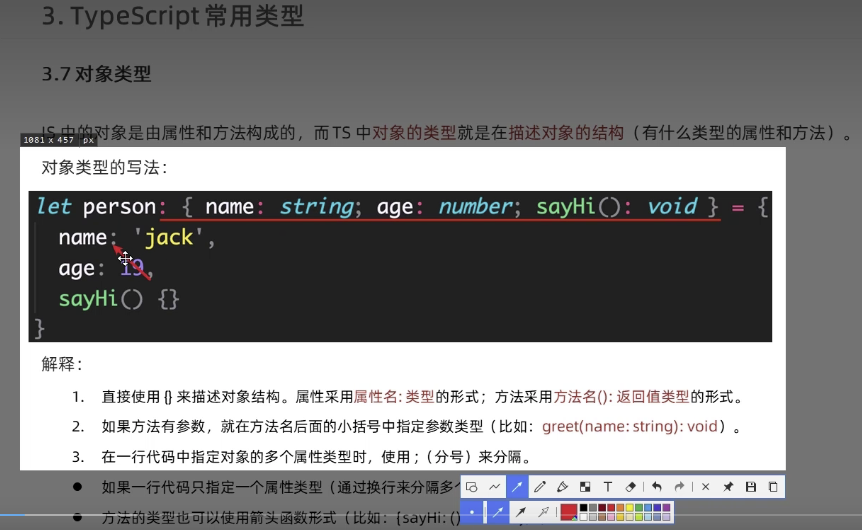

typescript18-对象类型

这个项目太有极客范儿了

随机推荐

registers (assembly language)

typescript14-(单独指定参数和返回值的类型)

TypeScript在使用中出现的问题记录

mysql主从复制及读写分离脚本-亲测可用

【愚公系列】2022年07月 Go教学课程 016-运算符之逻辑运算符和其他运算符

Restricted character bypass

MySQL grant statements

Error ER_NOT_SUPPORTED_AUTH_MODE Client does not support authentication protocol requested by serv

Kotlin协程:协程上下文与上下文元素

深度学习可以求解特定函数的参数么?

Common network status codes

Error ER_NOT_SUPPORTED_AUTH_MODE Client does not support authentication protocol requested by serv

什么是Promise?Promise的原理是什么?Promise怎么用?

Why use high-defense CDN when financial, government and enterprises are attacked?

[Yugong Series] July 2022 Go Teaching Course 016-Logical Operators and Other Operators of Operators

The difference between h264 and h265 decoding

XSS related knowledge

Oracle has a weird temporary table space shortage problem

Huawei's "genius boy" Zhihui Jun has made a new work, creating a "customized" smart keyboard from scratch

【Yugong Series】July 2022 Go Teaching Course 019-For Circular Structure