
LDA study notes

2022-06-22 13:38:00 【A hundred years of literature have been written on the left sid】


Sources

The main sources for this article are Introduction to Probabilistic Topic Models, LDA Math Gossip (LDA数学八卦) and related material. Interested readers can consult them for details.

A brief introduction to LDA

LDA is a probabilistic topic model whose goal is to automatically discover topics in a collection of documents. The core problem of topic modeling is to infer the hidden topic structure from the observed documents. This can be viewed as reversing the generative process: finding the hidden structure that is likely to have generated the observed collection.

The generative steps of LDA

Step 1: Randomly generate a topic distribution for the document

Step 2: For every word in the document

(a) Randomly choose a topic from the topic distribution generated in Step 1

(b) Randomly choose a word from the word distribution of that topic
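
The two steps above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the Dirichlet parameter `alpha`, the toy vocabulary, the two randomly drawn topics in `beta` and the function name `generate_document` are all made up here and do not come from the sources.

```python
import numpy as np

def generate_document(beta, alpha, n_words, rng):
    """Sample one document following the two-step generative process.

    beta  : (K, V) array, row k is the word distribution of topic k
    alpha : (K,) Dirichlet parameter for the document's topic distribution
    """
    n_topics, vocab_size = beta.shape
    # Step 1: randomly generate the document's topic distribution.
    theta = rng.dirichlet(alpha)
    topics, words = [], []
    for _ in range(n_words):
        # Step 2(a): draw a topic from the topic distribution.
        z = rng.choice(n_topics, p=theta)
        # Step 2(b): draw a word from that topic's word distribution.
        w = rng.choice(vocab_size, p=beta[z])
        topics.append(int(z))
        words.append(int(w))
    return theta, topics, words

rng = np.random.default_rng(0)
vocab = ["sugar", "health", "driving", "stress", "school", "sister"]
# Two hypothetical topics over a six-word vocabulary (assumed, not learned).
beta = rng.dirichlet(np.full(len(vocab), 0.5), size=2)
theta, topics, words = generate_document(beta, alpha=np.full(2, 0.5), n_words=8, rng=rng)
print(np.round(theta, 3))
print([(vocab[w], z) for w, z in zip(words, topics)])
```

Each printed pair is a generated word together with the topic it was drawn from. Inference in LDA runs this process in reverse: only the words are seen, and `theta`, `topics` and `beta` have to be recovered.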

Where the name Latent Dirichlet Allocation comes from

Latent: the documents are observed, but the topic structure (the set of topics, the relationship between documents and topics, and the distributions linking documents, topics and words) is unknown and hidden

Dirichlet: because the document topic distribution drawn in Step 1 follows a Dirichlet distribution

Allocation: because in the LDA process, the result of the Dirichlet draw is used to allocate the words in the document to the topics

Mathematical model

LDA and other topic models belong to probabilistic modeling. The generative process defines a joint probability distribution over the observed (explicit) and hidden (implicit) variables. Given the observed variables, the joint distribution is used to compute the conditional distribution of the hidden variables, that is, the posterior distribution. In LDA, the observed variables are the words in the documents and the hidden variables are the topic structure. Inferring the topic structure of the documents therefore amounts to computing the conditional (posterior) distribution of the hidden variables given the documents.

Variable definitions:

| Variable | Meaning |
|---|---|
| $\beta_{1:K}$ | The set of topics |
| $\beta_k$ | Word distribution of the $k$-th topic |
| $\theta_d$ | Topic distribution of the $d$-th document |
| $\theta_{d,k}$ | Probability of the $k$-th topic in the $d$-th document |
| $z_d$ | Topic assignments of the $d$-th document |
| $z_{d,n}$ | Topic of the $n$-th word in the $d$-th document |
| $\omega_d$ | Words observed in the $d$-th document |
| $\omega_{d,n}$ | The $n$-th word in the $d$-th document |

Joint distribution

The generative process of LDA corresponds to the following joint distribution over the observed and hidden variables:

$$
p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, \omega_{1:D}) = \prod_{i=1}^{K} p(\beta_i) \prod_{d=1}^{D} p(\theta_d) \left( \prod_{n=1}^{N} p(z_{d,n} \mid \theta_d)\, p(\omega_{d,n} \mid \beta_{1:K}, z_{d,n}) \right)
$$

The formula above encodes a number of dependencies, and it is these dependencies that define LDA. They can be illustrated more intuitively with a probabilistic graphical model.

In the graphical model, dependencies are drawn as directed edges; hollow nodes represent hidden variables and solid nodes represent observed variables.
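
To make the factorization of the joint distribution concrete, here is a minimal sketch that evaluates its logarithm for one fixed setting of all variables, adding one term per factor. It assumes Dirichlet priors with parameters `eta` (for each topic) and `alpha` (for each document's topic distribution); `eta`, `alpha`, the toy values and the function names are illustrative assumptions, not part of the sources.

```python
import math
import numpy as np

def log_dirichlet_pdf(x, conc):
    """Log density of a Dirichlet distribution with parameter vector conc."""
    conc = np.asarray(conc, dtype=float)
    log_norm = math.lgamma(float(conc.sum())) - sum(math.lgamma(float(c)) for c in conc)
    return log_norm + float(np.sum((conc - 1.0) * np.log(x)))

def log_joint(beta, theta, z, w, eta, alpha):
    """log p(beta, theta, z, w): one term per factor of the joint distribution."""
    total = 0.0
    for beta_k in beta:                      # prod_i p(beta_i)
        total += log_dirichlet_pdf(beta_k, eta)
    for d, theta_d in enumerate(theta):      # prod_d p(theta_d) ...
        total += log_dirichlet_pdf(theta_d, alpha)
        for z_dn, w_dn in zip(z[d], w[d]):   # ... prod_n p(z | theta) p(w | beta, z)
            total += math.log(theta_d[z_dn])
            total += math.log(beta[z_dn][w_dn])
    return total

# Toy setting: K = 2 topics, a vocabulary of 3 words, D = 1 document with 2 words.
beta = np.array([[0.7, 0.2, 0.1], [0.1, 0.2, 0.7]])
theta = np.array([[0.6, 0.4]])
z = [[0, 1]]   # topic of each word
w = [[0, 2]]   # id of each word
value = log_joint(beta, theta, z, w, eta=[1.0, 1.0, 1.0], alpha=[1.0, 1.0])
print(value)
```

Evaluating the joint for a given setting only needs a pass over the topics, documents and words, so its cost grows linearly with the size of the corpus.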

Posterior distribution

Based on the joint distribution given above, we compute the conditional distribution of the hidden topic structure given the observed documents, that is, the posterior distribution.

$$
p(\beta_{1:K}, \theta_{1:D}, z_{1:D} \mid \omega_{1:D}) = \frac{p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, \omega_{1:D})}{p(\omega_{1:D})}
$$

For any setting of the hidden variables, the numerator is easy to compute.

The denominator is the marginal probability of the observations. In theory it can be obtained by summing the joint distribution over every possible configuration of the hidden topic structure. For a large collection, however, this sum ranges over every possible way of assigning a topic to each word, and document collections often contain millions of words, so the cost is prohibitive. As in many modern probabilistic models (for example in Bayesian statistics), the posterior cannot be computed exactly because of the denominator. A central research goal of probabilistic modeling is therefore to develop fast methods for approximating it.
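
Written out in the notation of the joint distribution, the denominator integrates over the topics and the topic distributions and sums over every possible topic assignment. The count below assumes, purely for illustration, that each of the $D$ documents contains $N$ words.

$$
p(\omega_{1:D}) = \int_{\beta_{1:K}} \int_{\theta_{1:D}} \sum_{z_{1:D}} p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, \omega_{1:D}) \, d\theta_{1:D} \, d\beta_{1:K}
$$

The sum over $z_{1:D}$ alone has $K^{DN}$ terms. Even a single 10-word document with $K = 100$ topics gives $100^{10} = 10^{20}$ terms, which is why exact computation is impractical.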

Topic modeling algorithms approximate the formula above by constructing a distribution over the latent topic structure that is close to the true posterior. They usually fall into two categories: sampling-based algorithms and variational algorithms.

Sampling-based algorithms collect samples from the posterior and approximate it with the empirical distribution of those samples. The most commonly used sampling algorithm is Gibbs sampling. In this method we construct a Markov chain whose limiting distribution is the posterior. A Markov chain is a sequence of random variables in which each one depends on the previous one. For topic models, the random variables are the hidden topic variables defined on a specific corpus. The algorithm runs the chain for a long time, collects samples from its limiting distribution, and uses these samples to approximate the posterior. Often only the sample with the highest probability is kept, as an approximation of the topic structure.
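
A minimal sketch of a sampling-based algorithm follows. It uses collapsed Gibbs sampling, one common variant in which the topic distributions and word distributions are integrated out and only the topic of each word is resampled. That choice, the symmetric Dirichlet hyperparameters `alpha` and `eta`, the toy corpus of word ids and the function name `gibbs_lda` are assumptions for illustration, not taken from the sources.

```python
import numpy as np

def gibbs_lda(docs, n_topics, vocab_size, alpha=0.1, eta=0.01, n_iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA on documents given as lists of word ids."""
    rng = np.random.default_rng(seed)
    doc_topic = np.zeros((len(docs), n_topics))    # words in doc d assigned to topic k
    topic_word = np.zeros((n_topics, vocab_size))  # times word v is assigned to topic k
    topic_total = np.zeros(n_topics)               # words assigned to topic k overall

    # Start the chain from a random topic assignment for every word.
    z = [rng.integers(n_topics, size=len(doc)) for doc in docs]
    for d, doc in enumerate(docs):
        for n, w in enumerate(doc):
            k = z[d][n]
            doc_topic[d, k] += 1
            topic_word[k, w] += 1
            topic_total[k] += 1

    for _ in range(n_iters):
        for d, doc in enumerate(docs):
            for n, w in enumerate(doc):
                # Remove the current assignment of this word from the counts.
                k = z[d][n]
                doc_topic[d, k] -= 1
                topic_word[k, w] -= 1
                topic_total[k] -= 1
                # p(z_dn = k | all other assignments, words), up to a constant.
                weights = ((doc_topic[d] + alpha) * (topic_word[:, w] + eta)
                           / (topic_total + vocab_size * eta))
                k = rng.choice(n_topics, p=weights / weights.sum())
                # Record the newly sampled topic.
                z[d][n] = k
                doc_topic[d, k] += 1
                topic_word[k, w] += 1
                topic_total[k] += 1

    # Point estimates from the final state of the chain.
    theta = (doc_topic + alpha) / (doc_topic + alpha).sum(axis=1, keepdims=True)
    beta = (topic_word + eta) / (topic_word + eta).sum(axis=1, keepdims=True)
    return theta, beta, z

docs = [[0, 0, 1, 1, 0], [2, 3, 3, 2, 2], [0, 1, 2, 3]]
theta, beta, z = gibbs_lda(docs, n_topics=2, vocab_size=4)
print(np.round(theta, 2))
print(np.round(beta, 2))
```

Each pass over the corpus is one step of the Markov chain. The sketch reads its estimates off the last state only; a more careful implementation would discard early iterations and average over several later samples.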

Variational algorithms are a deterministic alternative to sampling-based algorithms. Rather than drawing samples, a variational method posits a parameterized family of distributions over the hidden structure and then finds the member of that family that is closest to the posterior. The inference problem is thereby transformed into an optimization problem, which is a significant innovation.
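
As a sketch of that optimization problem: closeness is usually measured with the Kullback-Leibler divergence, which is an assumption here since the text only says "closest". $\mathcal{Q}$ denotes the chosen family of distributions and $q$ one of its members; both symbols are introduced for illustration.

$$
q^{*} = \arg\min_{q \in \mathcal{Q}} \mathrm{KL}\big( q(\beta_{1:K}, \theta_{1:D}, z_{1:D}) \,\|\, p(\beta_{1:K}, \theta_{1:D}, z_{1:D} \mid \omega_{1:D}) \big)
$$

The divergence contains the intractable posterior, but the following identity holds for any $q$:

$$
\log p(\omega_{1:D}) = \mathbb{E}_{q}\big[ \log p(\beta_{1:K}, \theta_{1:D}, z_{1:D}, \omega_{1:D}) \big] - \mathbb{E}_{q}\big[ \log q(\beta_{1:K}, \theta_{1:D}, z_{1:D}) \big] + \mathrm{KL}\big( q \,\|\, p(\cdot \mid \omega_{1:D}) \big)
$$

The left-hand side does not depend on $q$, so minimizing the divergence is the same as maximizing the first two terms on the right. Those terms involve only the joint distribution, which is easy to evaluate, so the denominator never has to be computed.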

Python implementation of LDA

The code in this section is based on https://blog.csdn.net/selinda001/article/details/80446766

    # Official documentation: https://radimrehurek.com/gensim/models/ldamodel.html
    from nltk.corpus import stopwords
    from nltk.stem.wordnet import WordNetLemmatizer
    import string
    import gensim
    from gensim import corpora
    from gensim.models.callbacks import PerplexityMetric
    from gensim.models.callbacks import CoherenceMetric

    doc1 = "Sugar is bad to consume. My sister likes to have sugar, but not my father."
    doc2 = "My father spends a lot of time driving my sister around to dance practice."
    doc3 = "Doctors suggest that driving may cause increased stress and blood pressure."
    doc4 = "Sometimes I feel pressure to perform well at school, but my father never seems to drive my sister to do better."
    doc5 = "Health experts say that Sugar is not good for your lifestyle."

    # Collect the documents into one list
    doc_complete = [doc1, doc2, doc3, doc4, doc5]

    # First run nltk.download('punkt'), nltk.download('stopwords') and
    # nltk.download('wordnet') in a Python session; otherwise an error is raised

    # Data cleaning and preprocessing
    stop = set(stopwords.words('english'))
    exclude = set(string.punctuation)
    lemma = WordNetLemmatizer()

    def clean(doc):
        # Lowercase and drop stop words, strip punctuation, then lemmatize each word
        stop_free = " ".join([i for i in doc.lower().split() if i not in stop])
        punc_free = ''.join(ch for ch in stop_free if ch not in exclude)
        normalized = " ".join(lemma.lemmatize(word) for word in punc_free.split())
        return normalized

    doc_clean = [clean(doc).split() for doc in doc_complete]

    # Build the corpus dictionary: every unique word is assigned an index
    dictionary = corpora.Dictionary(doc_clean)

    # Use the dictionary to convert the list of documents (the corpus) into a document-term (DT) matrix
    doc_term_matrix = [dictionary.doc2bow(doc) for doc in doc_clean]

    # Create the LDA model object with gensim
    Lda = gensim.models.ldamodel.LdaModel
    # logger='visdom' needs the visdom package and a running visdom server;
    # logger='shell' writes the metric to the console instead
    perplexity_logger = PerplexityMetric(corpus=doc_term_matrix, logger='visdom')

    # Train the LDA model on the DT matrix
    # If you run into an error, see my blog post https://blog.csdn.net/weixin_42690752/article/details/103936259
    ldamodel = Lda(doc_term_matrix, num_topics=100, id2word=dictionary, passes=50, callbacks=[perplexity_logger])

    # Print the most important words of each topic
    print(ldamodel.print_topics(num_topics=100, num_words=3))

    # Print the topic distribution of each document
    for doc in doc_clean:
        print(ldamodel.get_document_topics(bow=dictionary.doc2bow(doc)))
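
The document-term matrix built above is a bag-of-words representation: each document becomes a list of (word id, count) pairs. The standard-library sketch below illustrates the idea only. It is not gensim's implementation, the helper names `build_vocabulary` and `to_bag_of_words` are hypothetical, and the ids gensim assigns may differ.

```python
from collections import Counter

def build_vocabulary(tokenized_docs):
    """Assign an integer id to every distinct token, in order of first appearance."""
    token_to_id = {}
    for doc in tokenized_docs:
        for token in doc:
            token_to_id.setdefault(token, len(token_to_id))
    return token_to_id

def to_bag_of_words(tokens, token_to_id):
    """Return sorted (token_id, count) pairs, skipping tokens not in the vocabulary."""
    counts = Counter(token_to_id[t] for t in tokens if t in token_to_id)
    return sorted(counts.items())

toy_docs = [["sugar", "bad", "sugar"], ["father", "sister", "sugar"]]
vocab_ids = build_vocabulary(toy_docs)
rows = [to_bag_of_words(doc, vocab_ids) for doc in toy_docs]
print(rows)  # [[(0, 2), (1, 1)], [(0, 1), (2, 1), (3, 1)]]
```

Word order is discarded in this representation, which matches LDA's view of a document as a collection of words drawn independently given their topics.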

边栏推荐

- Growth knowledge network

- 文件下载漏洞&文件读取漏洞&文件删除漏洞

- Shell基础入门

- 476. Number Complement

- leetcode 11. Container with the most water

- Rf5.0 new content quick view

- Triggers in MySQL

- Windows下MySQL 8.0.29的详细安装教程,解决找不到VCRUNTIME140_1.dll、plugin caching_sha2_password could not be loaded

- 260. Single Number III

- leetcode 1130. Minimum cost spanning tree of leaf value

猜你喜欢

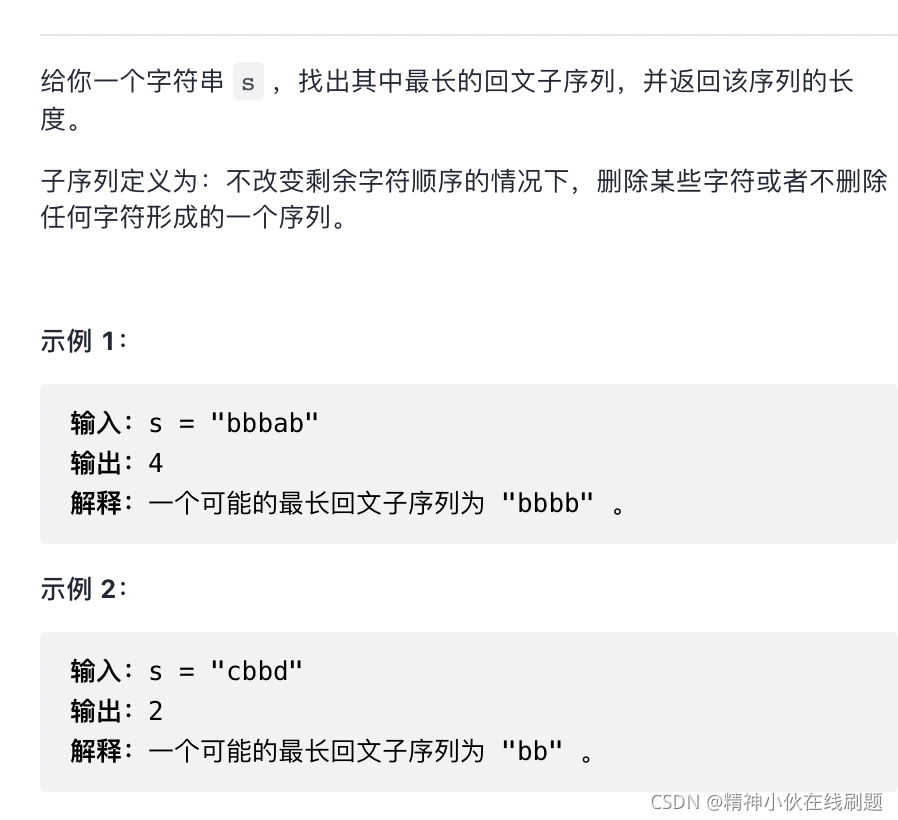

Problème de sous - séquence / substrat leetcode

聊一聊数据库的行存与列存

卸载MySQL 8

别再用 System.currentTimeMillis() 统计耗时了,太 Low,StopWatch 好用到爆!

Opengauss database source code analysis series articles -- detailed explanation of dense equivalent query technology

Acwing week 53

Leetcode union search set

SSM based community garbage classification and transportation management system, high-quality graduation thesis example (can be used directly), source code, database script, project introduction and o

leetcode-二分法

leetcode 第 297 場周賽

随机推荐

Acwing week 53

leetcode 834. Sum of distances in the tree

毕业论文写作中致谢词的常见写法及优秀范文

Query escape in Oracle expdp export

Consolidation of common functions of numpy Library

leetcode-背包问题

leetcode 968. Monitoring binary tree

【Nacos云原生】阅读源码第一步,本地启动Nacos

310. Minimum Height Trees

[cloud native] event publishing and subscription in Nacos -- observer mode

leetcode 32. Longest valid bracket

在CSDN写文几年,我出了“第一本书“,感恩!

基於SSM的小區垃圾分類和運輸管理系統,高質量畢業論文範例(可直接使用),源碼,數據庫脚本,項目導入運行視頻教程,論文撰寫教程

Rigid demand of robot direction → personal thinking ←

leetcode 829. Sum of continuous integers

Performance of recommender algorithms on top-N recommendation tasks

测试方法论——数据驱动测试

基于JSP的图书馆管理系统,包含源码,数据库脚本,项目运行视频教程,毕设论文撰写视频教程

Processing statement on price selection of several manufacturers

基于SSM的图书馆管理系统,高质量毕业论文范例(可直接使用),项目导入视频,附送源码和数据库脚本,论文撰写教程