
Deep Feature Synthesis vs. Genetic Feature Generation: A Comparison of Two Automatic Feature Generation Strategies

2022-06-12 19:37:00 [deephub]

Feature engineering is the process of creating new features from existing ones. Through feature engineering, we can capture additional relationships with the target column that the original features do not express. This process is very important for improving the performance of machine learning algorithms. Although feature engineering works best when data scientists apply domain knowledge to specific transformations, some methods can do it in an automated way, without prior domain knowledge.

In this article, we show through an example how to use the ATOM package to quickly compare two automatic feature generation algorithms: Deep Feature Synthesis (DFS) and Genetic Feature Generation (GFG). ATOM is an open-source Python package that helps data scientists speed up the exploration of machine learning pipelines.

Baseline model

As a baseline for comparison, we first train a model using only the initial features. The data used here is a variant of the Australian weather dataset from Kaggle. The goal is to predict whether it will rain tomorrow, which means training a binary classifier on the target column RainTomorrow.

```python
import pandas as pd
from atom import ATOMClassifier

# Load the data and have a look
X = pd.read_csv("./datasets/weatherAUS.csv")
X.head()
```

Next, initialize the atom instance and prepare the data for modeling. Only a subset of the dataset (1,000 rows) is used here for demonstration. The following code imputes the missing values and encodes the categorical features.

```python
# Use a 1,000-row subsample, impute missing values and encode categorical columns
atom = ATOMClassifier(X, y="RainTomorrow", n_rows=1e3, verbose=2)
atom.impute()
atom.encode()
```

The output is as follows .

The dataset attribute gives a quick look at what the data looks like after the transformations.

```python
atom.dataset.head()
```
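To make the two preprocessing steps concrete, here is a minimal, stdlib-only sketch of what imputing and encoding do to a table. It assumes mean imputation for numeric columns, most-frequent imputation for categorical columns, and one-hot encoding; these are illustrative choices, not necessarily ATOM's default strategies (see the documentation of its impute and encode methods). The helpers impute and one_hot and the rows are hypothetical.

```python
from collections import Counter
from statistics import mean


def impute(rows, numeric, categorical):
    """Fill missing values (None): column mean for numeric columns,
    most frequent value for categorical columns (illustrative strategies)."""
    fills = {}
    for col in numeric:
        fills[col] = mean(r[col] for r in rows if r[col] is not None)
    for col in categorical:
        counts = Counter(r[col] for r in rows if r[col] is not None)
        fills[col] = counts.most_common(1)[0][0]
    return [
        {k: (fills[k] if v is None and k in fills else v) for k, v in r.items()}
        for r in rows
    ]


def one_hot(rows, col):
    """Replace a categorical column by one 0/1 column per category."""
    categories = sorted({r[col] for r in rows})
    encoded = []
    for r in rows:
        new = {k: v for k, v in r.items() if k != col}
        for c in categories:
            new[f"{col}_{c}"] = int(r[col] == c)
        encoded.append(new)
    return encoded


# Hypothetical rows with one missing numeric and one missing categorical value
rows = [
    {"MinTemp": 10.0, "WindDir3pm": "N"},
    {"MinTemp": None, "WindDir3pm": "S"},
    {"MinTemp": 14.0, "WindDir3pm": None},
    {"MinTemp": 12.0, "WindDir3pm": "N"},
]
clean = one_hot(impute(rows, ["MinTemp"], ["WindDir3pm"]), "WindDir3pm")
for row in clean:
    print(row)
```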

The data is now ready. This article uses a LightGBM model to make predictions. Training and evaluating a model with atom is very simple:

```python
atom.run(models="LGB", metric="accuracy")
```

The model reaches an accuracy of 0.8471 on the test set. Let's see whether automatic feature generation can improve on this.
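Accuracy is the fraction of test rows whose predicted label matches the true RainTomorrow label. A minimal sketch of the metric (the accuracy helper and the labels are hypothetical):

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical labels: 1 = "rain tomorrow", 0 = "no rain"
print(accuracy([1, 0, 0, 1, 0], [1, 0, 1, 1, 0]))  # 0.8
```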

DFS

DFS applies standard mathematical operators (addition, subtraction, multiplication, etc.) to existing features and combines them. For example, on our dataset DFS could create the new features MinTemp + MaxTemp or WindDir9am x WindDir3pm.

To be able to compare the models, we need to create a new branch for the DFS pipeline. If you're not familiar with ATOM's branching system, please check the official documentation.

```python
atom.branch = "dfs"
```
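The point of a branch is that each one keeps its own copy of the data pipeline, so features added on one branch do not leak into another. The toy class below is a hypothetical illustration of that idea, not ATOM's implementation; it also mimics the new_from_parent naming that this article uses for the GFG branch.

```python
import copy


class BranchManager:
    """Hypothetical toy branching system: every branch owns an independent
    copy of the dataset, so changes on one branch never affect another."""

    def __init__(self, dataset):
        self.branches = {"master": dataset}
        self.current = "master"

    def switch(self, name):
        # "new_from_parent" creates `new` from `parent`; a plain new name
        # branches from the current branch; an existing name just switches.
        new, sep, parent = name.partition("_from_")
        if new in self.branches:
            self.current = new
            return
        source = parent if sep else self.current
        self.branches[new] = copy.deepcopy(self.branches[source])
        self.current = new

    @property
    def dataset(self):
        return self.branches[self.current]


bm = BranchManager({"MinTemp": [10.0, 12.0], "MaxTemp": [20.0, 25.0]})
bm.switch("dfs")
bm.dataset["MinTemp + MaxTemp"] = [30.0, 37.0]  # added on the dfs branch only
bm.switch("gfg_from_master")                    # starts from master, not dfs
print(sorted(bm.dataset))  # ['MaxTemp', 'MinTemp']
```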

Use atom's feature_generation method to run DFS on the new branch. For simplicity, only addition and multiplication are used to create new features here (the div, log, or sqrt operators can return features with inf or nan values, which would need further processing).

```python
# Generate 10 new features on the "dfs" branch using only + and x
atom.feature_generation(
    strategy="dfs",
    n_features=10,
    operators=["add", "mul"],
)
```
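The restriction to add and mul can be illustrated in plain Python. With array-style (IEEE 754) arithmetic, as in NumPy and pandas, dividing by a zero-valued feature yields inf or nan and the log of zero yields -inf, whereas sums and products of finite values stay finite (barring overflow). The sketch below emulates that behavior with the hypothetical helpers ieee_div and ieee_log and drops every generated column that contains non-finite values, which is one possible form of the extra processing mentioned above. The feature values are made up.

```python
import math


def ieee_div(x, y):
    """Division with IEEE 754 semantics instead of ZeroDivisionError:
    x/0 -> +/-inf, 0/0 -> nan."""
    if y == 0:
        if x == 0 or math.isnan(x):
            return math.nan
        return math.copysign(math.inf, x) * math.copysign(1.0, y)
    return x / y


def ieee_log(x):
    """Log with IEEE-style results: log(0) -> -inf, log(x < 0) -> nan."""
    if x > 0:
        return math.log(x)
    if x == 0:
        return -math.inf
    return math.nan


def is_usable(column):
    """Keep a generated feature only if all of its values are finite."""
    return all(math.isfinite(v) for v in column)


rain_today = [0.0, 1.0, 0.0]  # hypothetical encoded 0/1 feature
humidity = [55.0, 80.0, 0.0]  # hypothetical humidity values
candidates = {
    "Humidity / RainToday": [ieee_div(h, r) for h, r in zip(humidity, rain_today)],
    "log(Humidity)": [ieee_log(h) for h in humidity],
    "Humidity * RainToday": [h * r for h, r in zip(humidity, rain_today)],
}
kept = {name: col for name, col in candidates.items() if is_usable(col)}
print(list(kept))  # ['Humidity * RainToday']
```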

ATOM uses the featuretools package under the hood to run DFS. Since n_features=10 is used here, ten features randomly selected from all possible combinations are added to the dataset.
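The idea can be sketched in a few lines: apply every operator to every pair of columns and keep a random sample of n_features candidates. This is a simplified depth-1 illustration, not featuretools' actual implementation (featuretools works with more general primitives); the function dfs_features and the data are hypothetical.

```python
import itertools
import random

# Operator name -> (symbol used in the feature name, function)
OPERATORS = {
    "add": ("+", lambda a, b: a + b),
    "mul": ("x", lambda a, b: a * b),
}


def dfs_features(columns, operators, n_features, seed=0):
    """Depth-1 sketch: apply each operator to each pair of columns,
    then keep a random sample of n_features candidates."""
    candidates = {}
    for (name_a, a), (name_b, b) in itertools.combinations(columns.items(), 2):
        for op in operators:
            symbol, fn = OPERATORS[op]
            candidates[f"{name_a} {symbol} {name_b}"] = [
                fn(x, y) for x, y in zip(a, b)
            ]
    rng = random.Random(seed)
    chosen = rng.sample(sorted(candidates), k=min(n_features, len(candidates)))
    return {name: candidates[name] for name in chosen}


columns = {
    "MinTemp": [10.0, 12.0],
    "MaxTemp": [20.0, 25.0],
    "Pressure3pm": [1010.0, 1005.0],
}
new = dfs_features(columns, ["add", "mul"], n_features=4)
for name, values in new.items():
    print(name, values)
```

With three columns there are 3 pairs x 2 operators = 6 candidates, of which 4 are sampled here.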

```python
atom.dataset.head()
```

Train the model again:

```python
atom.run(models="LGB_dfs")
```

Note that:

- The tag _dfs is appended to the model's acronym so that the baseline model is not overwritten.
- It is no longer necessary to specify the metric for evaluation. The atom instance automatically uses the same metric as in previous model training, in our case accuracy.

It seems that DFS did not improve the model; the result is even worse than the baseline. Let's see how GFG performs.

GFG

GFG uses genetic programming (a branch of evolutionary programming) to determine which features are useful and to create new features based on them. Unlike DFS, which blindly tries feature combinations, GFG tries to improve its features with every generation of the algorithm. GFG uses the same operators as DFS, but instead of applying each transformation only once, it keeps evolving them, creating nested structures of feature combinations. With the operators add (+) and mul (x), a feature combination could look like this:

add(add(mul(MinTemp, WindDir3pm), Pressure3pm), mul(MaxTemp, MinTemp))
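Such a nested feature is naturally represented as an expression tree. The sketch below stores it as nested (function, left, right) tuples, evaluates it recursively on one row, and prints it back in the notation above. The row values are hypothetical and assume WindDir3pm has already been encoded as a number.

```python
import operator

FUNCTIONS = {"add": operator.add, "mul": operator.mul}


def evaluate(expr, row):
    """Recursively evaluate a (function, left, right) tuple;
    leaves are column names looked up in `row`."""
    if isinstance(expr, str):
        return row[expr]
    name, left, right = expr
    return FUNCTIONS[name](evaluate(left, row), evaluate(right, row))


def to_string(expr):
    """Render the tree in function-call notation."""
    if isinstance(expr, str):
        return expr
    name, left, right = expr
    return f"{name}({to_string(left)}, {to_string(right)})"


feature = ("add",
           ("add", ("mul", "MinTemp", "WindDir3pm"), "Pressure3pm"),
           ("mul", "MaxTemp", "MinTemp"))

# Hypothetical, already-encoded row
row = {"MinTemp": 10.0, "MaxTemp": 20.0, "WindDir3pm": 2.0, "Pressure3pm": 1010.0}
print(to_string(feature))
print(evaluate(feature, row))  # 1230.0
```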

As with DFS, first create a new branch (starting from the original master branch, so that the DFS features are excluded), then train and evaluate the model. Again, 10 new features are created.

Note: ATOM uses the gplearn package under the hood to run GFG.

```python
# New branch "gfg" that starts from master, so the DFS features are not included
atom.branch = "gfg_from_master"
atom.feature_generation(
    strategy="GFG",
    n_features=10,
    operators=["add", "mul"],
)
```
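gplearn's genetic programming is considerably more elaborate (crossover, several mutation types, parsimony pressure, and more), but the core loop of genetic feature generation can be sketched as: grow random expression trees, score them, keep the best, and breed new candidates from the fittest. The toy version below scores a tree by its absolute Pearson correlation with the target and uses only tournament selection and subtree mutation. The fitness measure, the parameters, and the synthetic data are all assumptions for illustration.

```python
import math
import operator
import random

FUNCTIONS = {"add": operator.add, "mul": operator.mul}
MAX_DEPTH = 3  # cap on nesting so trees (and their values) stay small


def random_tree(columns, rng, depth):
    """Grow a random expression tree with at most `depth` nested functions."""
    if depth == 0 or rng.random() < 0.3:
        return rng.choice(columns)
    return (rng.choice(sorted(FUNCTIONS)),
            random_tree(columns, rng, depth - 1),
            random_tree(columns, rng, depth - 1))


def evaluate(tree, row):
    if isinstance(tree, str):
        return row[tree]
    name, left, right = tree
    return FUNCTIONS[name](evaluate(left, row), evaluate(right, row))


def fitness(tree, rows, target):
    """Absolute Pearson correlation between the generated feature and the target."""
    xs = [evaluate(tree, r) for r in rows]
    mx, my = sum(xs) / len(xs), sum(target) / len(target)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, target))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in target))
    return 0.0 if sx == 0 or sy == 0 else abs(cov / (sx * sy))


def mutate(tree, columns, rng, depth=MAX_DEPTH):
    """Subtree mutation: replace a random subtree with a new random one,
    never exceeding `depth` levels of nesting."""
    if isinstance(tree, str) or depth == 0 or rng.random() < 0.3:
        return random_tree(columns, rng, depth)
    name, left, right = tree
    if rng.random() < 0.5:
        return (name, mutate(left, columns, rng, depth - 1), right)
    return (name, left, mutate(right, columns, rng, depth - 1))


def evolve(rows, target, columns, pop_size=30, generations=15, seed=0):
    rng = random.Random(seed)

    def score(tree):
        return fitness(tree, rows, target)

    population = [random_tree(columns, rng, MAX_DEPTH) for _ in range(pop_size)]
    history = []  # best fitness per generation
    for _ in range(generations):
        ranked = sorted(population, key=score, reverse=True)
        history.append(score(ranked[0]))
        elite = ranked[: pop_size // 5]  # best 20% survive unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            parent = max(rng.sample(population, 3), key=score)  # tournament of 3
            children.append(mutate(parent, columns, rng))
        population = elite + children
    return max(population, key=score), history


# Synthetic data: the target mostly depends on a product of two columns
data_rng = random.Random(42)
rows = [{"MinTemp": data_rng.uniform(0, 20),
         "Humidity": data_rng.uniform(20, 100),
         "Pressure": data_rng.uniform(990, 1030)} for _ in range(50)]
target = [r["MinTemp"] * r["Humidity"] + data_rng.gauss(0, 50) for r in rows]

best, history = evolve(rows, target, sorted(rows[0]))
print(best)
print([round(f, 3) for f in history])
```

Because the best 20% of each generation survive unchanged (elitism), the best fitness can never decrease from one generation to the next.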

The genetic_features attribute gives an overview of the newly generated features, their names, and their fitness (the score obtained during the genetic algorithm).

```python
atom.genetic_features
```

Note that because feature descriptions can become very long (as in the genetic_features overview), the new features are named feature n, where n means that it is the nth feature in the dataset.
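The naming scheme can be illustrated with a small hypothetical helper that maps each long description to a short feature n name. It assumes n is the new column's 1-based position after the existing columns; the column count of 21 is made up.

```python
def name_new_features(n_existing, descriptions):
    """Give each generated feature a short name 'feature n', where n is the
    1-based position the new column takes in the dataset (assumption)."""
    return {
        f"feature {n_existing + i}": desc
        for i, desc in enumerate(descriptions, start=1)
    }


descriptions = [
    "add(add(mul(MinTemp, WindDir3pm), Pressure3pm), mul(MaxTemp, MinTemp))",
    "mul(MaxTemp, MinTemp)",
]
names = name_new_features(21, descriptions)  # hypothetical: 21 existing columns
for name, desc in names.items():
    print(f"{name}: {desc}")
```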

```python
atom.dataset.head()
```

Train the model again:

```python
atom.run(models="LGB_gfg")
```

This time the model reaches an accuracy of 0.8824, much better than the baseline model's 0.8471!

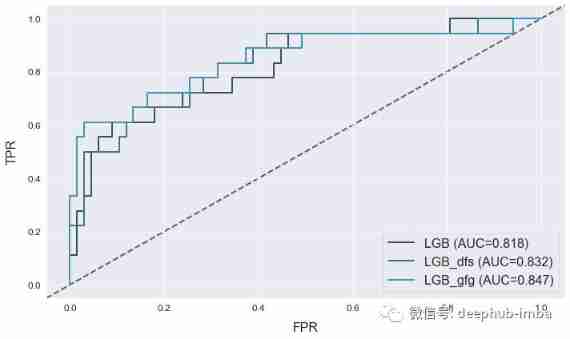
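To put the gain in perspective, the two reported accuracies can be compared in absolute terms, relative to the baseline, and as a reduction of the error rate (1 - accuracy). This is plain arithmetic on the numbers reported above.

```python
baseline_acc = 0.8471  # LGB, original features only
gfg_acc = 0.8824       # LGB_gfg, with 10 genetic features

absolute_gain = gfg_acc - baseline_acc
relative_gain = absolute_gain / baseline_acc
# The same gain expressed as the share of baseline errors that disappeared
error_reduction = absolute_gain / (1 - baseline_acc)

print(f"absolute gain:   {absolute_gain:.4f}")    # 0.0353
print(f"relative gain:   {relative_gain:.1%}")    # 4.2%
print(f"error reduction: {error_reduction:.1%}")  # 23.1%
```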

Result analysis

All three models are now trained, so the results can be analyzed. The results attribute shows the scores of all models on the training and test sets.

```python
atom.results
```

atom's plot methods can be used to compare the models' characteristics and performance further.

```python
atom.plot_roc()
```
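An ROC curve plots the true positive rate against the false positive rate as the decision threshold is lowered, and the area under it (AUC) summarizes the curve in one number. A minimal sketch of how those points and the area are computed, using hypothetical labels and scores:

```python
def roc_points(y_true, scores):
    """(FPR, TPR) pairs obtained by lowering the threshold through every
    distinct score, starting from (0, 0)."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve with the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2
               for (x1, y1), (x2, y2) in zip(points, points[1:]))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
pts = roc_points(y_true, scores)
print(pts)
print(auc(pts))  # 0.75
```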

atom can also draw multiple plots side by side, which makes it easy to see which features contribute most to each model's predictions:

```python
# One row with three side-by-side feature-importance plots
with atom.canvas(1, 3, figsize=(20, 8)):
    atom.lgb.plot_feature_importance(show=10, title="LGB")
    atom.lgb_dfs.plot_feature_importance(show=10, title="LGB + DFS")
    atom.lgb_gfg.plot_feature_importance(show=10, title="LGB + GFG")
```

For both non-baseline models, the generated features appear to be the most important ones, which indicates that they are relevant to the target column and contribute substantially to the models' predictions.

A decision plot shows the impact of the features on individual rows of the dataset:

```python
atom.lgb_dfs.decision_plot(index=0, show=15)
```
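A SHAP decision plot for one row starts at the model's base (expected) value and adds each feature's SHAP value in turn, from the least to the most important feature, ending at the model output for that row; show=15 limits how many features are drawn. The sketch below computes that cumulative path for hypothetical SHAP values, assumed to be in log-odds (the raw output space of a binary LightGBM model), and converts the end point to a probability with the logistic function. The helper decision_path and all values are hypothetical.

```python
import math


def decision_path(base_value, shap_values):
    """(feature, running total) pairs from the bottom of a decision plot to
    the top: least important feature first, each step adding its SHAP value."""
    ordered = sorted(shap_values.items(), key=lambda kv: abs(kv[1]))
    path, total = [], base_value
    for feature, value in ordered:
        total += value
        path.append((feature, total))
    return path


# Hypothetical base value and SHAP values (log-odds) for one row
base = -1.2
shap_row = {"Humidity3pm": 1.5, "feature 24": 0.9, "Pressure3pm": -0.3, "MinTemp": 0.1}
path = decision_path(base, shap_row)
for feature, value in path:
    print(f"{feature:>12}: {value:+.2f}")

# By SHAP's additivity, the end point equals base + sum of SHAP values
logit = path[-1][1]
print("P(rain tomorrow) =", round(1 / (1 + math.exp(-logit)), 3))
```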

Summary

This article compared how the new features generated by two automatic feature generation techniques affect model predictions. The results show that these techniques can significantly improve model performance: in this example, GFG raised the accuracy from 0.8471 to 0.8824, while DFS did not help. I used the ATOM package to simplify the training and modeling process; for more information about ATOM, please check the package's documentation.

https://www.overfit.cn/post/389b1d229dae4d0fb7b584cb37a350de

Author: Marco vd Boom
