
Introduction to the Classic Wide & Deep Model and Its TensorFlow 2 Implementation

2022-06-26 21:34:00 【Romantic data analysis】

Introduction to the Wide & Deep Model

Goal:

Understand the classic deep recommendation model Wide & Deep. The full title of the paper is 《Wide & Deep Learning for Recommender Systems》.

Google proposed the Wide & Deep model in 2016.

Content:

The following is excerpted directly from a clear and concise article by a Zhihu author (original text: Zhihu original).

This article introduces the classic deep recommendation model Wide & Deep. The full title of the paper is 《Wide & Deep Learning for Recommender Systems》.

1. Model Overview

The architecture of the Wide & Deep model is shown in the figure below.

As can be seen, the Wide & Deep model is divided into two parts: a wide part and a deep part.

- The wide part is a simple linear model. It is not limited to single features: in practice, many features are crossed. For example, if users who buy book A often go on to buy book B, the two features "book A" and "book B" are strongly correlated and can be trained as a cross feature.

- The deep part is a feed-forward neural network.

- The linear model and the feed-forward network are trained jointly.

- The wide part can be understood as a linear model that memorizes,

- while the deep part is good at generalization (reasoning).

- Combining the two gives the model both memorization and generalization, which makes the recommendations more accurate.

2. Recommendation System Architecture

When a user request arrives, the recommendation system first selects O(100) items the user may be interested in from a huge pool of items (the recall stage). These O(100) items are then fed into the model for ranking, and the top N items according to the model's ranking are returned to the user. Meanwhile, the user interacts with the displayed items (clicks, purchases, and so on). Finally, the user features, context features, item features, and user actions are logged; after processing, they become new training data for the model. The paper focuses on a ranking model built on the Wide & Deep architecture.
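To make the recall, rank, and top-N flow concrete, here is a minimal pure-Python sketch. The function name `recommend`, the `recall` and `score` callables, and the toy data are hypothetical stand-ins: in a real system, recall would be a separate retrieval model or index, and `score` would be the trained Wide & Deep model.

```python
import heapq
from typing import Callable, Dict, List


def recommend(
    user: Dict[str, str],
    all_items: List[str],
    recall: Callable[[Dict[str, str], List[str]], List[str]],
    score: Callable[[Dict[str, str], str], float],
    top_n: int = 20,
) -> List[str]:
    """Two-stage funnel: cheap recall over all items, then model-based ranking."""
    # Recall stage: narrow a very large catalogue down to O(100) candidates.
    candidates = recall(user, all_items)
    # Ranking stage: score only the candidates with the (expensive) model and
    # return the top-N items, highest score first.
    return heapq.nlargest(top_n, candidates, key=lambda item: score(user, item))


# Toy example (hypothetical data): recall keeps items from the user's city,
# and the "model" score is just a lookup table.
items = ["a", "b", "c", "d"]
item_city = {"a": "suzhou", "b": "xian", "c": "suzhou", "d": "suzhou"}
fake_scores = {"a": 0.2, "b": 0.9, "c": 0.7, "d": 0.5}


def toy_recall(user, all_items):
    return [i for i in all_items if item_city[i] == user["city"]]


def toy_score(user, item):
    return fake_scores[item]


print(recommend({"city": "suzhou"}, items, toy_recall, toy_score, top_n=2))  # -> ['c', 'd']
```

Item "b" has the highest score overall but is never ranked for this user, because recall already filtered it out; the ranking model only ever sees the recalled candidates.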

3. Wide Part

The wide part is simply a linear model $y = \mathbf{w}^T\mathbf{x} + b$, where $y$ is the prediction target, $\mathbf{x} = [x_1, x_2, \dots, x_d]$ is a vector of $d$ features, $\mathbf{w} = [w_1, w_2, \dots, w_d]$ are the model parameters, and $b$ is the bias. These $d$ features include both the raw input features and transformed features.
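As a minimal sketch of the formula $y = \mathbf{w}^T\mathbf{x} + b$, the wide part is just a dot product plus a bias. The function name `wide_linear` and the weights below are made up for illustration.

```python
from typing import Sequence


def wide_linear(w: Sequence[float], x: Sequence[float], b: float) -> float:
    """Wide part as a plain linear model: y = w . x + b."""
    if len(w) != len(x):
        raise ValueError("w and x must both have d entries")
    return sum(wi * xi for wi, xi in zip(w, x)) + b


# x = [x1, x2, x3]: two raw features plus one transformed (crossed) feature.
# The weights are made-up numbers for illustration only.
print(wide_linear([0.5, -1.0, 2.0], [1, 1, 1], 0.25))  # -> 1.75
```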

The most important transformed feature is called the cross-product transformation. Suppose $x_1$ is gender ($x_1 = 0$ for male, $x_1 = 1$ for female) and $x_2$ is a preference ($x_2 = 0$ if the user does not like watermelon, $x_2 = 1$ if they do). From $x_1$ and $x_2$ we can build a new feature $x_3 = (x_1 \land x_2)$: $x_3 = 1$ means the user is female and likes watermelon, and $x_3 = 0$ if the user is not female or does not like watermelon. This transformed $x_3$ is a cross-product transformation. Its purpose is to capture the effect of feature interactions on the prediction target, adding nonlinearity to the linear model.
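The paper writes the general cross-product transformation as $\phi_k(\mathbf{x}) = \prod_{i=1}^{d} x_i^{c_{ki}}$ with $c_{ki} \in \{0, 1\}$, where $c_{ki} = 1$ means feature $i$ takes part in cross $k$. A minimal sketch for binary features (the function name `cross_product` is our own) reproduces the gender/watermelon example:

```python
import math
from typing import Sequence


def cross_product(x: Sequence[int], c: Sequence[int]) -> int:
    """phi_k(x) = prod_i x_i ** c_ki for binary x_i and c_ki in {0, 1}.

    Features with c_ki == 0 contribute x_i ** 0 == 1 (also when x_i == 0,
    since Python evaluates 0 ** 0 as 1), so only the selected features matter.
    """
    return math.prod(xi ** ci for xi, ci in zip(x, c))


# x1 = gender (1 = female), x2 = likes watermelon (1 = yes); c = [1, 1] crosses both.
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", cross_product([x1, x2], [1, 1]))
# Only (1, 1) yields 1, i.e. x3 = (x1 && x2).
```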

This step amounts to manually extracting important relationships between pairs of features. In TensorFlow it is done with the function `tf.feature_column.crossed_column`.
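`crossed_column` does not keep a full table of every value combination; it hashes each combination into one of `hash_bucket_size` buckets. The pure-Python sketch below illustrates that idea only. It uses MD5 as a stand-in hash (Python's built-in `hash()` is randomized per process for strings), so its bucket ids will not match TensorFlow's own fingerprint-based hashing; the function name and the `|` separator are assumptions.

```python
import hashlib
from typing import Iterable


def hashed_cross(values: Iterable[object], hash_bucket_size: int) -> int:
    """Map one combination of feature values to a bucket id in [0, hash_bucket_size).

    Illustration only: the hash (MD5) and the separator are arbitrary choices,
    not TensorFlow's internal scheme.
    """
    key = "|".join(str(v) for v in values)
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % hash_bucket_size


# Hypothetical cross of (gender, likes_watermelon) into 10000 buckets.
bucket = hashed_cross([1, 1], 10000)
print(0 <= bucket < 10000)  # -> True
```

Hashing keeps the cross feature's size fixed no matter how many combinations occur, at the cost of occasional collisions between unrelated combinations.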

4. Deep Part

The deep part is a feed-forward neural network. High-dimensional sparse categorical features are first converted into low-dimensional dense vectors, for example through an embedding operation, and these vectors are then fed into the network's hidden layers for training. Each hidden layer computes

$$a^{(l+1)} = f\left(W^{(l)} a^{(l)} + b^{(l)}\right)$$

where $f$ is the activation function (e.g., ReLU), $a^{(l)}$ is the output of the previous hidden layer (layer $l$), $W^{(l)}$ are the trainable weights, and $b^{(l)}$ is the bias.
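A minimal pure-Python sketch of one hidden layer, $a^{(l+1)} = f(W^{(l)} a^{(l)} + b^{(l)})$ with $f$ = ReLU. The function names and the weights are illustrative only; stacking several such calls gives the deep part's hidden layers.

```python
from typing import List, Sequence


def relu(z: float) -> float:
    return max(0.0, z)


def hidden_layer(W: Sequence[Sequence[float]], a: Sequence[float],
                 b: Sequence[float]) -> List[float]:
    """One hidden layer: a^(l+1) = f(W^(l) a^(l) + b^(l)) with f = ReLU.

    W has one row per output unit; each row holds len(a) weights.
    """
    return [relu(sum(w_ij * a_j for w_ij, a_j in zip(row, a)) + b_i)
            for row, b_i in zip(W, b)]


# Made-up 2x3 weights: 3 inputs -> 2 hidden units.
W = [[1.0, -1.0, 0.5],
     [-2.0, 0.0, 1.0]]
a = [1.0, 2.0, 4.0]
b = [0.5, -1.0]
print(hidden_layer(W, a, b))  # -> [1.5, 1.0]
```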

5. Joint Training of Wide and Deep

The outputs of the wide and deep parts are combined through a weighted sum and then trained jointly with a logistic loss function. The wide part is usually trained with FTRL (Follow-the-Regularized-Leader, with L1 regularization), and the deep part with AdaGrad.
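For the deep part's optimizer, here is a minimal per-coordinate AdaGrad sketch (FTRL for the wide part is not shown). The class name, learning rate, and epsilon are assumptions; library implementations such as TensorFlow's differ in details like the initial accumulator value.

```python
import math
from typing import List


class AdaGrad:
    """Per-coordinate AdaGrad: each weight gets its own effective learning rate."""

    def __init__(self, dim: int, lr: float = 0.1, eps: float = 1e-8):
        self.lr = lr
        self.eps = eps
        self.accum = [0.0] * dim  # running sum of squared gradients, one per weight

    def step(self, w: List[float], grad: List[float]) -> List[float]:
        new_w = []
        for i, (wi, gi) in enumerate(zip(w, grad)):
            self.accum[i] += gi * gi
            # Weights that keep receiving large gradients take smaller steps.
            new_w.append(wi - self.lr * gi / (math.sqrt(self.accum[i]) + self.eps))
        return new_w


opt = AdaGrad(dim=2, lr=0.1)
w = [0.0, 0.0]
for _ in range(3):
    w = opt.step(w, [2.0, 0.0])  # same gradient every step
    print(w)
# The first weight moves by about 0.1, then 0.071, then 0.058;
# the second weight never receives a gradient and stays at 0.0.
```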

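For a logistic regression problem, the model's prediction is

$$P(Y=1 \mid \mathbf{x}) = \sigma\left(\mathbf{w}_{wide}^{T}[\mathbf{x}, \phi(\mathbf{x})] + \mathbf{w}_{deep}^{T} a^{(l_f)} + b\right)$$

where $Y$ is the binary label, $\sigma$ is the sigmoid function, $\phi(\mathbf{x})$ are the cross-product transformations of the raw features $\mathbf{x}$, $b$ is the bias, $\mathbf{w}_{wide}$ are the wide part's weights, and $\mathbf{w}_{deep}$ are the weights applied to the final hidden-layer activations $a^{(l_f)}$.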
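A minimal sketch of this prediction formula together with the logistic loss it is trained on. The function names and numbers are made up; in practice a framework computes the gradients of this single loss with respect to both the wide weights and the deep parameters, which is what makes the training joint.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def wide_deep_predict(w_wide, wide_features, w_deep, a_final, b) -> float:
    """P(Y=1|x) = sigmoid(w_wide . [x, phi(x)] + w_deep . a_final + b).

    wide_features: raw features x concatenated with cross-product features phi(x).
    a_final:       activations of the last hidden layer of the deep part.
    """
    logit = dot(w_wide, wide_features) + dot(w_deep, a_final) + b
    return sigmoid(logit)


def log_loss(y: int, p: float, eps: float = 1e-12) -> float:
    """Logistic (binary cross-entropy) loss for one example with label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


# Made-up numbers: the wide and deep contributions cancel, so p = sigmoid(0) = 0.5.
p = wide_deep_predict([1.0, -0.5], [1.0, 1.0], [0.25], [-2.0], 0.0)
print(p)               # -> 0.5
print(log_loss(1, p))  # about 0.693 (ln 2)
```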

6. System Implementation

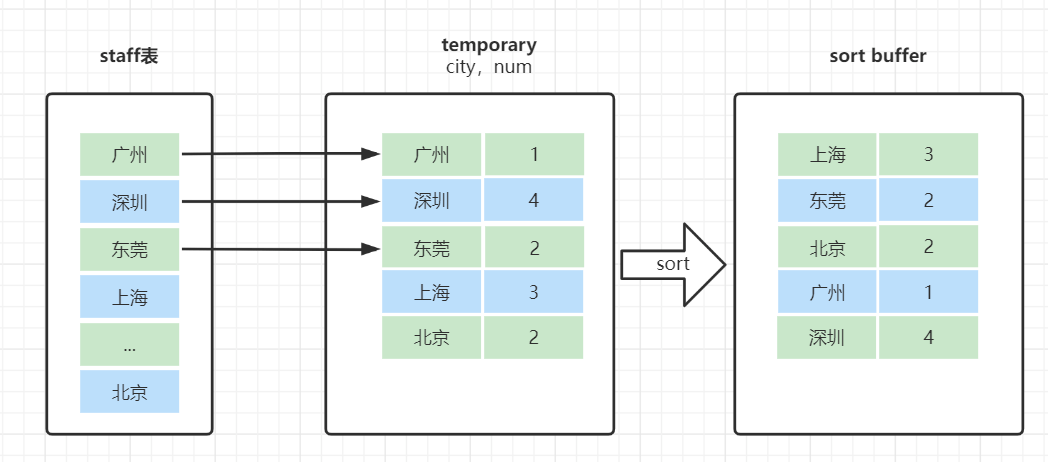

The implementation of the recommendation system is divided into three stages: data generation, model training, and model serving, as shown in the figure.

(1) Data generation stage

In this stage, user and item impression data from the last N days is used to generate training data. Each displayed item gets a target label, for example 1 if the user clicked it and 0 if not.
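A minimal sketch of turning impression logs into labeled examples. The log format (a `(day, user_id, item_id)` tuple per shown item plus a set of clicked tuples) and the function name `build_examples` are hypothetical; real pipelines also attach user, context, and item features to each example.

```python
from datetime import date, timedelta
from typing import Dict, List, Set, Tuple

# Hypothetical log format: one (day, user_id, item_id) tuple per displayed item.
Impression = Tuple[date, str, str]


def build_examples(impressions: List[Impression], clicks: Set[Impression],
                   today: date, n_days: int) -> List[Dict[str, object]]:
    """Keep impressions from the last n_days and label each 1 (clicked) or 0."""
    start = today - timedelta(days=n_days)
    examples = []
    for day, user_id, item_id in impressions:
        if start <= day < today:
            label = 1 if (day, user_id, item_id) in clicks else 0
            examples.append({"user": user_id, "item": item_id, "label": label})
    return examples


impressions = [(date(2022, 6, 25), "u1", "a"),
               (date(2022, 6, 25), "u1", "b"),
               (date(2022, 6, 1), "u1", "c")]  # too old for a 7-day window
clicks = {(date(2022, 6, 25), "u1", "a")}
print(build_examples(impressions, clicks, today=date(2022, 6, 26), n_days=7))
# -> [{'user': 'u1', 'item': 'a', 'label': 1}, {'user': 'u1', 'item': 'b', 'label': 0}]
```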

The Vocabulary Generation step in the figure is mainly used for data transformation. For example, each categorical feature value is converted to its corresponding integer ID, and continuous real-valued features are mapped to [0, 1] according to their cumulative distribution function.
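A minimal sketch of both transformations. The function names, the choice of reserving ID 0 for unseen values, and the use of the raw empirical CDF are assumptions (the paper additionally discretizes the CDF into quantiles). The city names reuse the vocabulary from the code section below.

```python
import bisect
from typing import Callable, Dict, List, Sequence


def build_vocab(values: Sequence[str]) -> Dict[str, int]:
    """Give each distinct categorical value an integer ID (0 is kept for unseen values)."""
    vocab: Dict[str, int] = {}
    for v in values:
        if v not in vocab:
            vocab[v] = len(vocab) + 1
    return vocab


def cdf_normalizer(train_values: Sequence[float]) -> Callable[[float], float]:
    """Return a function mapping x to the empirical CDF P(X <= x), a value in [0, 1]."""
    sorted_vals: List[float] = sorted(train_values)
    n = len(sorted_vals)

    def normalize(x: float) -> float:
        # Number of training values <= x, divided by the total count.
        return bisect.bisect_right(sorted_vals, x) / n

    return normalize


vocab = build_vocab(["beijin", "shanghai", "beijin", "xian"])
print(vocab)                    # -> {'beijin': 1, 'shanghai': 2, 'xian': 3}
print(vocab.get("chengdu", 0))  # -> 0 (unseen value)

norm = cdf_normalizer([10, 20, 30, 40])
print(norm(5), norm(20), norm(100))  # -> 0.0 0.5 1.0
```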

(2) Model training stage

The training samples produced in the data generation stage, containing sparse features, dense features, and labels, are fed into the model as input for training, as shown in the figure below.

The wide part contains the features produced by the cross-product transformation. In the deep part, each categorical feature first passes through an embedding layer and is concatenated with the dense features; the result then passes through 3 hidden layers and is finally combined with the wide part and passed through a sigmoid output.
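A minimal sketch of how the deep part's input vector is assembled: look up one embedding per categorical feature and concatenate the embeddings with the dense features. The table sizes, the embedding dimension of 4, the uniform initialization range, and the feature names are all hypothetical.

```python
import random
from typing import Dict, List


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> List[List[float]]:
    """Randomly initialised embedding table: one dim-sized vector per category ID."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.05, 0.05) for _ in range(dim)] for _ in range(vocab_size)]


def deep_input(categorical_ids: Dict[str, int],
               tables: Dict[str, List[List[float]]],
               dense: List[float]) -> List[float]:
    """Look up each categorical feature's embedding and concatenate the dense features."""
    vec: List[float] = []
    for name in sorted(categorical_ids):  # fixed order so positions are stable
        vec.extend(tables[name][categorical_ids[name]])
    vec.extend(dense)
    return vec


tables = {"city": make_embedding_table(8, 4), "genre": make_embedding_table(5, 4, seed=1)}
x = deep_input({"city": 3, "genre": 2}, tables, [0.5, 0.25])
print(len(x))  # -> 10 (4 + 4 embedding values + 2 dense features)
```

The resulting vector is what the hidden layers consume; during training the embedding tables are updated by backpropagation like any other weights.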

The paper also mentions that because Google's training data exceeds 500 billion samples, retraining on all samples every time would be very costly and slow. To solve this, a new model is initialized with the embeddings and the linear model weights of the previous model.
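A minimal sketch of this warm start for one embedding table, using plain dictionaries. The function name, the dictionary representation, and the random initialization for new values are assumptions; the same copy-if-known idea applies to the wide part's linear weights.

```python
import random
from typing import Dict, List


def warm_start(old_emb: Dict[str, List[float]], new_vocab: List[str],
               dim: int, seed: int = 0) -> Dict[str, List[float]]:
    """Initialise a new embedding table from an old model's table.

    Values the old model already knew reuse their trained vectors; values that
    are new since the last training run get a small random initialisation.
    """
    rng = random.Random(seed)
    new_emb: Dict[str, List[float]] = {}
    for value in new_vocab:
        if value in old_emb:
            new_emb[value] = list(old_emb[value])  # copy, so the old model is untouched
        else:
            new_emb[value] = [rng.uniform(-0.05, 0.05) for _ in range(dim)]
    return new_emb


old = {"beijin": [0.1, 0.2], "xian": [0.3, 0.4]}
new = warm_start(old, ["beijin", "xian", "suzhou"], dim=2)
print(new["beijin"])       # -> [0.1, 0.2] (reused from the old model)
print(len(new["suzhou"]))  # -> 2 (freshly initialised)
```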

(3) Model serving stage

Once the trained model has been verified, it can be deployed. For each user request, the server first retrieves the candidate set the user may be interested in, then feeds these candidates into the model for prediction. The candidates are sorted by predicted score from high to low, and the top N are returned to the user.

- Note that Wide & Deep is used in the ranking layer, not in the recall layer. Why? Training and prediction with Wide & Deep still involve many operations and take considerable time; applying it to the full, large-scale item pool in the recall stage would be too expensive.

- Only after the recall layer has reduced the data from millions of items to hundreds can Wide & Deep rank these roughly 100 recalled items and return the top N (e.g., N = 20).

7. Summary

The Wide & Deep model is used in the ranking layer. It consists of two parts: wide and deep.

* The wide part can be understood as a linear model that memorizes,

* while the deep part is good at generalization (reasoning).

* Combining the two gives the model both memorization and generalization, which makes the recommendations more accurate.

8. Code:

First implement the deep part, then the wide part; then concatenate the outputs of the two parts and apply a final sigmoid activation.

deep part

1. Embed the categorical features

```python
import tensorflow as tf

# genre features vocabulary (here used for the 'city' feature)
genre_vocab = ['beijin', 'shanghai', 'shenzhen', 'chengdu', 'xian', 'suzhou', 'guangzhou']

GENRE_FEATURES = {
    'city': genre_vocab
}

# all categorical features
categorical_columns = []
for feature, vocab in GENRE_FEATURES.items():
    # map each string value to an integer ID using the vocabulary list
    cat_col = tf.feature_column.categorical_column_with_vocabulary_list(
        key=feature, vocabulary_list=vocab)
    # learn a 10-dimensional dense embedding for each ID
    emb_col = tf.feature_column.embedding_column(cat_col, 10)
    categorical_columns.append(emb_col)
```

2. Concatenate the embedding vectors with the regular numerical features and feed them into an MLP

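```python
# deep part for all input features
# `inputs` (the model's input layers) and `user_numerical_columns` are not shown
# in this excerpt; they are defined in the full code in the GitHub repository.
deep = tf.keras.layers.DenseFeatures(user_numerical_columns + categorical_columns)(inputs)
deep = tf.keras.layers.Dense(128, activation='relu')(deep)
deep = tf.keras.layers.Dense(128, activation='relu')(deep)
```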

wide part

1. Manually select some important features that are related to each other and cross them with the TensorFlow function:

```python
tf.feature_column.crossed_column([movie_col, rated_movie], hash_bucket_size=10000)
```

2. Then apply multi-hot encoding to the crossed feature:

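```python
# TensorFlow signature: tf.feature_column.indicator_column(categorical_column)
# Docstring: "Represents multi-hot representation of given categorical column."
# movie_col and rated_movie are categorical columns defined elsewhere in the full code.
crossed_feature = tf.feature_column.indicator_column(
    tf.feature_column.crossed_column([movie_col, rated_movie], 10000))
```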
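To see what the multi-hot representation looks like, here is a minimal pure-Python sketch that turns a list of bucket IDs into a dense vector of length `num_buckets`. The function name is our own, and in this sketch a repeated ID is counted; with 10000 buckets, as in the code above, the vector would have 10000 entries, almost all zero.

```python
from typing import Iterable, List


def multi_hot(bucket_ids: Iterable[int], num_buckets: int) -> List[float]:
    """Dense multi-hot vector: position i holds how often bucket ID i occurs."""
    vec = [0.0] * num_buckets
    for i in bucket_ids:
        vec[i] += 1.0
    return vec


print(multi_hot([2, 5], 8))  # -> [0.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```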

3. Convert the sparse representation into a dense vector:

```python
# wide part for cross feature
wide = tf.keras.layers.DenseFeatures([crossed_feature])(inputs)
```

wide+deep

The outputs of the two parts are concatenated and fed into a single output neuron (the code below uses a sigmoid activation), which produces the final predicted score.

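```python
# concatenate the deep and wide outputs
both = tf.keras.layers.concatenate([deep, wide])
# one sigmoid unit gives the predicted probability
output_layer = tf.keras.layers.Dense(1, activation='sigmoid')(both)
model = tf.keras.Model(inputs, output_layer)
```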

Training run output:

Note: this uses the same data as the previous 3 articles. Results:

```text
5319/5319 [==============================] - 115s 21ms/step - loss: 67599.8828 - accuracy: 0.5150 - auc: 0.5041 - auc_1: 0.4693
Epoch 2/5
5319/5319 [==============================] - 114s 21ms/step - loss: 0.6549 - accuracy: 0.6526 - auc: 0.7150 - auc_1: 0.6806
Epoch 3/5
5319/5319 [==============================] - 118s 22ms/step - loss: 0.6326 - accuracy: 0.6722 - auc: 0.7363 - auc_1: 0.7065
Epoch 4/5
5319/5319 [==============================] - 116s 22ms/step - loss: 0.6173 - accuracy: 0.6792 - auc: 0.7410 - auc_1: 0.7133
Epoch 5/5
5319/5319 [==============================] - 113s 21ms/step - loss: 0.6067 - accuracy: 0.6840 - auc: 0.7435 - auc_1: 0.7176
1320/1320 [==============================] - 21s 15ms/step - loss: 0.6998 - accuracy: 0.5645 - auc: 0.5718 - auc_1: 0.5391

Test Loss 0.6997529864311218, Test Accuracy 0.5645247101783752, Test ROC AUC 0.5717922449111938, Test PR AUC 0.539068877696991
```

As the log shows, accuracy on the training data improves steadily over the epochs, but training is also time-consuming (roughly two minutes per epoch).

However, accuracy on the test set (0.5645) is well below the final training accuracy (0.6840) and has not improved significantly. Perhaps the test set contains many new users with no prior behavioral data, or there is not enough data for training, so the model's accuracy is limited.

9. Complete Code on GitHub:

Address: https://github.com/jiluojiluo/recommenderSystemForFlowerShop
