
Android MediaCodec hardware encoding to an H264 file (4), ByteDance Android interview

2022-06-26 21:05:00 【m0_ sixty-six million two hundred and sixty-four thousand one h】

}

// Call stop() to return the codec to the Uninitialized state

codec.stop();

// Call release() to free the codec's resources and finish

codec.release();

Code walkthrough

MediaFormat setup

First, create and configure a MediaFormat object, which describes the format of the media data. For video, the following properties should be set:

Color format

Bit rate

Bitrate control mode

Frame rate

I-frame interval

Bit rate is the number of bits transmitted per unit of time, usually expressed in kbps (kilobits per second). Frame rate is the number of frames displayed per second.

There are three bitrate control modes:

BITRATE_MODE_CQ

Constant quality: the bit rate is not controlled, and image quality is preserved as far as possible.

BITRATE_MODE_VBR

Variable bit rate: MediaCodec adjusts the output bit rate dynamically according to the complexity of the image content. Complex images get a higher bit rate, simple images a lower one.

BITRATE_MODE_CBR

Constant bit rate: MediaCodec holds the output bit rate at the configured value.

As for the color format: YUV data is being encoded to H264, and YUV comes in many layouts, which raises device-compatibility issues. Handle the format carefully when encoding camera frames. For example, if the camera delivers NV21 and the MediaFormat uses COLOR_FormatYUV420SemiPlanar (the NV12 layout), the data must first be converted from NV21 to NV12.
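The loop later in this article calls NV21ToNV12 without showing its body. As a minimal sketch of what such a conversion could look like (the class and method names here are illustrative, and this is not necessarily the author's implementation): both layouts share the same Y plane, and differ only in the order of the interleaved chroma bytes (V,U in NV21; U,V in NV12). The sketch assumes even width and height.

```java
import java.util.Arrays;

/** Sketch of an NV21 -> NV12 converter; names are illustrative, not from the article. */
final class Nv21ToNv12Sketch {

    /**
     * Copies the Y plane unchanged and swaps each interleaved V/U pair into U/V order.
     * Both arrays must hold at least width * height * 3 / 2 bytes.
     */
    static void nv21ToNv12(byte[] nv21, byte[] nv12, int width, int height) {
        int ySize = width * height;
        int total = ySize * 3 / 2;
        if (nv21.length < total || nv12.length < total) {
            throw new IllegalArgumentException("buffer smaller than width * height * 3 / 2");
        }
        // The luma plane is identical in both layouts.
        System.arraycopy(nv21, 0, nv12, 0, ySize);
        // Chroma plane: NV21 stores V,U,V,U...; NV12 stores U,V,U,V...
        for (int i = ySize; i + 1 < total; i += 2) {
            nv12[i] = nv21[i + 1]; // U
            nv12[i + 1] = nv21[i]; // V
        }
    }

    public static void main(String[] args) {
        // A 2x2 frame: four luma bytes followed by one V/U pair.
        byte[] nv21 = {10, 20, 30, 40, /* V */ 1, /* U */ 2};
        byte[] nv12 = new byte[nv21.length];
        nv21ToNv12(nv21, nv12, 2, 2);
        System.out.println(Arrays.toString(nv12)); // prints [10, 20, 30, 40, 2, 1]
    }
}
```

Writing into a caller-supplied destination array matches the call shape used in the article's loop and lets the caller reuse one buffer across frames if desired.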

The I-frame interval is how often an I frame appears in the H264 stream. KEY_I_FRAME_INTERVAL is expressed in seconds.

A complete MediaFormat setup example:

MediaFormat mediaFormat = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, width, height);

mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420SemiPlanar);

// Bit rate

mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, width * height * 5);

// Bitrate control mode

mediaFormat.setInteger(MediaFormat.KEY_BITRATE_MODE, MediaCodecInfo.EncoderCapabilities.BITRATE_MODE_VBR);

// Set frame rate

mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 30);

// Set the I-frame interval (in seconds)

mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);
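To make the numbers in this setup concrete, the sketch below works through the arithmetic in plain Java. The 1280x720 frame size is an illustrative assumption (the article does not state the resolution), and the class and method names are hypothetical. Under VBR the per-frame figure is only an average budget, not a guarantee.

```java
/** Arithmetic behind the MediaFormat values above; names and the 1280x720 size are illustrative. */
final class EncoderBudgetSketch {

    /** Bit rate in bits per second, using the article's width * height * 5 rule. */
    static int bitRate(int width, int height) {
        return width * height * 5;
    }

    /** Number of frames between key frames, given an I-frame interval in seconds. */
    static int framesPerKeyFrame(int frameRate, int iFrameIntervalSeconds) {
        return frameRate * iFrameIntervalSeconds;
    }

    /** Average encoded size of one frame in bytes at the given bit rate and frame rate. */
    static int averageBytesPerFrame(int bitRate, int frameRate) {
        return bitRate / frameRate / 8;
    }

    public static void main(String[] args) {
        int bitRate = bitRate(1280, 720);
        System.out.println(bitRate);                           // prints 4608000 (4608 kbps)
        System.out.println(framesPerKeyFrame(30, 1));          // prints 30
        System.out.println(averageBytesPerFrame(bitRate, 30)); // prints 19200
    }
}
```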

Once encoding starts, an encoder thread is launched to process the YUV data returned by the camera preview.

A camera wrapper library is used here:

https://github.com/glumes/EzCameraKit

Codec operation

The codec loop is as follows:

while (isEncoding) {

// YUV Color format conversion

if (!mEncodeDataQueue.isEmpty()) {

input = mEncodeDataQueue.poll();

byte[] yuv420sp = new byte[mWidth * mHeight * 3 / 2];

NV21ToNV12(input, yuv420sp, mWidth, mHeight);

input = yuv420sp;

}

if (input != null) {

try {

// Take an available buffer from the input queue, fill it with data, and queue it again

ByteBuffer[] inputBuffers = mMediaCodec.getInputBuffers();

ByteBuffer[] outputBuffers = mMediaCodec.getOutputBuffers();

int inputBufferIndex = mMediaCodec.dequeueInputBuffer(-1);

if (inputBufferIndex >= 0) {

// Calculate timestamp

pts = computePresentationTime(generateIndex);

ByteBuffer inputBuffer = inputBuffers[inputBufferIndex];

inputBuffer.clear();

inputBuffer.put(input);

mMediaCodec.queueInputBuffer(inputBufferIndex, 0, input.length, pts, 0);

generateIndex += 1;

}

MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();

int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_USEC);

// Take encoded data from the output queue, process it, then release the buffer

while (outputBufferIndex >= 0) {

ByteBuffer outputBuffer = outputBuffers[outputBufferIndex];

byte[] outData = new byte[bufferInfo.size];

outputBuffer.get(outData);

// flags is a bit field; each defined flag is a distinct power of 2

if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) { // Codec configuration data, i.e. SPS and PPS

mOutputStream.write(outData, 0, outData.length);

} else if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_KEY_FRAME) != 0) { // Key frame

mOutputStream.write(outData, 0, outData.length);

} else {

// Neither a key frame nor SPS/PPS: may be a B frame or a P frame; write it straight to the file

mOutputStream.write(outData, 0, outData.length);

}

mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);

outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_USEC);

}

} catch (IOException e) {

Log.e(TAG, e.getMessage());

}

} else {

try {

Thread.sleep(500);

} catch (InterruptedException e) {

Log.e(TAG, e.getMessage());

}

}

}

First, the camera's NV21 data is converted to NV12. Then dequeueInputBuffer takes a buffer from the queue of available input buffers, the data is filled in, and queueInputBuffer puts the buffer back on the queue.

dequeueInputBuffer returns a buffer index; an index less than 0 means no buffer is currently available. Its timeoutUs parameter is the timeout: because MediaCodec is being used in synchronous mode, the call blocks for up to that long when no buffer is available, and blocks indefinitely if the value is negative.

When queueing data, queueInputBuffer takes the index of the dequeued buffer, plus the buffer's timestamp presentationTimeUs and a flags value describing the buffer.

The timestamp is normally the time at which the buffer should be rendered. The flags value identifies what kind of buffer this is:

BUFFER_FLAG_CODEC_CONFIG

The buffer carries codec initialization data rather than media data.

BUFFER_FLAG_END_OF_STREAM

End marker: the buffer is the last one, at the end of the stream.

BUFFER_FLAG_KEY_FRAME

The buffer contains a key frame, that is, an I frame.

When encoding, you can compute the timestamp of the current buffer or simply pass 0, and the flags can also be passed as 0.
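The loop above calls computePresentationTime(generateIndex) without showing its body. One plausible way to derive a timestamp from a frame counter is sketched below; this is an assumption about how such a helper might work, not the article's actual code, and the class name and frameRate parameter are hypothetical. Timestamps are in microseconds, matching presentationTimeUs.

```java
/** Sketch of deriving a presentation timestamp from a frame index; names are illustrative. */
final class PresentationTimeSketch {

    /**
     * Returns the presentation time in microseconds of the given frame,
     * assuming frames are evenly spaced at frameRate frames per second.
     */
    static long computePresentationTimeUs(long frameIndex, int frameRate) {
        return frameIndex * 1_000_000L / frameRate;
    }

    public static void main(String[] args) {
        System.out.println(computePresentationTimeUs(0, 30));  // prints 0
        System.out.println(computePresentationTimeUs(1, 30));  // prints 33333
        System.out.println(computePresentationTimeUs(30, 30)); // prints 1000000
    }
}
```

Multiplying before dividing keeps the rounding error from accumulating across frames, which is why the 30th frame lands exactly on one second.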

After data has been passed to MediaCodec, dequeueOutputBuffer retrieves the encoded data. Besides the timeout, it takes a MediaCodec.BufferInfo object, which is filled with the size, offset, and flags of the encoded data.
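The offset and size describe which part of the output buffer holds valid data. The sketch below shows, with a plain java.nio.ByteBuffer and no Android classes, one way to copy exactly that region; the class and method names are hypothetical, and the offset and size arguments stand in for the BufferInfo fields.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Sketch of copying the valid region of an output buffer; names are illustrative. */
final class OutputSliceSketch {

    /** Copies size bytes starting at offset, leaving the caller's buffer position untouched. */
    static byte[] copyValidBytes(ByteBuffer buffer, int offset, int size) {
        ByteBuffer view = buffer.duplicate(); // shares content, has its own position and limit
        view.clear();                         // position = 0, limit = capacity
        view.limit(offset + size);            // set the limit first so the position stays legal
        view.position(offset);
        byte[] out = new byte[size];
        view.get(out);
        return out;
    }

    public static void main(String[] args) {
        ByteBuffer buffer = ByteBuffer.wrap(new byte[] {0, 1, 2, 3, 4, 5, 6, 7});
        byte[] valid = copyValidBytes(buffer, 2, 3);
        System.out.println(Arrays.toString(valid)); // prints [2, 3, 4]
    }
}
```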

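After reading the data described by MediaCodec.BufferInfo, different operations can be performed according to its flags. In the code above, codec configuration data (SPS and PPS), key frames, and all other frames are written to the output file in the same way.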
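The flag checks can be tried outside Android with a small sketch. The constants below are local copies of the MediaCodec values (BUFFER_FLAG_KEY_FRAME = 1, BUFFER_FLAG_CODEC_CONFIG = 2, BUFFER_FLAG_END_OF_STREAM = 4), declared here only so the example runs on a plain JVM; the class, method, and returned labels are hypothetical.

```java
/** Sketch of classifying an output buffer by its flags; mirrors the if/else chain in the loop. */
final class BufferFlagsSketch {

    // Local mirrors of the MediaCodec constants so the example runs without Android.
    static final int BUFFER_FLAG_KEY_FRAME = 1;
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    /** Returns a label for the buffer, checking codec config first, then key frame. */
    static String classify(int flags) {
        if ((flags & BUFFER_FLAG_CODEC_CONFIG) != 0) {
            return "codec-config"; // SPS and PPS
        } else if ((flags & BUFFER_FLAG_KEY_FRAME) != 0) {
            return "key-frame";    // I frame
        } else {
            return "other";        // P or B frame
        }
    }

    public static void main(String[] args) {
        System.out.println(classify(BUFFER_FLAG_CODEC_CONFIG)); // prints codec-config
        System.out.println(classify(BUFFER_FLAG_KEY_FRAME | BUFFER_FLAG_END_OF_STREAM)); // prints key-frame
        System.out.println(classify(0)); // prints other
    }
}
```

Because each flag occupies its own bit, several can be set at once, which is why the checks use a bitwise AND rather than an equality comparison.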

Finally

In interviews, I have seen many technical leads meet older programmers, sometimes older than the interviewer, who are going through a period of confusion. These candidates have something in common: they may have worked for seven or eight years, writing code for a business unit every day, with highly repetitive work and little that is technically demanding. When asked about their career plans, they have few ideas.

In fact, the years from 30 to 40 are the golden age of a career. Be sure to expand within your own area of work and have a plan for improving both the breadth and the depth of your technical skills. That makes for a sustainable career path rather than stagnation.

Keep running, and you will know the meaning of learning!


边栏推荐

- Browser event loop

- windows系统下怎么安装mysql8.0数据库?(图文教程)

- 【连载】说透运维监控系统01-监控系统概述

- 【山东大学】考研初试复试资料分享

- VB.net类库(进阶——2 重载)

- Simple Lianliankan games based on QT

- Treasure and niche cover PBR multi-channel mapping material website sharing

- 基于QT开发的线性代数初学者的矩阵计算器设计

- The source code that everyone can understand (I) the overall architecture of ahooks

- C: Reverse linked list

猜你喜欢

Detailed explanation of retrospective thinking

![[Bayesian classification 3] semi naive Bayesian classifier](/img/9c/070638c1a613be648466e4f2bc341e.png)

[Bayesian classification 3] semi naive Bayesian classifier

IDEA 报错:Process terminated【已解决】

![[Shandong University] information sharing for the first and second examinations of postgraduate entrance examination](/img/f9/68b5b5ce21f4f851439fa061b477c9.jpg)

[Shandong University] information sharing for the first and second examinations of postgraduate entrance examination

Vi/vim editor

云计算技术的发展与芯片处理器的关系

Establish a connection with MySQL

Idea error: process terminated

Android IO, a first-line Internet manufacturer, is a collection of real questions for senior Android interviews

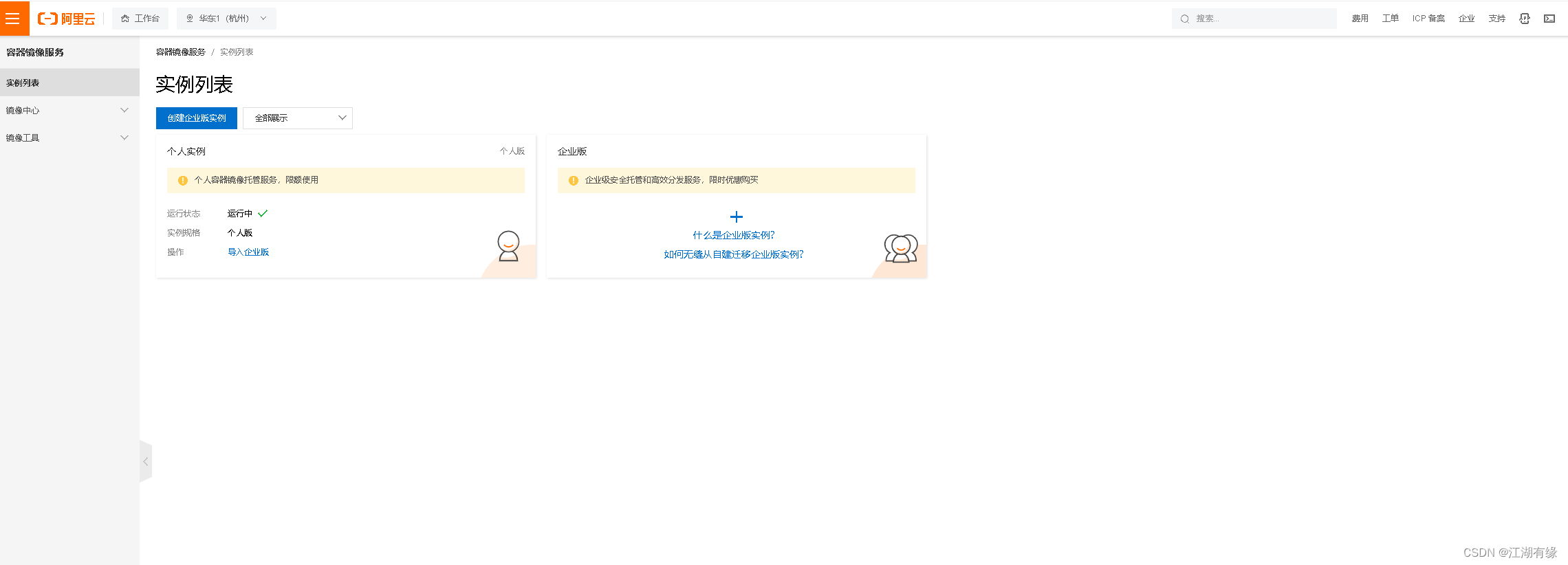

阿里云个人镜像仓库日常基本使用

随机推荐

[Bayesian classification 4] Bayesian network

c语言99乘法表

Looking back at the moon

Redis + Guava 本地缓存 API 组合,性能炸裂!

leetcode刷题:字符串01(反转字符串)

C language 99 multiplication table

动态规划111

Super VRT

The postgraduate entrance examination in these areas is crazy! Which area has the largest number of candidates?

Dynamic planning 111

windows系統下怎麼安裝mysql8.0數據庫?(圖文教程)

Treasure and niche cover PBR multi-channel mapping material website sharing

基于SSH框架的学生信息管理系统

QT based "synthetic watermelon" game

Record a redis large key troubleshooting

Separate save file for debug symbols after strip

Stringutils judge whether the string is empty

Gamefi active users, transaction volume, financing amount and new projects continue to decline. Can axie and stepn get rid of the death spiral? Where is the chain tour?

大家都能看得懂的源码(一)ahooks 整体架构篇

Developer survey: rust/postgresql is the most popular, and PHP salary is low