当前位置:网站首页>[Bayesian classification 4] Bayesian network

[Bayesian classification 4] Bayesian network

2022-06-26 20:38:00 【NoBug ㅤ】

List of articles

1. Knowledge review of semi naive Bayesian classifier

Semi naive Bayesian classifier The principle of is to properly consider the dependency information between some attributes . The most commonly used strategy to consider is dependent estimation , There is a super - dependent estimation (SPODE), Average independent dependence estimation (AODE), Tree augmentation naive Bayes (TAN).

Dependent estimation of superhusband It is to directly make all attributes depend on the same attribute , This property, which is jointly dependent on other properties, is called “ Superhusband ”, Super husband choice is not always it , Cross validation can be used , We choose the model with the best training effect .

Average independent dependence estimation Is to treat each attribute as a SPODE Model , but P ( c ) P(c) P(c) Change into P ( c , x i ) P(c,x_i) P(c,xi), But this model requires that the training set be large enough , Define a threshold m ′ m' m′, requirement ∣ D x i ∣ ≥ m ′ |D_{x_i}|\geq m' ∣Dxi∣≥m′.

Tree augmentation naive Bayes , Based on the maximum weighted spanning tree algorithm , Calculate the conditional mutual information between two attributes , I ( x i , x j ∣ y ) I(x_i,x_j|y) I(xi,xj∣y) The bigger it is , It means the stronger the dependence .

2. Bayesian network learning notes

2.1 introduction

Bayesian network is also called “ Faith net ”, It uses directed acyclic graph to describe the dependency between attributes , The conditional probability table is used to describe the joint probability distribution of attributes , It's a probability graph model , Help people apply probability and statistics to complex fields , Tools for uncertainty reasoning and numerical analysis .

Bayesian network is a directed acyclic graph that describes the dependence between variables from the perspective of conditional probability (DAG). Reveal the dependencies between variables , Also known as causality ( Is the characteristic of Bayesian network ), It simulates the uncertainty of causality in human reasoning .

2.2 Knowledge cards

1. Bayesian network :Bayesian Network, abbreviation BN

2. Faith net :belief network

3. Conditional probability table :Conditional Probability Table, abbreviation CPT

4. Causal relationship

5. Directed acyclic graph :Directed Acyclic Graph, abbreviation DAG

2.3 Probability graph model (PGM)

2.3.1 introduction

Probability graph model (Probabilistic Graphical Model), Combining probability theory and graph theory , It refers to a probability model that describes the conditional independence between multivariate random variables with a graph structure ( Pay attention to conditional independence ), So it brings great convenience to study the probability model of high-dimensional space . It can be divided into Bayesian networks and Markov networks .

We hope to be able to mine the knowledge hidden in the data , The probability graph constructs such a graph . The probability diagram directly shows the relationship of conditional independence between random variables .

2.3.2 Why introduce the probability graph ?

Higher order variables (k Dimensional random variable ), Y = [ X 1 , X 2 , . . . , X k ] Y=[X_1,X_2,...,X_k] Y=[X1,X2,...,Xk], Suppose each random variable is discrete , Values are m m m individual . So without any independence , You need to m k − 1 m^k-1 mk−1 Only one parameter can represent its probability distribution , The parameters are exponential .

An effective way to reduce the number of parameters is the independence assumption . First of all, will k The joint probability decomposition of dimensional random variables is k The product of conditional probabilities , that P ( y ) = ∏ k = 1 k P ( x k ∣ x 1 , x 2 , . . . , x k − 1 ) P(y)=\prod_{k=1}^kP(x_k|x_1,x_2,...,x_{k-1}) P(y)=∏k=1kP(xk∣x1,x2,...,xk−1). If two variables are independent , Then the condition of conditional probability will be reduced .

example : It is known that X 1 X_1 X1 when , X 2 X_2 X2 and X 3 X_3 X3 Independent , X 1 X_1 X1 and X 4 X_4 X4 Independent . be P ( x 2 ∣ x 1 , x 3 ) = P ( x 2 ∣ x 1 ) P(x_2|x_1,x_3)=P(x_2|x_1) P(x2∣x1,x3)=P(x2∣x1), P ( x 3 ∣ x 1 , x 2 ) = P ( x 3 ∣ x 1 ) P(x_3|x_1,x_2)=P(x_3|x_1) P(x3∣x1,x2)=P(x3∣x1). Then the joint probability P ( y ) = P ( x 1 ) P ( x 2 ∣ x 1 ) P ( x 3 ∣ x 1 ) P ( x 4 ∣ x 2 , x 3 ) P(y)=P(x_1)P(x_2|x_1)P(x_3|x_1)P(x_4|x_2,x_3) P(y)=P(x1)P(x2∣x1)P(x3∣x1)P(x4∣x2,x3).

2.3.3 Three basic problems of probability graph ( Express , Study , infer )

- Express problem : For a probability model , How to describe the dependency relationship between variables through graph structure .

- Learning problems : Graph model learning includes graph structure learning and parameter learning .

- Infer the problem : When some variables are known , Calculate the conditional probability distribution of other variables .

2.4 Bayesian networks

2.4.1 Expression of Bayesian network

- form

A Bayesian network B B B By structure G G G And parameters θ \theta θ Two parts make up , namely B = < G , θ > B=<G,\theta> B=<G,θ>. Network structure G G G It's a directed acyclic graph , Each node corresponds to an attribute . If two attributes have direct dependency , Then they are connected by an edge ; Parameters θ \theta θ Describe this dependency quantitatively . Suppose the attribute x i x_i xi stay G G G The parent node set in is π i \pi_i πi, be θ \theta θ A conditional probability table containing each attribute θ x i ∣ π i = P B ( x i ∣ π i ) \theta_{x_i|\pi_i}=P_B(x_i|\pi_i) θxi∣πi=PB(xi∣πi)

- Example

From the network structure in the figure, we can see ,“ Colour and lustre ” Depend directly on “ Good melon “ and " Sweetness ”, and " roots " Is directly dependent on " Sweetness ”,“ Knock sound " Depend directly on " Good melon ”. From the conditional probability table, we can get " roots " Yes " Sweetness " Quantify dependencies , Such as P( roots = Curl up | Sweetness = high )=0.9.

2.4.2 structure

2.4.2.1 Joint probability distribution

Joint probability distribution of discrete random variables , Bayesian network structure effectively expresses the conditional independence between attributes . Given the parent node set π i \pi_i πi, Bayesian network assumes that each attribute is independent of its non descendant attribute , therefore B = < D , θ > B=<D,\theta> B=<D,θ> Attribute x 1 , x 2 , . . . , x d x_1,x_2,...,x_d x1,x2,...,xd The joint probability distribution of is defined as :

P B ( x 1 , x 2 , . . . , x d ) = ∏ i = 1 d P B ( x i ∣ π i ) = ∏ i = 1 d θ x i ∣ π i P_B(x_1,x_2,...,x_d)=\prod_{i=1}^dP_B(x_i|\pi_i)=\prod_{i=1}^d\theta_{x_i|\pi_i} PB(x1,x2,...,xd)=i=1∏dPB(xi∣πi)=i=1∏dθxi∣πi

2.4.2.2 Attribute independent notation

x 3 and x 4 stay to set x 1 Of take value when single state : x 3 ⊥ x 4 ∣ x 1 x_3 and x_4 In the given x_1 Is independent :x_3\bot x_4|x_1 x3 and x4 stay to set x1 Of take value when single state :x3⊥x4∣x1

2.4.2.3 Typical dependencies of three variables in Bayesian networks

The same parent structure

Given parent node x 1 x_1 x1 The value of , be x 3 x_3 x3 And x 4 x_4 x4 Conditions are independent .V Type structure

Give stator nodes x 4 x_4 x4 The value of , x 1 x_1 x1 And x 2 x_2 x2 There is no need to be independent .

if x 4 x_4 x4 The value of is completely unknown , but x 1 x_1 x1 And x 2 x_2 x2 But they are independent of each other .Sequential structure

Given x x x Value , be y y y And z z z Conditions are independent .

2.4.2.4 Moral map

【 introduction 】

" Moralization " Implication of : Parents of children should build strong relationships , Otherwise it is immoral .

In order to analyze the conditional independence of variables in a directed graph , You can use “ Directed separation ”, Turn a directed graph into an undirected graph , The undirected graph produced at this time is called moral graph . Based on the moral map can be intuitive 、 Quickly find the conditional independence between variables .

【 step 】

- Find all of them in a digraph V Type structure , stay V An undirected edge is added between the two parent nodes of a type structure ;

- Change all directed edges to undirected edges .

【 principle 】

Suppose there are variables in the moral map x , y x,y x,y And variable sets z = { z i } z=\{z_i\} z={ zi}, If the variable x x x and y y y Be able to be z z z Separate , That is to set variables from the moral map z z z After removing ( Delete nodes and associated edges ), x x x and y y y Belong to two connected branches , It's called a variable x x x and y y y By z z z Directed separation , x ⊥ y ∣ z x\bot y|z x⊥y∣z establish .

【 Example 】

Moralization

x 3 ⊥ x 2 ∣ x 1 x_3\bot x_2|x_1 x3⊥x2∣x1

2.4.3 Study

2.4.3.1 Mission

If the network structure is known , That is, the dependency between attributes is known , The learning process of Bayesian network is relatively simple , Just by comparing the training samples " Count ", Estimate the conditional probability table of each node .

But in real applications, we often do not know the network structure . therefore , The primary task of Bayesian network learning is to find out the most important structure according to the training data set " Appropriate " Bayesian network .

2.4.3.2 Score search

" Score search " It is a common method to solve this problem . say concretely , Let's first define a scoring function (score function) , To evaluate the fit between Bayesian network and training data , Then based on this scoring function to find the best Bayesian network .

2.4.3.3 Scoring function

- Rule one : Minimum description length criterion

Minimum description length criterion (Minimal Description Length, abbreviation MDL Rules ), The goal of learning is to find a model that can describe the training data with the shortest coding length , At this time, the encoding length includes the byte length required to describe the model itself and the byte length required to describe the data using the model ( The length of the code = The byte length required to describe the model itself + Use this model to describe the byte length required for data ).

- Application of rule one , Bayesian networks

Given training set D = { x 1 , x 2 , . . . , x m } D=\{x_1,x_2,...,x_m\} D={ x1,x2,...,xm}, Bayesian networks B = < G , θ > B=<G,\theta> B=<G,θ> stay D D D The scoring function on can be written as :

s ( B ∣ D ) = f ( θ ) ∣ B ∣ − L L ( B ∣ D ) s(B|D)=f(\theta)|B|-LL(B|D) s(B∣D)=f(θ)∣B∣−LL(B∣D)

【 among 】,

- |B| Is the number of parameters of Bayesian network ;

- f ( θ ) f(\theta) f(θ) Is to describe each parameter θ \theta θ Number of bytes required ;

- LL(B|D) It's a Bayesian network B B B The log likelihood of , L L ( B ∣ D ) = ∑ j = 1 m l o g P B ( x j ) LL(B|D)=\sum_{j=1}^mlogP_B(x_j) LL(B∣D)=∑j=1mlogPB(xj).

【 obviously 】,

- f ( θ ) ∣ B ∣ f(\theta)|B| f(θ)∣B∣: Computationally coded Bayesian networks B B B Number of bytes required ;

- L L ( B ∣ D ) LL(B|D) LL(B∣D): Calculation B B B Corresponding probability distribution P B P_B PB How many bytes are required to describe D.

【 therefore 】,

- The learning task is transformed into an optimization task .

- Look for a Bayesian network B B B Make the scoring function s ( B ∣ D ) s(B|D) s(B∣D) Minimum .

【 f ( θ ) f(\theta) f(θ)】,

- f ( θ ) = 1 f(\theta)=1 f(θ)=1, That is, each parameter uses 1 Byte description , obtain AIC Scoring function .

- f ( θ ) = 1 2 l o g m f(\theta)=\frac{1}{2}logm f(θ)=21logm, That is, each parameter uses 1 2 l o g m \frac{1}{2}logm 21logm Byte description , obtain BIC Scoring function .

- f ( θ ) = 0 f(\theta)=0 f(θ)=0, Then the scoring function degenerates to negative log likelihood , Corresponding , The learning task degenerates into maximum likelihood estimation .

2.4.3.4 G Fix , For parameters θ \theta θ Maximum likelihood estimation of

- Dual transformation

s ( B ∣ D ) = f ( θ ) ∣ B ∣ − L L ( B ∣ D ) s(B|D)=f(\theta)|B|-LL(B|D) s(B∣D)=f(θ)∣B∣−LL(B∣D), It's not hard to find out , If Bayesian network B = < G , θ > B=<G,\theta> B=<G,θ> Network structure G G G Fix , Then the scoring function s ( B ∣ D ) s(B|D) s(B∣D) The first term of is a constant , here , To minimize the s ( B ∣ D ) s(B|D) s(B∣D) Equivalent to a parameter θ \theta θ Maximum likelihood estimation of . a r g m a x L L ( B ∣ D ) arg\ max\ LL(B|D) arg max LL(B∣D), because L L ( B ∣ D ) = ∑ j = 1 m l o g P B ( x j ) LL(B|D)=\sum_{j=1}^mlogP_B(x_j) LL(B∣D)=∑j=1mlogPB(xj), P B ( x 1 , x 2 , . . . , x d ) = ∏ i = 1 d P B ( x i ∣ π i ) = ∏ i = 1 d θ x i ∣ π i P_B(x_1,x_2,...,x_d)=\prod_{i=1}^dP_B(x_i|\pi_i)=\prod_{i=1}^d\theta_{x_i|\pi_i} PB(x1,x2,...,xd)=∏i=1dPB(xi∣πi)=∏i=1dθxi∣πi

- Parameter calculation formula

This parameter θ x i ∣ π i \theta_{x_i|\pi_i} θxi∣πi Can be directly in training data D Through empirical estimation , namely

θ x i ∣ π i = P ^ D ( x i ∣ π i ) \theta_{x_i|\pi_i}=\hat{P}_D(x_i|\pi_i) θxi∣πi=P^D(xi∣πi)

- NP Difficult problem

therefore , To minimize the scoring function s ( B I D ) s(B I D) s(BID), Just search the network structure , The optimal parameters of the candidate structure can be calculated directly on the training set . Unfortunately , Searching for the optimal Bayesian network structure from all possible network structure spaces is a NP Difficult problem , Difficult to solve quickly .

- solve

The first is the greedy method , For example, starting from a certain network structure , Adjust one edge at a time ( increase 、 Delete or adjust direction ), Until the value of the scoring function no longer decreases .

The second is to reduce the search space by imposing constraints on the network structure , For example, the network structure is limited to a tree structure .

2.4.4 infer

2.4.4.1 introduction

After training, Bayesian network can be used to answer " Inquire about " (query), That is to infer the value of other attribute variables through the observed values of some attribute variables . For example, in the watermelon problem , If we observe that watermelon is green 、 Knock the candle 、 Root curl , Want to know if it is mature 、 How sweet . In this way, the process of inferring the variables to be queried through known variable observations is called " infer " (inference) , The observed values of known variables are called " evidence " (evidence).

The most ideal is to calculate the posterior probability accurately according to the joint probability distribution defined by Bayesian network , Unfortunately , In this way " To infer exactly " Has been proved to be NP Difficult . In other words , When there are many network nodes 、 When connections are dense , It is difficult to make accurate inferences , At this time, you need to use " Approximate inference ", By reducing accuracy requirements , Obtain an approximate solution in a finite time .

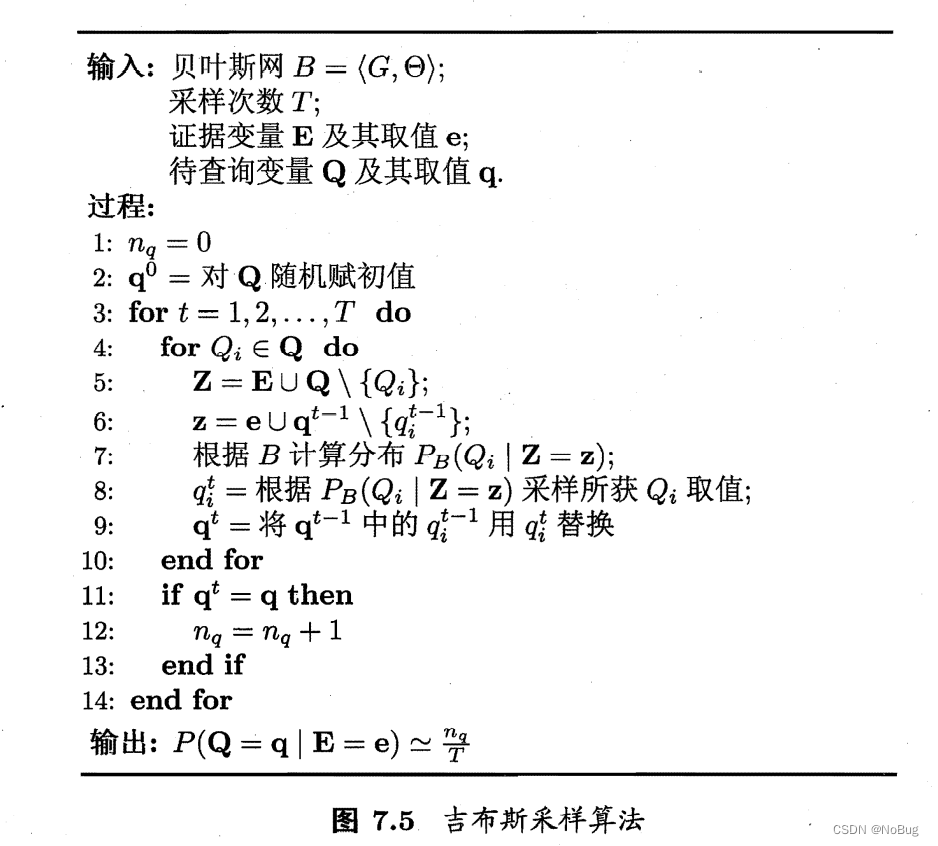

In practical applications , Gibbs sampling is often used for approximate inference of Bayesian networks (Gibbs sampling), This is a random sampling method .

2.4.4.2 Gibbs sampling

【 Knowledge cards 】

1. Gibbs sampling :Gibbs sampling

2. Gibbs sampling is a random sampling method .

3. Gibbs sampling , Used when direct sampling is difficult , Approximate sampling sequence from a multivariable probability distribution .

4. The sequence can be used to approximate the joint distribution .

5. Gibbs Sampling is an iterative algorithm .

6. Gibbs The core of sampling is Bayesian theory , Around prior knowledge and observational data , The posterior distribution can be inferred from the observed values .

7. Gibbs sampling is a kind of Markov chain Monte Carlo (MCMC) Algorithm , It can be seen as MetropolisHastings A special case of the algorithm .

8. Gibbs sampling , Each step depends only on the state of the previous step ,, This is a " Markov chain ".

9. " Markov chain " In steps T Towards infinity , Must converge ( It must be a stationary distribution ).

10. Gibbs sampling is a special case of Markov chain .

11. Markov chains usually take a long time to reach a stable distribution , So the convergence speed of Gibbs sampling algorithm is slow .

【 principle 】

The basic principle of Gibbs sampling algorithm is through random sampling , Constantly update the model and its position in each input sequence , Optimize the objective function , When certain iteration termination conditions are satisfied or the maximum number of iterations is reached, the final desired motif is obtained .

【 Variable 】

- Make Q = { Q 1 , Q 2 , . . . , Q n } Q=\{Q_1,Q_2,...,Q_n\} Q={ Q1,Q2,...,Qn} Represents the variable to be queried

- E = { E 1 , E 2 , . . . , E k } E=\{E_1,E_2,...,E_k\} E={ E1,E2,...,Ek} Is the evidence variable

- Its value is e = { e 1 , e 2 , . . . , e k } e=\{e_1,e_2,...,e_k\} e={ e1,e2,...,ek}

- The goal is to calculate a posteriori probabilities P ( Q = q ∣ E = e ) P(Q=q|E=e) P(Q=q∣E=e)

- among q = { q 1 , q 2 , . . . , q n } q=\{q_1,q_2,...,q_n\} q={ q1,q2,...,qn} Is a set of values of variables to be queried

【 Example 】

- Q = { good melon , sweet degree } Q=\{ Good melon , Sweetness \} Q={ good melon , sweet degree }

- E = { color Ze , knock The sound , root Tit } E=\{ Colour and lustre , Knock sound , roots \} E={ color Ze , knock The sound , root Tit }

- e = { green green , turbid ring , Curl up shrink } e=\{ dark green , Murmur , Curl up \} e={ green green , turbid ring , Curl up shrink }

- P ( Q = q ∣ E = e ) P(Q=q|E=e) P(Q=q∣E=e)

- q = { yes , high } q=\{ yes , high \} q={ yes , high }

- 【 Find out the probability that this is a good melon with high sweetness ?】

【 The process 】

- The Gibbs sampling algorithm first randomly generates one with evidence E = e E=e E=e Consistent samples q 0 q^0 q0 As a starting point ;

- Then, each step starts from the current sample to generate the next sample .

- The algorithm first assumes q t = q t − 1 q^t=q^{t-1} qt=qt−1;

- Then the non evidence variables are sampled one by one to change their values ;

- How to change ? According to Bayesian network B B B And other variables ( namely Z = z Z= z Z=z) Obtained by calculation ;

- after T T T The number of samples obtained from q q q Consistent samples share n q n_q nq individual , be

P ( Q = q ∣ E = e ) ≃ n q T P(Q=q|E=e)\simeq \frac{n_q}{T} P(Q=q∣E=e)≃Tnq - T When a large , By Markov chain properties , Gibbs sampling is equivalent to according to P ( Q ∣ E = e ) P(Q|E=e) P(Q∣E=e) sampling , Thus, the probability converges to P(Q=q|E=e)

【 Algorithm 】

3. Bayesian network comb

3.1 Bayesian network understanding

1. Bayesian network is a directed acyclic graph that describes the dependence between variables from the perspective of conditional probability (DAG).

2. use G=(V,E) To represent a Bayesian network , among V Said variable ,E Represents the conditional probability between variables , Also called weight .

3. Bayesian networks reveal the dependencies between variables , Missing values can be predicted .

4. Bayesian network :Bayesian Network, abbreviation BN

5. Probability graph model ( Two categories: )= Bayesian network + Markov networks

6. characteristic : Causality between variables

3.2 Data collection

- Briefly describe the difference between Bayesian network and other probability graph models

Bayesian network is just a kind of probability graph model , One of the characteristics of it and other probability graph models is , It describes the causal relationship between variables . If time concept is added to the model , Then there can be Markov chains and Gaussian processes . From space , If the random variable is continuous , Then there is a model like Gaussian Bayesian network . Hybrid model plus time series , Then there is hidden Markov model 、 Kalman filtering 、 Particle filter and other models .

- Markov chain

3.3 Bayesian network review

1. Bayesian networks , Directed acyclic graph

2. node ( How to solve parameter independence ), edge ( rely on , How to construct , Three basic dependencies ), A weight ( Calculation of parameters )

3. How to construct B Structure

4. Inquire about , Gibbs sampling --> Markov chain

边栏推荐

猜你喜欢

Unity——Mathf. Similarities and differences between atan and atan2

【贝叶斯分类3】半朴素贝叶斯分类器

论数据库的传统与未来之争之溯源溯本----AWS系列专栏

Development of NFT for digital collection platform

Good thing recommendation: mobile terminal development security tool

![[recommended collection] these 8 common missing value filling skills must be mastered](/img/ab/353f74ad73ca592a3f97ea478922d9.png)

[recommended collection] these 8 common missing value filling skills must be mastered

QT两种方法实现定时器

GEE:计算image区域内像素最大最小值

Dynamic planning 111

Muke 8. Service fault tolerance Sentinel

随机推荐

慕课8、服务容错-Sentinel

Case description: the competition score management system needs to count the competition scores obtained by previous champions and record them in the file. The system has the following requirements: -

两个文件 合并为第三个文件 。

0 basic C language (2)

Developer survey: rust/postgresql is the most popular, and PHP salary is low

Unity——Mathf. Similarities and differences between atan and atan2

GEE:计算image区域内像素最大最小值

[most detailed] the latest and complete redis interview (70)

Guomingyu: Apple's AR / MR head mounted display is the most complicated product in its history and will be released in January 2023

Minimum spanning tree, shortest path, topology sorting, critical path

515. 在每个树行中找最大值

清华大学就光刻机发声,ASML立马加紧向中国出口光刻机

find_ path、find_ Library memo

Dynamic planning 111

威胁猎人必备的六个威胁追踪工具

好物推薦:移動端開發安全工具

Idea error: process terminated

MongoDB实现创建删除数据库、创建删除表(集合)、数据增删改查

Tiktok practice ~ homepage video ~ pull-down refresh

Detailed explanation of retrospective thinking