
Flink: Spark vs. Flink

2022-06-11 12:10:00 【But don't ask about your future】


Preface

Apache Spark is a general-purpose engine for large-scale data analytics. Its in-memory computing model freed developers from heavyweight Hadoop MapReduce programs. Besides fast computation and high scalability, Spark provides a unified distributed data processing platform covering batch processing (Spark SQL), stream processing (Spark Streaming), machine learning (Spark MLlib), and graph computation (Spark GraphX). After years of rapid development, its ecosystem is very mature.

Flink, for its part, focuses on real-time processing. So if you want to learn one big data processing framework, should you choose Spark or Flink?

1. Data processing architecture

Batch processing operates on bounded data sets. It suits computations that need access to the whole data set, or a large part of it, and is generally used for offline statistics.

Stream processing operates on data streams, which are unbounded and real-time. It processes each record in turn as it passes through the system, and is generally used for real-time statistics.

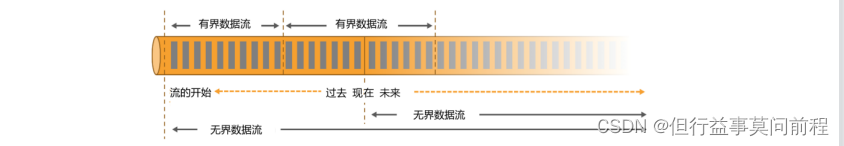

Spark is built on batch processing and supports stream computing on top of that batch foundation. In Spark's world view, offline data is one large batch, and real-time data is an unbounded series of small batches. Spark Streaming is therefore not true stream processing but micro-batching.

Flink, by contrast, treats stream processing as the fundamental operation and unifies batch processing into it. In Flink's world view, real-time data is a standard unbounded stream, and offline data is a bounded stream.

(1) Unbounded data stream

An unbounded data stream has a beginning but no end: data starts being generated and transmitted and never stops. An unbounded stream must therefore be processed continuously, that is, each record must be processed as soon as it arrives. To guarantee correct results, it must also be possible to process the records in a well-defined order, such as the order in which the events occurred.

(2) Bounded data stream

A bounded data stream has a well-defined beginning and end, so it can be processed by first ingesting all of the data. Strict ordering is not required while reading, because a bounded data set can always be sorted afterwards. Processing a bounded stream is also called batch processing.
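The difference between the two kinds of stream can be illustrated with a small plain-Python sketch. It does not use Spark or Flink; `bounded_source`, `unbounded_source`, `batch_max`, and `running_max` are hypothetical names chosen for this illustration only.

```python
import itertools
from typing import Iterable, Iterator, List


def bounded_source() -> List[int]:
    """A bounded stream: a finite data set with a known beginning and end."""
    return [5, 3, 8, 1]


def unbounded_source() -> Iterator[int]:
    """An unbounded stream: it has a beginning but never ends."""
    return itertools.count(start=1)


def batch_max(data: Iterable[int]) -> int:
    """Batch style: read everything first, then compute (sorting is possible)."""
    return sorted(data)[-1]


def running_max(stream: Iterable[int]) -> Iterator[int]:
    """Streaming style: emit an updated result as soon as each record arrives."""
    current = None
    for value in stream:
        current = value if current is None else max(current, value)
        yield current


# Bounded input: both styles work and agree on the final answer.
print(batch_max(bounded_source()))
print(list(running_max(bounded_source())))

# Unbounded input: only the incremental style is usable, and this call
# returns only because we stop reading after three records.
print(list(itertools.islice(running_max(unbounded_source()), 3)))
```

Calling `batch_max(unbounded_source())` would never return, which is why an unbounded stream has to be processed incrementally.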

In general, because Spark is based on micro-batching, it always has to wait for a batch to accumulate and then synchronize, which adds overhead, so it cannot achieve the lowest possible stream processing latency. In low-latency stream processing scenarios, Flink has a clear advantage. For batch processing of massive data, Spark can deliver higher throughput, and with its mature ecosystem and easy-to-use API, its advantages there are currently just as clear.
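The cost of waiting for a batch to fill can be made concrete with a toy model. This is only a sketch in plain Python, not a measurement of either framework: the timestamps, the 1000 ms batch interval, and the 1 ms processing cost are all made-up assumptions.

```python
from typing import List, Tuple

# (arrival_time_ms, payload); the values are invented for illustration.
EVENTS: List[Tuple[int, str]] = [(100, "a"), (450, "b"), (900, "c"), (1200, "d")]


def per_event_latencies(events: List[Tuple[int, str]],
                        processing_ms: int = 1) -> List[int]:
    """Each event is handled on arrival, so latency is just the processing cost."""
    return [processing_ms for _ in events]


def micro_batch_latencies(events: List[Tuple[int, str]],
                          batch_interval_ms: int = 1000,
                          processing_ms: int = 1) -> List[int]:
    """Each event waits until its batch interval ends before it is processed."""
    latencies = []
    for arrival, _payload in events:
        # End of the interval that this event falls into, e.g. 450 -> 1000.
        batch_end = (arrival // batch_interval_ms + 1) * batch_interval_ms
        latencies.append(batch_end - arrival + processing_ms)
    return latencies


print(per_event_latencies(EVENTS))    # [1, 1, 1, 1]
print(micro_batch_latencies(EVENTS))  # [901, 551, 101, 801]
```

In this model, the micro-batch latency is dominated by the time an event spends waiting for its interval to close, whatever the actual processing cost is.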

2. Data model and runtime architecture

The main difference between Spark and Flink in their underlying implementation is the data model:

Spark's underlying data model is the resilient distributed dataset (RDD). DStream, the low-level interface that Spark Streaming uses for micro-batching, is in fact also a sequence of RDDs, each holding a small batch of data. Spark's design is therefore based on batch data sets and is better suited to batch processing scenarios.

Flink's basic data model is the dataflow and the sequence of events. Flink is implemented almost entirely according to Google's Dataflow model, so at the level of its underlying data model, Flink is designed for streaming data and is better suited to stream processing scenarios.

Different data models naturally lead to different runtime architectures. Spark divides the DAG corresponding to a job into stages; when one stage finishes, data passes through a shuffle before the next stage begins. Flink uses a standard streaming execution model: once an event has been processed by one node, it can be sent directly to the next node for processing.
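The two execution styles can be contrasted with a toy two-operator job in plain Python. This is a sketch of the idea only, not of either engine's scheduler; `staged`, `pipelined`, and the two operators (`double`, `add_one`) are hypothetical, and no real shuffle takes place.

```python
from typing import Iterable, Iterator, List


def staged(data: List[int], log: List[str]) -> List[int]:
    """Stage by stage: finish operator 1 for all records, then start operator 2."""
    doubled = []
    for x in data:
        log.append(f"double({x})")
        doubled.append(x * 2)
    # Stage boundary: all intermediate results exist before the next stage runs.
    result = []
    for y in doubled:
        log.append(f"add_one({y})")
        result.append(y + 1)
    return result


def pipelined(data: Iterable[int], log: List[str]) -> Iterator[int]:
    """Record by record: each record passes through both operators immediately."""
    for x in data:
        log.append(f"double({x})")
        y = x * 2
        log.append(f"add_one({y})")
        yield y + 1


staged_log: List[str] = []
pipelined_log: List[str] = []
print(staged([1, 2], staged_log), staged_log)
print(list(pipelined([1, 2], pipelined_log)), pipelined_log)
```

Both versions return `[3, 5]`; only the order of the log entries differs. In the staged version every `double` call precedes every `add_one` call, while in the pipelined version the first result is ready before the second record is even read.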

3. Spark or Flink?

If you need to choose between Spark and Flink, the two mainstream frameworks, for real-time stream processing, Flink is the recommended choice, mainly for these reasons:

Flink's latency is on the order of milliseconds, whereas Spark Streaming's latency is on the order of seconds

Flink provides strict exactly-once semantic guarantees

Flink's window API is more flexible and more expressive

Flink provides event-time semantics, so late-arriving data can be handled correctly

Flink provides a more flexible state programming API
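To see why event-time semantics matter for late data, consider a toy tumbling-window sum in plain Python. This is a sketch under stated assumptions, not Flink's window API: the events, the 5-second window size, and the `window_sums` helper are invented for illustration, and the sketch ignores the question of when a window may be closed, which Flink handles with watermarks.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (event_time_s, arrival_time_s, value); the third event occurred at t=4
# but only arrived at t=8, so it is late.
EVENTS: List[Tuple[int, int, int]] = [(1, 1, 10), (7, 7, 20), (4, 8, 30), (12, 12, 40)]
WINDOW_S = 5


def window_sums(events: List[Tuple[int, int, int]], time_index: int) -> Dict[int, int]:
    """Sum values per tumbling window, keyed by the window's start time.

    time_index=0 groups by event time, time_index=1 groups by arrival time.
    """
    sums: Dict[int, int] = defaultdict(int)
    for event in events:
        window_start = (event[time_index] // WINDOW_S) * WINDOW_S
        sums[window_start] += event[2]
    return dict(sums)


print(window_sums(EVENTS, 0))  # grouped by event time:   {0: 40, 5: 20, 10: 40}
print(window_sums(EVENTS, 1))  # grouped by arrival time: {0: 10, 5: 50, 10: 40}
```

Grouping by event time puts the late record in the window where it actually occurred (`[0, 5)`), whereas grouping by arrival time silently counts it in the wrong window.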

边栏推荐

- flink Spark 和 Flink对比

- 一般运维架构图

- C reads TXT file to generate word document

- What is a Gerber file? Introduction to PCB Gerber file

- PS does not display text cursor, text box, and does not highlight after selection

- POJ 3278 catch the cow (width first search, queue implementation)

- Software project management 7.1 Basic concept of project schedule

- Secret derrière le seau splunk

- JS 加法乘法错误解决 number-precision

- C # convert ofd to PDF

猜你喜欢

Use of RadioButton in QT

Notes on topic brushing (XIV) -- binary tree: sequence traversal and DFS, BFS

CentOS installation mysql5.7

纯数据业务的机器打电话进来时回落到了2G/3G

C reads TXT file to generate word document

Notes on brushing questions (13) -- binary tree: traversal of the first, middle and last order (review)

Linux changes the MySQL password after forgetting it

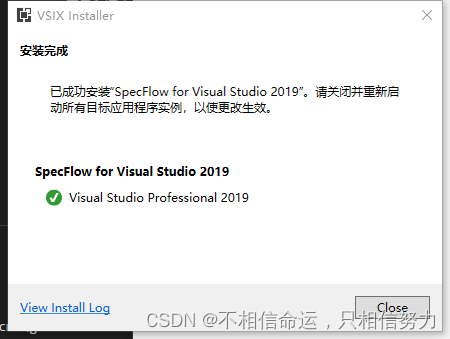

Specflow环境搭建

Linux忘记MySQL密码后修改密码

Splunk 最佳实践-减轻captain 负担

随机推荐

Is the SSL certificate reliable in ensuring the information security of the website?

Acwing50+Acwing51周赛+Acwing3493.最大的和(未完结)

Deep learning and CV tutorial (14) | image segmentation (FCN, segnet, u-net, pspnet, deeplab, refinenet)

Linux忘记MySQL密码后修改密码

Splunk 健康检查之关闭THP

CVPR 2022 | 文本引导的实体级别图像操作ManiTrans

深度学习与CV教程(14) | 图像分割 (FCN,SegNet,U-Net,PSPNet,DeepLab,RefineNet)

Splunk 手工同步search head

Use compiler option '--downleveliteration' to allow iteration of iterations

安全工程师发现PS主机重大漏洞 用光盘能在系统中执行任意代码

Where is it safer to open an account for soda ash futures? How much capital is needed for a transaction?

gocron 定时任务管理平台

什么是Gerber文件?PCB电路板Gerber文件简介

解决Splunk kvstore “starting“ 问题

Sulley fuzzer learning

typescript 编译选项和配置文件

PS does not display text cursor, text box, and does not highlight after selection

Android 11+ 配置SqlServer2014+

中国网络安全年会周鸿祎发言:360安全大脑构筑数据安全体系

Use compiler option ‘--downlevelIteration‘ to allow iterating of iterators 报错解决