
Gaussian distribution and its maximum likelihood estimation

2022-07-31 04:28:00 【Adenialzz】


Gaussian Distribution

One-Dimensional Gaussian Distribution

The probability density function of a one-dimensional Gaussian distribution is:

$$N(x\mid\mu,\sigma^2)=\frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$$
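A minimal sketch of this density in Python. `gaussian_pdf` is a hypothetical helper name, and it takes the variance `sigma2` rather than the standard deviation so that it mirrors the $(\mu,\sigma^2)$ parameterization used throughout this post.

```python
import math

def gaussian_pdf(x: float, mu: float, sigma2: float) -> float:
    """Density of the 1-D Gaussian N(mu, sigma2) at x, term by term from the formula."""
    sigma = math.sqrt(sigma2)
    coeff = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)  # 1 / (sqrt(2*pi) * sigma)
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma2))

print(gaussian_pdf(0.0, 0.0, 1.0))  # standard normal at its mean, ≈ 0.3989
```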

Multivariate Gaussian Distribution

The probability density function of a $D$-dimensional Gaussian distribution is:

$$N(x\mid\mu,\Sigma)=\frac{1}{(2\pi)^{\frac{D}{2}}|\Sigma|^{\frac{1}{2}}}\exp\left(-\frac{1}{2}(x-\mu)^T\Sigma^{-1}(x-\mu)\right)$$
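A NumPy sketch of the $D$-dimensional density, assuming $\Sigma$ is symmetric positive definite. `mvn_pdf` is a hypothetical name; the code solves the linear system $\Sigma y = x-\mu$ instead of forming $\Sigma^{-1}$ explicitly, which is a common numerical choice rather than something the formula requires.

```python
import numpy as np

def mvn_pdf(x: np.ndarray, mu: np.ndarray, cov: np.ndarray) -> float:
    """Density of N(mu, cov) at x, where x and mu have shape (D,) and cov has shape (D, D)."""
    d = mu.shape[0]
    diff = x - mu
    # (x - mu)^T Sigma^{-1} (x - mu), computed via a linear solve
    maha = diff @ np.linalg.solve(cov, diff)
    # (2*pi)^{D/2} * |Sigma|^{1/2}
    norm = (2.0 * np.pi) ** (d / 2.0) * np.sqrt(np.linalg.det(cov))
    return float(np.exp(-0.5 * maha) / norm)
```

For $D=1$ and $\Sigma=[\sigma^2]$ this reduces to the one-dimensional density, and for a diagonal $\Sigma$ it factorizes into a product of one-dimensional densities.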

Maximum Likelihood Estimation

Bayes' Formula

Bayes' formula is:

$$P(\theta\mid X)=\frac{P(X\mid\theta)P(\theta)}{P(X)}$$

Here $P(X\mid\theta)$ is the likelihood, $P(\theta)$ is the prior, and $P(\theta\mid X)$ is the posterior. Maximum likelihood estimation chooses the value of the parameter $\theta$ that maximizes the likelihood $P(X\mid\theta)$. For details, see: Prior, Posterior, and Likelihood.
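To make the three terms concrete, here is a sketch on a discretized parameter space. It rests on assumptions that are not in the original: $\theta$ is the mean $\mu$ alone, $\sigma$ is known and equal to 1, the data are synthetic, and the prior is uniform over a grid. Under a uniform prior the posterior is proportional to the likelihood, so both peak at the same grid point.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(loc=2.0, scale=1.0, size=50)           # synthetic data, sigma assumed known = 1

mu_grid = np.linspace(-5.0, 5.0, 2001)                # candidate values of theta (= mu)
prior = np.full(mu_grid.shape, 1.0 / mu_grid.size)    # uniform prior P(theta)

# log P(X | theta) at every grid point; theta-independent constants are dropped
log_lik = -0.5 * ((x[None, :] - mu_grid[:, None]) ** 2).sum(axis=1)

# Bayes' formula in log space; subtracting the max before exp avoids underflow
log_post_unnorm = log_lik + np.log(prior)
post = np.exp(log_post_unnorm - log_post_unnorm.max())
post /= post.sum()                                    # plays the role of dividing by P(X)

mle_on_grid = mu_grid[np.argmax(log_lik)]             # maximizer of the likelihood
posterior_mode = mu_grid[np.argmax(post)]             # maximizer of the posterior
print(mle_on_grid, posterior_mode, x.mean())
```

With a non-uniform prior the two maximizers would generally differ; only the likelihood term is what maximum likelihood estimation optimizes.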

Maximum Likelihood Estimation for the Gaussian Distribution

Suppose we have $N$ observations $X=(x_1,x_2,\dots,x_N)$, drawn independently from the same Gaussian distribution. Each sample point is $D$-dimensional, so the data form an $N\times D$ matrix. The parameters we want to estimate are the mean $\mu$ and the covariance matrix $\Sigma$ of the multivariate Gaussian distribution.
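The post derives only the one-dimensional case. For reference, the standard multivariate result (not derived here) is $\hat\mu=\frac{1}{N}\sum_i x_i$ and $\hat\Sigma=\frac{1}{N}\sum_i (x_i-\hat\mu)(x_i-\hat\mu)^T$. The sketch below computes both from a synthetic $N\times D$ matrix; the true parameters and the seed are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
true_mu = np.array([1.0, -2.0])
true_cov = np.array([[2.0, 0.6], [0.6, 1.0]])
X = rng.multivariate_normal(true_mu, true_cov, size=5000)  # N x D data matrix

N = X.shape[0]
mu_hat = X.mean(axis=0)                 # (1/N) sum_i x_i, shape (D,)
centered = X - mu_hat                   # N x D, each row is x_i - mu_hat
sigma_hat = centered.T @ centered / N   # (1/N) sum_i (x_i - mu_hat)(x_i - mu_hat)^T, D x D

print(mu_hat)
print(sigma_hat)
```

`np.cov(X, rowvar=False, bias=True)` gives the same $1/N$ covariance; without `bias=True` NumPy divides by $N-1$ instead.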

Here we take the one-dimensional case as an example for the derivation: each sample point $x_i$ is a scalar, and we want to estimate the mean $\mu$ and the variance $\sigma^2$ of a one-dimensional Gaussian distribution, i.e. $\theta=(\mu,\sigma^2)$.

Below we use maximum likelihood estimation to estimate these two parameters:

$$\hat{\theta}_{MLE}=\arg\max_\theta\mathcal{L}(\theta)$$

For computational convenience, we usually maximize the log-likelihood, which gives:

$$
\begin{aligned}
\mathcal{L}(\theta)&=\log P(X\mid\theta)\\
&=\log\prod_{i=1}^NP(x_i\mid\theta)\\
&=\sum_{i=1}^N\log P(x_i\mid\theta)\\
&=\sum_{i=1}^N\log\left[\frac{1}{\sqrt{2\pi}\sigma}\exp\left(-\frac{(x_i-\mu)^2}{2\sigma^2}\right)\right]\\
&=\sum_{i=1}^N\left[\log\frac{1}{\sqrt{2\pi}}+\log\frac{1}{\sigma}-\frac{(x_i-\mu)^2}{2\sigma^2}\right]
\end{aligned}
$$

Dropping the constant term $\log\frac{1}{\sqrt{2\pi}}$, which does not depend on $\theta$, gives the final objective:

$$\hat{\theta}_{MLE}=\arg\max_\theta\sum_{i=1}^N\left[\log\frac{1}{\sigma}-\frac{(x_i-\mu)^2}{2\sigma^2}\right]$$
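A sketch of the full log-likelihood $\mathcal{L}(\theta)$, constants included, as a NumPy function. `gaussian_log_likelihood` is a hypothetical name. Each line of the return expression corresponds to one of the three terms in the last line of the derivation, summed over $i$.

```python
import numpy as np

def gaussian_log_likelihood(x: np.ndarray, mu: float, sigma2: float) -> float:
    """Sum over i of [log(1/sqrt(2*pi)) + log(1/sigma) - (x_i - mu)^2 / (2*sigma^2)]."""
    n = x.size
    return float(
        -0.5 * n * np.log(2.0 * np.pi)            # sum of log(1/sqrt(2*pi))
        - 0.5 * n * np.log(sigma2)                # sum of log(1/sigma) = -0.5 * log(sigma^2)
        - np.sum((x - mu) ** 2) / (2.0 * sigma2)  # sum of the quadratic term
    )
```

Working with the sum of logs rather than the product of densities is also the numerically safer choice: for large $N$, the raw product of many densities below 1 can underflow to `0.0` in floating point, while the sum of their logs stays finite.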

Next, we take the partial derivatives with respect to $\mu$ and $\sigma^2$, set them to zero, and solve for the estimates.

For $\mu$:

$$
\begin{aligned}
\hat{\mu}_{MLE}&=\arg\max_{\mu}\sum_{i=1}^N\left[-\frac{(x_i-\mu)^2}{2\sigma^2}\right]\\
&=\arg\min_\mu\sum_{i=1}^N(x_i-\mu)^2
\end{aligned}
$$

Taking the partial derivative and setting it to zero:

$$\frac{\partial\sum_{i=1}^N(x_i-\mu)^2}{\partial\mu}=\sum_{i=1}^N-2(x_i-\mu)=0$$

we obtain:

$$\hat{\mu}_{MLE}=\frac{1}{N}\sum_{i=1}^Nx_i$$
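A quick numerical check of this result on synthetic data (the distribution parameters and the seed are arbitrary assumptions): at the sample mean the derivative $\sum_i -2(x_i-\mu)$ is zero up to rounding, and moving $\mu$ in either direction increases the sum of squares. `sum_sq_err` is a hypothetical helper name.

```python
import numpy as np

def sum_sq_err(x: np.ndarray, mu: float) -> float:
    """The objective sum_i (x_i - mu)^2 that mu_hat minimizes."""
    return float(np.sum((x - mu) ** 2))

rng = np.random.default_rng(1)
x = rng.normal(loc=3.0, scale=2.0, size=200)   # synthetic data

mu_hat = x.mean()                              # closed form: (1/N) sum_i x_i
grad_at_mu_hat = np.sum(-2.0 * (x - mu_hat))   # derivative of the objective at mu_hat

print(grad_at_mu_hat)                                          # zero up to rounding
print(sum_sq_err(x, mu_hat) < sum_sq_err(x, mu_hat + 0.01))    # True
```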

For $\sigma^2$:

$$\hat{\sigma^2}=\arg\max_{\sigma^2}\sum_{i=1}^N\left[\log\frac{1}{\sigma}-\frac{(x_i-\mu)^2}{2\sigma^2}\right]=\arg\max_{\sigma^2}\mathcal{L}_{\sigma^2}$$

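Taking the partial derivative with respect to $\sigma$ and setting it to zero (for $\sigma>0$, $\frac{\partial\mathcal{L}_{\sigma^2}}{\partial\sigma}=2\sigma\frac{\partial\mathcal{L}_{\sigma^2}}{\partial\sigma^2}$, so both derivatives vanish at the same point):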

$$
\begin{aligned}
\frac{\partial\mathcal{L}_{\sigma^2}}{\partial\sigma}=\sum_{i=1}^N\left[-\frac{1}{\sigma}+\frac{(x_i-\mu)^2}{\sigma^3}\right]&=0\\
\sum_{i=1}^N\left[-\sigma^2+(x_i-\mu)^2\right]&=0\\
N\sigma^2&=\sum_{i=1}^N(x_i-\mu)^2
\end{aligned}
$$

where the second line multiplies the first by $\sigma^3$.

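Solving for $\sigma^2$ and substituting $\hat{\mu}_{MLE}$ for $\mu$ (both partial derivatives vanish at the joint maximum), we obtain: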

$$\hat{\sigma^2}_{MLE}=\frac{1}{N}\sum_{i=1}^N(x_i-\hat{\mu}_{MLE})^2$$
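A sketch that checks the $\sigma$-derivative $\sum_i\left[-\frac{1}{\sigma}+\frac{(x_i-\mu)^2}{\sigma^3}\right]$ against a central finite difference, then confirms that it vanishes at $\sigma=\sqrt{\hat{\sigma^2}_{MLE}}$. The data, seed, test point `sigma = 0.7`, and step `h` are illustrative assumptions; `objective` and `d_objective_d_sigma` are hypothetical names.

```python
import numpy as np

def objective(x: np.ndarray, mu: float, sigma: float) -> float:
    """L_{sigma^2} as a function of sigma: sum_i [log(1/sigma) - (x_i - mu)^2 / (2 sigma^2)]."""
    return float(np.sum(-np.log(sigma) - (x - mu) ** 2 / (2.0 * sigma ** 2)))

def d_objective_d_sigma(x: np.ndarray, mu: float, sigma: float) -> float:
    """Analytic derivative: sum_i [-1/sigma + (x_i - mu)^2 / sigma^3]."""
    return float(np.sum(-1.0 / sigma + (x - mu) ** 2 / sigma ** 3))

rng = np.random.default_rng(2)
x = rng.normal(loc=-1.0, scale=0.5, size=1000)  # synthetic data, true sigma^2 = 0.25
mu_hat = x.mean()

# Central finite difference at an arbitrary sigma, as an independent check of the formula
sigma, h = 0.7, 1e-6
numeric = (objective(x, mu_hat, sigma + h) - objective(x, mu_hat, sigma - h)) / (2.0 * h)
analytic = d_objective_d_sigma(x, mu_hat, sigma)

# Closed-form MLE (1/N) sum_i (x_i - mu_hat)^2; the derivative vanishes at its square root
sigma2_hat = np.mean((x - mu_hat) ** 2)
grad_at_hat = d_objective_d_sigma(x, mu_hat, np.sqrt(sigma2_hat))

print(numeric, analytic)      # agree to several digits
print(grad_at_hat)            # zero up to rounding
print(sigma2_hat, np.var(x))  # np.var defaults to ddof=0, i.e. the same 1/N estimator
```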

Biased and Unbiased Estimates

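A biased estimate is one that differs systematically from the true value of the parameter being estimated: its expected value is not the true value of the parameter.

In statistics, the bias (or bias function) of an estimator is the difference between the estimator's expected value and the true value of the parameter being estimated, $\mathrm{Bias}(\hat\theta)=E[\hat\theta]-\theta$. An estimator or decision rule with zero bias is called unbiased; otherwise the estimator is biased. In statistics, "bias" is an objective property of an estimator.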

We now compute the expectations of $\hat{\mu}_{MLE}$ and $\hat{\sigma^2}_{MLE}$ to check whether each estimate is unbiased.

For $\hat{\mu}_{MLE}$:

$$E[\hat{\mu}_{MLE}]=E\left[\frac{1}{N}\sum_{i=1}^Nx_i\right]=\frac{1}{N}\sum_{i=1}^NEx_i=\mu$$

As we can see, the expectation of $\hat{\mu}_{MLE}$ equals the true value $\mu$, so it is an unbiased estimate.

For $\hat{\sigma^2}_{MLE}$, first expand it using $\frac{1}{N}\sum_{i=1}^Nx_i=\hat{\mu}_{MLE}$:

$$
\begin{aligned}
\hat{\sigma^2}_{MLE}&=\frac{1}{N}\sum_{i=1}^N(x_i-\hat{\mu}_{MLE})^2\\
&=\frac{1}{N}\sum_{i=1}^N(x_i^2-2x_i\hat{\mu}_{MLE}+\hat{\mu}_{MLE}^2)\\
&=\frac{1}{N}\sum_{i=1}^Nx_i^2-2\hat{\mu}_{MLE}\cdot\frac{1}{N}\sum_{i=1}^Nx_i+\hat{\mu}_{MLE}^2\\
&=\frac{1}{N}\sum_{i=1}^Nx_i^2-2\hat{\mu}_{MLE}^2+\hat{\mu}_{MLE}^2\\
&=\frac{1}{N}\sum_{i=1}^N(x_i^2-\hat{\mu}_{MLE}^2)
\end{aligned}
$$
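The identity $\frac{1}{N}\sum_i(x_i-\hat\mu)^2=\frac{1}{N}\sum_i x_i^2-\hat\mu^2$ can be spot-checked numerically; the synthetic data and seed below are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.normal(loc=5.0, scale=3.0, size=50)  # synthetic data
mu_hat = x.mean()

lhs = np.mean((x - mu_hat) ** 2)     # (1/N) sum_i (x_i - mu_hat)^2
rhs = np.mean(x ** 2) - mu_hat ** 2  # (1/N) sum_i x_i^2 - mu_hat^2
print(lhs, rhs)
```

The second form is convenient for the algebra that follows, but in floating point it subtracts two large, nearly equal numbers when $|\mu|$ is large relative to $\sigma$, so the first (centered) form is usually preferred for actual computation.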

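Taking the expectation (note that $\mu$ is a constant, $Ex_i=\mu$, and $E\hat{\mu}_{MLE}=\mu$ as shown above):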

$$
\begin{aligned}
E[\hat{\sigma^2}_{MLE}]&=E\left[\frac{1}{N}\sum_{i=1}^N(x_i^2-\hat{\mu}_{MLE}^2)\right]\\
&=E\left[\frac{1}{N}\sum_{i=1}^N\left((x_i^2-\mu^2)-(\hat{\mu}_{MLE}^2-\mu^2)\right)\right]\\
&=E\left[\frac{1}{N}\sum_{i=1}^N(x_i^2-\mu^2)\right]-E\left[\frac{1}{N}\sum_{i=1}^N(\hat{\mu}_{MLE}^2-\mu^2)\right]\\
&=\frac{1}{N}\sum_{i=1}^N\left[E(x_i^2)-\mu^2\right]-\frac{1}{N}\sum_{i=1}^N\left[E(\hat{\mu}_{MLE}^2)-\mu^2\right]\\
&=\frac{1}{N}\sum_{i=1}^N\left[E(x_i^2)-(Ex_i)^2\right]-\frac{1}{N}\sum_{i=1}^N\left[E(\hat{\mu}_{MLE}^2)-(E\hat{\mu}_{MLE})^2\right]\\
&=\frac{1}{N}\sum_{i=1}^NVar(x_i)-\frac{1}{N}\sum_{i=1}^NVar(\hat{\mu}_{MLE})\\
&=\frac{1}{N}\sum_{i=1}^N\sigma^2-\frac{1}{N}\sum_{i=1}^N\frac{\sigma^2}{N}\\
&=\frac{N-1}{N}\sigma^2
\end{aligned}
$$

Here $Var(\hat\mu_{MLE})=Var\left(\frac{1}{N}\sum_{i=1}^Nx_i\right)=\frac{1}{N^2}\sum_{i=1}^NVar(x_i)=\frac{\sigma^2}{N}$, where the second equality uses the independence of the $x_i$.

Therefore, the expectation of $\hat{\sigma^2}_{MLE}$ is not equal to the true value $\sigma^2$: the MLE underestimates the variance by a factor of $\frac{N-1}{N}$. An unbiased estimate is $\frac{1}{N-1}\sum_{i=1}^N(x_i-\hat\mu_{MLE})^2$.
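A Monte Carlo sketch of these results, under illustrative settings chosen here ($\mu=0$, $\sigma^2=4$, $N=5$, 200,000 repetitions). Averaging over many independent samples approximates the expectations: $\hat\mu_{MLE}$ centers on $\mu$ with variance near $\sigma^2/N$, the $1/N$ estimator centers near $\frac{N-1}{N}\sigma^2=3.2$, and the $1/(N-1)$ estimator centers near $\sigma^2$. NumPy selects between the two divisors through the `ddof` argument of `var`.

```python
import numpy as np

rng = np.random.default_rng(5)
mu, sigma2, N, trials = 0.0, 4.0, 5, 200_000  # illustrative settings

# Each row is one independent sample of size N drawn from N(mu, sigma2)
samples = rng.normal(loc=mu, scale=np.sqrt(sigma2), size=(trials, N))

mu_hats = samples.mean(axis=1)                 # mu_hat_MLE for every trial
sigma2_mle = samples.var(axis=1, ddof=0)       # divides by N (the MLE)
sigma2_unbiased = samples.var(axis=1, ddof=1)  # divides by N - 1

print(mu_hats.mean())                           # close to mu: unbiased
print(mu_hats.var(), sigma2 / N)                # Var(mu_hat) close to sigma^2 / N
print(sigma2_mle.mean(), (N - 1) / N * sigma2)  # close to (N-1)/N * sigma^2: biased low
print(sigma2_unbiased.mean(), sigma2)           # close to sigma^2
```

A small $N$ is used on purpose: the factor $\frac{N-1}{N}$ approaches 1 as $N$ grows, so the bias is easiest to see with few observations per sample.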

Ref

边栏推荐

- ERROR 1819 (HY000) Your password does not satisfy the current policy requirements

- "DeepJIT: An End-To-End Deep Learning Framework for Just-In-Time Defect Prediction" paper notes

- Know the showTimePicker method of the basic components of Flutter

- type_traits元编程库学习

- 聚变云原生,赋能新里程 | 2022开放原子全球开源峰会云原生分论坛圆满召开

- 组件传值 provide/inject

- MySQL数据库安装配置保姆级教程(以8.0.29为例)有手就行

- Safety 20220709

- [C language] General method of base conversion

- open failed: EACCES (Permission denied)

猜你喜欢

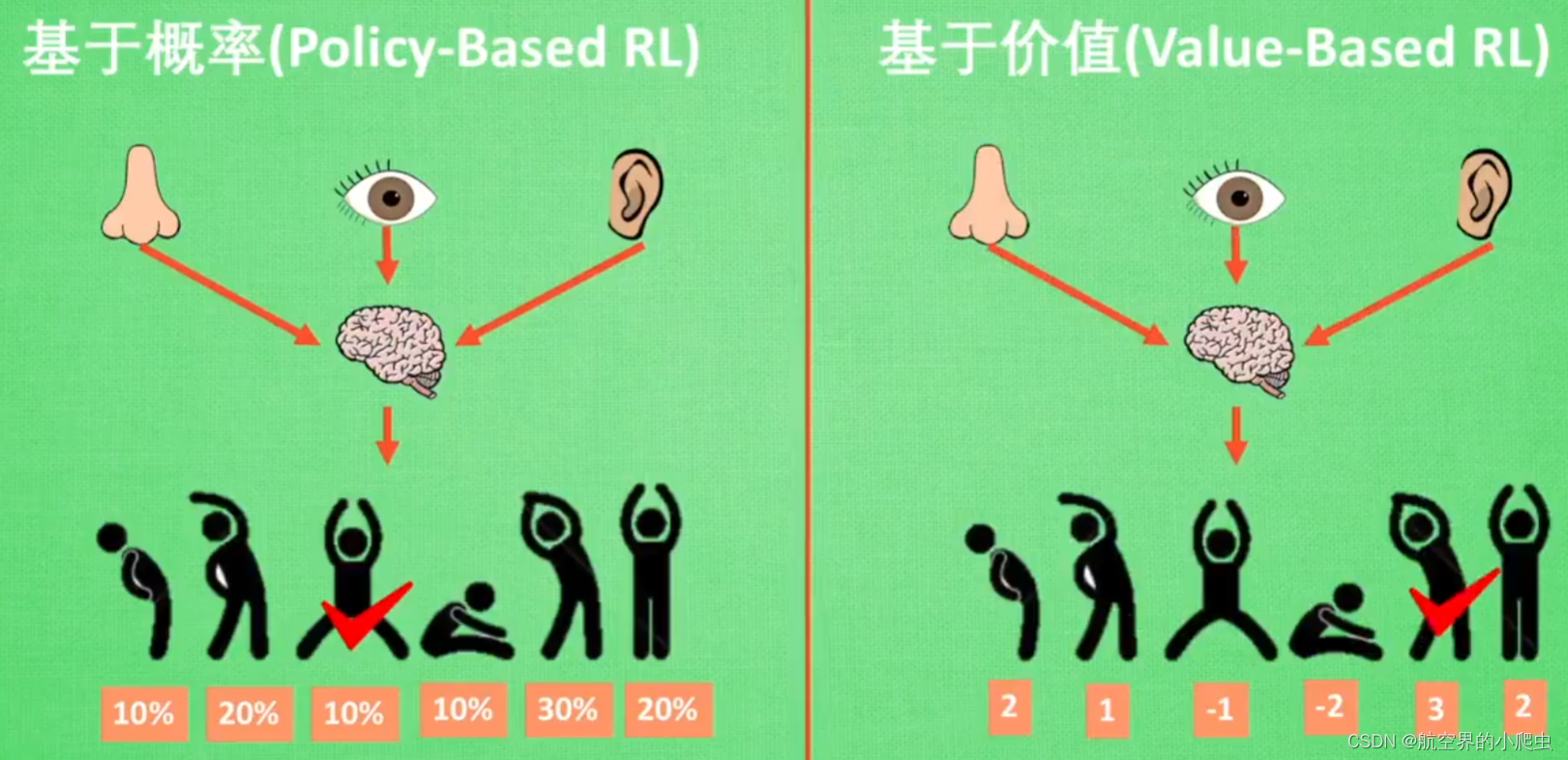

Reinforcement learning: from entry to pit to shit

Win10 CUDA CUDNN 安装配置(torch paddlepaddle)

IDEA常用快捷键与插件

C语言表白代码?

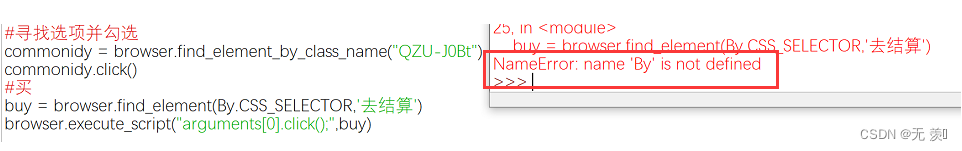

已解决(最新版selenium框架元素定位报错)NameError: name ‘By‘ is not defined

聚变云原生,赋能新里程 | 2022开放原子全球开源峰会云原生分论坛圆满召开

专访 | 阿里巴巴首席技术官程立:云+开源共同形成数字世界的可信基础

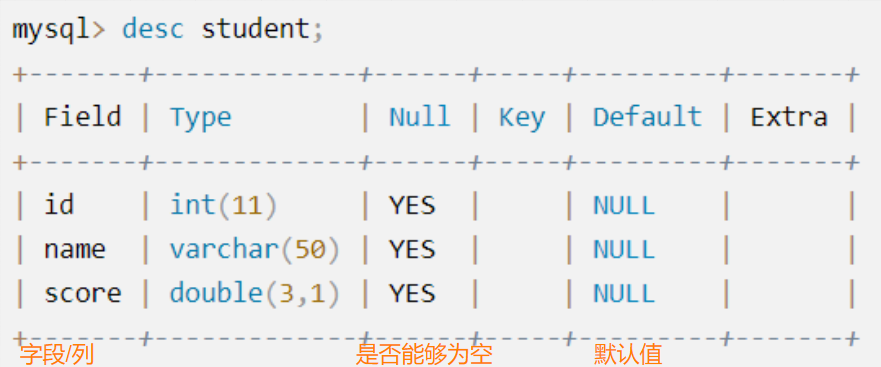

MySQL database must add, delete, search and modify operations (CRUD)

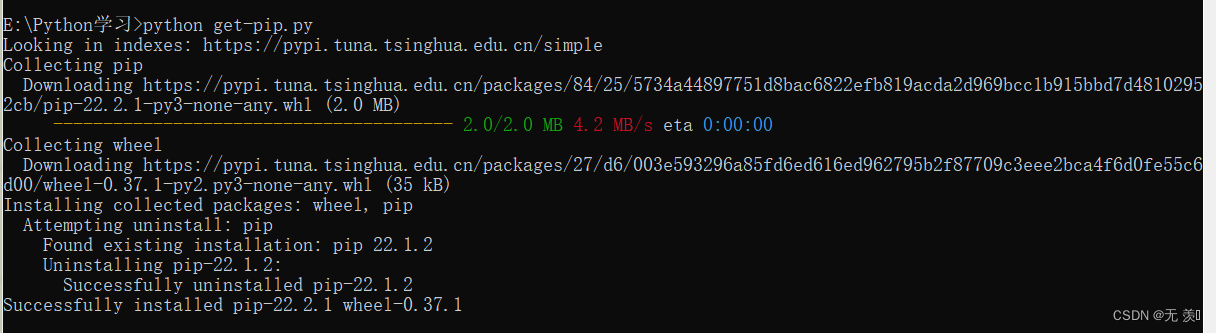

已解决:不小心卸载pip后(手动安装pip的两种方式)

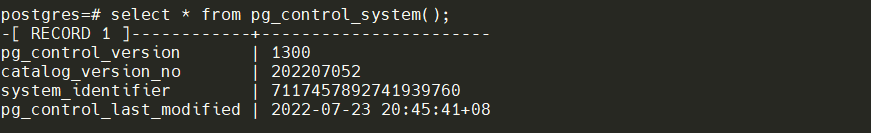

Postgresql 15 source code analysis (5) - pg_control

随机推荐

[C language] General method for finding the sum of the greatest common factor and the least common multiple of two integers m and n, the classical solution

马斯克对话“虚拟版”马斯克,脑机交互技术离我们有多远

MySQL fuzzy query can use INSTR instead of LIKE

mysql数据库安装(详细)

微信小程序使用云函数更新和添加云数据库嵌套数组元素

$attrs/$listeners

高斯分布及其极大似然估计

Safety 20220722

The BP neural network

Component pass value provide/inject

(Line segment tree) Summary of common problems of basic line segment tree

三子棋的代码实现

微软 AI 量化投资平台 Qlib 体验

ClickHouse: Setting up remote connections

pom文件成橘红色未加载的解决方案

重磅 | 基金会为白金、黄金、白银捐赠人授牌

errno error code and meaning (Chinese)

C language from entry to such as soil, the data store

Safety 20220712

Daily practice of LeetCode - palindrome structure of OR36 linked list