
Handwritten digit recognition in R

2022-07-24 07:22:00 【huiyiiiiii】

Contents

1. Selecting the training set
2. Model construction
3. Model training
4. Results
5. Code
6. Data sources

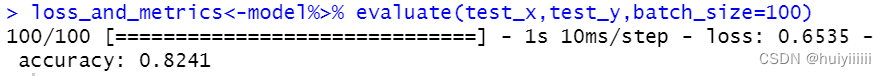

Noise variance 0.1: Accuracy on the test set 82.41%

Noise variance 0.25: Accuracy on the test set 75.91%.

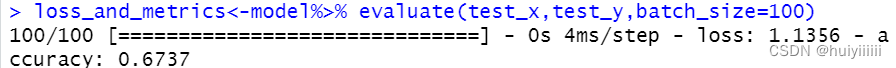

Noise variance 0.5: Accuracy on the test set 67.37%.

1. Selecting the training set

The original training file (the MNIST training set) contains 60,000 images. To make training harder, only 1,000 of them are used to train the model and the rest are discarded. There are 10 labels, the digits 0 to 9. To keep the labels balanced, so that no digit ends up with too few training examples and is learned poorly, the same number of images is drawn for every label: 100 images per digit. Concretely, the images are split into 10 groups by label, 100 images are drawn at random from each group, and the ten draws are combined to form the training set.

To train on the full training set instead, skip this step.
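
The per-label selection described above can be sketched in base R with `split()`. This is a minimal illustration on synthetic labels: the `labels` vector and the helper name `balanced_sample` are hypothetical and not part of the article's code, and the helper returns row indices rather than subsetting the data.

```r
## Hypothetical helper: draw `per_class` row indices at random from each label
balanced_sample <- function(labels, per_class) {
  groups <- split(seq_along(labels), labels)   # row indices grouped by label
  idx <- lapply(groups, function(rows) rows[sample.int(length(rows), per_class)])
  unlist(idx, use.names = FALSE)
}

set.seed(1)
labels <- sample(0:9, 60000, replace = TRUE)   # synthetic stand-in for the 60,000 labels
idx <- balanced_sample(labels, per_class = 100)
table(labels[idx])                             # 100 of each digit
```

Indexing through `sample.int()` avoids the `sample(x, ...)` pitfall where a length-one `x` is treated as `1:x`.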

2. Model construction

The model uses keras's Sequential model, a linear stack of network layers; in R it is created with keras_model_sequential() and the layers are added to it one after another. The network has an input layer of 784 nodes (one per pixel of the 28 x 28 image), one fully connected hidden layer of 784 nodes with dropout (rate 0.7), and an output layer of 10 nodes. The activation functions are relu for the hidden layer and exponential for the output layer, the loss function is categorical_crossentropy, the optimizer is Nadam, and the evaluation metric is accuracy.
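
The exponential output works with categorical_crossentropy because, in the TensorFlow Keras backend, that loss divides the predictions by their sum when `from_logits` is false, so exp followed by this normalization equals softmax. The base-R sketch below checks this for one random input using the article's layer sizes; the weights are random placeholders, not trained values, and dropout is left out, as it is at prediction time.

```r
set.seed(42)
relu    <- function(z) pmax(z, 0)
softmax <- function(z) { e <- exp(z - max(z)); e / sum(e) }

x  <- runif(784)                                    # one flattened 28 x 28 image in [0, 1]
W1 <- matrix(rnorm(784 * 784, sd = 0.01), 784, 784); b1 <- rep(0, 784)
W2 <- matrix(rnorm(784 * 10,  sd = 0.01), 784, 10);  b2 <- rep(0, 10)

h      <- relu(drop(x %*% W1) + b1)                 # hidden layer: dense(784) + relu
logits <- drop(h %*% W2) + b2                       # output layer: dense(10)
out    <- exp(logits)                               # 'exponential' activation
p      <- out / sum(out)                            # normalization applied inside the loss

all.equal(p, softmax(logits))                       # TRUE
```

Softmax is the more common choice because it subtracts the maximum before exponentiating, which avoids overflow for large logits; the exponential layer has no such safeguard.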

3. Model training

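Each update of the network uses a mini-batch of 100 samples taken from the 1,000 training samples: keras shuffles the training set at the start of every epoch and splits it into 10 batches, so each epoch consists of 10 iterations. Training runs for 100 epochs, i.e. 1,000 updates in total.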
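
The schedule above can be made concrete with a short base-R sketch of what `fit(..., epochs = 100, batch_size = 100)` does with its default `shuffle = TRUE`: each epoch permutes the sample indices and cuts them into consecutive batches. The gradient step is a hypothetical placeholder; the loop only counts updates.

```r
set.seed(7)
n_samples <- 1000; batch_size <- 100; epochs <- 100
n_updates <- 0

for (epoch in seq_len(epochs)) {
  perm    <- sample.int(n_samples)                          # reshuffle every epoch
  batches <- split(perm, ceiling(seq_along(perm) / batch_size))
  stopifnot(length(batches) == n_samples / batch_size)      # 10 iterations per epoch
  for (b in batches) {
    # hypothetical gradient step on the 100 samples in b, e.g. update(model, x[b, ], y[b, ])
    n_updates <- n_updates + 1
  }
}
n_updates                                                   # 1000 updates in total
```

Within one epoch every sample is used exactly once, which is what distinguishes this from drawing each batch independently with replacement.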

4. Results

Model training results (accuracy on the test set):

- No noise: 91.57%
- Noise variance 0.1: 82.41%
- Noise variance 0.25: 75.91%
- Noise variance 0.5: 67.37%
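
The noise levels refer to zero-mean Gaussian white noise added independently to every pixel of every image. In the article's add_noise() the standard deviation is sqrt(s)/255 on pixels already scaled to [0, 1]. The hypothetical helper `add_gaussian_noise` below (not from the article) exposes that divisor as a `scale` argument so both readings of "variance s" can be compared on synthetic data.

```r
## Hypothetical helper: add zero-mean Gaussian noise with sd = sqrt(s) / scale to every pixel.
## scale = 255 reproduces the article's sd = sqrt(s) / 255 on [0, 1] data;
## scale = 1 treats s as a variance on the [0, 1] scale itself.
add_gaussian_noise <- function(x, s, scale = 1) {
  x + matrix(rnorm(length(x), mean = 0, sd = sqrt(s) / scale), nrow(x), ncol(x))
}

set.seed(3)
x <- matrix(runif(1000 * 784), 1000, 784)   # stand-in for 1,000 scaled images
noisy <- add_gaussian_noise(x, s = 0.25)
var(as.vector(noisy - x))                   # close to 0.25
```

The two readings differ by a factor of 255 in standard deviation, so the scale matters when comparing noise levels across experiments.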

5. Code

```r
rm(list = ls())
library(keras)

## Read an IDX image file and return a list with the header fields and the pixel data
read_image <- function(filename) {
  con <- file(filename, "rb")
  on.exit(close(con))
  test <- list()
  test$magic_number1     <- readBin(con, integer(), size = 4, n = 1, endian = "big")
  test$number_of_images  <- readBin(con, integer(), size = 4, n = 1, endian = "big")
  test$number_of_rows    <- readBin(con, integer(), size = 4, n = 1, endian = "big")
  test$number_of_columns <- readBin(con, integer(), size = 4, n = 1, endian = "big")
  n_pixels <- test$number_of_rows * test$number_of_columns
  # Pixels are unsigned bytes (0-255), stored image by image in row-major order
  data <- readBin(con, integer(), size = 1, n = test$number_of_images * n_pixels,
                  signed = FALSE)
  # Scale to [0, 1]; one row per image, one column per pixel
  data <- matrix(data / 255, nrow = test$number_of_images, ncol = n_pixels, byrow = TRUE)
  test$data <- as.data.frame(data)
  test
}

## Read an IDX label file and add the labels to the list returned by read_image()
read_label <- function(filename, test) {
  con <- file(filename, "rb")
  on.exit(close(con))
  test$magic_number2 <- readBin(con, integer(), size = 4, n = 1, endian = "big")
  readBin(con, integer(), size = 4, n = 1, endian = "big")  # number of labels, not needed
  labels <- readBin(con, integer(), size = 1, n = test$number_of_images, signed = FALSE)
  test$label <- as.factor(labels)
  test
}

## Build a balanced training set: r/10 randomly chosen images per digit (r must be a multiple of 10)
Randomlychoose <- function(test, r) {
  idx <- unlist(lapply(0:9, function(k) {
    rows <- which(test$label == as.character(k))
    rows[sample.int(length(rows), r / 10)]
  }))
  test$data <- test$data[idx, ]
  test$label <- test$label[idx]
  test$number_of_images <- r
  test
}

## Show the first 100 images in a 10 x 10 grid
show_picture <- function(test) {
  old <- par(mfrow = c(10, 10), mar = c(0, 0, 0, 0))
  on.exit(par(old))
  for (i in 1:100) {
    # Row-major pixels fill pic[column, row]; reverse the rows so image() draws the digit upright
    pic <- matrix(unlist(test$data[i, ]), test$number_of_columns, test$number_of_rows)
    image(pic[, test$number_of_rows:1], col = grey.colors(255), axes = FALSE)
  }
}

## Add Gaussian white noise with mean m to every pixel.
## The standard deviation is sqrt(s)/255 on the [0, 1] pixel scale, as in the original code.
add_noise <- function(test, m, s) {
  n_pixels <- test$number_of_rows * test$number_of_columns
  noise <- matrix(rnorm(test$number_of_images * n_pixels, mean = m, sd = sqrt(s) / 255),
                  nrow = test$number_of_images, ncol = n_pixels)
  test$data <- test$data + noise
  test
}

## Read the data and labels of the training set and the test set
test  <- read_image("t10k-images.idx3-ubyte")
test  <- read_label("t10k-labels.idx1-ubyte", test)
train <- read_image("train-images.idx3-ubyte")
train <- read_label("train-labels.idx1-ubyte", train)
train <- Randomlychoose(train, 1000)

## View pictures
show_picture(test)
show_picture(train)
dev.off()

## Add Gaussian white noise with variance 0.1, 0.25 or 0.5, one level per run
## (calling add_noise() several times would stack the noise). Use 0 for the noise-free run.
noise_var <- 0.1
if (noise_var > 0) {
  test  <- add_noise(test, 0, noise_var)
  train <- add_noise(train, 0, noise_var)
}

## Extract the data and labels of the training set and the test set
train_x <- unname(as.matrix(train$data))
test_x  <- unname(as.matrix(test$data))
# Labels are factors with levels "0".."9": convert to integers 0-9 before one-hot encoding
train_y <- to_categorical(as.integer(as.character(train$label)), 10)
test_y  <- to_categorical(as.integer(as.character(test$label)), 10)

## Define the network
model <- keras_model_sequential()
model %>%
  layer_dense(units = 784, input_shape = 784) %>%
  layer_dropout(rate = 0.7) %>%
  layer_activation(activation = "relu") %>%
  layer_dense(units = 10) %>%
  # categorical_crossentropy rescales the outputs to sum to 1, so exp() here behaves like softmax
  layer_activation(activation = "exponential")

model %>% compile(
  loss = "categorical_crossentropy",
  optimizer = "Nadam",
  metrics = c("accuracy")
)

## Train the model: 1000 samples, batches of 100, 100 epochs
model %>% fit(train_x, train_y, epochs = 100, batch_size = 100)

## Evaluate model performance
loss_and_metrics <- model %>% evaluate(test_x, test_y, batch_size = 1000)
result <- model %>% predict(test_x)
result <- apply(result, 1, which.max) - 1   # column k corresponds to digit k - 1
err <- mean(result != as.integer(as.character(test$label)))
err   # test-set error rate; accuracy = 1 - err
```
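
read_image() and read_label() rely on the IDX layout: a 4-byte big-endian magic number (2051 for MNIST image files, 2049 for label files), one 4-byte big-endian count per dimension, then the raw unsigned bytes. The sketch below checks that parsing logic without the MNIST files by writing a tiny synthetic image file (2 images of 2 x 3 pixels) to a temporary path; `write_idx3` and `parse_idx3` are hypothetical helpers for this check only.

```r
## Hypothetical helper: write a minimal IDX3 image file
write_idx3 <- function(path, pixels, n, rows, cols) {
  con <- file(path, "wb"); on.exit(close(con))
  writeBin(as.integer(c(2051, n, rows, cols)), con, size = 4, endian = "big")
  writeBin(as.raw(pixels), con)                    # unsigned bytes, row-major
}

## Header and pixel parsing with the same readBin() calls as read_image()
parse_idx3 <- function(path) {
  con <- file(path, "rb"); on.exit(close(con))
  hdr <- readBin(con, integer(), size = 4, n = 4, endian = "big")
  px  <- readBin(con, integer(), size = 1, n = prod(hdr[2:4]), signed = FALSE)
  list(magic = hdr[1], n = hdr[2],
       data = matrix(px / 255, nrow = hdr[2], byrow = TRUE))
}

path <- tempfile(fileext = ".idx3-ubyte")
write_idx3(path, pixels = c(0, 51, 102, 153, 204, 255, 255, 0, 0, 0, 0, 255),
           n = 2, rows = 2, cols = 3)
img <- parse_idx3(path)
img$magic        # 2051
img$data[1, ]    # 0.0 0.2 0.4 0.6 0.8 1.0
```

`signed = FALSE` matters here: without it, pixel values above 127 would be read as negative numbers.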

6. Data sources

边栏推荐

- C language from entry to soil (III)

- Can recursion still play like this? Recursive implementation of minesweeping game

- Decompress the anchor and enjoy 4000w+ playback, adding a new wind to the Kwai food track?

- 研究会2022.07.22--对比学习

- From the perspective of CIA, common network attacks (blasting, PE, traffic attacks)

- Hackingtool of security tools

- A great hymn

- QoS quality of service 4 traffic regulation of QoS boundary behavior

- 给一个字符串 ① 请统计出其中每一个字母出现的次数② 请打印出字母次数最多的那一对

- 第一部分—C语言基础篇_11. 综合项目-贪吃蛇

猜你喜欢

Chapter007-FPGA学习之IIC总线EEPROM读取

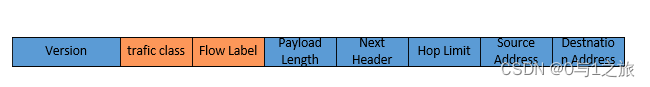

QoS服务质量三DiffServ模型报文的标记及PHB

Take you to learn C step by step (second)

Redis sentinel mechanism

QoS服务质量四QoS边界行为之流量监管

AMD64 (x86_64) architecture ABI document: upper

One book a day: machine learning and practice -- the road to the kaggle competition from scratch

Riotboard development board series notes (IX) -- buildreoot porting matchbox

![[sequential logic circuit] - register](/img/a5/c92e0404c6a970a62595bc7a3b68cd.gif)

[sequential logic circuit] - register

php 转义字符串

随机推荐

OpenCascade笔记:gp包

论文阅读:HarDNet: A Low Memory Traffic Network

Riotboard development board series notes (IX) -- buildreoot porting matchbox

学习笔记-分布式事务理论

使用堡垒机(跳板机)登录服务器

Mqtt learning

Redis sentinel mechanism

From the perspective of CIA, common network attacks (blasting, PE, traffic attacks)

C language from entry to soil function

C language start

Part II - C language improvement_ 3. Pointer reinforcement

Seminar 2022.07.22 -- Comparative learning

Nacos的高级部分

Chinese manufacturers may catch up with the humanoid robot Optimus "Optimus Prime", which has been highly hyped by musk

C language from entry to soil (II)

记账APP:小哈记账1——欢迎页的制作

解压主播狂揽4000w+播放,快手美食赛道又添新风向?

A great hymn

[sequential logic circuit] - register

Bookkeeping app: xiaoha bookkeeping 1 - production of welcome page