MatrixCube Unveiled 102: MatrixKV, a complete distributed storage system implemented in 300 lines

2022-07-25 22:36:00 【MatrixOrigin】

The previous article introduced the functions and architecture of MatrixCube in detail. MatrixCube is the component that gives the MatrixOne database its distributed capabilities. Today we will use a simple distributed storage demo to experience MatrixCube's features hands-on.

MatrixKV project introduction

The demo project is called MatrixKV, and its GitHub repository is at: https://github.com/matrixorigin/matrixkv

MatrixKV is a simple, strongly consistent distributed KV storage system. It uses Pebble as the underlying storage engine and MatrixCube as the distribution component, and it defines the simplest possible custom read/write request interface. Users can send write requests to any node and can read the data they need from any node.

Readers familiar with the TiDB architecture can think of MatrixKV as the equivalent of TiKV + PD, except that MatrixKV uses Pebble instead of RocksDB.

This experiment uses Docker to simulate a small MatrixKV cluster in order to further illustrate MatrixCube's functions and operating mechanism.

Step one: Environment preparation

Tool preparation

This experiment uses the docker and docker-compose tools, so both need to be installed. In general you can simply install Docker Desktop, which bundles the Docker engine, the CLI tools and the Compose plugin. Official installers are available for the major operating systems: https://www.docker.com/products/docker-desktop/

After installation, use the following commands to check that everything is in place. If installation succeeded, each command prints its version.

    docker -v
    docker-compose -v

Docker is cross-platform, so this experiment places no requirements on the operating system, but macOS 12+ or CentOS 8+ is recommended because those have been fully verified. This tutorial was run on macOS 12. Because the test uses a single machine with a single hard disk, Prophet's node load rebalancing (Rebalance) feature cannot take effect, so node load and data volume will be unbalanced in this test. The feature is better experienced on a full multi-machine deployment.
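The version check can also be scripted. The following is a minimal sketch in Python, not part of MatrixKV: it only assumes the two tool names used above and that each accepts the `-v` flag, and the helper names `tool_version` and `check_tools` are made up for this illustration.

```python
import shutil
import subprocess

# Tool names taken from the commands in this tutorial.
REQUIRED_TOOLS = ("docker", "docker-compose")


def tool_version(tool):
    """Return the output of `<tool> -v`, or None if the tool is missing or fails."""
    path = shutil.which(tool)
    if path is None:
        return None
    try:
        result = subprocess.run(
            [path, "-v"], capture_output=True, text=True, timeout=10, check=False
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return result.stdout.strip() or result.stderr.strip()


def check_tools(tools=REQUIRED_TOOLS):
    """Map each tool name to its version string (None when unavailable)."""
    return {tool: tool_version(tool) for tool in tools}


if __name__ == "__main__":
    for name, version in check_tools().items():
        print(f"{name}: {version or 'NOT FOUND'}")
```

A `NOT FOUND` line means the corresponding tool still has to be installed before continuing.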

Clone the code

Clone the MatrixKV code to your local machine.

    git clone https://github.com/matrixorigin/matrixkv

Step two: MatrixKV cluster configuration

In the previous article we mentioned that MatrixCube is built on the Raft distributed consensus protocol, so the minimum deployment is three nodes, and the first three nodes are Prophet scheduling nodes. The small cluster prepared for this experiment has four nodes: three Prophet nodes and one data node. We simulate them on a single machine as Docker containers.
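The three-node minimum follows from Raft's majority rule: a change is committed only once more than half of the replicas acknowledge it, and three is the smallest group that still has a majority after losing one member. The sketch below simply works through that arithmetic. The function names are illustrative and do not come from MatrixCube.

```python
def quorum(replicas):
    """Smallest number of replicas that forms a Raft majority."""
    if replicas < 1:
        raise ValueError("a Raft group needs at least one replica")
    return replicas // 2 + 1


def tolerated_failures(replicas):
    """How many replicas can fail while a majority is still reachable."""
    return replicas - quorum(replicas)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        print(f"{n} replicas: quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

With one or two replicas no failure can be tolerated, which is why a two-node deployment offers no availability benefit over a single node.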

Prophet node settings

In the /cfg folder we can see the configuration files for node0 through node3. Node0 to Node2 are Prophet nodes and Node3 is a data node. Taking Node0 as an example, a Prophet node is configured as follows:

    # RPC address of the raft-group. Nodes send raft messages and snapshots to each other through it.
    addr-raft = "node0:8081"
    # Address exposed to clients. Clients use it to send custom business requests to Cube.
    addr-client = "node0:8082"
    # Cube data directory. Each node measures storage usage on the disk that holds this
    # directory and reports it to the scheduling node.
    dir-data = "/tmp/matrixkv"

    [raft]
    # Cube batches Raft write requests: multiple writes are merged into one Raft log entry
    # and go through a single Raft proposal. This setting specifies the size of one proposal;
    # the right value depends on the application.
    max-entry-bytes = "200MB"

    [replication]
    # 1. Maximum down time of a replica in a raft-group. When a replica has been down longer
    #    than this, the scheduling node considers it failed for the long term and selects a
    #    suitable node in the cluster to recreate the replica. If the failed node restarts
    #    later, the scheduling node tells that replica to destroy itself.
    # 2. The default is 30 minutes, on the assumption that equipment failures can usually be
    #    diagnosed and recovered within 30 minutes; beyond that the node is considered
    #    unrecoverable. For the convenience of this experiment it is set to 15 seconds.
    max-peer-down-time = "15s"

    [prophet]
    # Name of this Prophet scheduling node
    name = "node0"
    # External RPC address of this Prophet scheduling node
    rpc-addr = "node0:8083"
    # Mark this node as a Prophet node
    prophet-node = true

    [prophet.schedule]
    # All nodes in the Cube cluster send regular heartbeats to the scheduling Leader node.
    # When a node has sent no heartbeat for longer than this, the scheduling node marks it
    # as Down and rebuilds all of its Shards on other nodes of the cluster. When the node
    # recovers, all Shards on it receive a destroy scheduling message.
    # Also set to 10 seconds for the convenience of the experiment; the default is 30 minutes.
    max-container-down-time = "10s"

    # Prophet embeds an ETCD as the component that stores metadata
    [prophet.embed-etcd]
    # Cube's Prophet scheduling nodes start one after another. Suppose there are three
    # scheduling nodes node0, node1 and node2, and node0 starts first: node0 then forms a
    # single-replica etcd, so `join` is left empty for node0. When the later nodes (node1,
    # node2) start, `join` is set to the Etcd peer address of a node that is already running.
    join = ""
    # Client address of the embedded Etcd
    client-urls = "http://0.0.0.0:8084"
    # Advertise client address of the embedded Etcd; if empty, defaults to `client-urls`
    advertise-client-urls = "http://node0:8084"
    # Peer address of the embedded Etcd
    peer-urls = "http://0.0.0.0:8085"
    # Advertise peer address of the embedded Etcd; if empty, defaults to `peer-urls`
    advertise-peer-urls = "http://node0:8085"

    [prophet.replication]
    # Maximum number of replicas per Shard. When the Cube scheduling node's periodic
    # inspection finds a Shard whose replica count does not match this value, it schedules
    # the creation or deletion of replicas.
    max-replicas = 3

Node1 and Node2 are configured almost exactly like Node0. The only required difference is that `join` in the ETCD section must point to a preceding node.
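To make the per-node differences concrete, the sketch below lists the settings that change from one Prophet node to the next. It is an illustration only. The node names and ports follow the Node0 file above, but the `join` value is an assumption: the sketch supposes that later nodes join through node0's advertised peer URL, which should be checked against the actual files in /cfg. The helper name `prophet_overrides` is hypothetical.

```python
def prophet_overrides(index, first_node="node0"):
    """Settings that differ between Prophet nodes, keyed by their TOML path."""
    name = f"node{index}"
    # ASSUMPTION: every later node joins via the first node's etcd peer address.
    join = "" if name == first_node else f"http://{first_node}:8085"
    return {
        "addr-raft": f"{name}:8081",
        "addr-client": f"{name}:8082",
        "prophet.name": name,
        "prophet.rpc-addr": f"{name}:8083",
        "prophet.embed-etcd.join": join,
        "prophet.embed-etcd.advertise-client-urls": f"http://{name}:8084",
        "prophet.embed-etcd.advertise-peer-urls": f"http://{name}:8085",
    }


if __name__ == "__main__":
    for i in range(3):
        print(f"# node{i}")
        for key, value in prophet_overrides(i).items():
            print(f'{key} = "{value}"')
```

Everything not listed here, such as the timeouts and `max-replicas`, stays identical across the three Prophet nodes.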

Data node settings

As a data node, Node3 has a simpler configuration. Apart from setting prophet-node to false, no other section needs extra configuration.

    addr-raft = "node3:8081"
    addr-client = "node3:8082"
    dir-data = "/tmp/matrixkv"

    [raft]
    max-entry-bytes = "200MB"

    [prophet]
    name = "node3"
    rpc-addr = "node3:8083"
    prophet-node = false
    external-etcd = [
        "http://node0:8084",
        "http://node1:8084",
        "http://node2:8084",
    ]

Docker-Compose settings

Docker-compose starts the containers according to the configuration in docker-compose.yml. In that file we need to change each node's data directory to a directory of our own choosing. Taking Node0 as an example:

    node0:
      image: matrixkv
      ports:
        - "8080:8080"
      volumes:
        - ./cfg/node0.toml:/etc/cube.toml
        # /data/node0 must be changed to a local directory of your choice
        - /data/node0:/data/matrixkv
      command:
        - --addr=node0:8080
        - --cfg=/etc/cube.toml
        # shard will split after 1024 bytes
        - --shard-capacity=1024

Step three: Cluster start

With these options configured, the MatrixKV repository already contains a Dockerfile for building the image and a Makefile that drives the build.

Run the make docker command directly in the MatrixKV directory to package MatrixKV as a whole into an image.

    # On an x86 Mac or on Linux, run the following command directly (make docker).
    # On an ARM Mac, first change `docker build -t matrixkv -f Dockerfile .` in the Makefile
    # to `docker buildx build --platform linux/amd64 -t matrixkv -f Dockerfile .`
    make docker

Users in mainland China who find the default Go module source too slow for downloading dependencies can add a Chinese Go proxy setting to the Dockerfile:

    RUN go env -w GOPROXY=https://goproxy.cn,direct

Then the docker-compose up command starts four MatrixKV containers from the image, each with its own node configuration, forming our four-node cluster of Node0 to Node3.

    docker-compose up

In Docker Desktop we should now see all 4 MatrixKV nodes running as containers.

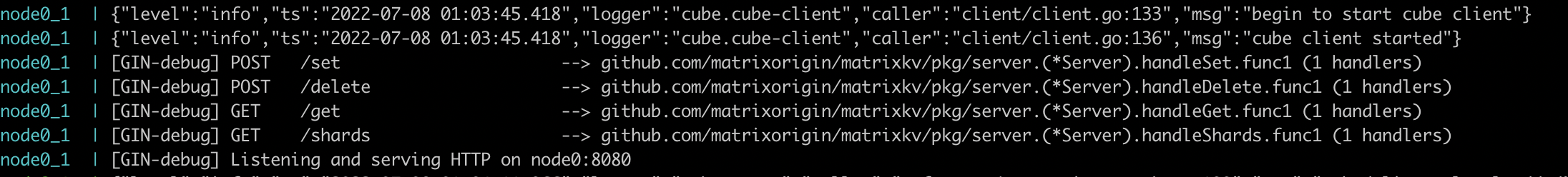

When the logs show each node starting to listen on port 8080, the cluster has finished starting.

We can also see that many data folders and some initial files have been generated in the data directory we specified.

To shut down the cluster, stop the process in the terminal where you started it, or stop any node through the Docker Desktop graphical interface.

Step four: Read/write request interface and routing

Once the cluster is running, we can read and write data. MatrixKV wraps a few very simple interfaces for reading and writing data:

Write data (SET):

    curl -X POST -H 'Content-Type: application/json' -d '{"key":"k1","value":"v1"}' http://127.0.0.1:8080/set

Read data (GET):

    curl 'http://127.0.0.1:8080/get?key=k1'

Delete data (DELETE):

    curl -X POST -H 'Content-Type: application/json' -d '{"key":"k1"}' http://127.0.0.1:8080/delete
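For scripted experiments, the same three calls can be issued from Python's standard library. The sketch below mirrors the curl commands: the endpoints, JSON field names and port come from those commands, while the function names are invented for this example and the response body is treated as opaque text because its format is not described here.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # node0, as in the curl examples
JSON_HEADERS = {"Content-Type": "application/json"}


def build_set_request(key, value, base_url=BASE_URL):
    """POST /set with a {"key": ..., "value": ...} JSON body."""
    body = json.dumps({"key": key, "value": value}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/set", data=body, headers=JSON_HEADERS, method="POST"
    )


def build_get_request(key, base_url=BASE_URL):
    """GET /get?key=..., with the key URL-encoded."""
    query = urllib.parse.urlencode({"key": key})
    return urllib.request.Request(f"{base_url}/get?{query}", method="GET")


def build_delete_request(key, base_url=BASE_URL):
    """POST /delete with a {"key": ...} JSON body."""
    body = json.dumps({"key": key}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/delete", data=body, headers=JSON_HEADERS, method="POST"
    )


def send(request, timeout=5.0):
    """Send a prepared request and return the raw response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the cluster running, `send(build_set_request("k1", "v1"))` followed by `send(build_get_request("k1"))` reproduces the first two curl calls. Building the request separately from sending it keeps the request shape easy to inspect without a live cluster.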

The previous article introduced the Shard Proxy in MatrixCube. This component lets us send requests to any node of the cluster: whether the request is a write, a read or a delete, the Shard Proxy automatically routes it to the node that handles it.

For example, we can write data on node0 and read it back from any of node0 through node3 with exactly the same result.

    # Send a write request to node0
    curl -X POST -H 'Content-Type: application/json' -d '{"key":"k1","value":"v1"}' http://127.0.0.1:8080/set
    # Read from node0 through node3
    curl 'http://127.0.0.1:8080/get?key=k1'
    curl 'http://127.0.0.1:8081/get?key=k1'
    curl 'http://127.0.0.1:8082/get?key=k1'
    curl 'http://127.0.0.1:8083/get?key=k1'
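The read-from-every-node check can be automated. The following sketch reads one key through each of the four host ports used above and reports whether all nodes returned the same body. It is a convenience script under stated assumptions, not part of MatrixKV: the function names are hypothetical, and the `fetch` parameter exists only so the comparison logic can be exercised without a running cluster.

```python
import urllib.parse
import urllib.request

# Host ports used in the curl commands: node0 through node3.
NODE_PORTS = (8080, 8081, 8082, 8083)


def http_get(url, timeout=5.0):
    """Fetch a URL and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def read_from_all_nodes(key, ports=NODE_PORTS, fetch=http_get):
    """Read `key` through every node and return {port: raw response body}."""
    query = urllib.parse.urlencode({"key": key})
    return {port: fetch(f"http://127.0.0.1:{port}/get?{query}") for port in ports}


def all_agree(responses):
    """True when every node returned exactly the same body."""
    return len(set(responses.values())) <= 1
```

With the cluster running, `all_agree(read_from_all_nodes("k1"))` should be true after the write to node0 has returned.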

With a larger system configuration and a larger volume of reads and writes, you could also verify more extreme scenarios, for example several clients rapidly reading from every node while checking that each written value they read is up to date and consistent. This would verify MatrixCube's strong consistency: data read from any node at any time is the latest and is consistent.

Step five: Shard query and splitting

MatrixCube splits a Shard once the amount of data written to it reaches a certain size. In MatrixKV we set the Shard capacity to 1024 bytes, so writing more data than this triggers a split. MatrixKV provides a simple interface for querying the Shards in the current cluster or on the current node.

    # Shards in the current cluster
    curl http://127.0.0.1:8080/shards
    # Shards on the current node
    curl 'http://127.0.0.1:8080/shards?local=true'

After starting the cluster we can see that in the initial state the cluster has only 3 Shards, with ids 4, 6 and 8, and that they are stored on node0, node2 and node3.

After we write data larger than 1024 bytes, we can see that the Shards on node0, node2 and node3 have all split. Each original Shard has become two new Shards, so the 3 initial Shards have turned into six Shards with ids 11, 12, 13, 15, 16 and 17.

    # test.json is the test data; its content must strictly follow the key/value format, for example {"key":"item0","value":"XXXXXXXXXXX"}
    curl -X POST -H 'Content-Type: application/json' -d @test.json http://127.0.0.1:8083/set
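A test.json large enough to trigger a split can be generated rather than typed by hand. The sketch below builds a body in the key/value format shown above whose value alone exceeds the 1024-byte capacity passed as `--shard-capacity`. The helper names are illustrative, and the filler character is arbitrary.

```python
import json

SHARD_CAPACITY = 1024  # bytes, the --shard-capacity value from docker-compose.yml


def make_payload(key, min_value_bytes=SHARD_CAPACITY + 1):
    """Return a JSON body whose value alone is larger than the shard capacity."""
    value = "X" * min_value_bytes
    return json.dumps({"key": key, "value": value})


def write_test_file(path="test.json", key="item0"):
    """Write the payload to `path` and return its size in bytes."""
    payload = make_payload(key)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(payload)
    return len(payload.encode("utf-8"))
```

Calling `write_test_file()` in the directory where curl is run produces a file that the command above can send as is.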

We can still read the data we wrote from any node.

Step six: Node changes and replica generation

Now let's look at how MatrixCube guarantees high availability. We can shut down a single container manually through Docker Desktop to simulate a machine failure in a real environment.

After the large write in step five, the whole system contains 6 Shards, each with 3 Replicas. We now shut node3 down manually.

Any further attempt to access node3 now fails.


Read requests sent to the other nodes still return the data, however. This is the overall high availability of a distributed system at work.

According to our earlier settings, once store3's heartbeat has not reached Prophet for 10 seconds, Prophet considers this Store offline. Checking the current replicas, it finds that every Shard now has only two Replicas. To satisfy the 3-replica requirement, Prophet automatically looks for an idle node and copies the Shards onto it, here node1. Now let's look at the Shards on each node.

You can see that node1, which previously had no Shards, now holds the same 6 Shards as node0 and node2. This is Prophet's automatic replica generation, which always keeps three replicas in the system to ensure high availability.
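The behaviour described here can be imitated with a toy model. The sketch below is not Prophet's actual scheduling algorithm. It only assumes the two settings from the configuration (a 10-second heartbeat limit and 3 replicas per Shard) and a made-up placement rule that picks the live store holding the fewest Shards. All class and function names are hypothetical.

```python
from dataclasses import dataclass, field

MAX_CONTAINER_DOWN_TIME = 10.0  # seconds, from [prophet.schedule]
MAX_REPLICAS = 3                # from [prophet.replication]


@dataclass
class Store:
    name: str
    last_heartbeat: float                     # time of the last heartbeat, in seconds
    shards: set = field(default_factory=set)  # shard ids with a replica on this store


def down_stores(stores, now, limit=MAX_CONTAINER_DOWN_TIME):
    """Names of stores whose last heartbeat is older than `limit` seconds."""
    return {s.name for s in stores if now - s.last_heartbeat > limit}


def repair(stores, now, max_replicas=MAX_REPLICAS):
    """Re-create missing replicas on live stores; returns (shard_id, store_name) moves."""
    down = down_stores(stores, now)
    live = [s for s in stores if s.name not in down]
    shard_ids = sorted(set().union(*(s.shards for s in stores)))
    moves = []
    for shard_id in shard_ids:
        holders = [s for s in live if shard_id in s.shards]
        candidates = [s for s in live if shard_id not in s.shards]
        while len(holders) < max_replicas and candidates:
            # Toy placement rule: choose the live store with the fewest shards.
            target = min(candidates, key=lambda s: (len(s.shards), s.name))
            target.shards.add(shard_id)
            candidates.remove(target)
            holders.append(target)
            moves.append((shard_id, target.name))
    return moves


if __name__ == "__main__":
    ids = {11, 12, 13, 15, 16, 17}
    cluster = [
        Store("node0", 100.0, set(ids)),
        Store("node1", 100.0),
        Store("node2", 100.0, set(ids)),
        Store("node3", 85.0, set(ids)),  # last heartbeat 15 s ago, treated as down
    ]
    print(repair(cluster, now=100.0))
```

In this model node3 has been silent for 15 seconds, so each of the six Shards is left with two live replicas and a third is created on node1, matching the outcome observed in the experiment.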

Besides replica generation, if the failed node held the Raft Group Leader of a Shard, that Shard's Raft Group holds a new election and elects a new Leader, and the new Leader then initiates the request to generate a new replica. You can test this yourself and verify it in the logs.

MatrixKV code walkthrough

Through the whole experiment we have seen how, with MatrixCube's help, the single-machine KV storage engine Pebble becomes a distributed KV store. The code MatrixKV itself has to implement is very small: in total there are only 4 Go files and fewer than 300 lines of code.

/cmd/matrixkv.go: The entry point of the program. It performs basic initialization and starts the service.

/pkg/config/config.go: Defines the data structure for MatrixKV's overall configuration.

/pkg/metadata/metadata.go: Defines the data structures of the read/write requests exchanged between users and MatrixKV.

/pkg/server/server.go: The main body of MatrixKV, which does three things:

- Defines the MatrixKV server data structure.

- Defines the Executor implementations for Set/Get/Delete and other related requests.

- Uses the Pebble library as the single-machine storage engine, implements the DataStorage interface specified by MatrixCube, and sets the corresponding methods in MatrixCube's Config.

Giveaway

Add our assistant on WeChat, WeChat ID: MatrixOrigin001

- Send a complete screen recording of your first MatrixKV experience to receive a limited-edition MatrixOrigin T-shirt.

- Send a complete screen recording of your first MatrixKV experience and publish it on CSDN to receive a JD.com gift card worth 200 yuan plus a limited-edition MatrixOrigin T-shirt.

Summary

As the second article in the MatrixCube series, this piece used an experiment with MatrixKV, a custom distributed storage system built on MatrixCube and Pebble, to further demonstrate how MatrixCube operates. It also showed that 300 lines of code are enough to quickly build a complete, strongly consistent distributed storage system. The next installment will bring a more in-depth walkthrough of the MatrixCube code. Stay tuned.
