当前位置:网站首页>20000 words + 30 pictures | what's the use of chatting about MySQL undo log, redo log and binlog?

20000 words + 30 pictures | what's the use of chatting about MySQL undo log, redo log and binlog?

2022-07-27 18:40:00 【Java sharing Officer】

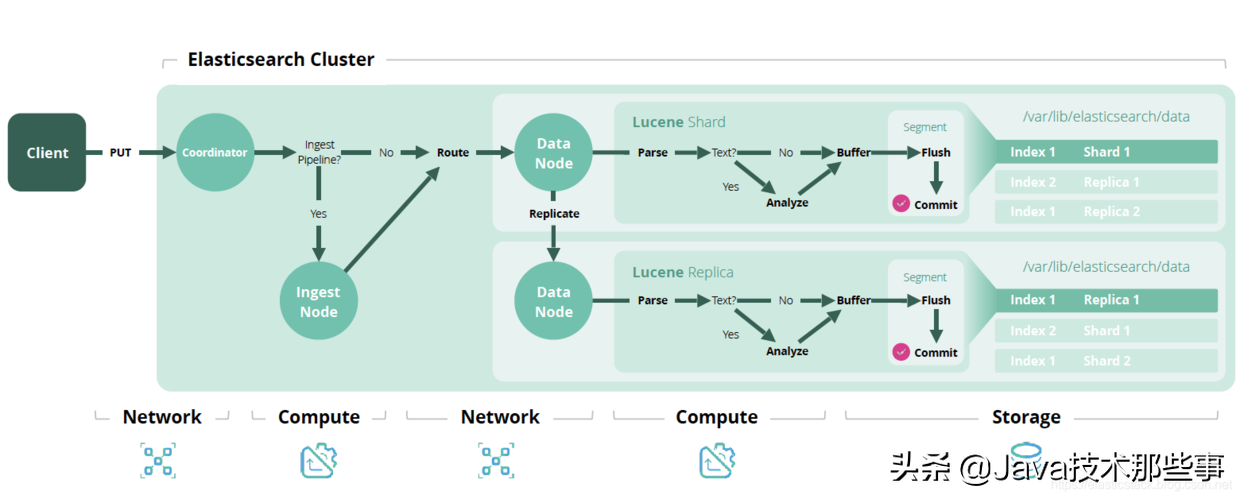

We know the process of a query statement , This belongs to 「 read 」 A recorded process , Here's the picture :

Query statement execution process

that , To perform a update sentence , What happened during that time ?, Like this one update sentence :

UPDATE t_user SET name = 'xiaolin' WHERE id = 1;The process of query statement , UPDATE statement will also go through the same :

- The client first establishes the connection through the connector , The connector will determine the identity of the user ;

- Because this is a update sentence , Therefore, no query cache is required , But there are update statements on the table , It will empty the query cache of the entire table , So the query cache is very weak , stay MySQL 8.0 This function is removed ;

- The parser will recognize keywords through lexical analysis update, Watch name and so on , Build a syntax tree , Then I will do grammar analysis , Determine whether the input statement is consistent with MySQL grammar ;

- The preprocessor will determine whether the tables and fields exist ;

- The optimizer determines the execution plan , because where In the condition of id It's the primary key index , So I decided to use id This index ;

- The actuator is responsible for the specific execution , Find this line , And then update .

however , The process of updating statements involves undo log( Rollback log )、redo log( Redo log ) 、binlog ( Archive log ) These three kinds of logs :

- undo log( Rollback log ): yes Innodb Logs generated by the storage engine layer , Implements the... In transactions Atomicity , The main For transaction rollback and MVCC.

- redo log( Redo log ): yes Innodb Logs generated by the storage engine layer , Implements the... In transactions persistence , The main Used for power failure and other fault recovery ;

- binlog ( Archive log ): yes Server Log generated by layer , The main Used for data backup and master-slave replication ;

So this time I have this question , See how these three types of logs work .

Why undo log?

We are implementing a “ Additions and deletions ” At the time of statement , Although no input begin Open transactions and commit Commit transaction , however MySQL Meeting Implicitly open a transaction To execute “ Additions and deletions ” Of the statement , Automatically commit transactions after execution , This ensures that the execution is completed “ Additions and deletions ” After the statement , We can see in the database table in time “ Additions and deletions ” The results of the .

Whether to execute a statement to automatically commit transactions , By autocommit Parameters determine , The default is on . therefore , To perform a update Statements also use transactions .

that , Consider a question . A transaction is in the process of execution , Before committing the transaction , If MySQL There was a breakdown , How to rollback to the data before the transaction ?

If every time we execute a transaction , Record the information required for rollback in a log , Then something happens in the middle of the transaction MySQL After collapse , You don't have to worry about not being able to rollback to the data before the transaction , We can rollback the data before the transaction through this log .

Implementing this mechanism is undo log( Rollback log ), It guarantees the transaction ACID characteristic The atomicity of (Atomicity).

undo log Is a log used to undo fallback . Before the transaction is committed ,MySQL The data before the update will be recorded to undo log In the log file , When the transaction rolls back , You can use undo log To roll back . Here's the picture :

Roll back the transaction

whenever InnoDB The engine operates on a record ( modify 、 Delete 、 newly added ) when , All the information required for rollback should be recorded in undo log in , such as :

- stay Insert When a record , Write down the primary key value of this record , In this way, only the record corresponding to the primary key value needs to be rolled back Delete Just fine ;

- stay Delete When a record , Write down everything in this record , In this way, when rolling back, the records composed of these contents Insert Just go to the table ;

- stay to update When a record , Write down the old values of the updated columns , In this way, these columns will be rolled back Update to old value Just fine .

When a rollback occurs , Just read undo log The data in , Then do the opposite . For example, when delete When a record ,undo log I will write down all the contents in the record , When the rollback operation is performed , Just read undo log The data in , Then proceed insert operation .

Different operations , The contents to be recorded are also different , So different types of operations ( modify 、 Delete 、 newly added ) Produced undo log The format of is also different , Specific to each operation undo log I won't introduce the format of , If you are interested, you can check it yourself .

Generated by each update operation of a record undo log Each format has a roll_pointer A pointer and a trx_id Business id:

- adopt trx_id You can know which transaction modified the record ;

- adopt roll_pointer The pointer can put these undo log String into a linked list , This list is called version chain ;

The version chain is shown in the figure below :

Version chain

in addition ,undo log There's another function , adopt ReadView + undo log Realization MVCC( Multi version concurrency control ).

about 「 Read the submission 」 and 「 Repeatable 」 For isolation level transactions , Their snapshot reads ( Ordinary select sentence ) It's through Read View + undo log To achieve , The difference between them is to create Read View The timing is different :

- 「 Read the submission 」 The isolation level is at each select Will generate a new Read View, Also means that , Read the same data multiple times during the transaction , The data read before and after may be inconsistent , Because another transaction may have modified the record during this period , And commit the transaction .

- 「 Repeatable 」 The isolation level is generated when a transaction is started Read View, Then this is used throughout the transaction Read View, This ensures that the data read during the transaction is the record before the transaction is started .

These two isolation levels are achieved through 「 The transaction Read View Fields in 」 and 「 Two hidden columns in the record (trx_id and roll_pointer)」 Comparison of , If visible lines are not satisfied , Will follow undo log Find the records that meet the visibility in the version chain , So as to control the behavior of concurrent transactions when accessing the same record , This is called MVCC( Multi version concurrency control ). See my article for specific implementation : How the transaction isolation level is achieved ?

therefore ,undo log Two functions :

- Implement transaction rollback , Guarantee atomicity of transactions . During transaction processing , If there's an error or the user All right ROLLBACK sentence ,MySQL You can use undo log The historical data in restores the data to the state before the start of the transaction .

- Realization MVCC( Multi version concurrency control ) One of the key factors .MVCC It's through ReadView + undo log Realized .undo log Save multiple copies of historical data for each record ,MySQL Performing snapshot read ( Ordinary select sentence ) When , According to the transaction Read View Information in , Along undo log The version chain of finds records that meet its visibility .

Why Buffer Pool?

MySQL All the data is stored in the disk , So when we want to update a record , You have to read the record from the disk first , Then modify the record in memory . After modifying this record, choose to write it directly back to the disk , Or choose to cache it ?

Of course, caching is good , So next time a query statement hits this record , Directly read the records in the cache , No need to get data from disk .

So ,Innodb The storage engine designed a Buffer pool (Buffer Pool), To improve the read-write performance of the database .

Buffer Poo

With Buffer Poo after :

- When the data is read , If the data exists in Buffer Pool in , The client will read directly Buffer Pool Data in , Otherwise, read it from the disk .

- When modifying data , If the data exists in Buffer Pool in , Then modify it directly Buffer Pool The page where the data is located in , Then set its page as dirty ( The memory data of this page is inconsistent with the data on the disk ), To reduce the number of disks I/O, Dirty pages are not immediately written to disk , Subsequently, the background thread chooses an appropriate time to write the dirty pages to the disk .

Buffer Pool Cache what ?

InnoDB The stored data will be divided into several 「 page 」, Take page as the basic unit of disk and memory interaction , The default size of a page is 16KB. therefore ,Buffer Pool You also need to press 「 page 」 To differentiate .

stay MySQL When it starts ,InnoDB Would be Buffer Pool Apply for a continuous memory space , Then follow the default 16KB The size of is divided into pages , Buffer Pool Pages in are called cache pages . At this time, these cache pages are idle , And then as the program runs , Pages on disk will be cached to Buffer Pool in .

therefore ,MySQL When it started , You will observe that the virtual memory space used is very large , The physical memory space used is very small , This is because only these virtual memories are accessed , The operating system will trigger the page missing interrupt , Request physical memory , Then establish a mapping relationship between the virtual address and the physical address .

Buffer Pool In addition to caching 「 Index page 」 and 「 Data pages 」, It also includes Undo page , Insert cache 、 adaptive hash index 、 Lock information, etc .

Undo A page is a record of what ?

After opening the transaction ,InnoDB Before the layer updates the record , First, record the corresponding undo log, If it's an update operation , You need to write down the old values of the updated Columns , That is to generate a undo log,undo log Will write Buffer Pool Medium Undo page .

Look up a record , Just buffer one record ?

No, it isn't .

When we query a record ,InnoDB It will load the data of the whole page into Buffer Pool in , Load... To page Buffer Pool after , And then through the 「 Page directory 」 To locate a specific record .

The answer to the question of what the page structure looks like and how the index queries data can be found in this article : Look at it from a different angle B+ Trees

Why redo log ?

Buffer Pool It improves the efficiency of reading and writing , But the problem is ,Buffer Pool It's memory based , Memory is always unreliable , In case of power failure, restart , The dirty page data will be lost before the disk is dropped .

To prevent data loss caused by power failure , When a record needs to be updated ,InnoDB The engine will write the record first redo log Inside , And update memory , At this time, the update is finished . meanwhile ,InnoDB The engine will... At the right time , Cached by the background thread in Buffer Pool The dirty pages are flushed to disk , This is it. WAL (Write-Ahead Logging) technology , refer to MySQL The write operation is not immediately updated to disk , It's recorded in the log first , And then update it to disk at the right time .

The process is as follows :

What is? redo log?

redo log It's a physical log , Records what changes have been made to a data page , Yes XXX In tablespace YYY Data pages ZZZ The offset is done in place of AAA to update , Each time a transaction is executed, such a physical log will be generated .

At transaction commit , Just put redo log Persistent to disk , You don't need to cache in Buffer Pool The dirty page data in is persisted to disk . When the system crashes , Although dirty page data is not persisted , however redo log Has persisted , next MySQL After restart , According to redo log The content of , Restore all data to the latest state .

Be modified Undo page , It is necessary to record the corresponding redo log Do you ?

Needed .

After opening the transaction ,InnoDB Before the layer updates the record , First, record the corresponding undo log, If it's an update operation , You need to write down the old values of the updated Columns , That is to generate a undo log,undo log Will write Buffer Pool Medium Undo page .

however , In modifying the Undo You need to record the corresponding before the page redo log, therefore Record the modification first Undo Page redo log , And then you can really change it Undo page .

redo log and undo log What's the difference ?

These two kinds of logs belong to InnoDB Log storage engine , The difference is :

- redo log Recorded this transaction 「 After completion 」 Data status of , The record is updated 「 after 」 Value ;

- undo log Recorded this transaction 「 Prior to the start 」 Data status of , The record is updated 「 front 」 Value ;

A crash occurred before the transaction was committed , After restart, it will pass undo log Roll back the transaction , A crash occurred after the transaction was committed , After restart, it will pass redo log Resume business , Here's the picture :

Transaction recovery

So there is redo log, Re pass WAL technology ,InnoDB It can guarantee that even if the database is restarted abnormally , Records submitted before will not be lost , This ability is called crash-safe( Crash recovery ). You can see it , redo log It ensures the persistence of the four features of transactions .

redo log To write to disk , Data should also be written to disk , Why do you want to do more than that ?

write in redo log Method uses the append operation , So disk operation is Sequential writing , To write data, you need to find the write location first , Then write to disk , So disk operation is Write at random .

On disk 「 Sequential writing 」 Than 「 Write at random 」 More efficient , therefore redo log Writing to disk is less expensive .

in the light of 「 Sequential writing 」 Why is it better than 「 Write at random 」 Faster this problem , It can be compared to that you have a book , It must be much faster to write page by page in order than to find the corresponding page to write a word .

It can be said that this is WAL Another advantage of Technology :MySQL Write operation from disk 「 Write at random 」 Turned into 「 Sequential writing 」, Improve the execution performance of statements . This is because MySQL The write operation is not immediately updated to disk , It's recorded in the log first , And then update it to disk at the right time .

thus , For why redo log We have two answers to this question :

- Achieve transaction persistence , Give Way MySQL Yes crash-safe The ability of , Can guarantee MySQL Sudden collapse at any time , Records submitted before restart will not be lost ;

- Write from 「 Write at random 」 Turned into 「 Sequential writing 」, promote MySQL Performance of writing to disk .

Produced redo log Is it written directly to the disk ?

No, it isn't .

actually , Execute a transaction , Produced redo log Nor is it written directly to disk , Because this will produce a lot of I/O operation , And the disk runs much slower than the memory .

therefore ,redo log It also has its own cache —— redo log buffer, Whenever a redo log when , It will be written to redo log buffer, The following figure shows the persistence to disk :

Transaction recovery

redo log buffer Default size 16 MB, Can pass innodb_log_Buffer_size Parameter dynamic resizing , Increasing its size can make MySQL Handle 「 Large transactions 」 No need to write to disk , And then improve writing IO performance .

redo log When to brush the plate ?

cached redo log buffe Inside redo log It's still in memory , When does it refresh to disk ?

There are several opportunities :

- MySQL Normally closed ;

- When redo log buffer The write amount recorded in is greater than redo log buffer Half of the memory space , It will trigger the drop ;

- InnoDB Background threads of every 1 second , take redo log buffer Persist to disk .

- Each transaction commit will be cached in redo log buffer Inside redo log Persist directly to disk ( This strategy can be implemented by innodb_flush_log_at_trx_commit Parameter control , Next I'll say ).

innodb_flush_log_at_trx_commit What parameters control ?

When executing an update statement alone ,InnoDB The engine will start a transaction by itself , During the execution of the update statement , Generated redo log First write to redo log buffer in , Then when the transaction is committed , Then cache it in redo log buffer Medium redo log In groups 「 Sequential writing 」 To disk .

This one above redo log The time to brush the disk is when the transaction is committed , This default behavior .

besides ,InnoDB Two other strategies are also provided , By the parameter innodb_flush_log_at_trx_commit Parameter control , The desirable value is :0、1、2, The default value is 1, The strategies represented by these three values are as follows :

- When setting this Parameter is 0 when , Indicates that every time a transaction is committed , still take redo log Remain redo log buffer in , In this mode, the write to disk operation will not be triggered when the transaction is committed .

- When setting this Parameter is 1 when , Indicates that every time a transaction is committed , all Cache in redo log buffer Inside redo log Persist directly to disk , This ensures MySQL Data will not be lost after abnormal restart .

- When setting this Parameter is 2 when , Indicates that every time a transaction is committed , Are just cached in redo log buffer Inside redo log writes redo log file , Note write to 「 redo log file 」 It does not mean that it is written to the disk , Because there is a file system in the operating system Page Cache( If you want to know Page Cache, You can see This article ),Page Cache It is specially used to cache file data , So write 「 redo log file 」 It means that it is written to the file cache of the operating system .

I drew a picture , So that you can understand :

innodb_flush_log_at_trx_commit by 0 and 2 When , When will redo log Write to disk ?

InnoDB Background threads of every 1 second :

- For parameters 0 : Will slow down the existence of redo log buffer Medium redo log , By calling

write()Write to the operating system Page Cache, And then callfsync()Persist to disk . So the parameter is 0 The strategy of ,MySQL The crash of the process will result in the loss of all transaction data in the last second ; - For parameters 2 : call fsync, Cache in the operating system Page Cache Inside redo log Persist to disk . So the parameter is 2 The strategy of , The comparison value is 0 Safer in case , because MySQL A process crash does not lose data , Only if the operating system crashes or the system is powered down , All transaction data can be lost in the last second .

After adding the background thread ,innodb_flush_log_at_trx_commit The timing of disc brushing is shown in the figure below :

What are the application scenarios for these three parameters ?

The data security and write performance of these three parameters are compared as follows :

- Data security : Parameters 1 > Parameters 2 > Parameters 0

- Write performance : Parameters 0 > Parameters 2> Parameters 1

therefore , Data security and write performance cannot be achieved at the same time , Do not pursue data security , Sacrificial performance ; Do not pursue performance , Sacrifice data security .

- In some scenarios that require high data security , obviously

innodb_flush_log_at_trx_commitThe parameter needs to be set to 1. - Lost in some tolerable database crashes 1s Data scenario , We can set this value to 0, This can significantly reduce the log synchronization to disk I/O operation .

- The compromise between security and performance is parameters 2, Although parameters 2 No parameters 0 High performance , But data security is better than parameters 0 strong , Because parameters 2 As long as the operating system doesn't go down , Even if the database crashes , No loss of data , At the same time, the performance is more convenient than the parameters 1 high .

redo log What if the file is full ?

By default , InnoDB The storage engine has 1 Redo log filegroups ( redo log Group),「 Redo log file group 」 By 2 individual redo log The composition of the document , these two items. redo The file name of the log is :ib_logfile0 and ib_logfile1 .

Redo log file group

In the redo log group , Every redo log File The size of is fixed and consistent , Suppose that each redo log File The upper limit is 1 GB, Then the total can be recorded 2GB The operation of .

The redo log file group is created with Write in cycles Working in a different way , Write from the beginning , Write to the end and go back to the beginning , Equivalent to a ring .

therefore InnoDB The storage engine will first write ib_logfile0 file , When ib_logfile0 When the file is full , Will switch to ib_logfile1 file , When ib_logfile1 When the file is full , Will switch back to ib_logfile0 file .

Redo the log file group writing process

We know redo log To prevent Buffer Pool Designed for the loss of dirty pages in , If the system runs ,Buffer Pool The dirty pages of are flushed to disk , that redo log The corresponding records are useless , At this time we erase these old records , To make room for new update operations .

redo log It's a circular write , Equivalent to a ring ,InnoDB use write pos Express redo log Where the current record is written , use checkpoint Indicates the current position to erase , Here's the picture :

In the picture :

- write pos and checkpoint The movement of is clockwise ;

- write pos ~ checkpoint Part between ( The red part of the picture ), Used to record new update operations ;

- check point ~ write pos Part between ( The blue part of the picture ): Dirty data page records to be landed ;

If write pos Catch up with checkpoint, Means redo log The papers are full , At this time MySQL No new update operations can be performed , in other words MySQL Will be blocked ( Therefore, for systems with a large amount of concurrency , Appropriate settings redo log File size is very important ), here Will stop to Buffer Pool The dirty pages in are flushed to disk , Then mark redo log Which records can be erased , Then the old redo log Records are erased , When the old records are erased to make room ,checkpoint Will move back ( Clockwise in the figure ), then MySQL Return to normal operation , Continue with the new update operation .

therefore , once checkpoint The process is that dirty pages are flushed to disk and become clean pages , Then mark redo log The process of which records can be overwritten .

Why binlog ?

Previously introduced undo log and redo log These two logs are Innodb Generated by the storage engine .

MySQL After completing an update operation ,Server The layer also generates a binlog, When the transaction is committed , All... Generated during the execution of this thing binlog Unified writing Enter into binlog file .

binlog A file is a log that records all database table structure changes and table data modifications , The operation of query class will not be recorded , such as SELECT and SHOW operation .

Why binlog, There will be more redo log?

This question follows MySQL The timeline of .

In the beginning MySQL Not in InnoDB engine ,MySQL The engine is MyISAM, however MyISAM No, crash-safe The ability of ,binlog Logs can only be used for archiving .

and InnoDB It's another company that introduced... In the form of plug-ins MySQL Of , Since we only rely on binlog It's not crash-safe The ability of , therefore InnoDB Use redo log To achieve crash-safe Ability .

redo log and binlog What's the difference? ?

There are four differences between the two logs .

1、 It can be applied to different objects :

- binlog yes MySQL Of Server Log of layer implementation , All storage engines can use ;

- redo log yes Innodb Storage engine implementation logs ;

2、 The file format is different :

- binlog Yes 3 Format types , Namely STATEMENT( The default format )、ROW、 MIXED, The difference between the following :

- STATEMENT: Every modification of data SQL Will be recorded as binlog in ( It is equivalent to recording the logical operation , So for this format , binlog It can be called a logical log ), Master slave replication slave And then according to SQL Statement recurrence . but STATEMENT Problems with dynamic functions , For example, you used uuid perhaps now These functions , The result you execute on the master library is not the result you execute on the slave Library , This function that changes at any time will cause inconsistency in the copied data ;

- ROW: What is the final change of the record line data ( Log in this format , It can't be called a logical log ), There will be no STATEMENT The problem of dynamic function under . but ROW The disadvantage is that the result of each row of data changes will be recorded , For example, execute batch update sentence , As many rows of data are updated, so many records will be generated , send binlog File is too large. , And in the STATEMENT Only one... Will be recorded in the format update Just a statement ;

- MIXED: Contains STATEMENT and ROW Pattern , It will be used automatically according to different situations ROW Patterns and STATEMENT Pattern ;

- redo log It's a physical log , It records what changes have been made on a data page , For example, yes. XXX In tablespace YYY Data pages ZZZ The offset is done in place of AAA to update ;

3、 Write in different ways :

- binlog It's appending , Fill a file , Just create a new file and continue writing , Will not overwrite previous logs , Keep a full amount of logs .

- redo log It's circular , The log space size is fixed , Write it all down and start from scratch , Save dirty page logs that are not flushed to disk .

4、 Different uses :

- binlog For backup and recovery 、 Master slave copy ;

- redo log Used for power failure and other fault recovery .

If you are not careful, the data in the entire database is deleted , Be able to use redo log File recovery data ?

Not available redo log File recovery , Only use binlog File recovery .

because redo log The file is written circularly , It can erase logs while writing , A physical log that records only data that has not been flushed to disk , The data that has been swiped into the disk will be from redo log Erase from file .

binlog The file saves the full amount of logs , That is, all data changes are saved , In theory, just record it in binlog The data on the , Can be recovered , So if you accidentally delete the data in the entire database , Have to use binlog File recovery data .

How to implement master-slave replication ?

MySQL Master-slave replication of depends on binlog , That is, records MySQL All changes on and stored on disk in binary form . The process of copying is to binlog The data in is transferred from the master library to the slave library .

This process is generally asynchronous Of , That is, the thread executing transaction operations on the primary database will not wait for replication binlog Thread synchronization completed for .

MySQL Master slave replication process

MySQL The master-slave replication process of the cluster is sorted into 3 Stages :

- write in Binlog: Main library write binlog journal , Commit transaction , And update the local storage data .

- Sync Binlog: hold binlog Copy to all slave Libraries , Each slave library handle binlog Write to the staging log .

- The playback Binlog: The playback binlog, And update the data in the storage engine .

The detailed process is as follows :

- MySQL After receiving the request from the client to submit the transaction, the main library , Will first write binlog, Commit the transaction again , Update the data in the storage engine , When the transaction is committed , Return to the client “ Successful operation ” Response .

- A special... Will be created from the library I/O Threads , Connect to the main library log dump Threads , To receive... From the main library binlog journal , And then binlog iw relay log In my relay log , Then return to the main library “ Replication success ” Response .

- From the library, a file is created for playback binlog The thread of , To read relay log relay logs , Then playback binlog Update the data in the storage engine , Finally, the data consistency between master and slave is realized .

After master-slave replication , You can write data only to the main database , Read only when reading data from the library , In this way, even if the write request will lock the table or lock the record , Nor will it affect the execution of read requests .

MySQL Master slave architecture

Whether the more libraries, the better ?

No, it isn't .

Because the number of slave libraries increases , Connected from the library I/O There are also many threads , The main library needs to create as many log dump Thread to handle the copy request , The resource consumption of the main database is relatively high , At the same time, it is also limited by the network bandwidth of the main library .

So in practice , A main database is generally similar to 2~3 A slave (1 Set of database ,1 Lord 2 from 1 Standby host ), This is the of one master and many followers MySQL Cluster structure .

MySQL What other models are there for master-slave replication ?

There are three main types :

- Synchronous replication :MySQL The thread that commits the transaction from the master database needs to wait for the successful response of all replication from the slave database , To return the client results . This method is used in actual projects , It basically doesn't work , There are two reasons : First, the performance is very poor , The response is returned because it needs to be copied to all nodes ; Second, the usability is also very poor , There is a problem with either the master database or all the slave databases , Will affect the business .

- Asynchronous replication ( Default model ):MySQL The thread that commits the transaction in the main library does not wait binlog Synchronize to each slave library , It returns the client result . In this mode, once the main library goes down , Data will be lost .

- Semi-synchronous replication :MySQL 5.7 A copy method added after version , In between , The transaction thread does not have to wait for all successful replication responses from the library , As long as part of the replication is successful, the response comes back , For example, one master and two slave clusters , As long as the data is successfully copied to any slave database , The transaction thread of the main library can return to the client . such Semi synchronous replication , It takes into account the advantages of asynchronous replication and synchronous replication , Even if the primary library goes down , At least one has the latest data from the library , There is no risk of data loss .

binlog When to brush the plate ?

During transaction execution , First write down the journal binlog cache(Server Layer of cache), When the transaction is submitted , And then binlog cache writes binlog In file .

MySQL to binlog cache Allocated a piece of memory , One per thread , Parameters binlog_cache_size Used to control within a single thread binlog cache The amount of memory occupied . If it exceeds the specified size of this parameter , It's going to be staged to disk .

When binlog cache Will write binlog file ?

When the transaction is committed , Actuator handle binlog cache The entire transaction in is written to binlog In file , And empty binlog cache. Here's the picture :

binlog cach

Although each thread has its own binlog cache, But they all end up in the same binlog file :

- In the picture write, It means to write the log to binlog file , But it doesn't persist the data to disk , Because the data is still stored in the file system page cache in ,write The write speed of is still relatively fast , Because disk is not involved I/O.

- In the picture fsync, The operation of persisting data to disk , This will involve disks I/O, So often fsync This will cause the disk to I/O elevated .

MySQL Provide a sync_binlog Parameter to control the binlog The frequency of brushing to the disk :

- sync_binlog = 0 When , Indicates that every time a transaction is committed, only write, No fsync, It is up to the operating system to decide when to persist the data to disk ;

- sync_binlog = 1 When , Indicates that each transaction is committed write, Then execute immediately fsync;

- sync_binlog =N(N>1) When , Indicates every transaction submitted write, But cumulatively N After a business fsync.

stay MySQL The default setting in the system is sync_binlog = 0, That is, do not do any mandatory disk refresh instructions , The performance at this time is the best , But the risk is also the biggest . Because once the host is restarted abnormally , stay binlog cache All in binlog Logs will be lost .

And when sync_binlog Set to 1 When , It's the safest setting, but it's the most expensive setting . Because when set to 1 When , Even if the host is restarted abnormally , It's lost at most binlog cache An unfinished transaction in , There is no material impact on the actual data , It has too much impact on the write performance .

If you can accommodate a small amount of business binlog Risk of log loss , In order to improve the performance of writing , Usually sync_binlog Set to 100~1000 Some value in .

Three logs are finished , So far we can make a summary ,update Statement execution .

After the optimizer analyzes the execution plan with the lowest cost , The actuator starts the update operation according to the execution plan .

Update a record specifically UPDATE t_user SET name = 'xiaolin' WHERE id = 1; The process is as follows :

- The actuator is responsible for the specific execution , Will call the interface of the storage engine , Search through the primary key index tree to get id = 1 This line records :

- If id=1 The data page of this row is already in buffer pool in , It will be directly returned to the actuator for updating ;

- If the record is not in buffer pool, Read data pages from disk into buffer pool, Return the record to the actuator .

- After the actuator gets the clustered index record , I will check whether the records before and after the update are the same :

- If it is the same, the subsequent update process will not be carried out ;

- If it is different, both the pre update record and the updated record will be passed to as parameters InnoDB layer , Give Way InnoDB Actually perform the operation of updating records ;

- Open transaction , InnoDB Before the layer updates the record , First, record the corresponding undo log, Because this is an update operation , You need to write down the old values of the updated Columns , That is to generate a undo log,undo log Will write Buffer Pool Medium Undo page , However, in modifying the Undo You need to record the corresponding before the page redo log, therefore Record the modification first Undo Page redo log , And then you can really change it Undo page .

- InnoDB The layer starts to update the record , according to WAL technology , Record the modification data page first redo log , Then you can really modify the data page . The process of modifying a data page is to modify Buffer Pool The page where the data is located in , Then set its page as dirty , To reduce the number of disks I/O, Dirty pages are not immediately written to disk , Subsequently, the background thread chooses an appropriate time to write the dirty pages to the disk .

- thus , A record has been updated .

- After an update statement is executed , Then start recording the corresponding binlog, What's recorded at this time binlog Will be saved to binlog cache, Not flushed to hard disk binlog file , Only when a transaction is committed will all the transactions in the transaction run uniformly binlog Refresh to hard disk .

- Transaction submission , The rest is 「 Two-phase commit 」 It's something , Let's talk about this .

Why a two-phase submission is needed ?

After the transaction is committed ,redo log and binlog Must be persisted to disk , But these two are independent logic , A semi successful state may occur , This will cause the logic inconsistency between the two logs .

for instance , hypothesis id = 1 The fields of this row of data name The value of was originally 'jay', And then execute UPDATE t_user SET name = 'xiaolin' WHERE id = 1; If you're persistent redo log and binlog In the process of two logs , A semi successful state has occurred , So there are two situations :

- If in the future redo log After brushing to disk , MySQL Suddenly it went down , and binlog I haven't had time to write .MySQL After restart , adopt redo log Be able to make Buffer Pool in id = 1 This line of data name The field is restored to the new value xiaolin, however binlog There is no record of this update statement , In the master-slave architecture ,binlog Will be copied to the slave library , because binlog This update statement is missing , From this line of the library name Field is old value jay, Inconsistent with the value of the main library ;

- If in the future binlog After brushing to disk , MySQL Suddenly it went down , and redo log I haven't had time to write . because redo log Not yet , This transaction is invalid after crash recovery , therefore id = 1 This line of data name The field is still the old value jay, and binlog This update statement is recorded in it , In the master-slave architecture ,binlog Will be copied to the slave library , The update statement is executed from the library , So this business name The field is a new value xiaolin, Inconsistent with the value of the main library ;

You can see , In persistence redo log and binlog These two logs , If there is a semi successful state , This will cause data inconsistency in the master-slave environment . This is because redo log Data that affects the master database ,binlog Affect the data from the library , therefore redo log and binlog Consistency must be maintained to ensure the consistency of master-slave data .

MySQL In order to avoid the logic inconsistency between the two logs , Used 「 Two-phase commit 」 To solve , Two phase commit is actually a distributed transaction consistency protocol , It can ensure that multiple logical operations are not all successful , Or all failure , There will be no semi successful state .

Two phase commit splits the commit of a single transaction into 2 Stages , They are... Respectively 「 Get ready (Prepare) Stage 」 and 「 Submit (Commit) Stage 」, At each stage, the coordinator (Coordinator) And participants (Participant) Joint completion . Be careful , Do not submit (Commit) Phase and commit The statement is confused ,commit Statement execution time , Will include submissions (Commit) Stage .

Take boxing for example , Two boxers ( participants ) Before the game starts , The referee ( The coordinator ) Will confirm the status of the two boxers in the middle , It's like asking if you're ready ?

- Preparation stage : The referee ( The coordinator ) Will ask two boxers in turn ( participants ) Are you ready to , Then the boxer heard it and responded , If you feel ready , Will tell the referee that they are ready ; If not, you are not ready ( For example, I haven't worn my fist yet ), They will tell the referee that they are not ready .

- Submission phase : If two boxers ( participants ) All the answers are ready , The referee ( The coordinator ) Announce the official start of the competition , Two boxers can fight directly ; If any boxer ( participants ) The answer is not ready , The referee ( The coordinator ) The game will be suspended , Corresponding to the rollback operation in the transaction .

What is the process of two-phase submission ?

stay MySQL Of InnoDB In the storage engine , Turn on binlog Under the circumstances ,MySQL Will maintain at the same time binlog Journal and InnoDB Of redo log, In order to ensure the consistency of the two logs ,MySQL Used Inside XA Business ( Yes , There are also external factors XA Business , Not relevant to this article , I won't introduce ), Inside XA Transaction by binlog As a coordinator , The storage engine is a participant .

When the client executes commit Statement or in the case of automatic submission ,MySQL The interior opens a XA Business , Complete in two stages XA Commit of transaction , Here's the picture :

Two-phase commit

As you can see from the diagram , The commit process of a transaction has two phases , Namely take redo log The write of is divided into two steps :prepare and commit, Interspersed in the middle binlog, As follows :

- prepare Stage : take XID( Inside XA The transaction ID) Write to redo log, At the same time redo log The corresponding transaction status is set to prepare, And then redo log Refresh to hard disk ;

- commit Stage : hold XID Write to binlog, And then binlog Refresh to disk , Then call the commit transaction interface of the engine , take redo log The status is set to commit( Set the transaction to commit Post state , Brush into disk redo log file , therefore commit The state can also brush the disk );

What happens when you restart abnormally ?

Let's take a look at the different moments in the two-phase commit ,MySQL What happens when you restart abnormally ? There are moments in the figure below A And the moment B Can crash :

moment A And the moment B

No matter the moment A( already redo log, Not written yet binlog), Or the moment B ( Written redo log and binlog, Not written yet commit identification ) collapse , At this time redo log All in prepare state .

stay MySQL After restart, it will scan in sequence redo log file , Meet in prepare State of redo log, Just take redo log Medium XID Go to binlog See if this... Exists XID:

- If binlog There is no current internal XA The transaction XID, explain redolog Finish scrubbing , however binlog Not yet , Then roll back the transaction . Corresponding time A Crash recovery .

- If binlog There are current internal XA The transaction XID, explain redolog and binlog Have finished brushing the disc , Then commit the transaction . Corresponding time B Crash recovery .

You can see , For being in prepare Stage redo log, The transaction can be committed , Transactions can also be rolled back , It depends on whether you can binlog Found in and redo log same XID, Commit the transaction if any , If not, roll back the transaction . So that's a guarantee redo log and binlog The consistency of these two logs .

So , Two phase submission is based on binlog Write success is the identification of successful transaction submission , because binlog Write successfully , It means being able to binlog Found in and redo log same XID.

be in prepare Stage redo log Plus the whole binlog, Commit the transaction upon restart ,MySQL Why do we do this ?

binlog It has been written in , And then it's taken from the library ( Or use this binlog Recovered Library ) Use .

therefore , This transaction should also be committed on the main database . Use this strategy , The data of the master database and the standby database are consistent .

When the transaction is not committed ,redo log Will it be persisted to disk ?

It will be .

The execution of an intermediate process redo log It's also written directly in redo log buffer Medium , These are cached in redo log buffer Inside redo log Will also be 「 Background thread 」 Persistent to disk every second .

in other words , When the transaction is not committed ,redo log It can also be persisted to disk .

Some of you might ask , If mysql collapsed , The transaction has not been committed redo log The disk has been persisted ,mysql After restart , The data is inconsistent ?

don 't worry , This situation mysql Restart will rollback , Because when the transaction is not committed ,binlog It has not been persisted to disk .

therefore , redo log It can be persisted to disk before the transaction is committed , however binlog Must be after the transaction is committed , To persist to disk .

What's the problem with the two-stage submission ?

Two phase commit ensures the data consistency of the two log files , But the performance is very poor , There are mainly two aspects of impact :

- disk I/O High frequency : about “ double 1” To configure , Each transaction is committed twice fsync( Brush set ), Once it was redo log Brush set , Another time is binlog Brush set .

- Lock competition is fierce : Although two-phase submission can guarantee 「 Single business 」 The contents of the two logs are consistent , But in 「 A lot of business 」 Under the circumstances , There is no guarantee that the order of submission is the same , therefore , Based on the two-stage submission process , You also need to add a lock to ensure the atomicity of the submission , So as to ensure that in the case of multiple transactions , The two logs are submitted in the same order .

Why two-phase commit disks I/O The frequency will be very high ?

binlog and redo log Corresponding cache space in memory ,binlog Will be cached in binlog cache,redo log Will be cached in redo log buffer, The time when they are persisted to the disk is controlled by the following two parameters . Generally, we want to avoid the risk of log loss , These two parameters will be set to 1:

- When sync_binlog = 1 When , Indicates that each commit transaction will binlog cache Inside binlog Direct persistence to disk ;

- When innodb_flush_log_at_trx_commit = 1 when , Indicates that every time a transaction is committed , Will be cached in redo log buffer Inside redo log Persist directly to disk ;

You can see , If sync_binlog and When innodb_flush_log_at_trx_commit Set to 1, So during each transaction commit , Will at least call 2 Secondary disc brushing operation , Once it was redo log Brush set , Once it was binlog Discs , So this becomes a performance bottleneck .

Why lock competition is fierce ?

Early MySQL In the version , By using prepare_commit_mutex Lock to ensure the order of transaction submission , Only when a transaction obtains a lock can it enter prepare Stage , Until commit The lock cannot be released until the phase ends , The next transaction can continue prepare operation .

Through locking, the problem of sequence consistency is perfectly solved , But when the concurrency is large , This will lead to contention for locks , Poor performance .

Group to submit

MySQL Introduced binlog Group to submit (group commit) Mechanism , When multiple transactions are committed , Will be more than one binlog The brush operation is combined into one , This reduces the number of disks I/O The number of times , if 10 The time cost of queuing up to brush the disk in turn is 10, Then I will 10 The time cost of one transaction brushing the disk together is similar to 1.

After introducing the group submission mechanism ,prepare Stage unchanged , Only aim at commit Stage , take commit The stage is divided into three processes :

- flush Stage : Multiple transactions will, in the order of entry binlog from cache write file ( Do not brush the disc );

- sync Stage : Yes binlog File do fsync operation ( Of multiple transactions binlog Merge one brush disc );

- commit Stage : Each transaction is done in sequence InnoDB commit operation ;

above Each stage has a queue , Each stage is protected by a lock , Therefore, the order of transaction writing is guaranteed , The first transaction that enters the queue becomes leader,leader Lead all transactions in the queue , Be fully responsible for the operation of the whole team , Inform the team that other business operations are over after completion .

Each stage has a queue

After the queue is introduced for each phase , Locks are only protected for each queue , No longer locks the entire process of committing transactions , You can see it , Lock granularity is reduced , This allows multiple phases to execute concurrently , So as to improve efficiency .

Yes binlog Group to submit , It has a redo log Group submit ?

This depends on MySQL edition ,MySQL 5.6 No, redo log Group to submit ,MySQL 5.7 Yes redo log Group to submit .

stay MySQL 5.6 In the group submission logic , Each transaction is executed separately prepare Stage , That is, each will redo log Brush set , There is no way to redo log Make group submission .

So in MySQL 5.7 In the version , Made an improvement , stay prepare Phases no longer allow transactions to execute individually redo log Brush set operation , It's postponed until the group submits flush Stage , in other words prepare The stages merge in flush Stage .

This optimization will redo log Your brush disk is delayed to flush In the middle of the stage ,sync Before the stage . Write by delaying redo log The way , by redolog Made a group write , such binlog and redo log It's all optimized .

Next, I will introduce the process of each stage , Note that the following procedure is for “ double 1” To configure (sync_binlog and innodb_flush_log_at_trx_commit All configured to 1).

flush Stage

The first transaction will be flush Stage Leader, At this point, the following transactions are Follower :

next , Get the transaction group in the queue , By the green affairs group Leader Yes rodo log Do it once write + fsync, That is, the transactions in the same group will be redolog Brush set :

It's done prepare After the stage , Green is generated during the execution of this group of transactions binlog write in binlog file ( call write, Not invoke fsync, So I can't brush the plate ,binlog Cache in the file system of the operating system ).

From the above process , You can know flush The role of the stage queue is For support redo log Group submission for .

If the database crashes after this step , because binlog There is no record of this group of transactions in , therefore MySQL This group of transactions will be rolled back after restart .

sync Stage

The green group of transactions binlog Write to binlog After the document , It does not immediately perform the disk brushing operation , It is Will wait for a while , The waiting time is determined by Binlog_group_commit_sync_delay Parameter control , The purpose is to combine more transactions binlog, Then we will brush the dishes together , The following process :

however , In the process of waiting , If the number of transactions reaches Binlog_group_commit_sync_no_delay_count Value of parameter setting , You don't have to wait , I'll be right there binlog Brush set , Here's the picture :

From the above process , You can know sync The role of the stage queue is Used to support binlog Group submission for .

If you want to improve binlog Effect of group submission , This can be achieved by setting the following two parameters :

binlog_group_commit_sync_delay= N, Waiting for N Subtle after , Call directly fsync, Will be in the file system page cache Medium binlog Brush set , Also is to 「 binlog file 」 Persist to disk .binlog_group_commit_sync_no_delay_count = N, Indicates that if the number of transactions in the queue reaches N individual , Just ignore it binlog_group_commit_sync_delay Set up , Call directly fsync, Will be in the file system page cache Medium binlog Brush set .

If the database crashes after this step , because binlog Transaction record already exists in ,MySQL It will pass after restart redo log The data of disk swiping continues to be submitted .

commit Stage

The last to enter commit Stage , Call the commit transaction interface of the engine , take redo log The status is set to commit.

commit The role of the stage queue is to undertake sync Stage business , Complete the final engine commit , bring sync The next set of transactions can be processed as early as possible , Maximize the efficiency of group submissions .

MySQL disk I/O Very high , What are the optimization methods ?

Now we know that the transaction is committed , Need to put binlog and redo log Persist to disk , So if there is MySQL disk I/O Very high phenomenon , We can control the following parameters , Come on “ Delay ” binlog and redo log Time to brush the plate , This reduces the cost of disk I/O The frequency of :

- Set two parameters for group submission : binlog_group_commit_sync_delay and binlog_group_commit_sync_no_delay_count Parameters , Delay binlog Time to brush the plate , Thereby reducing binlog Number of times to brush the disk . This method is based on “ Extra intentional waiting ” To achieve , Therefore, the response time of the statement may be increased , But there's no risk of losing data .

- take sync_binlog Set to greater than 1 Value ( The more common is 100~1000), Indicates every transaction submitted write, But cumulatively N After a business fsync, It's a delay binlog Time to brush the plate . But the risk is , It will be lost when the main engine is powered down N A business binlog journal .

- take innodb_flush_log_at_trx_commit Set to 2. Indicates that every time a transaction is committed , Are just cached in redo log buffer Inside redo log writes redo log file , Note write to 「 redo log file 」 It does not mean that it is written to the disk , Because there is a file system in the operating system Page Cache, Designed to cache file data , So write 「 redo log file 」 It means that it is written to the file cache of the operating system , Then it is up to the operating system to control the timing of persistence to disk . But the risk is , Data will be lost when the host is powered down .

summary

Update a record specifically UPDATE t_user SET name = 'xiaolin' WHERE id = 1; The process is as follows :

- The actuator is responsible for the specific execution , Will call the interface of the storage engine , Search through the primary key index tree to get id = 1 This line records :

- If id=1 The data page of this row is already in buffer pool in , It will be directly returned to the actuator for updating ;

- If the record is not in buffer pool, Read data pages from disk into buffer pool, Return the record to the actuator .

- After the actuator gets the clustered index record , I will check whether the records before and after the update are the same :

- If it is the same, the subsequent update process will not be carried out ;

- If it is different, both the pre update record and the updated record will be passed to as parameters InnoDB layer , Give Way InnoDB Actually perform the operation of updating records ;

- Open transaction , InnoDB Before the layer updates the record , First, record the corresponding undo log, Because this is an update operation , You need to write down the old values of the updated Columns , That is to generate a undo log,undo log Will write Buffer Pool Medium Undo page , However, in modifying the Undo You need to record the corresponding before the page redo log, therefore Record the modification first Undo Page redo log , And then you can really change it Undo page .

- InnoDB The layer starts to update the record , according to WAL technology , Record the modification data page first redo log , Then you can really modify the data page . The process of modifying a data page is to modify Buffer Pool The page where the data is located in , Then set its page as dirty , To reduce the number of disks I/O, Dirty pages are not immediately written to disk , Subsequently, the background thread chooses an appropriate time to write the dirty pages to the disk .

- thus , A record has been updated .

- After an update statement is executed , Then start recording the corresponding binlog, What's recorded at this time binlog Will be saved to binlog cache, Not flushed to hard disk binlog file , Only when a transaction is committed will all the transactions in the transaction run uniformly binlog Refresh to hard disk .

- Transaction submission ( For the sake of illustration , The process of group submission is not discussed here , Just say two-stage submission ):

- prepare Stage : take redo log The corresponding transaction status is set to prepare, And then redo log Refresh to hard disk ;

- commit Stage : take binlog Refresh to disk , Then call the commit transaction interface of the engine , take redo log The status is set to commit( Set the transaction to commit Post state , Brush into disk redo log file );

- thus , An update statement is completed .

边栏推荐

- Deep learning: GCN diagram classification case

- MySQL four locks

- [mit 6.s081] LEC 1: introduction and examples notes

- Part of speech list of common words

- Wechat applet obtains openid, sessionkey, unionid

- 你有没有在MySQL的order by上栽过跟头

- Visual studio code installation tutorial (super detailed)

- 2021.8.6 notes jsoup

- Log4j 史诗级漏洞,京东这样的大厂都中招了

- 怎么会不喜欢呢,CI/CD中轻松发送邮件

猜你喜欢

![[MIT 6.S081] Lec 9: Interrupts 笔记](/img/b6/a8d39aa7ede4eb1c5a74e6d15b3b1c.png)

[MIT 6.S081] Lec 9: Interrupts 笔记

![[MIT 6.S081] Lec 3: OS organization and system calls 笔记](/img/34/073d00245eb39844bbe1740f65fe07.png)

[MIT 6.S081] Lec 3: OS organization and system calls 笔记

解决Jsp级联问题

如何实现Word、PDF、TXT文件的全文内容检索?

「MySQL那些事」一文详解索引原理

2021.7.31 note view

Meituan Er Mian: why does redis have sentinels?

2021.7.22 note constraints

MySQL学习 Day1 DDL、DML、DQL基础查询

![[MIT 6.S081] Lec 5: Calling conventions and stack frames RISC-V 笔记](/img/1f/6384f4831718477f0567540250f352.png)

[MIT 6.S081] Lec 5: Calling conventions and stack frames RISC-V 笔记

随机推荐

[MIT 6.S081] Lab 10: mmap

2021.8.9笔记 request

An analysis of CPU explosion of a smart logistics WCS system in.Net

「MySQL那些事」一文详解索引原理

Linked list storage structure of dynamic linked list 2 stack (linkedstack Implementation)

Knowledge map - Jieba, pyhanlp, smoothnlp tools to realize Chinese word segmentation (part of speech)

[mit 6.s081] LEC 4: page tables notes

2. Change color space and color detection

知识图谱 — pyhanlp实现命名体识别(附命名体识别代码)

Conflict between blur event and click event in input box

[MIT 6.S081] Lec 3: OS organization and system calls 笔记

Chained storage structure of dynamic linked list 3 queue (linkedqueue Implementation)

Software installation related

[MIT 6.S081] Lab 4: traps

[MIT 6.S081] Lec 9: Interrupts 笔记

Generate PDM file from Navicat export table

Why don't you like it? It's easy to send mail in ci/cd

阿里p8总结的10条 SQL 优化方案(非常实用)

Run the uniapp to the mobile phone (real machine debugging)

[MIT 6.S081] Lec 5: Calling conventions and stack frames RISC-V 笔记