
Neural network

2022-08-02 07:51:00 【Ah Qiangzhen】

Artificial neural networks

The artificial neuron is the basic building block of an artificial neural network, as shown in the figure below. Let $X=[x_1,x_2,\dots,x_m]^T$ be the input vector and $W=[w_1,w_2,\dots,w_m]^T$ the connection weights. The net input of the neuron is $u=\sum_{i=1}^m w_i x_i$, or in vector form $u=W^TX$.

The figure above shows the single-layer perceptron model, where $m$ is the number of input neurons:

$$v=\sum_{i=1}^m w_i x_i,\qquad y=\begin{cases}1, & v\ge 0\\ 0, & v<0\end{cases}$$
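As a minimal sketch, the net input and the hard-limit output above can be computed directly with NumPy. The weights and inputs are arbitrary values chosen only for illustration, and the function names are hypothetical.

```python
import numpy as np

def net_input(w: np.ndarray, x: np.ndarray) -> float:
    """Net input u = W^T X = sum_i w_i * x_i (no bias term, as in the formula)."""
    return float(w @ x)

def perceptron_output(w: np.ndarray, x: np.ndarray) -> int:
    """Hard-limit output of the single-layer perceptron: 1 if v >= 0, else 0."""
    v = net_input(w, x)
    return 1 if v >= 0 else 0

# Hypothetical weights and inputs for m = 3, chosen only for illustration
w = np.array([0.5, -1.0, 0.25])
x = np.array([2.0, 1.0, 4.0])
print(net_input(w, x))          # 0.5*2 - 1.0*1 + 0.25*4 = 1.0
print(perceptron_output(w, x))  # 1
```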

The activation function, also called the excitation function, transforms the neuron's net input into its output. Four kinds are commonly used:

Linear function: $f(u)=ku+c$

Nonlinear ramp function:

$$f(u)=\begin{cases}\gamma, & u\ge\theta\\ ku, & |u|<\theta\\ -\gamma, & u\le-\theta\end{cases}$$

where $\theta$ and $\gamma$ are non-negative real numbers; $\gamma$ is called the saturation value, i.e. the maximum output of the neuron.

Threshold function (step function):

$$f(u)=\begin{cases}\beta, & u>\theta\\ -\gamma, & u\le\theta\end{cases}$$

The sigmoid function, already introduced in logistic regression, maps the range from negative infinity to positive infinity onto $(0,1)$.

The formula of the sigmoid function is:

$$f(u)=\frac{1}{1+e^{-u}}$$

The tanh function is somewhat more common than the sigmoid function. It maps the range from negative infinity to positive infinity onto $(-1,1)$, and its formula is:

$$f(u)=\frac{e^u-e^{-u}}{e^u+e^{-u}}$$
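The activation functions above can be written as small NumPy functions. This is a sketch: the default parameter values ($k$, $c$, $\theta$, $\gamma$, $\beta$) are arbitrary assumptions chosen so the results are easy to check, and the function names are hypothetical. The tanh version follows the formula literally; `np.tanh` is the numerically safer choice in practice, because the literal formula overflows for large $|u|$.

```python
import numpy as np

def linear(u, k=1.0, c=0.0):
    """Linear function f(u) = k*u + c."""
    return k * u + c

def ramp(u, k=1.0, theta=1.0, gamma=1.0):
    """Ramp: gamma for u >= theta, k*u for |u| < theta, -gamma for u <= -theta."""
    u = np.asarray(u, dtype=float)
    return np.where(u >= theta, gamma, np.where(u <= -theta, -gamma, k * u))

def step(u, theta=0.0, beta=1.0, gamma=1.0):
    """Threshold (step) function: beta for u > theta, -gamma for u <= theta."""
    u = np.asarray(u, dtype=float)
    return np.where(u > theta, beta, -gamma)

def sigmoid(u):
    """Sigmoid 1 / (1 + e^{-u}), maps the real line onto (0, 1)."""
    return 1.0 / (1.0 + np.exp(-np.asarray(u, dtype=float)))

def tanh(u):
    """tanh written out as (e^u - e^{-u}) / (e^u + e^{-u}), maps onto (-1, 1)."""
    u = np.asarray(u, dtype=float)
    return (np.exp(u) - np.exp(-u)) / (np.exp(u) + np.exp(-u))

print(sigmoid(0.0))  # 0.5
print(ramp([-2.0, 0.5, 2.0]))
print(step([-1.0, 0.0, 1.0]))
```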

Example:

Use a single-layer perceptron to solve a simple classification problem: there are four input vectors belonging to two classes. Two of the vectors have target value 1 and the other two have target value 0. The input vector matrix is:

$$\begin{bmatrix}-0.5 & -0.5 & 0.3 & 0\\ -0.5 & 0.5 & -0.5 & 1\end{bmatrix}$$

Each column is one input vector, and the target classification vector is $T=[1,1,0,0]$. Predict the target value of the new input vector $p=[-0.5,0.2]^T$:

```python
from sklearn.linear_model import Perceptron
import numpy as np

# Each row of x0 is one input vector (the transpose of the matrix above)
x0 = np.array([[-0.5, -0.5, 0.3, 0.0], [-0.5, 0.5, -0.5, 1.0]]).T
y0 = np.array([1, 1, 0, 0])

md = Perceptron().fit(x0, y0)  # build and fit the model
print("Model coefficients and intercept:", md.coef_, ",", md.intercept_)
print("Model accuracy:", md.score(x0, y0))  # check the model on the training data
print("Predicted value:", md.predict([[-0.5, 0.2]]))
```

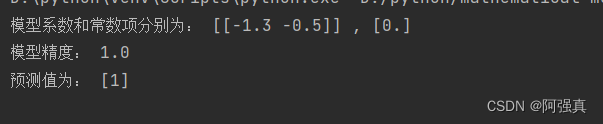

Denote the two input variables by $x_1$ and $x_2$. The resulting classification function is $v=-1.3x_1-0.5x_2$, and the target value of the new input vector $p$ is 1.
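The reported result can be checked by hand, and the classic perceptron learning rule behind it can be sketched in a few lines. This is an illustrative, hand-written version, not sklearn's implementation: weights start at zero, samples are visited in a fixed order, the update is $w \leftarrow w + \eta(t-y)x$, $b \leftarrow b + \eta(t-y)$ with an assumed learning rate $\eta=1$, and training stops after an epoch with no errors. The function names are hypothetical. The learned weights need not match sklearn's coefficients (sklearn's `Perceptron` shuffles the samples by default); what matters is that they classify the four training vectors correctly.

```python
import numpy as np

# Training data from the example: each row is one input vector
X = np.array([[-0.5, -0.5], [-0.5, 0.5], [0.3, -0.5], [0.0, 1.0]])
T = np.array([1, 1, 0, 0])
p = np.array([-0.5, 0.2])

def classify(w, b, x):
    # Hard limiter from the model above: 1 if v >= 0, else 0
    return (x @ w + b >= 0).astype(int)

# 1) Check the classification function reported above: v = -1.3*x1 - 0.5*x2
w_reported = np.array([-1.3, -0.5])
print(classify(w_reported, 0.0, X))  # [1 1 0 0]
print(classify(w_reported, 0.0, p))  # 1

# 2) Hand-written perceptron learning rule (assumed eta = 1, zero initial weights)
def train_perceptron(X, T, eta=1.0, max_epochs=100):
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(X, T):
            y = int(x @ w + b >= 0)
            if y != t:
                # Move the decision boundary toward the misclassified sample
                w += eta * (t - y) * x
                b += eta * (t - y)
                errors += 1
        if errors == 0:  # every training vector is classified correctly
            break
    return w, b

w, b = train_perceptron(X, T)
print(classify(w, b, X))  # [1 1 0 0]
print(classify(w, b, p))  # 1
```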

BP neural networks

The biggest advantage of the BP neural network is its strong nonlinear mapping ability. It is mainly used in the following four areas:

- Function approximation: train a network with input vectors and the corresponding output vectors so that it approximates a function.

- Pattern recognition

- Prediction

- Data compression

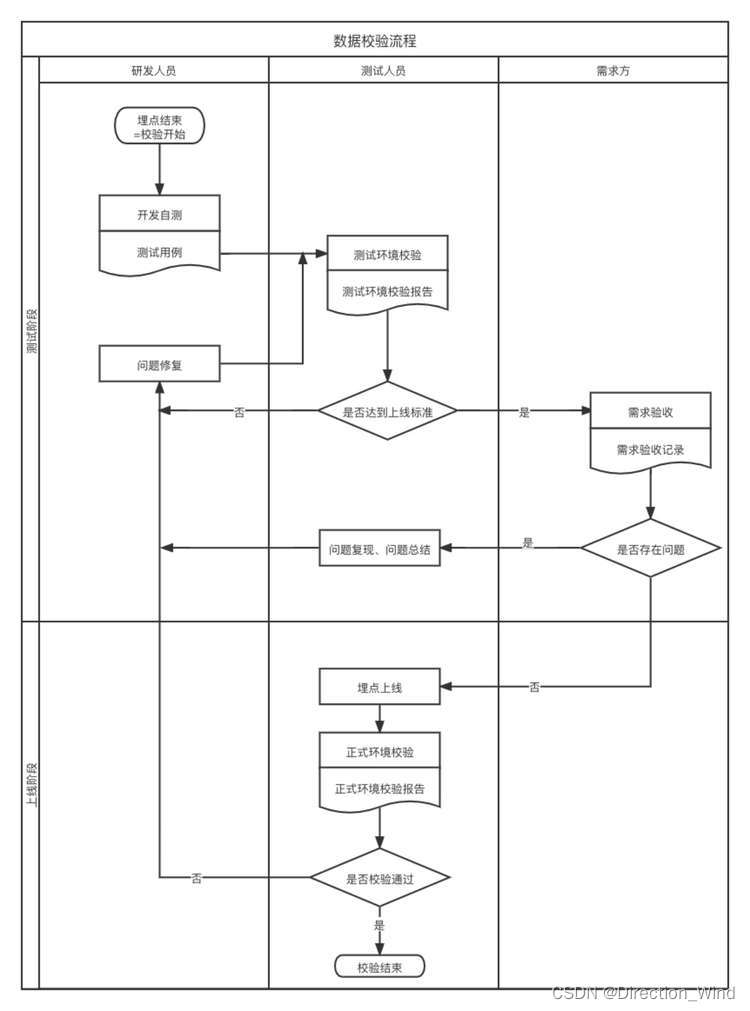

The BP neural network algorithm proceeds as follows:

(1) Initialization: assign each connection weight and threshold a random value in $[-1,1]$.

(2) Randomly choose a pattern pair $X_0=[x_1^0,x_2^0,\dots,x_n^0]$, $Y_0=[y_1^0,y_2^0,\dots,y_n^0]$ and present it to the network.

(3) Use the input pattern, the connection weights and the thresholds to compute the input of each middle (hidden) layer unit, then pass these inputs through the activation function to obtain the output of each middle-layer unit.

(4) Use the middle-layer outputs, the connection weights and the thresholds to compute the input of each output-layer unit, then pass these inputs through the activation function to obtain the response of each output-layer unit.

(5) Use the desired output pattern and the network's actual output to compute the generalized error of each output-layer unit.

(6) Use the connection weights, the output-layer generalized errors and the middle-layer outputs to compute the generalized error of each middle-layer unit.

(7) Use the output-layer generalized errors and the middle-layer outputs to correct the middle-to-output connection weights and the output-layer thresholds.

(8) Use the middle-layer generalized errors and the inputs of the input-layer units to correct the input-to-middle connection weights and the middle-layer thresholds.

(9) Again randomly select a pattern pair from the $m$ learning patterns and return to step (3). Repeat until the network's global error function $E$ is smaller than a preset small value, meaning the network has converged, or until the number of training iterations exceeds a preset limit, meaning the network has failed to converge.
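The procedure above can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the author's implementation: a single hidden layer, sigmoid activations in both layers, thresholds subtracted from each unit's net input, the global error $E=\frac12\sum_k\sum_t (y_t^k-c_t^k)^2$ over all patterns, and a fixed learning rate. The function names, learning rate, tolerance and hidden-layer size are illustrative choices. For demonstration it reuses the four input vectors of the perceptron example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(X, W, theta, V, gamma):
    """Forward pass: hidden outputs, then output-layer responses."""
    return sigmoid(sigmoid(X @ W - theta) @ V - gamma)

def train_bp(X, Y, n_hidden=4, lr=0.5, eps=1e-3, max_epochs=10000, seed=0):
    rng = np.random.default_rng(seed)
    n_in, n_out = X.shape[1], Y.shape[1]
    # Initialise weights and thresholds with random values in [-1, 1]
    W = rng.uniform(-1, 1, (n_in, n_hidden))   # input -> hidden weights
    theta = rng.uniform(-1, 1, n_hidden)       # hidden thresholds
    V = rng.uniform(-1, 1, (n_hidden, n_out))  # hidden -> output weights
    gamma = rng.uniform(-1, 1, n_out)          # output thresholds
    for epoch in range(max_epochs):
        for k in rng.permutation(len(X)):      # present patterns in random order
            x, y = X[k], Y[k]
            b = sigmoid(x @ W - theta)         # hidden-layer outputs
            c = sigmoid(b @ V - gamma)         # output-layer responses
            d = (y - c) * c * (1 - c)          # output generalized error
            e = (V @ d) * b * (1 - b)          # hidden generalized error (old V)
            V += lr * np.outer(b, d)           # correct hidden -> output weights
            gamma -= lr * d                    # and output thresholds
            W += lr * np.outer(x, e)           # correct input -> hidden weights
            theta -= lr * e                    # and hidden thresholds
        # Global error over all patterns; stop once it is small enough
        E = 0.5 * np.sum((Y - predict(X, W, theta, V, gamma)) ** 2)
        if E < eps:
            break
    return W, theta, V, gamma, E, epoch + 1

X = np.array([[-0.5, -0.5], [-0.5, 0.5], [0.3, -0.5], [0.0, 1.0]])
Y = np.array([[1.0], [1.0], [0.0], [0.0]])
W, theta, V, gamma, E, epochs = train_bp(X, Y)
labels = (predict(X, W, theta, V, gamma)[:, 0] > 0.5).astype(int)
print("global error:", E, "epochs:", epochs)
print("predicted classes:", labels)
```

The update for `d` and `e` is plain gradient descent on $E$ for a sigmoid network; other activation or error functions would change those two lines.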
