
Recognition engine ocropy -> ocropy2 -> ocropus3 summary

2022-07-23 18:58:00 【Full stack programmer webmaster】


Papers:

The OCRopus Open Source OCR System

Transfer Learning for OCRopus Model Training on Early Printed Books

GitHub:

https://github.com/tmbdev/ocropy

https://github.com/tmbdev/ocropy2

https://github.com/NVlabs/ocropus3

https://github.com/tmbdev/clstm

https://github.com/chreul/OCR_Testdata_EarlyPrintedBooks

Dependencies:

Python 2

PyTorch

System structure:

The system consists of four parts: ocropus-nlbin (binarization preprocessing), ocropus-gpageseg (text line detection and segmentation), ocropus-rpred (recognition based on over-segmentation and OpenFST, with language model correction), and ocropus-hocr (HTML display of the results).

ocropy and ocropy2 differ very little. In ocropus3 most modules are replaced by neural networks, and the project is maintained under NVlabs.

Recognition process:

ocropus-nlbin, ocrobin:

This module is responsible for image preprocessing: image normalization, noise removal, background removal, skew (angle) correction, and histogram equalization.

Normalization is the usual rescaling step. In the code below it subtracts the minimum and divides by the maximum, so pixel values fall in [0, 1].

Noise and background removal uses a percentile (rank) filter: the pixels in a 20*2 window are sorted in ascending order, and the value at the 80% position is taken as the background level. Subtracting this background from the original image leaves the foreground text.
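The background estimate described above can be sketched with SciPy's percentile filter. This is a minimal illustration, not the ocropy source: the function name `flatten_background` and the synthetic test page are assumptions, and the two passes (a tall window, then a wide one) are one plausible way to apply the 20*2 window in both directions.

```python
import numpy as np
from scipy import ndimage


def flatten_background(image, perc=80, size=20):
    """Estimate the local white level with percentile filters and remove it.

    `image` is a float array in [0, 1] with dark text on a light background.
    """
    # 80th percentile in a tall, narrow window, then in a wide, short one
    white = ndimage.percentile_filter(image, perc, size=(size, 2))
    white = ndimage.percentile_filter(white, perc, size=(2, size))
    # subtract the background; the +1 keeps paper near 1.0 and text darker
    return np.clip(image - white + 1, 0, 1)


page = np.full((60, 60), 0.7)   # greyish paper
page[28:32, 10:50] = 0.1        # one dark stroke
flat = flatten_background(page)
print(flat[0, 0], flat[30, 30])
```

Because the stroke covers only a small share of each window, the 80th percentile stays at the paper level, so the paper is pushed to white while the stroke keeps its contrast.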

Skew correction: the image is rotated by each candidate angle, the mean of every row is computed, the row means form a vector, and the variance of that vector is recorded. For example, scanning from -5 to +5 degrees in steps of 1 degree gives 11 variance values, and the angle with the largest variance is the rotation to apply. The reason is that a correctly aligned page alternates between rows of black text and rows of blank space, so the variance of the row means is largest.

Histogram equalization: all pixels are sorted in ascending order, the value at the 5% position is taken as the minimum (black level), the value at the 90% position as the maximum (white level), and the image is then rescaled between these two values.
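The percentile-based rescaling can be written in a few lines of NumPy. This is a hedged sketch: `stretch_contrast` is a hypothetical helper name, and what it performs is strictly a percentile contrast stretch, which is what the description above amounts to.

```python
import numpy as np


def stretch_contrast(flat, lo=5, hi=90):
    """Map the lo-th percentile to 0 and the hi-th percentile to 1."""
    black = np.percentile(flat, lo)
    white = np.percentile(flat, hi)
    if white <= black:
        # constant image: nothing to stretch
        return np.clip(flat, 0, 1)
    return np.clip((flat - black) / (white - black), 0, 1)


ramp = np.linspace(0.2, 0.8, 101)   # a grey ramp standing in for a page
out = stretch_contrast(ramp)
print(out.min(), out.max())
```

Everything darker than the 5% level is clipped to black and everything brighter than the 90% level to white, which is why the later threshold at mid-grey becomes reliable.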

The original program uses scipy, which is slow. The version below is rewritten with OpenCV and gives good results in both speed and quality.

from __future__ import print_function
import numpy as np
import cv2
import time


class Pre_Process(object):

    def __init__(self):
        self.zoom = 0.5
        self.perc = 50
        self.range = 20
        self.bignore = 0.2
        self.maxskew = 5
        self.skewsteps = 1
        self.escale = 1.0
        self.lo = 0.05
        self.hi = 0.9

    def normalize_raw_image(self, raw):
        ''' perform image normalization '''
        image = raw - np.amin(raw)
        if np.amax(image) == np.amin(image):
            return image
        image /= np.amax(image)
        return image

    def estimate_local_whitelevel(self, image, bignore=0.2, zoom=0.5, perc=80, range=20):
        '''flatten it by estimating the local whitelevel
        zoom for page background estimation, smaller=faster, default: %(default)s
        percentage for filters, default: %(default)s
        range for filters, default: %(default)s
        '''
        d0, d1 = image.shape
        o0, o1 = int(bignore * d0), int(bignore * d1)
        est = image[o0:d0 - o0, o1:d1 - o1]
        image_black = np.sum(est < 0.05)
        image_white = np.sum(est > 0.95)
        extreme = (image_black + image_white) * 1.0 / np.prod(est.shape)
        if np.mean(est) < 0.4:
            # mostly dark page: assume white text on black and invert
            print(np.mean(est), np.median(est))
            image = 1 - image
        if extreme > 0.95:
            # already effectively binary, no flattening needed
            flat = image
        else:
            # a box blur replaces the slower percentile filter here;
            # zoom and perc are therefore unused in this version
            m = cv2.blur(image, (range, range))
            w, h = np.minimum(np.array(image.shape), np.array(m.shape))
            flat = np.clip(image[:w, :h] - m[:w, :h] + 1, 0, 1)
        return flat

    def estimate_skew_angle(self, image, angles):
        estimates = []
        for a in angles:
            matrix = cv2.getRotationMatrix2D((int(image.shape[1] / 2), int(image.shape[0] / 2)), a, 1)
            rotate_image = cv2.warpAffine(image, matrix, (image.shape[1], image.shape[0]))
            # variance of the row means is largest when text lines are horizontal
            v = np.mean(rotate_image, axis=1)
            v = np.var(v)
            estimates.append((v, a))
        _, a = max(estimates)
        return a

    def estimate_skew(self, flat, maxskew=2, skewsteps=1):
        ''' estimate skew angle and rotate'''
        flat = np.amax(flat) - flat
        flat -= np.amin(flat)
        ma = maxskew
        ms = int(2 * maxskew * skewsteps)
        angle = self.estimate_skew_angle(flat, np.linspace(-ma, ma, ms + 1))
        matrix = cv2.getRotationMatrix2D((int(flat.shape[1] / 2), int(flat.shape[0] / 2)), angle, 1)
        flat = cv2.warpAffine(flat, matrix, (flat.shape[1], flat.shape[0]))
        flat = np.amax(flat) - flat
        return flat, angle

    def estimate_thresholds(self, flat, bignore=0.2, escale=1, lo=0.05, hi=0.9):
        '''# estimate low and high thresholds
        ignore this much of the border for threshold estimation, default: %(default)s
        scale for estimating a mask over the text region, default: %(default)s
        lo percentile for black estimation, default: %(default)s
        hi percentile for white estimation, default: %(default)s
        '''
        d0, d1 = flat.shape
        o0, o1 = int(bignore * d0), int(bignore * d1)
        est = flat[o0:d0 - o0, o1:d1 - o1]
        if escale > 0:
            # by default, we use only regions that contain
            # significant variance; this makes the percentile
            # based low and high estimates more reliable
            v = est - cv2.GaussianBlur(est, (3, 3), escale * 20)
            v = cv2.GaussianBlur(v ** 2, (3, 3), escale * 20) ** 0.5
            v = (v > 0.3 * np.amax(v))
            v = np.asarray(v, np.uint8)
            v = cv2.cvtColor(v, cv2.COLOR_GRAY2RGB)
            kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (int(escale * 50), int(escale * 50)))
            # iterations must be passed by keyword; the third positional argument is dst
            v = cv2.dilate(v, kernel, iterations=1)
            v = cv2.cvtColor(v, cv2.COLOR_RGB2GRAY)
            v = (v > 0.3 * np.amax(v))
            est = est[v]
        if len(est) != 0:
            # flatten first so the percentile lookup also works when escale <= 0
            est = np.sort(est.ravel())
            lo = est[int(lo * len(est))]
            hi = est[int(hi * len(est))]
        # rescale the image to get the gray scale image
        flat -= lo
        flat /= (hi - lo)
        flat = np.clip(flat, 0, 1)
        return flat

    def process(self, img):
        # perform image normalization(30ms)
        image = self.normalize_raw_image(img)
        # check whether the image is already effectively binarized(70ms)
        flat = self.estimate_local_whitelevel(image, self.bignore, self.zoom, self.perc, self.range)
        # estimate skew angle and rotate(100ms)
        flat, angle = self.estimate_skew(flat, self.maxskew, self.skewsteps)
        # estimate low and high thresholds(200ms)
        flat = self.estimate_thresholds(flat, self.bignore, self.escale, self.lo, self.hi)
        flat = np.asarray(flat * 255, np.uint8)
        return flat


if __name__ == "__main__":
    pp = Pre_Process()
    image = cv2.imread("0020_0022.png", 0)
    # 255.0 avoids integer division under Python 2
    image = image / 255.0
    for i in range(1):
        start = time.time()
        flat = pp.process(image)
        print("time:", time.time() - start)
        cv2.imwrite("gray.jpg", flat)
        cv2.imwrite("binary.jpg", np.uint8(255 * (flat > 128)))

In ocropus3, the preprocessing above is implemented as a network built with PyTorch. It handles noise in particular better than the traditional method, and it is also faster. The model is very small, only 26K. The network mainly uses a 2D LSTM.
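To see why the model is so small, the learnable parameters can be counted from the layer sizes in the Sequential listing given in the MDLSTM section of this post. The sketch below is plain arithmetic under the standard PyTorch parameter layout (four LSTM gates, each with input weights, recurrent weights and two bias vectors); the helper names are illustrative only.

```python
def conv2d_params(cin, cout, kernel):
    """Weights plus biases of a square-kernel Conv2d layer."""
    return cout * cin * kernel * kernel + cout


def batchnorm_params(channels):
    """Learnable scale and shift of a BatchNorm2d layer (affine=True)."""
    return 2 * channels


def lstm_params(ninput, nhidden, bidirectional=True):
    """Four gates, each with input weights, recurrent weights and two bias vectors."""
    per_direction = 4 * nhidden * (ninput + nhidden + 2)
    return per_direction * (2 if bidirectional else 1)


counts = [
    ("Conv2d(1, 8, 3x3)", conv2d_params(1, 8, 3)),
    ("BatchNorm2d(8)", batchnorm_params(8)),
    ("hlstm LSTM(8, 4)", lstm_params(8, 4)),
    ("vlstm LSTM(8, 4)", lstm_params(8, 4)),
    ("Conv2d(8, 1, 1x1)", conv2d_params(8, 1, 1)),
]
total = sum(n for _, n in counts)
for name, n in counts:
    print("%-20s %5d" % (name, n))
print("total parameters: %d" % total)       # 1001
print("float32 bytes:    %d" % (4 * total))  # 4004
```

Roughly a thousand weights, about 4 KB as float32, is consistent with a checkpoint file in the tens of kilobytes once serialization overhead and batch-norm statistics are included; the exact make-up of the 26K figure is not stated in the post.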

MDLSTM structure:

Put simply, a one-dimensional LSTM is first run along the horizontal direction of the feature map, and then a one-dimensional LSTM is run along the vertical direction.

The network structure is as follows:

Sequential(
  (0): Conv2d(1, 8, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (1): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True)
  (2): ReLU()
  (3): LSTM2(
    (hlstm): RowwiseLSTM(
      (lstm): LSTM(8, 4, bidirectional=1)
    )
    (vlstm): RowwiseLSTM(
      (lstm): LSTM(8, 4, bidirectional=1)
    )
  )
  (4): Conv2d(8, 1, kernel_size=(1, 1), stride=(1, 1))
  (5): Sigmoid()
)

The handler:

import ocrobin
import cv2
import numpy as np
import time

bm = ocrobin.Binarizer("bin-000000046-005393.pt")
print(bm.model)
image = np.mean(cv2.imread("0020_0022.png")[:, :, :3], 2)
start = time.time()
binary = bm.binarize(image)
print("time:", time.time() - start)
print(np.max(binary), np.min(binary))
# cast to uint8 so that cv2.imwrite receives a supported image depth
gray = np.uint8((1 - binary) * 255)
binary = np.uint8((binary < 0.5) * 255)
cv2.imwrite("gray.png", gray)
cv2.imwrite("bin.png", binary)

ocrorot:

This is the ocropus3 module for rotation and skew correction. Rotation here means correcting between four orientations: 0, 90, 180 and 270 degrees. Skew correction handles angles smaller than 90 degrees. ocropy can only correct small skew angles, so ocrorot is more practical. Both rotation and skew correction are implemented with neural networks.
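Once the four-way classifier has picked an orientation, undoing it is a lossless array operation. The sketch below assumes a hypothetical class index `quarter_turns` in 0..3 meaning "the page is rotated counter-clockwise by that many quarter turns"; how ocrorot actually orders its four outputs is not stated in the post.

```python
import numpy as np


def undo_page_rotation(image, quarter_turns):
    """Rotate `image` back by `quarter_turns` * 90 degrees."""
    # a negative k rotates clockwise, cancelling a counter-clockwise rotation
    return np.rot90(image, k=-quarter_turns)


page = np.arange(12).reshape(3, 4)
for k in range(4):
    rotated = np.rot90(page, k=k)
    restored = undo_page_rotation(rotated, k)
    print(k, np.array_equal(restored, page))
```

Small skew angles cannot be undone this way and need an interpolating rotation, which is why the post treats skew as a separate network.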

The rotation correction network:

Sequential(
  (0): CheckSizes [(1, 128), (1, 512), (256, 256), (256, 256)]
  (1): Conv2d(1, 8, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (2): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True)
  (3): ReLU()
  (4): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
  (5): Conv2d(8, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (6): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True)
  (7): ReLU()
  (8): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
  (9): Conv2d(16, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (10): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True)
  (11): ReLU()
  (12): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
  (13): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (14): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True)
  (15): ReLU()
  (16): Img2FlatSum
  (17): Linear(in_features=64, out_features=64, bias=True)
  (18): BatchNorm1d(64, eps=1e-05, momentum=0.1, affine=True)
  (19): ReLU()
  (20): Linear(in_features=64, out_features=4, bias=True)
  (21): Sigmoid()
  (22): CheckSizes [(1, 128), (4, 4)]
)

The skew correction network:

Sequential(
  (0): CheckSizes [(1, 128), (1, 512), (256, 256), (256, 256)]
  (1): Conv2d(1, 8, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (2): BatchNorm2d(8, eps=1e-05, momentum=0.1, affine=True)
  (3): ReLU()
  (4): Spectrum
  (5): Conv2d(8, 4, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
  (6): BatchNorm2d(4, eps=1e-05, momentum=0.1, affine=True)
  (7): ReLU()
  (8): Reshape((0, [1, 2, 3]))
  (9): Linear(in_features=262144, out_features=128, bias=True)
  (10): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True)
  (11): ReLU()
  (12): Linear(in_features=128, out_features=30, bias=True)
  (13): Sigmoid()
  (14): CheckSizes [(1, 128), (30, 30)]
)

ocrodeg:

This is also an ocropus3 module. It handles augmentation of the training data, including page rotation, random geometric transformations, random distortions, ruled surface distortions, blur, thresholding, noise, multiscale noise, random blobs, fibrous noise, foreground/background selection, and so on.
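A few of these degradations can be imitated with `scipy.ndimage` alone. The sketch below is not the ocrodeg API; `degrade` and its parameters are hypothetical, and it chains only four of the listed steps (page rotation, blur, noise, thresholding) to show the general shape of such a pipeline.

```python
import numpy as np
from scipy import ndimage


def degrade(image, angle=1.0, sigma=1.0, noise=0.1, threshold=0.5, seed=0):
    """Rotate slightly, blur, add noise and re-threshold a binary line image.

    `image` is a float array in [0, 1] where 1 means ink.
    """
    rng = np.random.RandomState(seed)
    out = ndimage.rotate(image, angle, reshape=False, order=1,
                         mode="constant", cval=0.0)
    out = ndimage.gaussian_filter(out, sigma)
    out = out + noise * rng.randn(*out.shape)
    return (out > threshold).astype(np.float32)


clean = np.zeros((40, 120), dtype=np.float32)
clean[15:25, 10:110] = 1.0      # a thick horizontal bar standing in for text
noisy = degrade(clean)
print(noisy.shape, noisy.sum())
```

Drawing `angle`, `sigma` and `threshold` at random for every sample is what turns a fixed degradation like this into data augmentation.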

ocropus-gpageseg, ocroseg:

This module is responsible for segmenting the page image into text lines. It includes detecting the image scale, splitting the lines, and computing the reading order. To split the lines, black underlines and other interfering rules are removed first, then the column separators are found, and then the text lines are found with a connected-component method.

In ocropus3 this module is implemented with a CNN, and the key component is again a 2D LSTM. The network detects a line running through the middle of each text line. A fixed height above and below this center line is then taken to form a text box, which gives the text detection result.
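The step from center lines to text boxes can be sketched with connected-component labelling. This is an illustration under assumptions, not the ocroseg code: `boxes_from_centerlines`, the threshold and the fixed `half_height` are hypothetical, and the probability map is synthetic.

```python
import numpy as np
from scipy import ndimage


def boxes_from_centerlines(prob, threshold=0.5, half_height=10):
    """Turn a center-line probability map into (top, bottom, left, right) boxes."""
    labels, count = ndimage.label(prob > threshold)
    boxes = []
    for rows, cols in ndimage.find_objects(labels):
        center = (rows.start + rows.stop) // 2
        # grow a fixed amount above and below the detected center line
        top = max(center - half_height, 0)
        bottom = min(center + half_height, prob.shape[0])
        boxes.append((top, bottom, cols.start, cols.stop))
    return sorted(boxes)


prob = np.zeros((100, 200))
prob[30:32, 20:180] = 0.9       # center line of a first text line
prob[70:72, 20:150] = 0.9       # center line of a second text line
boxes = boxes_from_centerlines(prob)
print(boxes)   # [(21, 41, 20, 180), (61, 81, 20, 150)]
```

A fixed height only works when the lines on a page have similar size; estimating the height per line would be the natural refinement.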

The network structure is as follows :

Sequential(
  (0): Conv2d(1, 16, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (1): BatchNorm2d(16, eps=1e-05, momentum=0.1, affine=True)
  (2): ReLU()
  (3): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
  (4): Conv2d(16, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (5): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True)
  (6): ReLU()
  (7): MaxPool2d(kernel_size=(2, 2), stride=(2, 2), dilation=(1, 1), ceil_mode=False)
  (8): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (9): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True)
  (10): ReLU()
  (11): LSTM2(
    (hlstm): RowwiseLSTM(
      (lstm): LSTM(64, 32, bidirectional=1)
    )
    (vlstm): RowwiseLSTM(
      (lstm): LSTM(64, 32, bidirectional=1)
    )
  )
  (12): Conv2d(64, 32, kernel_size=(1, 1), stride=(1, 1))
  (13): BatchNorm2d(32, eps=1e-05, momentum=0.1, affine=True)
  (14): ReLU()
  (15): LSTM2(
    (hlstm): RowwiseLSTM(
      (lstm): LSTM(32, 32, bidirectional=1)
    )
    (vlstm): RowwiseLSTM(
      (lstm): LSTM(64, 32, bidirectional=1)
    )
  )
  (16): Conv2d(64, 1, kernel_size=(1, 1), stride=(1, 1))
  (17): Sigmoid()
)

My own TensorFlow reimplementation: https://github.com/watersink/ocrsegment

ocropus-dewarp:

This module dewarps text line images. First, a Gaussian filter and a uniform filter are applied to the line image, and the position of the maximum in each column is taken as the center of the text in that column. Then the mean absolute distance between the rows 0..h and the center is computed over the ink pixels and multiplied by the range parameter; the result is used as half of the line height. Next, the original line image is padded above and below with that many rows of background pixels. For each column, the pixels within half a line height of the center are then cut out, which keeps the part of the image that contains text. Finally, an affine transform scales the result to the specified target height.

from __future__ import print_function
import os
import numpy as np
import matplotlib.pyplot as plt
from scipy.ndimage import interpolation,filters


def scale_to_h(img,target_height,order=1,dtype=np.dtype('f'),cval=0):
    h,w = img.shape
    scale = target_height*1.0/h
    target_width = int(scale*w)
    output = interpolation.affine_transform(1.0*img,np.eye(2)/scale,order=order,
                                            output_shape=(target_height,target_width),
                                            mode='constant',cval=cval)
    output = np.array(output,dtype=dtype)
    return output


class CenterNormalizer:

    def __init__(self,target_height=48,params=(4,1.0,0.3)):
        self.debug = int(os.getenv("debug_center") or "0")
        self.target_height = target_height
        self.range,self.smoothness,self.extra = params

    def setHeight(self,target_height):
        self.target_height = target_height

    def measure(self,line):
        h,w = line.shape
        h=float(h)
        w=float(w)
        # smooth the line and take the brightest row per column as the text center
        smoothed = filters.gaussian_filter(line,(h*0.5,h*self.smoothness),mode='constant')
        smoothed += 0.001*filters.uniform_filter(smoothed,(h*0.5,w),mode='constant')
        self.shape = (h,w)
        a = np.argmax(smoothed,axis=0)
        a = filters.gaussian_filter(a,h*self.extra)
        self.center = np.array(a,'i')
        # mean absolute distance of ink pixels from the center line
        deltas = np.abs(np.arange(h)[:,np.newaxis]-self.center[np.newaxis,:])
        self.mad = np.mean(deltas[line!=0])
        self.r = int(1+self.range*self.mad)
        if self.debug:
            plt.figure("center")
            plt.imshow(line,cmap=plt.cm.gray)
            plt.plot(self.center)
            plt.ginput(1,1000)

    def dewarp(self,img,cval=0,dtype=np.dtype('f')):
        print(img.shape==self.shape)
        assert img.shape==self.shape
        h,w = img.shape
        # The actual image img is embedded into a larger image by
        # adding vertical space on top and at the bottom (padding)
        hpadding = self.r # this is large enough
        padded = np.vstack([cval*np.ones((hpadding,w)),img,cval*np.ones((hpadding,w))])
        center = self.center + hpadding
        dewarped = [padded[center[i]-self.r:center[i]+self.r,i] for i in range(w)]
        dewarped = np.array(dewarped,dtype=dtype).T
        return dewarped

    def normalize(self,img,order=1,dtype=np.dtype('f'),cval=0):
        dewarped = self.dewarp(img,cval=cval,dtype=dtype)
        h,w = dewarped.shape
        scaled = scale_to_h(dewarped,self.target_height,order=order,dtype=dtype,cval=cval)
        return scaled


if __name__=="__main__":
    cn=CenterNormalizer()
    import cv2
    image=cv2.imread("20180727122251.png",0)
    image=(image>128)*255
    image=255-image
    image=np.float32(image)
    cn.measure(image)
    scaled=cn.normalize(image)
    print(np.max(scaled),np.min(scaled))
    cv2.imwrite("scaled.png",255-scaled)

This dewarp step still helps when the training data has little variety. If the data is already diverse, it may not bring much improvement. Operations such as an STN (spatial transformer network) may work better than this step.

ocropus-linegen:

This module generates training text line images and their labels from a corpus (The Adventures of Tom Sawyer) and font files (DejaVuSans.ttf). It is a well-written program and is certainly useful in practice.

from __future__ import print_function
import random as pyrandom
import glob
import sys
import os
import re
import codecs
import traceback
import argparse
import unicodedata
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
from PIL import ImageFont,ImageDraw
from scipy.ndimage import filters,measurements,interpolation
# scipy.misc.imsave was removed in SciPy 1.2; this script needs an older SciPy
from scipy.misc import imsave

replacements = [
    (u'[_~#]',u"~"), # OCR control characters
    (u'"',u"''"), # typewriter double quote
    (u"`",u"'"), # grave accent
    (u'[“”]',u"''"), # fancy quotes
    (u"´",u"'"), # acute accent
    (u"[‘’]",u"'"), # left single quotation mark
    (u"[“”]",u"''"), # right double quotation mark
    (u"“",u"''"), # German quotes
    (u"„",u",,"), # German quotes
    (u"…",u"..."), # ellipsis
    (u"′",u"'"), # prime
    (u"″",u"''"), # double prime
    (u"‴",u"'''"), # triple prime
    (u"〃",u"''"), # ditto mark
    (u"µ",u"μ"), # replace micro unit with greek character
    (u"[–—]",u"-"), # variant length hyphens
    (u"ﬂ",u"fl"), # expand Unicode ligatures
    (u"ﬁ",u"fi"),
    (u"ﬀ",u"ff"),
    (u"ﬃ",u"ffi"),
    (u"ﬄ",u"ffl"),
]


def normalize_text(s):
    """Apply standard Unicode normalizations for OCR.
    This eliminates common ambiguities and weird unicode
    characters."""
    #s = unicode(s)
    s = unicodedata.normalize('NFC',s)
    # the (?u) flag must come first in the pattern on recent Python versions
    s = re.sub(r'(?u)\s+',' ',s)
    s = re.sub(r'(?u)\n','',s)
    s = re.sub(r'(?u)^\s+','',s)
    s = re.sub(r'(?u)\s+$','',s)
    for m,r in replacements:
        s = re.sub((m),(r),s)
        #s = re.sub(unicode(m),unicode(r),s)
    return s


parser = argparse.ArgumentParser(description = "Generate text line training data")
parser.add_argument('-o','--base',default='linegen',help='output directory, default: %(default)s')
parser.add_argument('-r','--distort',type=float,default=1.0)
parser.add_argument('-R','--dsigma',type=float,default=20.0)
parser.add_argument('-f','--fonts',default="tests/DejaVuSans.ttf")
parser.add_argument('-F','--fontlist',default=None)
parser.add_argument('-t','--texts',default="tests/tomsawyer.txt")
parser.add_argument('-T','--textlist',default=None)
parser.add_argument('-m','--maxlines',default=200,type=int,
                    help='max # lines for each directory, default: %(default)s')
parser.add_argument('-e','--degradations',default="lo",
                    help="lo, med, or hi; or give a file, default: %(default)s")
parser.add_argument('-j','--jitter',default=0.5)
parser.add_argument('-s','--sizes',default="40-70")
parser.add_argument('-d','--display',action="store_true")
parser.add_argument('--numdir',action="store_true")
parser.add_argument('-C','--cleanup',default='[_~#]')
parser.add_argument('-D','--debug_show',default=None,
                    help="select a class for stepping through")
args = parser.parse_args()

if "-" in args.sizes:
    lo,hi = args.sizes.split("-")
    sizes = range(int(lo),int(hi)+1)
else:
    sizes = [int(x) for x in args.sizes.split(",")]

if args.degradations=="lo":
    # sigma +/- threshold +/-
    deglist = """
    0.5 0.0 0.5 0.0
    """
elif args.degradations=="med":
    deglist = """
    0.5 0.0 0.5 0.05
    1.0 0.3 0.4 0.05
    1.0 0.3 0.5 0.05
    1.0 0.3 0.6 0.05
    """
elif args.degradations=="hi":
    deglist = """
    0.5 0.0 0.5 0.0
    1.0 0.3 0.4 0.1
    1.0 0.3 0.5 0.1
    1.0 0.3 0.6 0.1
    1.3 0.3 0.4 0.1
    1.3 0.3 0.5 0.1
    1.3 0.3 0.6 0.1
    """
elif args.degradations is not None:
    with open(args.degradations) as stream:
        deglist = stream.read()

degradations = []
for deg in deglist.split("\n"):
    deg = deg.strip()
    if deg=="": continue
    deg = [float(x) for x in deg.split()]
    degradations.append(deg)

if args.fonts is not None:
    fonts = []
    for pat in args.fonts.split(':'):
        if pat=="": continue
        fonts += sorted(glob.glob(pat))
elif args.fontlist is not None:
    with open(args.fontlist) as fh:
        lines = (line.strip() for line in fh)
        fonts = [line for line in lines if line]
else:
    print("use -f or -F arguments to specify fonts")
    sys.exit(1)
assert len(fonts)>0,"no fonts?"
print("fonts", fonts)

if args.texts is not None:
    texts = []
    for pat in args.texts.split(':'):
        print(pat)
        if pat=="": continue
        texts += sorted(glob.glob(pat))
elif args.textlist is not None:
    texts = re.split(r'\s*\n\s*',open(args.textlist).read())
else:
    print("use -t or -T arguments to specify texts")
    sys.exit(1)
assert len(texts)>0,"no texts?"

lines = []
for text in texts:
    print("# reading", text)
    with codecs.open(text,'r','utf-8') as stream:
        for line in stream.readlines():
            line = line.strip()
            line = re.sub(args.cleanup,'',line)
            if len(line)<1: continue
            lines.append(line)
print("got", len(lines), "lines")
assert len(lines)>0
lines = list(set(lines))
print("got", len(lines), "unique lines")


def rgeometry(image,eps=0.03,delta=0.3):
    # small random affine transform around the image center
    m = np.array([[1+eps*np.random.randn(),0.0],[eps*np.random.randn(),1.0+eps*np.random.randn()]])
    w,h = image.shape
    c = np.array([w/2.0,h/2])
    d = c-np.dot(m,c)+np.array([np.random.randn()*delta,np.random.randn()*delta])
    return interpolation.affine_transform(image,m,offset=d,order=1,mode='constant',cval=image[0,0])


def rdistort(image,distort=3.0,dsigma=10.0,cval=0):
    # smooth random displacement field applied to every pixel
    h,w = image.shape
    hs = np.random.randn(h,w)
    ws = np.random.randn(h,w)
    hs = filters.gaussian_filter(hs,dsigma)
    ws = filters.gaussian_filter(ws,dsigma)
    hs *= distort/np.amax(hs)
    ws *= distort/np.amax(ws)
    def f(p):
        return (p[0]+hs[p[0],p[1]],p[1]+ws[p[0],p[1]])
    return interpolation.geometric_transform(image,f,output_shape=(h,w),
                                             order=1,mode='constant',cval=cval)


if args.debug_show:
    plt.ion()
    plt.gray()

base = args.base
print("base", base)
if os.path.exists(base)==False:
    os.mkdir(base)


def crop(image,pad=1):
    [[r,c]] = measurements.find_objects(np.array(image==0,'i'))
    r0 = r.start
    r1 = r.stop
    c0 = c.start
    c1 = c.stop
    image = image[r0-pad:r1+pad,c0-pad:c1+pad]
    return image


last_font = None
last_size = None
last_fontfile = None


def genline(text,fontfile=None,size=36,sigma=0.5,threshold=0.5):
    global image,draw,last_font,last_fontfile,last_size
    if last_fontfile!=fontfile or last_size!=size:
        last_font = ImageFont.truetype(fontfile,size)
        last_fontfile = fontfile
        # remember the size too, so the font is only reloaded when it changes
        last_size = size
    font = last_font
    image = Image.new("L",(6000,200))
    draw = ImageDraw.Draw(image)
    draw.rectangle((0,0,6000,6000),fill="white")
    # print("\t", size, font)
    draw.text((250,20),text,fill="black",font=font)
    a = np.asarray(image,'f')
    a = a*1.0/np.amax(a)
    if sigma>0.0:
        a = filters.gaussian_filter(a,sigma)
    a += np.clip(np.random.randn(*a.shape)*0.2,-0.25,0.25)
    a = rgeometry(a)
    a = np.array(a>threshold,'f')
    a = crop(a,pad=3)
    # FIXME add grid warping here
    # clf(); ion(); gray(); imshow(a); ginput(1,0.1)
    del draw
    del image
    return a


lines_per_size = args.maxlines//len(sizes)
for pageno,font in enumerate(fonts):
    if args.numdir:
        pagedir = "%s/%04d"%(base,pageno+1)
    else:
        fbase = re.sub(r'^[./]*','',font)
        fbase = re.sub(r'[.][^/]*$','',fbase)
        fbase = re.sub(r'[/]','_',fbase)
        pagedir = "%s/%s"%(base,fbase)
    if os.path.exists(pagedir)==False:
        os.mkdir(pagedir)
    print("===", pagedir, font)
    lineno = 0
    while lineno<args.maxlines:
        (sigma,ssigma,threshold,sthreshold) = pyrandom.choice(degradations)
        sigma += (2*np.random.rand()-1)*ssigma
        threshold += (2*np.random.rand()-1)*sthreshold
        line = pyrandom.choice(lines)
        size = pyrandom.choice(sizes)
        with open(pagedir+".info","w") as stream:
            stream.write("%s\n"%font)
        try:
            image = genline(text=line,fontfile=font,
                            size=size,sigma=sigma,threshold=threshold)
        except:
            traceback.print_exc()
            continue
        if np.amin(image.shape)<10: continue
        if np.amax(image)<0.5: continue
        if args.distort>0:
            image = rdistort(image,args.distort,args.dsigma,cval=np.amax(image))
        if args.display:
            plt.gray()
            plt.clf()
            plt.imshow(image)
            plt.ginput(1,0.1)
        fname = pagedir+"/01%04d"%lineno
        imsave(fname+".bin.png",image)
        gt = normalize_text(line)
        with codecs.open(fname+".gt.txt","w",'utf-8') as stream:
            stream.write(gt+"\n")
        print("%5.2f %5.2f %3d\t%s" % (sigma, threshold, size, line))
        lineno += 1

dlinputs:

A data I/O module. Its main advantages:

- Pure Python

- Works with any deep learning framework

- Supports very large datasets

- Supports streaming data

- Supports map-reduce and distributed data augmentation

- Supports tar, tfrecords, and other formats
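To illustrate what streaming training data from a tar file can look like, here is a sketch using only the standard library. It does not use the dlinputs API. It assumes the convention that consecutive archive members sharing a basename (for example `0001.png` and `0001.gt.txt`) belong to one sample; `iter_samples` and `make_shard` are hypothetical helpers.

```python
import io
import os
import tarfile


def iter_samples(fileobj):
    """Yield one dict per sample from a tar stream, grouping files by basename."""
    current_key, sample = None, {}
    # "r|*" reads the archive strictly sequentially, so it also works on pipes
    with tarfile.open(fileobj=fileobj, mode="r|*") as archive:
        for member in archive:
            if not member.isfile():
                continue
            stem, ext = os.path.basename(member.name).split(".", 1)
            key = os.path.join(os.path.dirname(member.name), stem)
            data = archive.extractfile(member).read()
            if current_key is not None and key != current_key:
                yield sample
                sample = {}
            current_key = key
            sample["__key__"] = key
            sample[ext] = data
    if sample:
        yield sample


def make_shard(files):
    """Build a small in-memory tar archive for the demonstration."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as archive:
        for name, data in files:
            info = tarfile.TarInfo(name)
            info.size = len(data)
            archive.addfile(info, io.BytesIO(data))
    buf.seek(0)
    return buf


shard = make_shard([
    ("0001.png", b"img1"), ("0001.gt.txt", b"hello"),
    ("0002.png", b"img2"), ("0002.gt.txt", b"world"),
])
samples = list(iter_samples(shard))
print([s["__key__"] for s in samples])
```

Because the archive is read front to back without seeking, the same loop works for files too large to fit in memory, which is the point of the "very large datasets" and "streaming" items in the list above.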

ocropus-rtrain, ocropus-ltrain, dltrainer:

This is the training module. Training runs on the CPU. The model in ocropy is a bidirectional LSTM trained with CTC loss, and training is fast.

dltrainer is the training module for ocropus3.

ocropus-rpred, ocropus-lpred, ocroline:

This module runs the trained model on test data.

In ocropus3 the recognition module is a convolutional network followed by an LSTM. The loss is still CTC loss.
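A network trained with CTC loss emits one class score vector per horizontal position, so the output has to be decoded into text. The simplest decoder is greedy: take the best class per frame, collapse repeats, and drop the blank. The sketch below assumes class 0 is the blank and uses a toy three-symbol `charset`; the real model above has 97 output classes.

```python
import numpy as np


def ctc_greedy_decode(probs, charset, blank=0):
    """Decode a (T, C) array of per-frame class scores into a string."""
    best = np.argmax(probs, axis=1)
    chars = []
    previous = blank
    for label in best:
        # emit a character only when it is not blank and not a repeat
        if label != blank and label != previous:
            chars.append(charset[label])
        previous = label
    return "".join(chars)


charset = ["", "a", "b"]                  # index 0 is the CTC blank
frames = [1, 1, 0, 1, 2, 2, 0]            # best class per frame
probs = np.eye(3)[frames]                 # one-hot scores, shape (7, 3)
print(ctc_greedy_decode(probs, charset))  # aab
```

The blank between the two runs of `a` is what lets CTC output a doubled letter; without it the repeats would collapse into a single character.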

Network structure :

Sequential(
  (0): Reorder BHWD->BDHW
  (1): CheckSizes [(0, 900), (1, 1), (48, 48), (0, 9000)]
  (2): Conv2d(1, 100, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (3): BatchNorm2d(100, eps=1e-05, momentum=0.1, affine=True)
  (4): ReLU()
  (5): MaxPool2d(kernel_size=(2, 1), stride=(2, 1), dilation=(1, 1), ceil_mode=False)
  (6): Conv2d(100, 200, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (7): BatchNorm2d(200, eps=1e-05, momentum=0.1, affine=True)
  (8): ReLU()
  (9): Reshape((0, [1, 2], 3))
  (10): CheckSizes [(0, 900), (0, 5000), (0, 9000)]
  (11): LSTM1:LSTM(4800, 200, bidirectional=1)
  (12): Conv1d(400, 97, kernel_size=(1,), stride=(1,))
  (13): Reorder BDW->BWD
  (14): CheckSizes [(0, 900), (0, 9000), (97, 97)]
)

ocropus-hocr, ocropus-gtedit, ocropus-visualize-results:

These modules display the recognition results as HTML.

ocropus-econf, ocropus-errs:

These modules compute the error rate, miss rate, and accuracy.
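A common way to measure OCR error is the character error rate: the edit distance between the recognized text and the ground truth, divided by the length of the ground truth. The sketch below is a generic implementation of that idea, not the code of ocropus-errs; both function names are illustrative.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings, one row at a time."""
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        current = [i]
        for j, cb in enumerate(b, 1):
            current.append(min(
                previous[j] + 1,               # deletion
                current[j - 1] + 1,            # insertion
                previous[j - 1] + (ca != cb),  # substitution or match
            ))
        previous = current
    return previous[-1]


def character_error_rate(truth, predicted):
    """Edit distance normalized by the ground truth length."""
    return edit_distance(truth, predicted) / float(max(len(truth), 1))


print(edit_distance("kitten", "sitting"))        # 3
print(character_error_rate("hello", "hallo"))    # 0.2
```

Accuracy is then simply one minus this rate, and counting only the deletions gives a miss rate.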

Summary:

- ocropy implements the forward and backward passes of the network in Python, with no dependency on a third-party neural network framework. It supports training your own models and requires Python 2.

- ocropy2 and ocropus3 depend on PyTorch.

- ocropus3 separates the modules from each other, so there is less coupling.

Publisher: Full stack programmer webmaster. When reprinting, please credit the source: https://javaforall.cn/126699.html Link to the original text: https://javaforall.cn

边栏推荐

- PCL: ajustement multiligne (RANSAC)

- Know two things: how does redis realize inventory deduction and prevent oversold?

- 电子元件-电阻

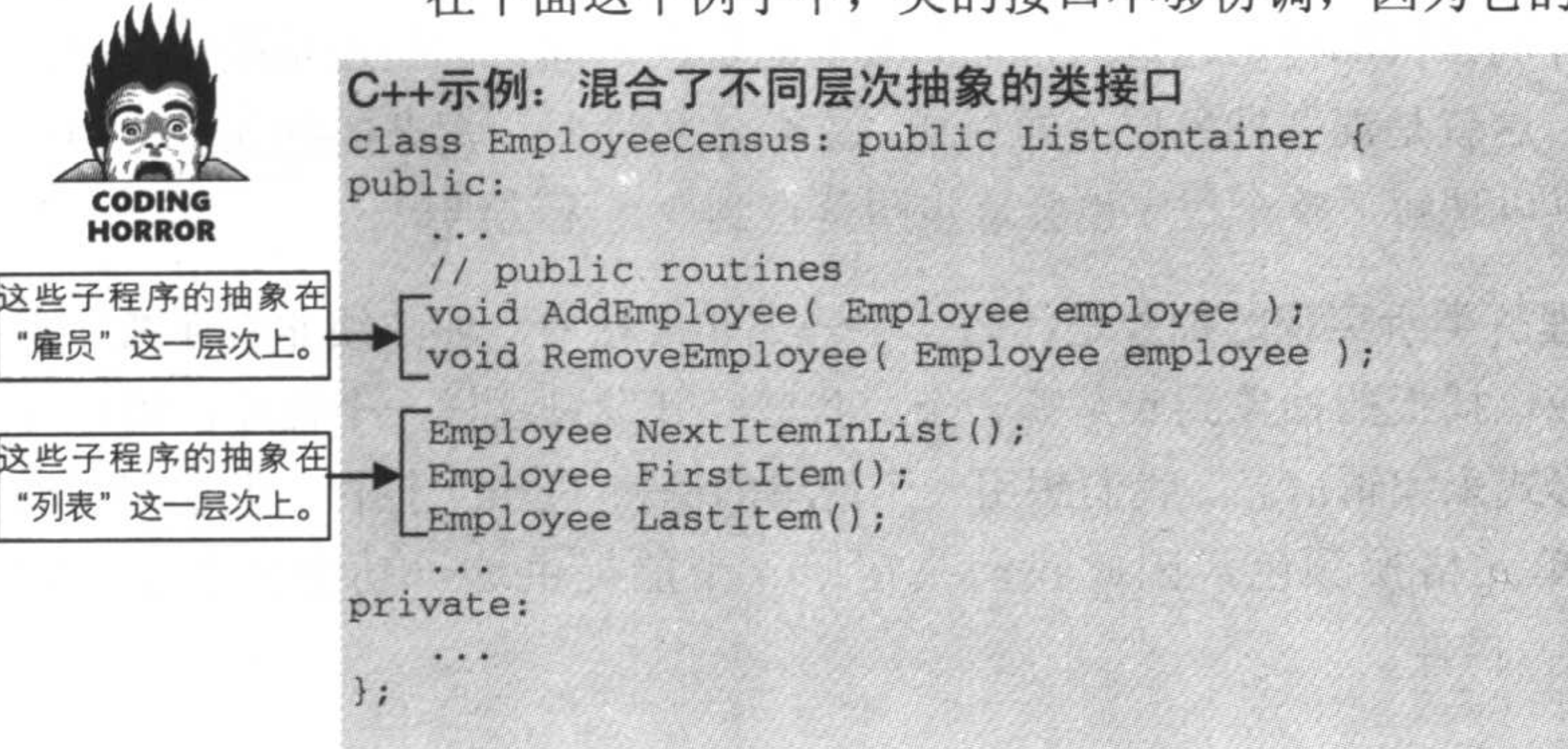

- 到底适不适合学习3D建模?这5点少1个都不行

- Redis [2022 latest interview question]

- The original path is not original [if there is infringement, please contact the original blogger to delete]

- Clean code and efficient system method

- [heavyweight] focusing on the terminal business of securities companies, Borui data released a new generation of observable platform for the core business experience of securities companies' terminals

- OSI模型第一层:物理层,基石般的存在!

- How to become a modeler? Which is more popular, industrial modeling or game modeling?

猜你喜欢

ResponseBodyAdvice接口使用导致的报错及解决

Spark 安装与启动

![Log framework [detailed learning]](/img/2f/2aba5d48e8a544eae0df763d458e84.png)

Log framework [detailed learning]

![[sharing game modeling model making skills] how ZBrush adjusts the brush size](/img/12/4c9be15266bd01c17d6aa761e6115f.png)

[sharing game modeling model making skills] how ZBrush adjusts the brush size

入行3D建模有前景吗?就业高薪有保障还是副业接单赚钱多

类的基础

1259. Disjoint handshake dynamic programming

Crack WiFi password with Kail

学次世代建模是场景好还是角色好?选对职业薪资多一半

Does anyone get a job by self-study modeling? Don't let these thoughts hurt you

随机推荐

MySql【从了解到掌握 一篇就够】

【游戏建模模型制作技巧分享】ZBrush如何调整笔刷大小

How to replace the double quotation marks of Times New Roman in word with the double quotation marks in Tahoma

【2020】【论文笔记】相变材料与超表面——

How does the NiO mechanism of jetty server cause out of heap memory overflow

80 + guests took the stage, users from more than 10 countries attended the meeting, and 70000 + viewers watched the end of "Gwei 2022 Singapore"

Common problems of sklearn classifier

怎么将word中的times new roman的双引号替换成宋体双引号

【2020】【论文笔记】基于Rydberg原子的——

Foundation of class

PCL: multi line fitting (RANSAC)

Know two things: how does redis realize inventory deduction and prevent oversold?

【2020】【论文笔记】太赫兹新型探测——太赫兹特性介绍、各种太赫兹探测器

Paddlenlp之UIE分类模型【以情感倾向分析新闻分类为例】含智能标注方案)

【游戏建模模型制作全流程】ZBrush武器模型制作:弩

moxa串口服务器型号,moxa串口服务器产品配置说明

Gradle [graphic installation and use demonstration]

Is learning next generation modeling a good scene or a good role? Choose the right profession and pay more than half

Can self-study 3D modeling succeed? Can self-study lead to employment?

【2013】【论文笔记】太赫兹波段纳米颗粒表面增强拉曼——