Multiplication in torch: *, torch.mul(), torch.mv(), torch.mm(), torch.dot(), @ and torch.matmul()

2022-07-27 05:28:00 【Charleslc's blog】

*: Element-wise multiplication

In PyTorch, the * operator multiplies tensors element by element and supports broadcasting.

Example :

vec1 = torch.arange(4)

vec2 = torch.tensor([4,3,2,1])

mat1 = torch.arange(12).reshape(4,3)

mat2 = torch.arange(12).reshape(3,4)

print(vec1 * vec2)

print(mat2 * vec1)

print(mat1 * mat1)

Output:

tensor([0, 3, 4, 3])

tensor([[ 0, 1, 4, 9],

[ 0, 5, 12, 21],

[ 0, 9, 20, 33]])

tensor([[ 0, 1, 4],

[ 9, 16, 25],

[ 36, 49, 64],

[ 81, 100, 121]])
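To make the broadcasting rule concrete, here is a minimal sketch. It assumes only that shapes are compared from the right and must either match or be 1. The variable names `prod` and `failed` are made up for this illustration.

```python
import torch

vec = torch.arange(4)                  # shape (4,)
mat = torch.arange(12).reshape(3, 4)   # shape (3, 4)

# Shapes are aligned from the right: (3, 4) with (4,) is compatible,
# so vec is reused for each of the three rows.
prod = mat * vec
print(prod.shape)                      # torch.Size([3, 4])

# (4, 3) with (4,) is not compatible: the trailing sizes 3 and 4 differ.
try:
    torch.arange(12).reshape(4, 3) * vec
    failed = False
except RuntimeError:
    failed = True
print(failed)                          # True
```

This is why the example above multiplies mat2 (3 x 4) by vec1 rather than mat1 (4 x 3) by vec1.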

torch.mul(): Scalar and element-wise multiplication

Official explanation:

torch.mul(input, other) multiplies the corresponding elements of input and other. other can be a number or a tensor.

torch.mul() supports broadcasting.

Example 1:

```python
In [1]: vec = torch.randn(3)

In [2]: vec
Out[2]: tensor([0.3550, 0.0975, 1.3870])

In [3]: torch.mul(vec, 5)
Out[3]: tensor([1.7752, 0.4874, 6.9348])
```

Example 2:

```python
In [1]: vec = torch.randn(3)

In [2]: vec
Out[2]: tensor([0.3550, 0.0975, 1.3870])

In [3]: mat = torch.randn(4).view(-1, 1)

In [4]: mat
Out[4]: tensor([[-1.5181],
        [ 0.4905],
        [-0.3388],
        [ 0.5626]])

In [5]: torch.mul(vec, mat)
Out[5]: tensor([[-0.5390, -0.1480, -2.1055],
        [ 0.1741,  0.0478,  0.6803],
        [-0.1203, -0.0330, -0.4699],
        [ 0.1998,  0.0548,  0.7803]])
```
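Example 2 multiplies a tensor of shape (3,) by one of shape (4, 1) and gets a (4, 3) result. The sketch below uses fixed values instead of random ones so the shapes are easy to follow, and checks that the result matches expanding both operands first. `torch.broadcast_tensors` is a real torch function; the tensor names are illustrative.

```python
import torch

vec = torch.tensor([1.0, 2.0, 3.0])                       # shape (3,)
col = torch.tensor([[1.0], [10.0], [100.0], [1000.0]])    # shape (4, 1)

out = torch.mul(vec, col)
print(out.shape)                         # torch.Size([4, 3])

# Broadcasting behaves as if both operands were expanded to (4, 3) first.
vec_b, col_b = torch.broadcast_tensors(vec, col)
same = torch.equal(out, vec_b * col_b)
print(same)                              # True
```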

torch.mv(): Matrix-vector multiplication

The official document says: Performs a matrix-vector product of the matrix input and the vector vec.

torch.mv(input, vec, *, out=None) → Tensor supports only matrix-vector multiplication. If input is an $n \times m$ matrix and vec is a vector of length $m$, the output is a 1-D tensor of length $n$. torch.mv() does not support broadcasting.

Example :

In[1]: vec = torch.arange(4)

In[2]: mat = torch.arange(12).reshape(3,4)

In[3]: torch.mv(mat, vec)

Out[3]: tensor([14, 38, 62])
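As a rough check of what torch.mv() computes, the sketch below rebuilds the result from row-wise dot products and shows that a batched 3-D input is rejected rather than broadcast. The names `by_hand`, `matches` and `rejected` are illustrative only.

```python
import torch

mat = torch.arange(12).reshape(3, 4)
vec = torch.arange(4)

# Each output entry is the dot product of one row of mat with vec.
by_hand = torch.stack([torch.dot(row, vec) for row in mat])
matches = torch.equal(torch.mv(mat, vec), by_hand)
print(matches)                      # True

# The result is 1-D with one entry per row.
print(torch.mv(mat, vec).shape)     # torch.Size([3])

# A 3-D "batch" of matrices raises an error instead of broadcasting.
batch = torch.arange(24).reshape(2, 3, 4)
try:
    torch.mv(batch, vec)
    rejected = False
except RuntimeError:
    rejected = True
print(rejected)                     # True
```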

torch.mm(): Matrix multiplication

The official document says: Performs a matrix multiplication of the matrices input and mat2. The signature is torch.mm(input, mat2, *, out=None) → Tensor.

It multiplies the matrix input by the matrix mat2. If input is an n×m tensor and mat2 is an m×p tensor, the output is an n×p tensor. torch.mm() does not support broadcasting.

This is the ordinary matrix multiplication of linear algebra.

Example :

In[1]: mat1 = torch.arange(12).reshape(3,4)

In[2]: mat2 = torch.arange(12).reshape(4,3)

In[3]: torch.mm(mat1, mat2)

Out[3]: tensor([[ 42, 48, 54],

[114, 136, 158],

[186, 224, 262]])
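The n×m by m×p rule means operand order and inner dimensions both matter. A small sketch of that, with illustrative names `a`, `b` and `mismatch`:

```python
import torch

a = torch.arange(12).reshape(3, 4)
b = torch.arange(12).reshape(4, 3)

print(torch.mm(a, b).shape)     # torch.Size([3, 3])
print(torch.mm(b, a).shape)     # torch.Size([4, 4])

# Inner dimensions must agree: (3, 4) times (3, 4) is invalid.
try:
    torch.mm(a, a)
    mismatch = False
except RuntimeError:
    mismatch = True
print(mismatch)                 # True

# Transposing the second operand makes the shapes compatible again.
print(torch.mm(a, a.T).shape)   # torch.Size([3, 3])
```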

torch.dot(): Dot product

The official document says: Computes the dot product of two 1D tensors.

Only 1-D tensors are supported, which differs from numpy's dot() (numpy.dot() also accepts higher-dimensional arrays). The signature is torch.dot(input, other, *, out=None) → Tensor.

Example :

In[1]: torch.dot(torch.tensor([2, 3]), torch.tensor([2, 1]))

Out[1]: tensor(7)
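To illustrate the 1-D restriction, the sketch below passes 2-D tensors to torch.dot() and then flattens them as one possible workaround. The flattening step is an assumption about what the caller wants, not something torch does automatically.

```python
import torch

a = torch.tensor([[1, 2], [3, 4]])
b = torch.tensor([[5, 6], [7, 8]])

# 2-D inputs are rejected.
try:
    torch.dot(a, b)
    rejected = False
except RuntimeError:
    rejected = True
print(rejected)                     # True

# After flattening, dot is the sum of the element-wise products.
flat = torch.dot(a.flatten(), b.flatten())
print(flat)                         # tensor(70)
print(flat == (a * b).sum())        # tensor(True)
```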

The @ operator

The @ operator in torch covers all of the functions above. For mat1 @ mat2:

- If mat1 and mat2 are both 1-D tensors, the operation is equivalent to torch.dot()

- If mat1 is a 2-D tensor and mat2 is a 1-D tensor, the operation is equivalent to torch.mv()

- If mat1 and mat2 are both 2-D tensors, the operation is equivalent to torch.mm()

vec1 = torch.arange(4)

vec2 = torch.tensor([4,3,2,1])

mat1 = torch.arange(12).reshape(4,3)

mat2 = torch.arange(12).reshape(3,4)

print(vec1 @ vec2)  # two 1-D tensors

print(mat2 @ vec1)  # a 2-D tensor and a 1-D tensor

print(mat1 @ mat2)  # two 2-D tensors

Output:

tensor(10)

tensor([14, 38, 62])

tensor([[ 20, 23, 26, 29],

[ 56, 68, 80, 92],

[ 92, 113, 134, 155],

[128, 158, 188, 218]])
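The three correspondences can be checked directly. The sketch below compares @ against torch.dot(), torch.mv() and torch.mm() on the tensors from the example, and also shows that @ accepts batched 3-D operands. The variables `same_dot`, `same_mv`, `same_mm`, `a` and `b` are illustrative.

```python
import torch

vec1 = torch.arange(4)
vec2 = torch.tensor([4, 3, 2, 1])
mat1 = torch.arange(12).reshape(4, 3)
mat2 = torch.arange(12).reshape(3, 4)

same_dot = torch.equal(vec1 @ vec2, torch.dot(vec1, vec2))
same_mv = torch.equal(mat2 @ vec1, torch.mv(mat2, vec1))
same_mm = torch.equal(mat1 @ mat2, torch.mm(mat1, mat2))
print(same_dot, same_mv, same_mm)   # True True True

# @ also accepts batched (3-D) operands, like torch.matmul().
a = torch.ones(2, 3, 4)
b = torch.ones(2, 4, 5)
print((a @ b).shape)                # torch.Size([2, 3, 5])
```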

torch.matmul()

torch.matmul() is the function form of the @ operator. It is not limited to 1-D and 2-D inputs: it can also multiply higher-dimensional tensors, and it supports broadcasting. The signature is torch.matmul(input, other, *, out=None) → Tensor.

The behavior of torch.matmul() depends on the dimensionality of input and other:

- If both tensors are 1-D, the dot product is returned, as with torch.dot().

- If both tensors are 2-D, the matrix product is returned, as with torch.mm().

- If the first tensor is 2-D and the second is 1-D, the matrix-vector product is returned, as with torch.mv().

- If the first tensor is 1-D and the second is 2-D, torch.matmul() prepends a dimension of size 1 to the first tensor, performs the matrix multiplication, and then removes the added dimension.

- If both arguments are at least 1-D and at least one of them is N-D (N > 2), a batched matrix multiplication is performed. A 1-D first argument has a 1 prepended to its shape, which is removed after the batched multiplication. A 1-D second argument has a 1 appended to its shape, which is also removed afterwards. The batch dimensions are broadcast.
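The five cases above can be summarized as a shape calculation. The following is a pure-Python sketch, not torch's implementation: `broadcast_batch` and `matmul_shape` are hypothetical helpers written for this post, and they only predict the output shape that the rules imply.

```python
from itertools import zip_longest


def broadcast_batch(a, b):
    """Broadcast two tuples of batch dimensions, aligned from the right."""
    out = []
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x != y and 1 not in (x, y):
            raise ValueError(f"cannot broadcast {a} with {b}")
        out.append(y if x == 1 else x)
    return tuple(reversed(out))


def matmul_shape(a, b):
    """Hypothetical helper: output shape implied by the matmul rules."""
    a, b = tuple(a), tuple(b)
    if not a or not b:
        raise ValueError("both operands need at least one dimension")
    a_was_1d, b_was_1d = len(a) == 1, len(b) == 1
    if a_was_1d:
        a = (1,) + a              # prepend: (m,) -> (1, m)
    if b_was_1d:
        b = b + (1,)              # append: (m,) -> (m, 1)
    if a[-1] != b[-2]:
        raise ValueError(f"inner dimensions differ: {a[-1]} vs {b[-2]}")
    shape = broadcast_batch(a[:-2], b[:-2]) + (a[-2], b[-1])
    if b_was_1d:
        shape = shape[:-1]        # drop the appended column dimension
    if a_was_1d:
        # drop the prepended row dimension
        shape = shape[:-1] if b_was_1d else shape[:-2] + shape[-1:]
    return shape


print(matmul_shape((4,), (4,)))             # ()
print(matmul_shape((3, 4), (4,)))           # (3,)
print(matmul_shape((2,), (10, 2, 3)))       # (10, 3)
print(matmul_shape((5, 1, 5, 3), (2, 3, 4)))  # (5, 2, 5, 4)
```

An empty tuple corresponds to the 0-dimensional tensor returned for two 1-D inputs.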

Example 1 (cases 1 to 4):

vec1 = torch.tensor([1,2,3,4])

vec2 = torch.tensor([4,3,2,1])

mat1 = torch.arange(12).reshape(3,4)

mat2 = torch.arange(12).reshape(4,3)

print(torch.matmul(vec1, vec2)) # both tensors are 1-D, same as torch.dot()

print(torch.matmul(mat1, mat2)) # both tensors are 2-D, same as torch.mm()

print(torch.matmul(mat1, vec1)) # the first tensor is 2-D and the second is 1-D, same as torch.mv()

print(torch.matmul(vec1, mat2)) # the first tensor is 1-D and the second is 2-D

Output:

tensor(20)

tensor([[ 42, 48, 54],

[114, 136, 158],

[186, 224, 262]])

tensor([ 20, 60, 100])

tensor([60, 70, 80])

Case 5 covers higher-dimensional inputs. The rule to remember: the matrix multiplication always acts on the last two dimensions, and the leading (batch) dimensions are carried over to the result.

Example 2: the first tensor is 1-D and the second is N-D.

tensor1 = torch.randn(2)

tensor2 = torch.randn(10,2,3)

print(torch.matmul(tensor1, tensor2).size())

Output:

torch.Size([10, 3])

The leading dimension 10 of tensor2 is treated as the batch dimension, which leaves 2-D matrices of shape 2 × 3. tensor1 has a 1 prepended to its shape and becomes 1 × 2. Multiplying 1 × 2 by 2 × 3 gives 1 × 3. The dimension added to tensor1 is then removed, and with the batch dimension the final shape is 10 × 3.

Example 3: the first tensor is N-D and the second is 1-D.

tensor1 = torch.randn(10,3,4)

tensor2 = torch.randn(4)

print(torch.matmul(tensor1, tensor2).shape)

Output:

torch.Size([10, 3])

tensor2 has a 1 appended to its shape and becomes 4 × 1. The leading dimension 10 of tensor1 is treated as the batch dimension, which leaves 2-D matrices of shape 3 × 4. Multiplying 3 × 4 by 4 × 1 gives 3 × 1. The dimension added to tensor2 is then removed, and with the batch dimension the final shape is 10 × 3.

Example 4: the first tensor is 3-D and the second is 2-D.

tensor1 = torch.randn(2,5,3)

tensor2 = torch.randn(3,4)

print(torch.matmul(tensor1, tensor2).shape)

Output:

torch.Size([2, 5, 4])

The leading dimension of tensor1 (size 2) is treated as the batch dimension. Each remaining 5 × 3 matrix is multiplied by the 3 × 4 matrix tensor2, giving 5 × 4, so the final shape is 2 × 5 × 4.

Example 5: the first tensor is 2-D and the second is 3-D.

tensor1 = torch.randn(5,3)

tensor2 = torch.randn(2,3,4)

print(torch.matmul(tensor1, tensor2).shape)

Output:

torch.Size([2, 5, 4])

The leading dimension of tensor2 (size 2) is treated as the batch dimension. The 5 × 3 matrix tensor1 is multiplied by each remaining 3 × 4 matrix, giving 5 × 4, so the final shape is 2 × 5 × 4.

Example 6: both tensors are N-D.

tensor1 = torch.randn(5,1,5,3)

tensor2 = torch.randn(2,3,4)

print(torch.matmul(tensor1, tensor2).shape)

Output:

torch.Size([5, 2, 5, 4])

The last two dimensions take part in the matrix multiplication: 5 × 3 times 3 × 4 gives 5 × 4. The remaining batch dimensions, (5, 1) for tensor1 and (2,) for tensor2, are broadcast to (5, 2). The final shape is therefore 5 × 2 × 5 × 4.
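One way to convince yourself of the rule in Example 6 is to redo the batched product with explicit loops over the broadcast batch dimensions. This is a sketch for checking, not how torch.matmul() is implemented. The names `ref` and `close` are illustrative, and the seed is fixed only to make the run repeatable.

```python
import torch

torch.manual_seed(0)
a = torch.randn(5, 1, 5, 3)
b = torch.randn(2, 3, 4)

out = torch.matmul(a, b)
print(out.shape)                    # torch.Size([5, 2, 5, 4])

# Same computation, one 2-D product per (i, j) batch index.
# a has size 1 in its second batch dimension, so index 0 is reused for every j.
ref = torch.empty(5, 2, 5, 4)
for i in range(5):
    for j in range(2):
        ref[i, j] = torch.mm(a[i, 0], b[j])

close = torch.allclose(out, ref, atol=1e-5)
print(close)                        # True
```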

边栏推荐

- Li Hongyi machine learning team learning punch in activity day03 --- error and gradient decline

- 35. Scroll

- 如何查看导师的评价

- 数据库连接池&&Druid使用

- 6 zigzag conversion of leetcode

- How to quickly and effectively solve the problem of database connection failure

- Flask对数据库的查询以及关联

- The interface can automatically generate E and other asynchronous access or restart,

- Message reliability processing

- JVM Part 1: memory and garbage collection part 10 - runtime data area - direct memory

猜你喜欢

随机推荐

JVM上篇:内存与垃圾回收篇六--运行时数据区-本地方法&本地方法栈

B1028 census

redis事务

B1026 program running time

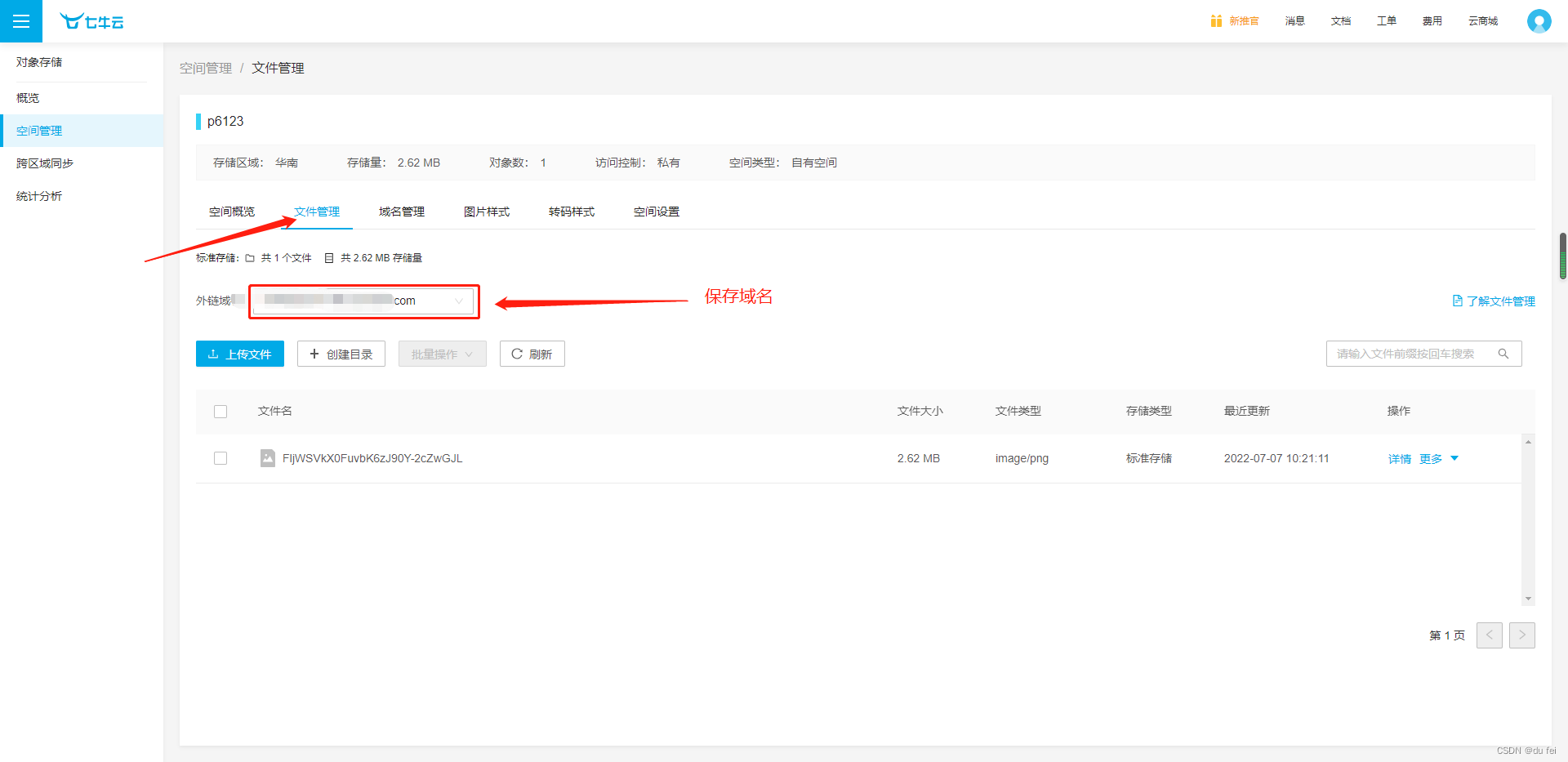

上传七牛云的方法

如何查看导师的评价

JVM Part 1: memory and garbage collection part 10 - runtime data area - direct memory

JVM Part 1: memory and garbage collection part 3 - runtime data area - overview and threads

Enumeration class implements singleton mode

Alphabetic order problem

Machine learning overview

Find the number of combinations (the strongest optimization)

Selenium element operation

Notes series k8s orchestration MySQL container - stateful container creation process

2021 OWASP top 4: unsafe design

Flask登录实现

B1029 旧键盘

cookie增删改查和异常

MQ FAQ

用pygame自己动手做一款游戏01