Metric statistics: real-time UV/PV statistics based on stream computing Oceanus (Flink)

2022-06-24 04:10:00 【Wuyuntao】

I recently worked out how to compute real-time UV and PV metrics with Flink and discussed the approach with colleagues in the company's micro vision department. After simplifying the scenario, I found that Flink SQL makes these metrics much more convenient to compute.

1 Solution description

1.1 Overview

This solution combines a self-built Kafka cluster in a local data center, Tencent Cloud stream computing Oceanus (Flink), and the cloud database Redis to provide real-time visual analysis of UV and PV metrics for blogs, shopping sites, and other websites. The metrics analyzed include the number of unique visitors to the website (UV), product page hits (PV), and the conversion rate (conversion rate = number of transactions / number of clicks).

Related concepts:

- UV (Unique Visitor): the number of unique visitors. Each client that visits your website is one visitor. If a user visits the same page 5 times, the page's UV increases by only 1, because UV counts de-duplicated users rather than visits.

- PV (Page View): the number of hits or page views. If a user visits the same page 5 times, the page's PV increases by 5.
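The difference between the two metrics can be illustrated with a minimal Python sketch. The function name `count_uv_pv` and the `(user_id, page_id)` event shape are assumptions made for this illustration; they are not part of the Oceanus job described later.

```python
from typing import Iterable, Tuple


def count_uv_pv(visits: Iterable[Tuple[int, int]]) -> Tuple[int, int]:
    """Return (uv, pv) for an iterable of (user_id, page_id) visit events."""
    unique_users = set()
    page_views = 0
    for user_id, _page_id in visits:
        unique_users.add(user_id)  # UV: each user is counted once
        page_views += 1            # PV: every visit is counted
    return len(unique_users), page_views


# User 2 opens page 101 five times; user 3 opens it once.
visits = [(2, 101)] * 5 + [(3, 101)]
uv, pv = count_uv_pv(visits)
print(f"UV={uv}, PV={pv}")  # prints "UV=2, PV=6"
```

Five visits by the same user raise PV by 5 but UV by only 1, which is the de-duplication behaviour the Redis set provides later in this solution.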

1.2 Scheme architecture and advantages

Based on the real-time metric statistics scenario above, the following architecture was designed:

List of products involved:

- Self-built Kafka cluster in a local data center (IDC)

- Private network (VPC)

- Dedicated line access / Cloud Connect Network / VPN connection / peering connection

- Stream computing Oceanus

- Cloud database Redis

2 Preparation

Purchase the required Tencent Cloud resources and connect the networks. Depending on the region where it is located, the self-built Kafka cluster must be interconnected with the cloud network through a VPN connection, a dedicated line, or a peering connection.

2.1 Create a private network (VPC)

A private network (VPC) is a logically isolated network space that you define on Tencent Cloud. When building the Oceanus cluster, Redis, and other services, it is recommended to select the same VPC so that they can reach each other over the network. Otherwise, the networks have to be connected through a peering connection, a NAT gateway, a VPN, or similar means. For the steps to create a private network, see the help document.

2.2 Create an Oceanus cluster

Stream computing Oceanus is a real-time analytics tool in the big data product ecosystem. It is an enterprise-grade real-time big data analysis platform built on Apache Flink, featuring one-stop development, seamless connection, sub-second latency, low cost, security, and stability. Its goal is to maximize the value of enterprise data and accelerate the real-time digital transformation of enterprises.

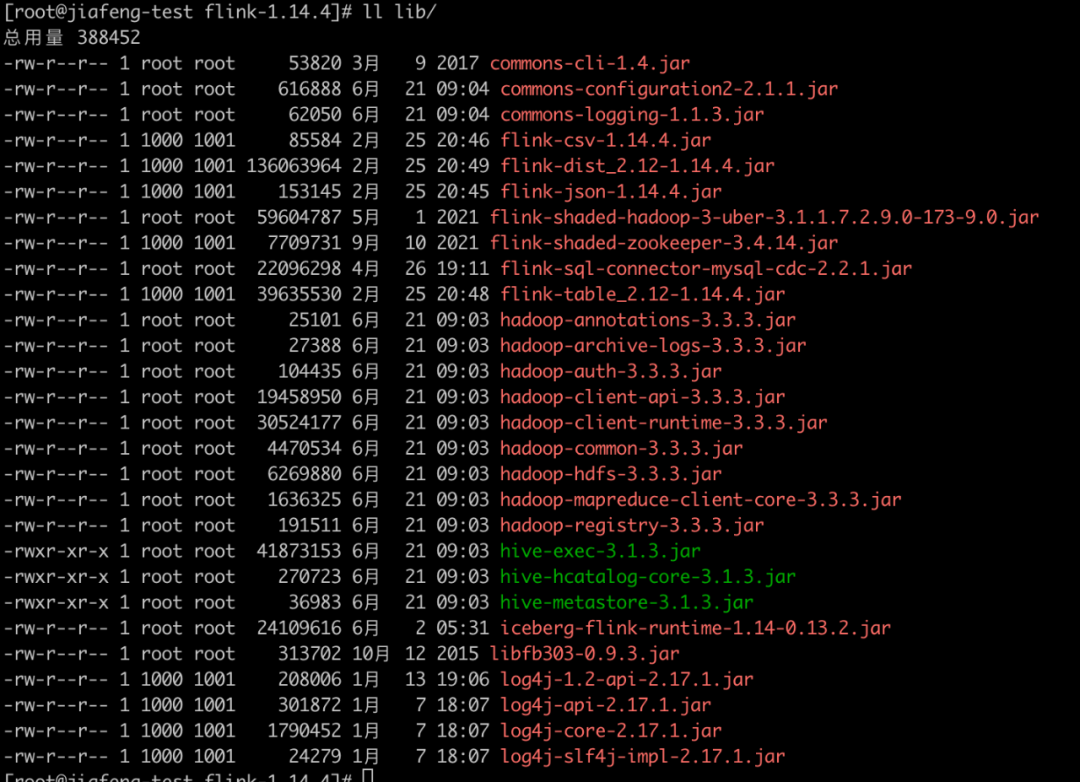

On the Oceanus console, go to [Cluster management] -> [New cluster] to create a cluster. Select the region, availability zone, VPC, logging, and storage, and set the initial password. For the VPC and subnet, use the network just created. Once created, the Flink cluster looks as follows:

2.3 Create a Redis cluster

On the [New instance] page of the Redis console, create the cluster. Select the same region as the other components and the same VPC in that region; the same subnet is also selected here.

2.4 Configure the self-built Kafka cluster

2.4.1 Modify the self-built Kafka cluster configuration

When connecting to a self-built Kafka cluster, the bootstrap-servers parameter often uses hostnames rather than IP addresses. However, the Oceanus cluster on Tencent Cloud that connects to the self-built Kafka cluster is a fully managed cluster, and its nodes cannot resolve the mapping between the self-built cluster's hostnames and IP addresses. There are two ways to handle this.

Method 1: Use the custom DNS feature of the Oceanus platform to map hostnames to IP addresses.

Method 2: Change the listener addresses of the self-built Kafka cluster from hostnames to IP addresses, as follows.

In the config/server.properties configuration file, set the advertised.listeners parameter to an IP address. Example:

    # 0.10.X and later versions
    advertised.listeners=PLAINTEXT://10.1.0.10:9092
    # Versions before 0.10.X (host and port are set separately, without a protocol prefix)
    advertised.host.name=10.1.0.10
    advertised.port=9092

Restart the Kafka cluster after the change.

Note: if you use a self-built ZooKeeper address on the cloud, the hostnames in the ZooKeeper configuration also need to be changed to IP addresses.

2.4.2 Simulate sending data to the topic

The topic used in this case is uvpv-demo.

1) Kafka client

Log in to a node of the self-built Kafka cluster, start the Kafka client, and simulate sending data.
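Before restarting the brokers, it can help to confirm that every advertised listener really uses an IP literal. The following is a minimal sketch of such a check using only the Python standard library; the helper names `listener_uses_ip` and `check_advertised_listeners` are hypothetical, and the sketch only inspects the `advertised.listeners` key of a properties file passed in as text.

```python
import ipaddress


def listener_uses_ip(listener: str) -> bool:
    """True if a listener such as 'PLAINTEXT://10.1.0.10:9092' uses an IP literal."""
    _, _, address = listener.partition("://")
    host, _, _port = address.rpartition(":")
    host = host.strip("[]")  # tolerate bracketed IPv6 literals
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def check_advertised_listeners(properties_text: str) -> dict:
    """Map each advertised listener found in the text to whether it uses an IP."""
    result = {}
    for line in properties_text.splitlines():
        line = line.strip()
        if line.startswith("#") or "=" not in line:
            continue  # skip comments and non key=value lines
        key, value = line.split("=", 1)
        if key.strip() == "advertised.listeners":
            for listener in value.split(","):
                listener = listener.strip()
                if listener:
                    result[listener] = listener_uses_ip(listener)
    return result


example = "advertised.listeners=PLAINTEXT://10.1.0.10:9092\n"
print(check_advertised_listeners(example))
```

Any listener reported as `False` still advertises a hostname that the managed Oceanus nodes would not be able to resolve.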

./bin/kafka-console-producer.sh --broker-list 10.1.0.10:9092 --topic uvpv-demo

>{"record_type":0, "user_id": 2, "client_ip": "100.0.0.2", "product_id": 101, "create_time": "2021-09-08 16:20:00"}

>{"record_type":0, "user_id": 3, "client_ip": "100.0.0.3", "product_id": 101, "create_time": "2021-09-08 16:20:00"}

>{"record_type":1, "user_id": 2, "client_ip": "100.0.0.1", "product_id": 101, "create_time": "2021-09-08 16:20:00"}

2) Send with a script

Script 1: Java code. Reference: https://cloud.tencent.com/document/product/597/54834

Script 2: Python script. The Python script from an earlier case study can be adapted as needed: Real-time large-screen analysis of live video scenes based on Tencent Cloud Oceanus
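As a starting point for such a script, here is a minimal sketch that only generates records in the JSON shape shown above and prints them one per line. It deliberately does not talk to Kafka, so it needs no third-party client; the function names, the value ranges (20 users, products 101 to 103, roughly 20% purchases), and the fixed random seed are all assumptions for illustration.

```python
import json
import random
from datetime import datetime, timedelta


def make_record(record_type, user_id, product_id, when):
    """Build one record with the same fields as the console examples."""
    return {
        "record_type": record_type,  # 0 = browse, 1 = purchase
        "user_id": user_id,
        "client_ip": f"100.0.0.{user_id}",
        "product_id": product_id,
        "create_time": when.strftime("%Y-%m-%d %H:%M:%S"),
    }


def generate_records(n, start, seed=0):
    """Generate n pseudo-random records, one second apart, starting at `start`."""
    rng = random.Random(seed)  # seeded so repeated runs produce the same data
    records = []
    for i in range(n):
        record_type = 1 if rng.random() < 0.2 else 0
        user_id = rng.randint(1, 20)
        product_id = rng.choice([101, 102, 103])
        records.append(
            make_record(record_type, user_id, product_id, start + timedelta(seconds=i))
        )
    return records


for record in generate_records(3, datetime(2021, 9, 8, 16, 20, 0)):
    print(json.dumps(record))
```

Because each record is printed as a single JSON line, the output can be piped into the console producer shown earlier, for example `python3 gen_records.py | ./bin/kafka-console-producer.sh --broker-list 10.1.0.10:9092 --topic uvpv-demo`, where `gen_records.py` is a hypothetical file name for this sketch.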

2.5 Connect the self-built IDC cluster to the Tencent Cloud network

The self-built Kafka cluster must be able to reach the Tencent Cloud network. The following options connect a self-built IDC to Tencent Cloud:

- Dedicated line access: for connecting a local data center (IDC) to the Tencent Cloud network.

- Cloud Connect Network: for connecting a local data center (IDC) to the Tencent Cloud network; it can also connect VPCs in different regions on the cloud.

- VPN connection: for connecting a local data center (IDC) to the Tencent Cloud network.

- Peering connection + NAT gateway: for connecting VPCs in different regions on the cloud.

This solution uses a VPN connection to enable communication between the local IDC and the cloud network. Reference link: Establish a connection from VPC to IDC (routing table)

The network architecture for this solution is as follows:

3 Implementation

Next, a case study shows how to use stream computing Oceanus to compute UV, PV, and conversion rate metrics for a website in real time.

3.1 Business objectives

Only the following 3 metrics are covered here:

- Number of unique visitors to the website (UV). After processing in Oceanus, the visitors are stored in a Redis set, which also de-duplicates records from the same visitor.

- Number of hits on the website's product pages (PV). After processing in Oceanus, the page hits are stored in a Redis list.

- Conversion rate (conversion rate = number of transactions / number of clicks). After processing in Oceanus, it is stored in Redis as a String.
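To make the three storage choices concrete, the sketch below mimics the semantics of the Redis commands `SADD`, `LPUSH`, and `SET` with a small in-memory class. `InMemoryRedis` and `apply_records` are hypothetical names for this illustration, not a real Redis client and not the Oceanus connector; the key names match the ones used by the sinks later in the article.

```python
from collections import defaultdict


class InMemoryRedis:
    """Tiny stand-in for the three Redis commands used in this solution."""

    def __init__(self):
        self.sets = defaultdict(set)
        self.lists = defaultdict(list)
        self.strings = {}

    def sadd(self, key, member):
        self.sets[key].add(member)        # a set ignores duplicate members

    def lpush(self, key, value):
        self.lists[key].insert(0, value)  # the newest value goes to the head

    def set(self, key, value):
        self.strings[key] = value         # overwrites the previous value


def apply_records(store, records):
    """Update UV, PV, and conversion rate from a batch of records."""
    clicks = purchases = 0
    for r in records:
        store.sadd("userids", str(r["user_id"]))
        if r["record_type"] == 0:
            clicks += 1
        elif r["record_type"] == 1:
            purchases += 1
    store.lpush("pagevisits", str(clicks))
    if clicks:
        store.set("conversion_rate", str(round(purchases / clicks, 4)))


store = InMemoryRedis()
apply_records(store, [
    {"record_type": 0, "user_id": 2},
    {"record_type": 0, "user_id": 3},
    {"record_type": 0, "user_id": 2},
    {"record_type": 1, "user_id": 2},
])
print(len(store.sets["userids"]), store.lists["pagevisits"], store.strings["conversion_rate"])
```

User 2 appears three times in the input but only once in the set, while the list and the string each hold the most recent aggregate value.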

3.2 Source data format

Browse records and purchase records are both written to the Kafka topic uvpv-demo.

| Field | Type | Meaning |
|---|---|---|
| record_type | int | Record type: 0 = browse record, 1 = purchase record |
| user_id | int | Customer ID |
| client_ip | varchar | Customer IP address |
| product_id | int | Product ID |
| create_time | timestamp | Creation time |

Kafka stores the data in JSON format. The data looks as follows:

    # Browse record
    {
      "record_type": 0,
      "user_id": 6,
      "client_ip": "100.0.0.6",
      "product_id": 101,
      "create_time": "2021-09-08 16:20:00"
    }

    # Purchase record
    {
      "record_type": 1,
      "user_id": 6,
      "client_ip": "100.0.0.6",
      "product_id": 101,
      "create_time": "2021-09-08 16:20:00"
    }

3.3 Write the Flink SQL job

This example implements the logic for computing the 3 metrics (UV, PV, and conversion rate) and writes the results to the sink.

1. Define the source

    CREATE TABLE `input_web_record` (
      `record_type` INT,
      `user_id` INT,
      `client_ip` VARCHAR,
      `product_id` INT,
      `create_time` TIMESTAMP(3),
      `times` AS create_time,
      -- Event-time watermark: tolerate records that arrive up to 10 minutes late
      WATERMARK FOR times AS times - INTERVAL '10' MINUTE
    ) WITH (
      'connector' = 'kafka',                 -- Options: 'kafka', 'kafka-0.11'; choose the matching built-in connector
      'topic' = 'uvpv-demo',
      'scan.startup.mode' = 'earliest-offset',
      'properties.bootstrap.servers' = '10.1.0.10:9092',
      'properties.group.id' = 'WebRecordGroup',  -- Required: a group ID must be specified
      -- Define the data format (JSON)
      'format' = 'json',
      'json.ignore-parse-errors' = 'true',   -- Ignore JSON parsing errors
      'json.fail-on-missing-field' = 'false' -- true: fail on a missing field; false: set the missing field to null
    );

2. Define the sinks

    -- UV sink
    CREATE TABLE `output_uv` (
      `userids` STRING,
      `user_id` STRING
    ) WITH (
      'connector' = 'redis',          -- Output to Redis
      'command' = 'sadd',             -- Store UV in a set (supported commands: set, lpush, sadd, hset, zadd)
      'nodes' = '192.28.28.217:6379', -- Redis address; in cluster mode, separate multiple nodes with ','
      -- 'additional-key' = '<key>',  -- Key for hset and zadd; required for those two commands
      'password' = 'yourpassword'     -- Optional: password
    );

    -- PV sink
    CREATE TABLE `output_pv` (
      `pagevisits` STRING,
      `product_id` STRING,
      `hour_count` BIGINT
    ) WITH (
      'connector' = 'redis',          -- Output to Redis
      'command' = 'lpush',            -- Store PV in a list (supported commands: set, lpush, sadd, hset, zadd)
      'nodes' = '192.28.28.217:6379', -- Redis address; in cluster mode, separate multiple nodes with ','
      -- 'additional-key' = '<key>',  -- Key for hset and zadd; required for those two commands
      'password' = 'yourpassword'     -- Optional: password
    );

    -- Conversion rate sink
    CREATE TABLE `output_conversion_rate` (
      `conversion_rate` STRING,
      `rate` STRING
    ) WITH (
      'connector' = 'redis',          -- Output to Redis
      'command' = 'set',              -- Store the conversion rate as a string (supported commands: set, lpush, sadd, hset, zadd)
      'nodes' = '192.28.28.217:6379', -- Redis address; in cluster mode, separate multiple nodes with ','
      -- 'additional-key' = '<key>',  -- Key for hset and zadd; required for those two commands
      'password' = 'yourpassword'     -- Optional: password
    );

3. Business logic

    -- UV metric: record visitors over all time (the Redis set de-duplicates user IDs)
    INSERT INTO output_uv
    SELECT
      'userids' AS `userids`,
      CAST(user_id AS STRING) AS user_id
    FROM input_web_record;

    -- PV metric: count page views in 10-minute windows that advance every 5 minutes
    INSERT INTO output_pv
    SELECT
      'pagevisits' AS pagevisits,
      CAST(product_id AS STRING) AS product_id,
      COUNT(1) AS hour_count
    FROM input_web_record
    WHERE record_type = 0
    GROUP BY
      HOP(times, INTERVAL '5' MINUTE, INTERVAL '10' MINUTE),
      product_id,
      user_id;

    -- Conversion rate: purchases in each 10-minute window divided by total page hits
    INSERT INTO output_conversion_rate
    SELECT
      'conversion_rate' AS conversion_rate,
      CAST((COUNT(1) * 1.0) / (SELECT COUNT(1) FROM input_web_record WHERE record_type = 0) AS STRING) AS rate
    FROM (SELECT * FROM input_web_record WHERE record_type = 1) AS a
    GROUP BY
      HOP(times, INTERVAL '5' MINUTE, INTERVAL '10' MINUTE),
      product_id;
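The `HOP(times, INTERVAL '5' MINUTE, INTERVAL '10' MINUTE)` call groups records into hopping windows that are 10 minutes long and start every 5 minutes, so each record falls into two overlapping windows. The Python sketch below illustrates that assignment under the assumption that windows are aligned to the Unix epoch with no offset; `hop_windows` and `pv_per_window` are hypothetical helper names, and the sketch ignores watermarks and late data.

```python
from collections import Counter
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def hop_windows(ts, slide, size):
    """Return the [start, end) hopping windows that contain ts, earliest first."""
    # Start of the latest window that begins at or before ts, aligned to the slide.
    start = ts - (ts - EPOCH) % slide
    windows = []
    while start + size > ts:
        windows.append((start, start + size))
        start -= slide
    return sorted(windows)


def pv_per_window(events, slide=timedelta(minutes=5), size=timedelta(minutes=10)):
    """Count (timestamp, product_id) events per (window, product_id)."""
    counts = Counter()
    for ts, product_id in events:
        for window in hop_windows(ts, slide, size):
            counts[(window, product_id)] += 1
    return counts


events = [
    (datetime(2021, 9, 8, 16, 20, 0), 101),
    (datetime(2021, 9, 8, 16, 22, 0), 101),
    (datetime(2021, 9, 8, 16, 26, 0), 101),
]
for (window, product_id), count in sorted(pv_per_window(events).items()):
    print(window[0].time(), window[1].time(), product_id, count)
```

With these three events, the window from 16:20 to 16:30 contains all of them, while the neighbouring windows each contain only part of the traffic, which is why consecutive results written to the sink overlap.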

3.4 Verify the results

Normally, the UV and PV metrics would be displayed on a web page. For simplicity, here we log in through the Redis console and query them directly:

userids: stores UV

pagevisits: stores PV

conversion_rate: stores the conversion rate, that is, the number of purchases / total page hits.

4 Summary

A self-built Kafka cluster collects the data, stream computing Oceanus (Flink) performs field accumulation, window aggregation, and other operations in real time, and the processed data is stored in the cloud database Redis, producing UV, PV, and other metrics that refresh in real time. To keep the solution easy to follow, the Kafka JSON format was simplified and browse records and purchase records were placed in the same topic; the focus is on connecting the self-built IDC with Tencent Cloud products to show the solution end to end. For very large-scale UV de-duplication, colleagues in the micro vision department use Redis HyperLogLog to compute UV. Compared with using the set type directly, its advantage is a very small memory footprint. See https://cloud.tencent.com/developer/article/1889162 for details.
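To show why HyperLogLog needs so little memory, here is a simplified pure-Python sketch of the idea. It is an illustration only, not Redis's implementation (in Redis the corresponding commands are `PFADD` and `PFCOUNT`); the class name, the choice of SHA-1 as the hash, and the register count of 4096 are assumptions made for this example.

```python
import hashlib
import math


class HyperLogLog:
    """Simplified HyperLogLog: estimates distinct items with 2**p small registers."""

    def __init__(self, p=12):
        self.p = p
        self.m = 1 << p               # number of registers
        self.registers = [0] * self.m

    def add(self, item):
        digest = hashlib.sha1(str(item).encode("utf-8")).digest()
        x = int.from_bytes(digest[:8], "big")      # 64-bit hash value
        idx = x >> (64 - self.p)                   # first p bits pick a register
        rest = x & ((1 << (64 - self.p)) - 1)      # remaining bits
        # Rank = 1-based position of the leftmost 1-bit in the remaining bits.
        rank = (64 - self.p) - rest.bit_length() + 1
        self.registers[idx] = max(self.registers[idx], rank)

    def count(self):
        alpha = 0.7213 / (1 + 1.079 / self.m)
        raw = alpha * self.m * self.m / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if raw <= 2.5 * self.m and zeros:
            # Small-range correction (linear counting).
            return self.m * math.log(self.m / zeros)
        return raw


hll = HyperLogLog()
for _ in range(2):                    # every user visits twice
    for user_id in range(10000):
        hll.add(f"user-{user_id}")
print(round(hll.count()))
```

The structure keeps only 4096 small integers no matter how many visitors are added, whereas a set grows with every new user ID. The trade-off is that the result is an estimate rather than an exact count, and repeated visits by the same user do not change it.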
