Understanding entropy and the cross-entropy loss function

2022-07-28 15:16:00 【Demon YoY】

Some well-explained links:

Detailed explanation of entropy and cross entropy loss function

Detailed explanation and formula derivation of information entropy

Entropy

Let's first understand what entropy is.

The concept of entropy in information theory was first put forward by Shannon, with the aim of finding an efficient, lossless way to encode information. Efficiency is measured by the average length of the encoded data: the smaller the average length, the more efficient the code. At the same time, the code must be lossless, meaning no original information may be lost after encoding. Shannon therefore defined entropy as the minimum average code length needed to losslessly encode the information of an event.

Information entropy

Amount of information: a measure of how much information something carries; its value depends on the specific event.

The amount of information is related to the probability of a random event:

The less likely an event is, the more information it carries, e.g. an earthquake in Hunan;

The more likely an event is, the less information it carries, e.g. the sun rising in the east.
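A minimal Python sketch of this idea, assuming the usual definition of the amount of information (self-information) of an event with probability p as $-\log_2 p$ bits. The function name `self_information` and the two probabilities are hypothetical, chosen only to contrast a rare event with an almost certain one.

```python
import math


def self_information(p: float) -> float:
    """Amount of information, in bits, of an event with probability p (0 < p <= 1)."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    return -math.log2(p)


# Hypothetical probabilities, for illustration only.
rare_event = 0.001    # an unlikely event, like the earthquake example
common_event = 0.999  # an almost certain event, like sunrise in the east

print(f"rare:   {self_information(rare_event):.4f} bits")
print(f"common: {self_information(common_event):.4f} bits")
```

The rarer event yields many more bits, and a certain event (p = 1) yields 0 bits.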

Deriving information entropy

The amount of information measures what one specific event tells us, whereas entropy is the expected amount of information before the outcome is known: it considers every possible value of the random variable, i.e. it is the expectation of the amount of information over all possible events.

Formula of information entropy

Information entropy can be understood as the expectation of $-\log_2 p(x)$, namely:

$$H(X) = \mathbb{E}_{x \sim P}[-\log p(x)] = -\sum_{i=1}^{n} p(x_i)\log p(x_i)$$

With base-2 logarithms, $-\log_2 p(x_i)$ is the ideal code length in bits for event $x_i$, so this formula gives the average code length, which is exactly the information entropy.
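A small sketch of the entropy formula for a discrete distribution, assuming probabilities are given as a plain list that sums to 1 and that terms with $p(x_i) = 0$ contribute 0 (the standard convention). The function name `entropy` and the example distributions are illustrative, not from the original article.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """H(X) = -sum p(x_i) log p(x_i); terms with p = 0 contribute 0 by convention."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


# A uniform distribution is maximally uncertain; a skewed one carries less entropy.
print(entropy([0.25, 0.25, 0.25, 0.25]))
print(entropy([0.9, 0.05, 0.03, 0.02]))
```

With `base=2` the result is in bits; passing `base=math.e` gives nats instead.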

for instance :

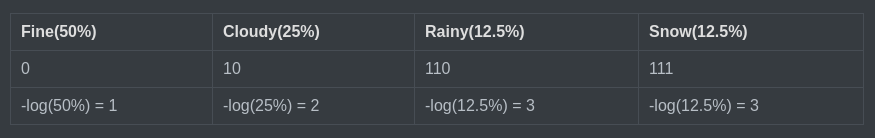

There are four kinds of weather , Corresponding to four codes , Calculate the entropy directly :

entropy = 1 * 50% + 2 * 25% + 3 * 12.5% + 3 * 12.5% = 1.75
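The weather example can be checked numerically. This sketch reuses only the probabilities and code lengths from the calculation above; the weather names "A" to "D" are placeholders because the labels in the figure are not reproduced in the text. It shows that each code length equals $-\log_2 p$ and that the average code length equals the entropy.

```python
import math

# (probability, code length in bits) from the weather example; names are placeholders.
weather = {
    "A": (0.5, 1),
    "B": (0.25, 2),
    "C": (0.125, 3),
    "D": (0.125, 3),
}

# Average code length: sum of probability times code length.
avg_code_length = sum(p * length for p, length in weather.values())

# Entropy: sum of probability times the ideal code length -log2(p).
entropy_bits = -sum(p * math.log2(p) for p, _ in weather.values())

for name, (p, length) in weather.items():
    # For these power-of-two probabilities, -log2(p) is exactly the code length.
    print(name, p, length, -math.log2(p))

print("average code length:", avg_code_length)
print("entropy:", entropy_bits)
```

Because every probability is a power of 1/2, the code is exactly optimal and both quantities come out to 1.75 bits.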

Relative entropy (KL divergence)

KL divergence (Kullback-Leibler divergence) is also called relative entropy.

If a random variable X has two separate probability distributions P(x) and Q(x), the difference between the two distributions can be measured by relative entropy.

Relative entropy is defined as follows:

$$D_{KL}(P\|Q) = \sum_{i=1}^{n} P(x_i)\log\frac{P(x_i)}{Q(x_i)}$$

1. Relative entropy is non-negative, and its value measures how much two distributions differ; it equals 0 if and only if P and Q are exactly the same distribution.

2. Relative entropy is not symmetric: in general,

$$D_{KL}(P\|Q) \neq D_{KL}(Q\|P)$$
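Both properties can be seen on a concrete pair of distributions. This is a minimal sketch assuming base-2 logarithms and the usual conventions that terms with $P(x_i) = 0$ contribute 0 and that the divergence is infinite when $Q(x_i) = 0$ but $P(x_i) > 0$. The function name `kl_divergence` and the distributions `P` and `Q` are hypothetical examples.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """D_KL(P||Q) = sum P(x_i) log2(P(x_i) / Q(x_i)), in bits."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue          # 0 * log(0 / q) is taken as 0
        if qi == 0:
            return math.inf   # P puts mass where Q has none
        total += pi * math.log2(pi / qi)
    return total


P = [0.5, 0.5]
Q = [0.9, 0.1]

print(kl_divergence(P, P))  # identical distributions give 0
print(kl_divergence(P, Q))  # positive
print(kl_divergence(Q, P))  # also positive, but a different value: not symmetric
```

Swapping the arguments changes the result (roughly 0.74 vs 0.53 bits here), which is why KL divergence is not a true distance.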

Cross entropy

Cross entropy is written $H(P,Q)$: P is used to take the expectation, while Q is used to compute the code length.

Cross entropy is calculated as:

$$H(P,Q) = \mathbb{E}_{x \sim P}[-\log Q(x)]$$
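Writing the expectation as a sum over the discrete outcomes and adding and subtracting $\sum_i P(x_i)\log P(x_i)$ links cross entropy to the two quantities defined earlier (assuming $Q(x_i) > 0$ wherever $P(x_i) > 0$):

$$
\begin{aligned}
H(P,Q) &= -\sum_{i=1}^{n} P(x_i)\log Q(x_i) \\
&= -\sum_{i=1}^{n} P(x_i)\log P(x_i) + \sum_{i=1}^{n} P(x_i)\log\frac{P(x_i)}{Q(x_i)} \\
&= H(P) + D_{KL}(P\|Q)
\end{aligned}
$$

Since $D_{KL}(P\|Q) \ge 0$, the cross entropy is never smaller than the entropy of P, and when P is fixed (as a label distribution is), minimizing $H(P,Q)$ over Q is the same as minimizing $D_{KL}(P\|Q)$.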

Cross entropy as a loss function

For example, suppose an animal photo data set contains 5 kinds of animals, each picture contains exactly one animal, and each photo's label is one-hot encoded.

For the first picture, a dog, the probability of "dog" is 100% and the probability of every other animal is 0; the other pictures are labeled the same way.

Suppose two machine learning models, Q1 and Q2, predict the first picture, whose true label is [1,0,0,0,0].

Calculate the cross entropy of each prediction:

The smaller the cross entropy, the more accurate the prediction, so model Q2 predicts better.
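A sketch of cross entropy used as a loss on a one-hot label. The prediction vectors `q1` and `q2` below are hypothetical stand-ins, not the article's actual Q1 and Q2 outputs, and the natural logarithm is assumed because that is the common choice in machine learning libraries. With a one-hot label, only the true class term survives, so the loss reduces to $-\ln Q(\text{true class})$.

```python
import math
from typing import Sequence


def cross_entropy(p: Sequence[float], q: Sequence[float]) -> float:
    """H(P, Q) = -sum P(x_i) ln Q(x_i), using the natural log."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    # Terms with P(x_i) = 0 are skipped; with a one-hot label only the true class remains.
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)


label = [1, 0, 0, 0, 0]  # first picture: a dog, one-hot encoded

# Hypothetical model outputs (probabilities over the 5 animals), for illustration only.
q1 = [0.4, 0.3, 0.05, 0.05, 0.2]
q2 = [0.98, 0.01, 0.0, 0.0, 0.01]

loss1 = cross_entropy(label, q1)
loss2 = cross_entropy(label, q2)
print(f"loss(Q1) = {loss1:.4f}, loss(Q2) = {loss2:.4f}")
```

The model that assigns more probability to the true class gets the smaller loss. Note that `math.log` raises an error if a model assigns probability 0 to the true class; real training code usually works from logits or clips probabilities to avoid this.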
