ELK 7.15.1 installation and deployment

2022-06-24 02:58:00 【Xiao Chen operation and maintenance】

A brief introduction to ELK

ELK is an acronym for three open source projects: Elasticsearch, Logstash, and Kibana. Filebeat (one of the Beats) was added later as a lighter-weight alternative to Logstash for data collection. The combination is also known as the Elastic Stack.

Filebeat is a lightweight shipper for forwarding and centralizing log data. It monitors the log files or locations you specify, collects log events, and forwards them to Elasticsearch or Logstash for indexing. It works as follows: when Filebeat starts, it launches one or more inputs, which look for log data in the locations you specified. For each log file it finds, Filebeat starts a harvester. Each harvester reads a single log for new content and sends the new log data to libbeat, which aggregates the events and sends the aggregated data to the output configured for Filebeat.
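As a rough illustration of the input and output model described above, the sketch below writes a minimal filebeat.yml from a shell helper. Filebeat is not installed anywhere in this walkthrough, so everything here is an assumption for illustration: the helper name `write_filebeat_config`, the log path `/var/log/*.log`, and the Elasticsearch address `localhost:9500` (the HTTP port configured later in this article).

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Filebeat configuration to the given path.
write_filebeat_config() {
  local target="$1"
  cat > "$target" <<'EOF'
filebeat.inputs:
  - type: log                  # one input; a harvester is started per matching file
    enabled: true
    paths:
      - /var/log/*.log         # assumed log location
output.elasticsearch:
  hosts: ["localhost:9500"]    # assumed: the HTTP port used in this article
EOF
}
```

For example, `write_filebeat_config ./filebeat.yml` produces a file that could then be passed to Filebeat with `filebeat -c ./filebeat.yml`.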

Logstash is a free and open server-side data processing pipeline that collects data from multiple sources, transforms it, and then sends it to your chosen "stash" (storage backend). Logstash can collect, transform, and ship data dynamically, regardless of format or complexity. It can use Grok to derive structure from unstructured data, decode geographic coordinates from IP addresses, anonymize or exclude sensitive fields, and simplify overall processing.

Elasticsearch is the distributed search and analytics engine at the core of the Elastic Stack. It is a distributed, near-real-time search platform built on Lucene that is accessed through a RESTful interface. Elasticsearch provides near-real-time search and analytics for all types of data. Whether the data is structured or unstructured text, numeric data, or geospatial data, Elasticsearch can store and index it efficiently in a way that supports fast search.
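To make the RESTful interaction concrete, here is a small sketch using curl against the document and search endpoints. The base URL `http://localhost:9500` matches the port configured later in this article; the function names and the index name `demo-logs` in the usage note are made up for illustration.

```bash
#!/usr/bin/env bash
# Assumed address; override by exporting ES_URL.
ES_URL="${ES_URL:-http://localhost:9500}"

# Index one JSON document into an index (Elasticsearch assigns the ID).
es_index_doc() {
  local index="$1" json="$2"
  curl -s -X POST "$ES_URL/$index/_doc" \
       -H 'Content-Type: application/json' -d "$json"
}

# Run a simple query-string search against an index.
es_search() {
  local index="$1" query="$2"
  curl -s -G "$ES_URL/$index/_search" --data-urlencode "q=$query"
}
```

With Elasticsearch running, `es_index_doc demo-logs '{"message":"hello"}'` followed by `es_search demo-logs 'message:hello'` should return the document once the index has refreshed (about one second by default).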

Kibana is an open source analytics and visualization platform for Elasticsearch, used to search, view, and interact with data stored in Elasticsearch indices. With Kibana you can perform advanced data analysis and present the results in a variety of charts. It gives Logstash and Elasticsearch a web interface suited to log analysis, where important log data can be summarized, analyzed, and searched, and it makes large volumes of data easier to understand. It is simple to operate: the browser-based user interface lets you quickly create dashboards that show Elasticsearch query results in real time.

Basic characteristics of a complete log system

Collection: collect log data from multiple sources

Transport: reliably parse, filter, and ship log data to the storage system

Storage: store the log data

Analysis: support analysis through a UI

Alerting: provide error reporting and a monitoring mechanism

Install the JDK 17 environment

    # mkdir jdk
    # cd jdk
    # wget https://download.oracle.com/java/17/latest/jdk-17_linux-x64_bin.tar.gz
    # tar xf jdk-17_linux-x64_bin.tar.gz
    # cd ..
    # mv jdk/ /
    # vim /etc/profile
    # tail -n 4 /etc/profile
    export JAVA_HOME=/jdk/jdk-17.0.1/
    export PATH=$JAVA_HOME/bin:$PATH
    export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
    # source /etc/profile
    # chmod -R 777 /jdk/
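A quick sanity check after this step can catch a wrong JAVA_HOME early. The sketch below is a hypothetical helper (`check_java_home` is not part of any tool) that only tests whether the directory contains an executable `bin/java`. Note that the extracted directory name depends on which JDK 17 build the download link serves, so it may not be `jdk-17.0.1` on your machine.

```bash
#!/usr/bin/env bash
# Return 0 if the given directory looks like a usable JDK home.
check_java_home() {
  local home="${1%/}"        # drop one trailing slash, as in /jdk/jdk-17.0.1/
  [ -x "$home/bin/java" ]
}
```

Typical use: `check_java_home "$JAVA_HOME" && "$JAVA_HOME/bin/java" -version`.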

Create the elk directory and download the required packages

    # mkdir elk
    # cd elk
    # wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.15.1-linux-x86_64.tar.gz
    # wget https://artifacts.elastic.co/downloads/kibana/kibana-7.15.1-linux-x86_64.tar.gz
    # wget https://artifacts.elastic.co/downloads/logstash/logstash-7.15.1-linux-x86_64.tar.gz

Extract the packages

    # tar xf elasticsearch-7.15.1-linux-x86_64.tar.gz
    # tar xf kibana-7.15.1-linux-x86_64.tar.gz
    # tar xf logstash-7.15.1-linux-x86_64.tar.gz
    # ll
    total 970288
    drwxr-xr-x  5 root root      4096 Oct 20 06:09 ./
    drwx------  7 root root      4096 Oct 20 06:04 ../
    drwxr-xr-x  9 root root      4096 Oct  7 22:00 elasticsearch-7.15.1/
    -rw-r--r--  1 root root 340849929 Oct 14 13:28 elasticsearch-7.15.1-linux-x86_64.tar.gz
    drwxr-xr-x 10 root root      4096 Oct 20 06:09 kibana-7.15.1-linux-x86_64/
    -rw-r--r--  1 root root 283752241 Oct 14 13:34 kibana-7.15.1-linux-x86_64.tar.gz
    drwxr-xr-x 13 root root      4096 Oct 20 06:09 logstash-7.15.1/
    -rw-r--r--  1 root root 368944379 Oct 14 13:38 logstash-7.15.1-linux-x86_64.tar.gz

Create the user and set permissions

    # cd
    # useradd elk
    # mkdir /home/elk
    # cp -r elk/ /home/elk/
    # chown -R elk:elk /home/elk/

Modify the system configuration files

    # vim /etc/security/limits.conf
    # tail -n 3 /etc/security/limits.conf
    * soft nofile 65536
    * hard nofile 65536
    # vim /etc/sysctl.conf
    # tail -n 2 /etc/sysctl.conf
    vm.max_map_count=262144
    # sysctl -p
    vm.max_map_count = 262144
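The two values set here (65536 open files and 262144 memory map areas) can be checked before starting Elasticsearch. The following is a minimal sketch with hypothetical helper names. Since limits.conf is applied at login, `check_es_limits` is meant to be run in a fresh login session of the user that will start Elasticsearch.

```bash
#!/usr/bin/env bash
# Succeed when an observed value is at least the required minimum.
# "unlimited" (a possible ulimit answer) always passes.
meets_minimum() {
  local actual="$1" required="$2"
  [ "$actual" = "unlimited" ] && return 0
  [ "$actual" -ge "$required" ] 2>/dev/null
}

# Report whether the current session and kernel meet the values set above.
check_es_limits() {
  local nofile max_map
  nofile="$(ulimit -n)"
  max_map="$(sysctl -n vm.max_map_count 2>/dev/null || echo 0)"
  meets_minimum "$nofile" 65536   || echo "open files too low: $nofile"
  meets_minimum "$max_map" 262144 || echo "vm.max_map_count too low: $max_map"
}
```

`check_es_limits` prints nothing when both settings are high enough.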

Modify the Elasticsearch configuration file

    # su - elk
    $ bash
    $ cd ~/elk/elasticsearch-7.15.1/config
    $ vim elasticsearch.yml
    $ tail -n 20 elasticsearch.yml
    # Data storage path
    path.data: /home/elk/data/
    # Log path (here the same directory as the data)
    path.logs: /home/elk/data/
    # Memory locking (which keeps the process out of swap) is left disabled
    bootstrap.memory_lock: false
    # With bootstrap.memory_lock set to true the HTTP port was not listened on; cause unknown
    # Allow connections to Elasticsearch from any IP
    network.host: 0.0.0.0
    # HTTP listening port (the default is 9200; 9500 is used here)
    http.port: 9500
    # Added so that the elasticsearch-head plugin can access ES (5.x version; add manually if missing)
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    cluster.initial_master_nodes: ["elk"]
    node.name: elk
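Because the shipped elasticsearch.yml is mostly comments, it can be handy to list only the settings that are actually active after editing. This is a small sketch using grep; the function name `active_settings` is made up, and the same approach works for kibana.yml.

```bash
#!/usr/bin/env bash
# Print only the active (non-comment, non-blank) lines of a config file.
active_settings() {
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}
```

For example: `active_settings ~/elk/elasticsearch-7.15.1/config/elasticsearch.yml`.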

Start Elasticsearch as the elk user

    # su - elk
    $ bash
    $ mkdir data
    $ cd /home/elk/elk/elasticsearch-7.15.1/bin
    $ ./elasticsearch

Test access after startup:

    # curl -I http://192.168.1.19:9500/
    HTTP/1.1 200 OK
    X-elastic-product: Elasticsearch
    Warning: 299 Elasticsearch-7.15.1-83c34f456ae29d60e94d886e455e6a3409bba9ed "Elasticsearch built-in security features are not enabled. Without authentication, your cluster could be accessible to anyone. See https://www.elastic.co/guide/en/elasticsearch/reference/7.15/security-minimal-setup.html to enable security."
    content-type: application/json; charset=UTF-8
    content-length: 532
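Elasticsearch can take a while to open its HTTP port, so a single curl right after startup may fail. One way to handle that, sketched here with a hypothetical `wait_for_http` helper and arbitrary default retry values, is to poll the URL until it responds:

```bash
#!/usr/bin/env bash
# Poll a URL until it answers successfully or the attempts run out.
# Arguments: URL, number of attempts (default 30), delay in seconds (default 2).
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    if curl -sf -o /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}
```

For example: `wait_for_http http://192.168.1.19:9500/ && echo "Elasticsearch is up"`.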

Run it in the background

    $ nohup /home/elk/elk/elasticsearch-7.15.1/bin/elasticsearch >> /home/elk/elk/elasticsearch-7.15.1/output.log 2>&1 &
    [1] 8811
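The nohup pattern is repeated for each service in this article. If you want to check or stop a service later, recording its PID helps. The helpers below are a sketch of that idea, not something the original setup uses; the function names and the PID file location are assumptions.

```bash
#!/usr/bin/env bash
# Start a command detached from the terminal, append its output to a log,
# and record its PID so it can be checked or stopped later.
# Arguments: log file, PID file, then the command and its arguments.
start_background() {
  local log="$1" pidfile="$2"
  shift 2
  nohup "$@" >> "$log" 2>&1 &
  echo "$!" > "$pidfile"
}

# Succeed if the process recorded in the PID file is still alive.
is_running() {
  local pidfile="$1"
  [ -f "$pidfile" ] && kill -0 "$(cat "$pidfile")" 2>/dev/null
}
```

For example: `start_background /home/elk/elk/elasticsearch-7.15.1/output.log /home/elk/es.pid /home/elk/elk/elasticsearch-7.15.1/bin/elasticsearch`, then `is_running /home/elk/es.pid`.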

Configure and start Kibana

    $ cd elk/kibana-7.15.1-linux-x86_64/config/
    $ vim kibana.yml
    $ tail -n 18 kibana.yml
    # Listening port
    server.port: 5601
    # Address Kibana binds to (0.0.0.0 accepts connections from any host)
    server.host: "0.0.0.0"
    # Elasticsearch address
    elasticsearch.hosts: ["http://localhost:9500"]
    # If Elasticsearch has a username and password set, configure these two items; otherwise ignore them
    #elasticsearch.username: "user"
    #elasticsearch.password: "pass"
    $ cd /home/elk/elk/kibana-7.15.1-linux-x86_64/bin
    $ ./kibana

Test access

    # curl -I http://192.168.1.19:5601/app/home#/tutorial_directory
    HTTP/1.1 200 OK
    content-security-policy: script-src 'unsafe-eval' 'self'; worker-src blob: 'self'; style-src 'unsafe-inline' 'self'
    x-content-type-options: nosniff
    referrer-policy: no-referrer-when-downgrade
    kbn-name: elk
    kbn-license-sig: aaa69ea6a0792153cde61e88d0cd9bbad7ddcdaec87b613f281dd275e9dbad47
    content-type: text/html; charset=utf-8
    cache-control: private, no-cache, no-store, must-revalidate
    content-length: 144351
    vary: accept-encoding
    Date: Wed, 20 Oct 2021 07:11:10 GMT
    Connection: keep-alive
    Keep-Alive: timeout=120

Run it in the background

    $ nohup /home/elk/elk/kibana-7.15.1-linux-x86_64/bin/kibana >> /home/elk/elk/kibana-7.15.1-linux-x86_64/output.log 2>&1 &
    [2] 9378

Use Logstash to print log events to the screen

    $ cd elk/logstash-7.15.1/bin/
    $ ./logstash -e 'input {stdin{}} output{stdout{}}'

Type 123 and press Enter; the event is printed to the screen:

    {
              "host" => "elk",
        "@timestamp" => 2021-10-20T07:15:54.230Z,
          "@version" => "1",
           "message" => ""
    }
    123
    {
              "host" => "elk",
        "@timestamp" => 2021-10-20T07:15:56.453Z,
          "@version" => "1",
           "message" => "123"
    }

Then create a configuration file that reads log data from a file:

    $ cd ../config/
    $ vim logstash
    $ cat logstash
    input {
      # Read log data from a file
      file {
        path => "/var/log/messages"
        type => "system"
        start_position => "beginning"
      }
    }
    filter {
    }
    output {
      # Standard output
      stdout {}
    }
    $ mv logstash logstash.conf
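Before starting Logstash with a new pipeline file, it can be worth validating the syntax first. Logstash has a `--config.test_and_exit` flag for this. The wrapper below is only a convenience sketch: the function name and the `LOGSTASH_BIN` variable are assumptions, with the default path taken from the install location used in this article.

```bash
#!/usr/bin/env bash
# Assumed install location; override by setting LOGSTASH_BIN.
LOGSTASH_BIN="${LOGSTASH_BIN:-/home/elk/elk/logstash-7.15.1/bin/logstash}"

# Parse the given pipeline file, report syntax errors, and exit without running it.
check_logstash_config() {
  local conf="$1"
  "$LOGSTASH_BIN" -f "$conf" --config.test_and_exit
}
```

For example: `check_logstash_config /home/elk/elk/logstash-7.15.1/config/logstash.conf`.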

Start the test

    $ cd ../bin/
    $ ./logstash -f ../config/logstash.conf

Start in the background

    $ nohup /home/elk/elk/logstash-7.15.1/bin/logstash -f /home/elk/elk/logstash-7.15.1/config/logstash.conf >> /home/elk/elk/logstash-7.15.1/output.log 2>&1 &
    [3] 10177

Start automatically at boot

    $ vim startup.sh
    $ cat startup.sh
    #!/bin/bash
    nohup /home/elk/elk/elasticsearch-7.15.1/bin/elasticsearch >> /home/elk/elk/elasticsearch-7.15.1/output.log 2>&1 &
    nohup /home/elk/elk/kibana-7.15.1-linux-x86_64/bin/kibana >> /home/elk/elk/kibana-7.15.1-linux-x86_64/output.log 2>&1 &
    nohup /home/elk/elk/logstash-7.15.1/bin/logstash -f /home/elk/elk/logstash-7.15.1/config/logstash.conf >> /home/elk/elk/logstash-7.15.1/output.log 2>&1 &
    $ chmod +x startup.sh
    $ crontab -e
    no crontab for elk - using an empty one

    Select an editor.  To change later, run 'select-editor'.
      1. /bin/nano        <---- easiest
      2. /usr/bin/vim.basic
      3. /usr/bin/vim.tiny
      4. /bin/ed

    Choose 1-4 [1]: 2
    crontab: installing new crontab
    $ crontab -l
    @reboot /home/elk/startup.sh
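The `crontab -e` step is interactive. If you would rather script it, one option is to filter the existing crontab and append the `@reboot` entry only when it is missing. The helper name `with_cron_entry` below is hypothetical; it only transforms text, and the usage note shows how it might be wired to `crontab`.

```bash
#!/usr/bin/env bash
# Read an existing crontab on stdin and print it with the entry appended,
# unless an identical line is already present.
with_cron_entry() {
  local entry="$1" current
  current="$(cat)"
  if [ -n "$current" ]; then
    printf '%s\n' "$current"
  fi
  if ! printf '%s\n' "$current" | grep -Fxq -- "$entry"; then
    printf '%s\n' "$entry"
  fi
}
```

Usage as the elk user: `crontab -l 2>/dev/null | with_cron_entry '@reboot /home/elk/startup.sh' | crontab -`. Running it twice leaves a single entry.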

Logstash plugins

Logstash extends its functionality through plugins.

Plugin categories:

inputs: input

codecs: encoding and decoding

filters: filtering

outputs: output

The Gemfile records the plugins that Logstash uses.

    $ cd elk/logstash-7.15.1
    $ ls Gemfile
    Gemfile

Plugins can be downloaded from GitHub at: github.com/logstash-pl…

Use the filter plugin logstash-filter-mutate

    $ vim logstash2.conf    # create a new configuration file for filtering
    input {
      stdin { }
    }
    filter {
      mutate {
        split => ["message", "|"]
      }
    }
    output {
      stdout { }
    }

When the input is sss|sssni|akok223|23, the message field is split on the | separator.
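As a rough shell analogue of what the split does to the message field (this is only an illustration in bash, not how Logstash implements it), the same input can be broken into an array on the | character. The function name and the `FIELDS` array are made up for this sketch.

```bash
#!/usr/bin/env bash
# Split a line on "|" into the global array FIELDS,
# mirroring the effect of mutate's split on "message".
split_on_pipe() {
  local line="$1"
  IFS='|' read -r -a FIELDS <<< "$line"
}

split_on_pipe 'sss|sssni|akok223|23'
printf '%s\n' "${FIELDS[@]}"
```

This prints the four parts sss, sssni, akok223, and 23 on separate lines, which corresponds to the four-element array Logstash stores in message.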

The data processing flow is: input -> decode -> filter -> encode -> output

Start the service

Then start the Logstash service:

    $ nohup /home/elk/elk/logstash-7.15.1/bin/logstash -f /home/elk/elk/logstash-7.15.1/config/logstash2.conf >> /home/elk/elk/logstash-7.15.1/output.log 2>&1 &

边栏推荐

- Why enterprises need fortress machines

- IOS development - multithreading - thread safety (3)

- LeetCode 724. Find the central subscript of the array

- Industry ranks first in blackmail attacks, hacker organizations attack Afghanistan and India | global network security hotspot

- Tornado code for file download

- Precautions for VPN client on Tencent cloud

- How does [lightweight application server] build a cross-border e-commerce management environment?

- Grpc: based on cloud native environment, distinguish configuration files

- Storage crash MySQL database recovery case

- 2022-2028 global aircraft audio control panel system industry research and trend analysis report

猜你喜欢

2022-2028 global indoor pressure monitor and environmental monitor industry research and trend analysis report

![[51nod] 3047 displacement operation](/img/cb/9380337adbc09c54a5b984cab7d3b8.jpg)

[51nod] 3047 displacement operation

2022-2028 global aircraft audio control panel system industry research and trend analysis report

![[51nod] 2102 or minus and](/img/68/0d966b0322ac1517dd2800234d386d.jpg)

[51nod] 2102 or minus and

IOS development - multithreading - thread safety (3)

2022-2028 Global Industry Survey and trend analysis report on portable pressure monitors for wards

2022-2028 global aircraft wireless intercom system industry research and trend analysis report

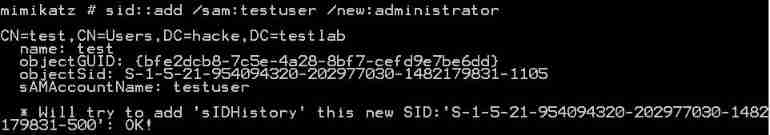

Permission maintenance topic: domain controller permission maintenance

![[51nod] 3395 n-bit gray code](/img/b5/2c072a11601de82cb92ade94672ecd.jpg)

[51nod] 3395 n-bit gray code

The cost of on-site development of software talent outsourcing is higher than that of software project outsourcing. Why

随机推荐

The most comprehensive arrangement of safe operation solutions from various manufacturers

How does [lightweight application server] build a cross-border e-commerce management environment?

Tke single node risk avoidance

Uipickerview show and hide animation

2022-2028 global medical coating materials industry research and trend analysis report

Grpc: adjust data transfer size limit

Gin framework: add Prometheus monitoring

What does operation and maintenance audit fortress mean? How to select the operation and maintenance audit fortress machine?

LeetCode 1323. Maximum number of 6 and 9

What is the performance improvement after upgrading the 4800h to the 5800h?

Face recognition using cidetector

Building a web site -- whether to rent or host a server

Live broadcast Reservation: a guide to using the "cloud call" capability of wechat cloud hosting

How to handle the occasional address request failure in easygbs live video playback?

Kibana report generation failed due to custom template

11111dasfada and I grew the problem hot hot I hot vasser shares

C common regular expression collation

LeetCode 724. Find the central subscript of the array

Cloud call: one line of code is directly connected to wechat open interface capability

Contour-v1.19.1 release