MySQL advanced 4

2022-07-01 15:34:00 【Xiao Xiao is not called Xiao Xiao】

Chapter 16: Multi-Version Concurrency Control

1. What is MVCC?

MVCC (Multiversion Concurrency Control) is, as the name suggests, a way of managing concurrency by keeping multiple versions of the data. It is what lets InnoDB guarantee consistent reads under its transaction isolation levels. In other words, a query can read rows that another transaction is currently updating and see their values from before the update, so the query does not have to wait for that transaction to release its locks.

2. Snapshot read and current read

InnoDB implements MVCC to improve database concurrency by handling read-write conflicts better: even when a read and a write conflict, the read can proceed without locking and without blocking. Such a read is a snapshot read, as opposed to a current read. A current read is a locking operation, an implementation of pessimistic locking, whereas MVCC is in essence an application of the optimistic-locking idea.

2.1 Snapshot read

A snapshot read, also called a consistent read, reads snapshot data. A plain SELECT without a locking clause is a snapshot read: a non-blocking read that takes no locks.

Snapshot reads exist to improve concurrent performance. They are implemented on top of MVCC, which avoids locking in many cases and so lowers overhead.

Because it is based on multiple versions, a snapshot read does not necessarily return the latest version of the data; it may return an earlier historical version.

Snapshot reads require an isolation level other than SERIALIZABLE; at the SERIALIZABLE level, snapshot reads degrade to current reads.

2.2 Current read

A current read returns the latest version of a record (not a historical version) and locks the record it reads, so that no concurrent transaction can modify it in the meantime. Locking SELECT statements (for example SELECT ... FOR UPDATE) and INSERT, UPDATE, and DELETE statements all perform current reads.

3. Review

3.1 Isolation levels revisited

Transactions have four isolation levels, and there are three possible concurrency problems:

[Figure unavailable in the source]

Another picture:

[Figure unavailable in the source]

3.2 Hidden columns and the undo log version chain

Recall the undo log version chain. For a table that uses the InnoDB storage engine, each clustered index record contains two required hidden columns.

trx_id: every time a transaction changes a clustered index record, it writes its transaction id into the trx_id hidden column.

roll_pointer: every time a clustered index record is changed, the old version is written to the undo log. This hidden column acts as a pointer through which the record's state before the modification can be found.

4. How MVCC is implemented: ReadView

MVCC relies on three things: the hidden columns, the undo log, and the Read View.

4.1 What is a ReadView?

A ReadView is the read view a transaction generates when it performs a snapshot read through the MVCC mechanism. When the view is generated, InnoDB takes a snapshot of the current state of the system: it builds an array for the transaction that records the IDs of the transactions currently active in the system ("active" meaning started but not yet committed).

4.2 Design idea

A transaction at the READ UNCOMMITTED isolation level may read records modified by uncommitted transactions, so it simply reads the latest version of each record.

A transaction at the SERIALIZABLE isolation level accesses records using locks, as InnoDB prescribes.

Transactions at the READ COMMITTED and REPEATABLE READ isolation levels must read only records modified by committed transactions. If another transaction has modified a record but not yet committed, the latest version cannot be read directly. The core problem is deciding which version in the version chain is visible to the current transaction, and this is the main problem ReadView solves.

A ReadView contains four important fields:

creator_trx_id: the ID of the transaction that created this ReadView.

Note: a transaction is assigned a transaction id only when it modifies records in a table (by executing INSERT, DELETE, or UPDATE); for a read-only transaction the transaction id defaults to 0.

trx_ids: the list of transaction ids of the read-write transactions that were active in the system when the ReadView was generated.

up_limit_id: the smallest transaction ID among the active transactions.

low_limit_id: the id the system would assign to the next transaction at the moment the ReadView is generated, that is, one more than the largest transaction id allocated so far. Note that this refers to all transactions in the system, not only the active ones.

Note: low_limit_id is not the maximum of trx_ids. Transaction ids are assigned in increasing order. For example, suppose there are three transactions with ids 1, 2, and 3, and the transaction with id 3 then commits. When a new read transaction generates a ReadView, trx_ids contains 1 and 2, up_limit_id is 1, and low_limit_id is 4.

4.3 ReadView rules

With a ReadView in hand, accessing a record follows these steps to decide whether a given version of the record is visible.

- If the trx_id of the accessed version equals creator_trx_id in the ReadView, the current transaction is accessing a record it modified itself, so the version is visible to the current transaction.

- If the trx_id of the accessed version is less than up_limit_id in the ReadView, the transaction that produced the version committed before the ReadView was generated, so the version is visible to the current transaction.

- If the trx_id of the accessed version is greater than or equal to low_limit_id in the ReadView, the transaction that produced the version started only after the ReadView was generated, so the version is not visible to the current transaction.

- If the trx_id of the accessed version lies between up_limit_id and low_limit_id (up_limit_id <= trx_id < low_limit_id), check whether trx_id is in the trx_ids list.

  - If it is, the transaction that produced the version was still active when the ReadView was created, so the version is not visible.

  - If it is not, the transaction that produced the version had already committed when the ReadView was created, so the version is visible.
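
The visibility rules above can be sketched as a small function. This is a minimal Python sketch, not InnoDB's actual code: the `ReadView` class and the `is_visible` function are hypothetical names introduced for illustration, and only the four fields described in section 4.2 are modeled.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ReadView:
    """Hypothetical model of the four ReadView fields from section 4.2."""
    creator_trx_id: int
    trx_ids: FrozenSet[int]   # read-write transactions active at creation
    up_limit_id: int          # smallest id in trx_ids
    low_limit_id: int         # id the next new transaction would receive


def is_visible(trx_id: int, view: ReadView) -> bool:
    """Return True if a record version written by trx_id is visible to view."""
    if trx_id == view.creator_trx_id:
        return True                      # our own modification
    if trx_id < view.up_limit_id:
        return True                      # committed before the view was created
    if trx_id >= view.low_limit_id:
        return False                     # started after the view was created
    # Between the limits: visible only if the writer was no longer active.
    return trx_id not in view.trx_ids


# The example from section 4.2: transactions 1 and 2 are active, 3 has
# committed, and a read-only transaction (id 0) creates the view.
view = ReadView(creator_trx_id=0, trx_ids=frozenset({1, 2}),
                up_limit_id=1, low_limit_id=4)
print([t for t in range(1, 6) if is_visible(t, view)])  # prints [3]
```

Only the version written by transaction 3 is visible: 1 and 2 are still active, while 4 and 5 would have started after the view was created.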

4.4 The overall MVCC flow

With these concepts in place, here is how the system finds a record through MVCC when a query runs:

First, obtain the transaction's own version number, that is, its transaction ID;

Obtain the ReadView;

Fetch the queried data and compare its transaction version number with the ReadView;

If the data does not satisfy the ReadView rules, fetch a historical snapshot from the undo log;

Finally, return the data that satisfies the rules.

At the READ COMMITTED isolation level, every SELECT in a transaction obtains a new Read View.

As shown in the table:

[Figure unavailable in the source]

Note that the same query statement obtains a new Read View each time it runs. If the Read Views differ, non-repeatable reads or phantom reads may result.

At the REPEATABLE READ isolation level, non-repeatable reads are avoided, because a transaction obtains a Read View only at its first SELECT and every later SELECT reuses that Read View, as shown in the following table:

[Figure unavailable in the source]

5. Examples

5.1 Under the READ COMMITTED isolation level

READ COMMITTED: a ReadView is generated before every read.

5.2 Under the REPEATABLE READ isolation level

A transaction at the REPEATABLE READ isolation level generates a ReadView only when it executes its first query statement; later queries do not generate a new one.

5.3 How phantom reads are avoided

Suppose the table student contains a single row, with primary key id=1 and hidden trx_id=10. Its undo log is shown in the figure below.

[Figure unavailable in the source]

Suppose transaction A and transaction B now run concurrently; transaction A's id is 20 and transaction B's id is 30.

Step 1: transaction A queries the data for the first time, with the following SQL statement.

select * from student where id >= 1;

Before the query starts, MySQL generates a ReadView for transaction A with these contents: trx_ids=[20,30], up_limit_id=20, low_limit_id=31, creator_trx_id=20.

Since student holds only one row, and that row satisfies the condition where id>=1, it is found. Then, following the ReadView mechanism, the row's trx_id=10 is less than up_limit_id in transaction A's ReadView, which means the row was committed by another transaction before transaction A started, so transaction A can read it.

Conclusion: transaction A's first query reads one row, id=1.

Step 2: transaction B (trx_id=30) then inserts two new rows into the table student and commits.

insert into student(id,name) values(2,'Li Si');

insert into student(id,name) values(3,'Wang Wu');

The table student now holds three rows; the corresponding undo log is shown in the figure below:

[Figure unavailable in the source]

Step 3: transaction A then runs its second query. Under the rules of the REPEATABLE READ isolation level, transaction A does not generate a new ReadView. All three rows in student now satisfy the condition where id>=1, so all three are found first. The ReadView mechanism then decides, row by row, whether transaction A can see each one.

1) First, the row with id=1: as already explained, transaction A can see it.

2) Next, the row with id=2: its trx_id=30. Transaction A finds that this value lies between up_limit_id and low_limit_id, so it must check whether 30 is in the trx_ids array. Transaction A's trx_ids=[20,30], so it is in the array. This means the row with id=2 was written by another transaction that was active at the same time as transaction A, so transaction A cannot see it.

3) Likewise, the row with id=3 also has trx_id=30, so transaction A cannot see it either.

[Figure unavailable in the source]

Conclusion: transaction A's second query returns only the row with id=1. This matches the result of its first query, so no phantom read occurs. For snapshot reads at MySQL's REPEATABLE READ isolation level, then, there is no phantom read problem.
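
The three steps above can be replayed in a short script. This is a simplified Python sketch under stated assumptions: the helper names `visible`, `make_view`, and `query` are hypothetical, each row carries only its latest version (the undo log chain is not modeled, which is enough here because the inserted rows have no earlier version), and the READ COMMITTED line is added only for contrast.

```python
def visible(trx_id, view):
    """Apply the ReadView rules to one record version."""
    if trx_id == view["creator_trx_id"] or trx_id < view["up_limit_id"]:
        return True
    if trx_id >= view["low_limit_id"]:
        return False
    return trx_id not in view["trx_ids"]


def make_view(creator, active, next_id):
    """Build a ReadView from the currently active transaction ids."""
    return {"creator_trx_id": creator, "trx_ids": set(active),
            "up_limit_id": min(active), "low_limit_id": next_id}


def query(rows, view):
    """Return the ids of the rows whose writer is visible to the view."""
    return [row_id for row_id, trx_id in rows if visible(trx_id, view)]


# Step 1: student holds one row (id=1, trx_id=10); A (20) and B (30) are active.
rows = [(1, 10)]
view_a = make_view(creator=20, active=[20, 30], next_id=31)
first = query(rows, view_a)

# Step 2: B inserts ids 2 and 3 and commits, so only A remains active.
rows += [(2, 30), (3, 30)]

# Step 3 under REPEATABLE READ: A reuses the view from step 1.
second_rr = query(rows, view_a)

# For contrast, READ COMMITTED would build a fresh view for the second query.
second_rc = query(rows, make_view(creator=20, active=[20], next_id=31))

print(first, second_rr, second_rc)  # prints [1] [1] [1, 2, 3]
```

Reusing the first view keeps transaction B's rows hidden, while a fresh view would expose them, which is exactly the difference in ReadView timing between the two isolation levels.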

6. Summary

This chapter described how transactions at the READ COMMITTED and REPEATABLE READ isolation levels use MVCC to access a record's version chain when performing snapshot reads. This lets read-write and write-read operations from different transactions run concurrently, which improves system performance.

The core is the ReadView mechanism. One major difference between READ COMMITTED and REPEATABLE READ is when the ReadView is generated:

READ COMMITTED generates a ReadView before every ordinary SELECT.

REPEATABLE READ generates a ReadView only before the first ordinary SELECT and reuses it for subsequent queries.

Chapter 17: Other Database Logs

1. Logs supported by MySQL

1.1 Log types

MySQL has several types of log file, each storing a different kind of log: the binary log, the error log, the general query log, and the slow query log, which are the four most commonly used. There are two further logs: the relay log and the data definition statement log. With these log files you can see what has happened inside MySQL.

**Slow query log:** records every query whose execution time exceeds long_query_time, which helps with query optimization.

**General query log:** records when every connection starts and ends, and every statement a connection sends to the database server. It is very helpful for reconstructing what actually happened, locating problems, and even auditing database operations.

**Error log:** records problems that occur when the MySQL service starts, runs, or stops, which helps in understanding the server's state and maintaining it.

**Binary log:** records all statements that change data. It is used for data synchronization between master and slave servers, and for lossless data recovery after a server failure.

**Relay log:** used in the master-slave server architecture. It is an intermediate file in which the slave server stores the contents of the master's binary log. The slave reads the relay log to replay the operations performed on the master.

**Data definition statement log:** records the metadata operations performed by data definition statements.

Except for the binary log, the other logs are text files. By default, all logs are created in the MySQL data directory.

1.2 Drawbacks of logging

Logging reduces MySQL database performance.

Logs take up a lot of disk space.

2. General query log (general query log)

The general query log records all user actions, including starting and stopping the MySQL service, the start and end time of every user's connection, and every SQL statement sent to the MySQL database server. When data goes wrong, checking the general query log and reconstructing what was executed helps locate the problem precisely.

2.1 View the current status

mysql> SHOW VARIABLES LIKE '%general%';

2.2 Enable the log

Method 1: permanent

[mysqld]

general_log=ON

general_log_file=[path[filename]] # path is the directory of the log file, filename is the log file name

Method 2: temporary

SET GLOBAL general_log=on; # enable the general query log

SET GLOBAL general_log_file='path/filename'; # set where the log file is stored

SET GLOBAL general_log=off; # disable the general query log

SHOW VARIABLES LIKE 'general_log%'; # check the result after setting

2.3 Disable the log

Method 1: permanent

[mysqld]

general_log=OFF

Method 2: temporary

SET GLOBAL general_log=off;

SHOW VARIABLES LIKE 'general_log%';

3. Error log (error log)

3.1 Enable the log

In MySQL the error log is enabled by default, and it cannot be disabled.

[mysqld]

log-error=[path/[filename]] # path is the directory of the log file, filename is the log file name

3.2 View the log

mysql> SHOW VARIABLES LIKE 'log_err%';

3.3 Delete / flush the log

install -omysql -gmysql -m0644 /dev/null /var/log/mysqld.log

mysqladmin -uroot -p flush-logs

4. Binary log (bin log)

4.1 View the defaults

mysql> show variables like '%log_bin%';

4.2 Log parameter settings

Method 1: permanent

[mysqld]

# Enable binary logging

log-bin=atguigu-bin

binlog_expire_logs_seconds=600

max_binlog_size=100M

To set a storage directory for the binlog files:

[mysqld]

log-bin="/var/lib/mysql/binlog/atguigu-bin"

Note: the new directory must be owned by the mysql user; the following command does this.

chown -R -v mysql:mysql binlog

Method 2: temporary

# global level

mysql> set global sql_log_bin=0;

ERROR 1228 (HY000): Variable 'sql_log_bin' is a SESSION variable and can't be used with SET GLOBAL

# session level

mysql> SET sql_log_bin=0;

Query OK, 0 rows affected (0.01 sec)

4.3 View the log

mysqlbinlog -v "/var/lib/mysql/binlog/atguigu-bin.000002"

# Do not display the base64-encoded binlog statements

mysqlbinlog -v --base64-output=DECODE-ROWS "/var/lib/mysql/binlog/atguigu-bin.000002"

# View the parameter help

mysqlbinlog --no-defaults --help

# View the last 100 lines

mysqlbinlog --no-defaults --base64-output=decode-rows -vv atguigu-bin.000002 |tail -100

# Search by position

mysqlbinlog --no-defaults --base64-output=decode-rows -vv atguigu-bin.000002 |grep -A20 '4939002'

Reading the binlog this way outputs its full contents, which makes it hard to pick out the pos information. Here is a more convenient query command:

mysql> show binlog events [IN 'log_name'] [FROM pos] [LIMIT [offset,] row_count];

IN 'log_name': the binlog file to query (if omitted, the first binlog file)

FROM pos: the position to start from (if omitted, the first position in the file)

LIMIT [offset]: the offset (if omitted, 0)

row_count: the number of rows to return (if omitted, all rows)

mysql> show binlog events in 'atguigu-bin.000002';

4.4 Recovering data from the log

The mysqlbinlog syntax for recovering data is as follows:

mysqlbinlog [option] filename|mysql -uuser -ppass;

filename: the log file name.

option: optional. The two most important pairs of options are --start-date, --stop-date and --start-position, --stop-position.

--start-date and --stop-date: specify the start time and end time of the recovery window.

--start-position and --stop-position: specify the start and end positions of the data to recover.

Note: when restoring with the mysqlbinlog command, files with smaller numbers must be restored first; for example, atguigu-bin.000001 must be restored before atguigu-bin.000002.
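
The ordering rule in the note can be enforced before replaying several files. The following Python sketch only sorts file names by their numeric suffix; `replay_order` is a hypothetical helper, and the file names are made up for illustration.

```python
def replay_order(binlog_files):
    """Sort binlog file names by their numeric suffix (e.g. '.000002')."""
    return sorted(binlog_files, key=lambda name: int(name.rsplit(".", 1)[1]))


files = ["atguigu-bin.000010", "atguigu-bin.000002", "atguigu-bin.000001"]
for name in replay_order(files):
    # Each file would then be piped through mysqlbinlog into mysql in turn.
    print(name)
```

Sorting on the integer value rather than the raw string keeps the order correct even if the suffixes ever differ in length.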

4.5 Delete binary logs

1. PURGE MASTER LOGS: delete the specified log files

PURGE {MASTER | BINARY} LOGS TO 'log file name'

PURGE {MASTER | BINARY} LOGS BEFORE 'date'

5. More on the binary log (binlog)

5.1 Write mechanism

The binlog write timing is simple: while a transaction executes, log entries are first written to the binlog cache; when the transaction commits, the binlog cache is written to the binlog file. Because a transaction's binlog cannot be split and must be written in one go however large the transaction is, the system allocates each thread its own block of memory as a binlog cache.

[Figure unavailable in the source]

The timing of write and fsync is controlled by the sync_binlog parameter. Its default was 0 before MySQL 5.7.7 and has been 1 since then. With 0, each commit only performs write (into the file system's page cache), and the operating system decides when to fsync. This improves performance, but if the machine crashes, the binlog still in the page cache is lost, as in the figure below:

[Figure unavailable in the source]

For safety it can be set to 1, which means fsync is performed on every commit, just like the redo log flush process. As a compromise it can be set to N (N>1), which means every commit performs write, but fsync happens only after N transactions have accumulated.

[Figure unavailable in the source]

Where I/O is the bottleneck, setting sync_binlog to a larger value can improve performance. By the same token, if the machine crashes, the binlog of up to the most recent N transactions is lost.
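
The trade-off between the three kinds of setting can be illustrated with a small model. This Python sketch is a deliberate simplification, not MySQL's behaviour in detail: it ignores group commit, assumes one fsync decision per committed transaction, and for sync_binlog=0 reports the worst case in which the operating system has flushed nothing. The function names are hypothetical.

```python
def fsync_points(commits, sync_binlog):
    """Return the commit numbers (1-based) after which MySQL itself calls fsync.

    sync_binlog = 0: MySQL never forces fsync; the operating system decides.
    sync_binlog = 1: fsync after every commit.
    sync_binlog = N: fsync after every N commits.
    """
    if sync_binlog == 0:
        return []
    return [c for c in range(1, commits + 1) if c % sync_binlog == 0]


def at_risk(commits, sync_binlog):
    """Commits whose binlog may exist only in the page cache (worst case)."""
    synced = fsync_points(commits, sync_binlog)
    last_synced = synced[-1] if synced else 0
    return commits - last_synced


for setting in (0, 1, 4):
    print(setting, fsync_points(10, setting), at_risk(10, setting))
```

After ten commits, the model reports ten transactions at risk with 0, none with 1, and two with 4, which matches the "lose at most the last N" reasoning above.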

5.2 binlog vs. redo log

- The redo log is a physical log: it records "what change was made on which data page", and is produced by the InnoDB storage engine layer.

- The binlog is a logical log: it records the original logic of the statement, such as "add 1 to field c of the row with ID=2", and belongs to the MySQL Server layer.

- Both provide durability guarantees, but with different emphases.

  - The redo log gives the InnoDB storage engine its crash recovery capability.

  - The binlog ensures data consistency across a MySQL cluster architecture.

5.3 Two-phase commit

While an update statement executes, both the redo log and the binlog are written. Within a transaction, the redo log can be written continuously as the transaction executes, whereas the binlog is written only when the transaction commits, so the two logs are written at different times.

To keep the two logs logically consistent, the InnoDB storage engine uses a two-phase commit scheme.

[Figure unavailable in the source]

With two-phase commit, an exception while writing the binlog does no harm: during recovery MySQL finds the redo log still in the prepare stage with no corresponding binlog entry, so it rolls the transaction back.

[Figure unavailable in the source]

Another scenario: an exception occurs during the redo log commit phase. Will the transaction be rolled back?

No. The logic outlined in the figure above applies: although the redo log is still in the prepare stage, the corresponding binlog entry can be found through the transaction id, so MySQL considers the transaction complete and commits it when recovering the data.
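
The recovery decision described in this section can be written as a tiny decision function. This is a Python sketch of the logic only, assuming each transaction is summarized by two facts; `recover` and its string states are hypothetical names, not MySQL internals.

```python
def recover(redo_state, binlog_complete):
    """Decide what crash recovery does with one transaction.

    redo_state: "none", "prepare", or "commit" (the last redo log marker found).
    binlog_complete: True if a complete binlog entry exists for the transaction.
    """
    if redo_state == "commit":
        return "commit"          # both logs were fully written
    if redo_state == "prepare" and binlog_complete:
        return "commit"          # binlog is complete, so the transaction stands
    return "rollback"            # binlog missing or incomplete


print(recover("prepare", False))   # crash while writing the binlog: rollback
print(recover("prepare", True))    # crash during the redo log commit phase: commit
```

Because the binlog is the deciding factor in the prepare state, the redo log and the binlog always end up agreeing about whether the transaction happened.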

6. Relay log (relay log)

6.1 Introduction

The relay log exists only on slave servers in a master-slave architecture. To stay consistent with the master, the slave reads the master's binary log and writes what it reads into a local log file; this local log file on the slave is called the relay log. The slave then reads the relay log and updates its own data according to its contents, completing data synchronization between master and slave.

6.2 A typical recovery error

If the slave goes down, system recovery sometimes requires reinstalling the operating system, which may leave the server with a different name than before. The relay log file name contains the server name. In that case, after recovery the slave may be unable to read the relay log data from before the outage and conclude that the log file is corrupted, when in fact only the name is wrong.

The fix is simple: change the slave server's name back to what it was.

Chapter 18: Master-Slave Replication

1. Master-slave replication overview

1.1 How to improve database concurrency

Most applications read from the database far more than they write to it, so the read load on the database is comparatively heavy. One approach is a database cluster with a master-slave architecture and read/write separation, which also improves the database's capacity for concurrent processing. Not every application needs a master-slave architecture, though, since setting one up has a cost of its own.

If the goal is more efficient high-concurrency database access, the first thing to consider is optimizing SQL and indexes, which is simple and effective. The second is a caching strategy, for example keeping hot data in an in-memory database such as Redis to speed up reads. Only the last step is a master-slave architecture with read/write separation.

1.2 The role of master-slave replication

The first role is read/write separation.

The second role is data backup.

The third role is high availability.

2. How master-slave replication works

2.1 Principle analysis

Three threads

Master-slave synchronization is based on the binlog. Replication involves three threads: one on the master and two on the slave.

[Figure unavailable in the source]

The binary log dump thread (Binlog dump thread) is a master thread. When a slave thread connects, the master sends the binary log to the slave. When the master reads an event (Event), it takes a lock on the binlog and releases the lock once the read completes.

The slave I/O thread connects to the master and requests binlog updates. It reads the binlog updates sent by the master's binary log dump thread and copies them to the local relay log (Relay log).

The slave SQL thread reads the slave's relay log and executes the events in it, keeping the slave's data in sync with the master.

[Figure unavailable in the source]

Three steps of replication

Step 1: the Master writes changes to its binary log (binlog).

Step 2: the Slave copies the Master's binary log events to its relay log (relay log);

Step 3: the Slave replays the events in the relay log, applying the changes to its own database. MySQL replication is asynchronous and serialized, and after a restart it resumes from the point where it left off.

The problem with replication

The biggest problem with replication: latency.

2.2 Basic rules of replication

Each Slave has only one Master.

Each Slave can have only one unique server ID.

Each Master can have multiple Slaves.

3. Data consistency under synchronization

Requirements for master-slave synchronization:

The read database and the write database hold consistent data (eventual consistency);

Writes must go to the write database;

Reads must go to the read database (not always);

3.1 Understanding master-slave delay

What master-slave synchronization transfers is the binary log, which is a file. Network transmission introduces master-slave delay (say 500 ms), so the data a user reads from the slave may not be the latest. This is the data inconsistency problem in master-slave synchronization.

3.2 Causes of master-slave delay

When the network is normal, the time needed to transfer logs from the master to the slave, that is T2-T1, is very short. So under normal network conditions the main source of master-slave delay is the gap between the slave finishing receiving the binlog and finishing executing the transaction.

**The most direct symptom of master-slave delay is that the slave consumes its relay log (relay log) more slowly than the master produces binlog.** Causes:

1. The slave's machine performs worse than the master's

2. The slave is under heavy load

3. Large transactions are being executed

3.3 How to reduce master-slave delay

If you want to reduce the master-slave delay time , We can take the following measures :

Reduce the probability of large transactions running concurrently across threads, and optimize the business logic.

Optimize SQL and avoid slow SQL; reduce batch operations, preferably by writing scripts that work in an update-sleep pattern.

Improve the slave's hardware configuration, to narrow the efficiency gap between the master writing binlog and the slave reading it.

Use short links where possible, that is, keep the master and slave servers as close together as possible and increase port bandwidth, to reduce the network latency of binlog transmission.

Force reads with real-time requirements to go to the master, and use the slave only for disaster recovery and backup.

3.4 How to solve the problem of consistency

In the case of separation of reading and writing , Solve the problem of inconsistent data in master-slave synchronization , Is to solve the problem between master and slave Data replication The problem of , If according to data consistency From weak to strong To divide , There are the following 3 Two replication methods .

Method 1: Asynchronous replication

Method 2: Semi-synchronous replication

Method 3: Group replication

First, multiple nodes form a replication group. When a read-write (RW) transaction is executed, it must be approved by the consistency protocol layer (Consensus layer). That is, for a read-write transaction to commit, it needs the consent of the "majority" of the group (each member being a node). Majority means that more than half of the N nodes must agree, i.e. at least N/2+1 nodes using integer division. Only then can the transaction commit, rather than the originating node alone having the final say. A read-only (RO) transaction does not need the group's approval and can COMMIT directly.
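The majority rule can be checked with a little arithmetic. The shell sketch below is only an illustration; the function names `majority` and `tolerated_failures` are made up for this example and are not part of MySQL.

```shell
#!/usr/bin/env bash
# Smallest number of nodes that is more than half of a group of N nodes.
# Shell arithmetic uses integer division, so N/2 is rounded down.
majority() {
  echo $(( $1 / 2 + 1 ))
}

# Number of nodes that can be lost while a majority can still be formed.
tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
  echo "group of $n: majority=$(majority "$n"), tolerated failures=$(tolerated_failures "$n")"
done
```

Note that a group of 4 tolerates no more failures than a group of 3, which is why replication groups are usually sized with an odd number of nodes.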

Chapter 19 Database backup and recovery

1. Physical backup and logical backup

Physical backup: back up the data files by copying the database's physical files to a directory. Restoring from a physical backup is fast, but the backup takes up a lot of space. In MySQL, the xtrabackup tool can be used for physical backups.

Logical backup: use a tool to export database objects into a backup file. Restoring from a logical backup is slow, but the backup takes up little space and is more flexible. The common logical backup tool in MySQL is mysqldump. A logical backup stores SQL statements; on recovery, those statements are executed to reproduce the database data.

2. Logical backup with mysqldump

2.1 Back up a database

mysqldump -u username -h hostname -ppassword dbname [tbname [tbname...]] > backup_file.sql

mysqldump -uroot -p atguigu > atguigu.sql # The backup file is stored in the current directory

mysqldump -uroot -p atguigudb1 > /var/lib/mysql/atguigu.sql

2.2 Back up all databases

mysqldump -uroot -pxxxxxx --all-databases > all_database.sql

mysqldump -uroot -pxxxxxx -A > all_database.sql

2.3 Back up some of the databases

mysqldump -u user -h host -p --databases [dbname1 [dbname2...]] > backup_file.sql

mysqldump -uroot -p --databases atguigu atguigu12 > two_database.sql

mysqldump -uroot -p -B atguigu atguigu12 > two_database.sql

2.4 Back up some tables

mysqldump -u user -h host -p dbname [tbname1 [tbname2...]] > backup_file.sql

mysqldump -uroot -p atguigu book > book.sql

# Back up multiple tables

mysqldump -uroot -p atguigu book account > 2_tables_bak.sql

2.5 Back up part of the data in a single table

mysqldump -uroot -p atguigu student --where="id < 10 " > student_part_id10_low_bak.sql

2.6 Exclude some tables from the backup

mysqldump -uroot -p atguigu --ignore-table=atguigu.student > no_stu_bak.sql

2.7 Back up only the structure or only the data

- Back up the structure only

mysqldump -uroot -p atguigu --no-data > atguigu_no_data_bak.sql

- Back up the data only

mysqldump -uroot -p atguigu --no-create-info > atguigu_no_create_info_bak.sql

2.8 Back up stored procedures, functions, and events

mysqldump -uroot -p -R -E --databases atguigu > fun_atguigu_bak.sql

3. Restoring data with the mysql command

mysql -u root -p [dbname] < backup.sql

3.1 Restore a single database from a single database backup

# The backup file contains the statement to create the database

mysql -uroot -p < atguigu.sql

# The backup file does not contain the statement to create the database

mysql -uroot -p atguigu4 < atguigu.sql

3.2 Restore from a full backup

mysql -u root -p < all.sql

3.3 Restore a single library from a full backup

sed -n '/^-- Current Database: `atguigu`/,/^-- Current Database: `/p' all_database.sql > atguigu.sql

# After the separation, import atguigu.sql to restore the single database
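To see what the sed range above selects, it can be tried on a tiny stand-in for a dump file. The sketch below is only an illustration: the sample content is invented and far shorter than a real mysqldump output, and the wrapper `extract_db` is a hypothetical helper, not a MySQL tool.

```shell
#!/usr/bin/env bash
# Build a small sample file that mimics the "-- Current Database:" headers
# written by mysqldump --all-databases (content invented for this example).
sample=$(mktemp)
cat > "$sample" <<'EOF'
-- Current Database: `atguigu`
CREATE DATABASE `atguigu`;
USE `atguigu`;
CREATE TABLE `book` (id INT);
-- Current Database: `atguigu12`
CREATE DATABASE `atguigu12`;
EOF

# Hypothetical wrapper around the sed command: print everything from the
# header of database $1 up to and including the next database header in file $2.
extract_db() {
  sed -n "/^-- Current Database: \`$1\`/,/^-- Current Database: \`/p" "$2"
}

extract_db atguigu "$sample"
```

The range ends at the next `-- Current Database:` line, so the extracted text also contains the header comment of the following database; it is only a comment and does not affect the import. If the target database is the last one in the dump, the range simply runs to the end of the file.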

3.4 Restore a single table from a single database backup

cat atguigu.sql | sed -e '/./{H;$!d;}' -e 'x;/CREATE TABLE `class`/!d;q' > class_structure.sql

cat atguigu.sql | grep --ignore-case 'insert into `class`' > class_data.sql

# Use shell commands to separate the CREATE TABLE statement from the INSERT statements, then import them one by one to complete the recovery

use atguigu;

mysql> source class_structure.sql;

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> source class_data.sql;

Query OK, 1 row affected (0.01 sec)

4. Export and import of tables

4.1 Export of tables

1. Export a text file with SELECT…INTO OUTFILE

SHOW GLOBAL VARIABLES LIKE '%secure%';

SELECT * FROM account INTO OUTFILE "/var/lib/mysql-files/account.txt";

2. Export a text file with the mysqldump command

mysqldump -uroot -p -T "/var/lib/mysql-files/" atguigu account

# or

mysqldump -uroot -p -T "/var/lib/mysql-files/" atguigu account --fields-terminated-by=',' --fields-optionally-enclosed-by='\"'

3. Export a text file with the mysql command

mysql -uroot -p --execute="SELECT * FROM account;" atguigu > "/var/lib/mysql-files/account.txt"

4.2 Table import

1. Import a text file with LOAD DATA INFILE

LOAD DATA INFILE '/var/lib/mysql-files/account_0.txt' INTO TABLE atguigu.account;

# or

LOAD DATA INFILE '/var/lib/mysql-files/account_1.txt' INTO TABLE atguigu.account FIELDS TERMINATED BY ',' ENCLOSED BY '\"';

2. Import a text file with mysqlimport

mysqlimport -uroot -p atguigu '/var/lib/mysql-files/account.txt' --fields-terminated-by=',' --fields-optionally-enclosed-by='\"'

边栏推荐

- 摩根大通期货开户安全吗?摩根大通期货公司开户方法是什么?

- vim 从嫌弃到依赖(22)——自动补全

- Raytheon technology rushes to the Beijing stock exchange and plans to raise 540million yuan

- 做空蔚来的灰熊,以“碰瓷”中概股为生?

- OpenSSL client programming: SSL session failure caused by an insignificant function

- Is JPMorgan futures safe to open an account? What is the account opening method of JPMorgan futures company?

- 22-06-26周总结

- GaussDB(for MySQL) :Partial Result Cache,通过缓存中间结果对算子进行加速

- [antenna] [3] some shortcut keys of CST

- 微信公众号订阅消息 wx-open-subscribe 的实现及闭坑指南

猜你喜欢

《性能之巅第2版》阅读笔记(五)--file-system监测

Implementation of deploying redis sentry in k8s

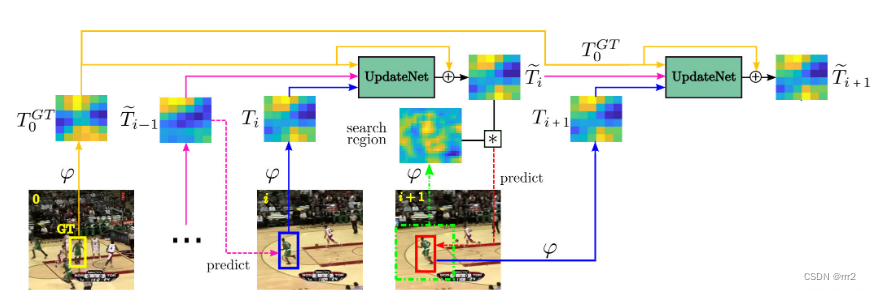

【目标跟踪】|模板更新 时间上下文信息(UpdateNet)《Learning the Model Update for Siamese Trackers》

Redis high availability principle

【目标跟踪】|STARK

MySQL高级篇4

精益六西格玛项目辅导咨询:集中辅导和点对点辅导两种方式

Don't ask me again why MySQL hasn't left the index? For these reasons, I'll tell you all

MySQL 服务正在启动 MySQL 服务无法启动解决途径

An intrusion detection model

随机推荐

Pnas: brain and behavior changes of social anxiety patients with empathic embarrassment

It's settled! 2022 Hainan secondary cost engineer examination time is determined! The registration channel has been opened!

微信小程序01-底部导航栏设置

Skywalking 6.4 distributed link tracking usage notes

STM32F411 SPI2输出错误,PB15无脉冲调试记录【最后发现PB15与PB14短路】

TS报错 Don‘t use `object` as a type. The `object` type is currently hard to use

"Qt+pcl Chapter 6" point cloud registration ICP Series 6

MySQL 服务正在启动 MySQL 服务无法启动解决途径

Microservice tracking SQL (support Gorm query tracking under isto control)

Using swiper to make mobile phone rotation map

厦门灌口镇田头村特色农产品 甜头村特色农产品蚂蚁新村7.1答案

Implementation of wechat web page subscription message

SAP s/4hana: one code line, many choices

智能运维实战:银行业务流程及单笔交易追踪

Task.Run(), Task.Factory.StartNew() 和 New Task() 的行为不一致分析

张驰咨询:锂电池导入六西格玛咨询降低电池容量衰减

22-06-26周总结

[Cloudera][ImpalaJDBCDriver](500164)Error initialized or created transport for authentication

摩根大通期货开户安全吗?摩根大通期货公司开户方法是什么?

远程办公经验?来一场自问自答的介绍吧~ | 社区征文