Five commonly used feature selection methods: essential reading when getting started with machine learning

2022-06-30 17:22:00 【Xinyi 2002】

Many machine learning books say little about feature selection, because the problems it addresses are usually treated as a by-product of the learning process and are seldom discussed separately.

Feature selection is nevertheless an important data preprocessing step. It serves two main purposes:

Reduce the number of features (dimensionality reduction), which improves generalization and reduces overfitting

Improve understanding of the features and their values

Good feature selection improves model performance and helps us understand the characteristics and underlying structure of the data, which in turn plays an important role in further improving models and algorithms.

This article uses examples based on Scikit-learn to introduce several common feature selection methods, along with their strengths, weaknesses, and pitfalls.

01

Removing features with low variance

This is probably the simplest feature selection method. Suppose a feature takes only the values 0 and 1, and in 95% of all input samples its value is 1. Such a feature can be considered to carry little information; if 100% of the values are 1, it carries none. The method applies only to discrete features; continuous variables must be discretized first. In practice it is also unlikely that more than 95% of the samples share a single value for a feature, so the method is simple but of limited use. It works best as a preprocessing step: first remove the features with little variation, then choose one of the methods described below for further selection.
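As a minimal sketch of this idea, scikit-learn's VarianceThreshold can drop near-constant features. The toy matrix and the 90% cut-off below are assumptions chosen for illustration; the sketch relies on the fact that a binary feature that is 1 with probability p has variance p(1 - p).

```python
import numpy as np
from sklearn.feature_selection import VarianceThreshold

# Toy binary data (hypothetical): column 0 is 1 in 19 of 20 rows (95%),
# column 1 is balanced, column 2 is constant.
X = np.zeros((20, 3), dtype=int)
X[:19, 0] = 1
X[::2, 1] = 1
X[:, 2] = 1

# Dropping features that take one value in more than 90% of the rows
# means dropping variances below 0.9 * (1 - 0.9).
selector = VarianceThreshold(threshold=0.9 * (1 - 0.9))
X_reduced = selector.fit_transform(X)
kept = selector.get_support(indices=True)
print("kept columns:", kept)
```

Only the balanced column survives here; the threshold is a tuning choice, not a fixed rule.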

02

Univariate feature selection

Univariate feature selection tests each feature individually, measures the relationship between that feature and the response variable, and discards poorly scoring features. Statistical tests such as the chi-square test (for classification) or the F-test (for regression) can be used to score the features.

This approach is simple, easy to run, and easy to understand, and it is usually good for gaining an understanding of the data (though not necessarily effective for optimizing the feature set or improving generalization). It has many improved versions and variants.

2.1 Pearson correlation coefficient

The Pearson correlation coefficient is one of the simplest ways to understand the relationship between a feature and the response variable. It measures the linear correlation between two variables and takes values in [-1, 1]: -1 means perfect negative correlation (as one variable decreases, the other increases), +1 means perfect positive correlation, and 0 means no linear correlation.

Pearson correlation is fast and easy to compute, and it is often one of the first things to run once the data is available (after cleaning and feature extraction). Scipy's pearsonr method computes both the correlation coefficient and the p-value.

import numpy as np

from scipy.stats import pearsonr

np.random.seed(0)

size = 300

x = np.random.normal(0, 1, size)

print("Lower noise", pearsonr(x, x + np.random.normal(0, 1, size)))

print("Higher noise", pearsonr(x, x + np.random.normal(0, 10, size)))

In this example we compare a variable with noisy copies of itself. When the noise is low, the correlation is strong and the p-value is very low.

Scikit-learn's f_regression method computes the p-values of all features in one batch, which is very convenient; see sklearn's pipeline.
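A minimal sketch of the batch computation with f_regression follows. The synthetic data (one informative feature, two noise features) is an assumption made for illustration.

```python
import numpy as np
from sklearn.feature_selection import f_regression

rng = np.random.default_rng(0)
X = rng.normal(0, 1, (300, 3))
# Hypothetical response: only the first feature matters, the rest is noise.
y = 2 * X[:, 0] + rng.normal(0, 1, 300)

# f_regression returns one F-statistic and one p-value per feature.
f_scores, p_values = f_regression(X, y)
for name, f, p in zip(["x0", "x1", "x2"], f_scores, p_values):
    print(name, round(float(f), 2), round(float(p), 4))
```

The informative feature should stand out with a large F-statistic and a p-value close to 0.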

One obvious drawback of the Pearson correlation coefficient as a feature ranking mechanism is that it is sensitive only to linear relationships. If the relationship is nonlinear, the Pearson correlation can be close to 0 even when one variable completely determines the other.

x = np.random.uniform(-1, 1, 100000)

print(pearsonr(x, x**2)[0])

For more examples of this kind, refer to the sample plots. Also, judging only by the value of the correlation coefficient can be very misleading, as Anscombe's quartet shows; it is best to visualize the data to avoid drawing wrong conclusions.

2.2 Mutual information and maximal information coefficient (MIC)

The classic mutual information formula is:

I(X; Y) = \sum_{y \in Y} \sum_{x \in X} p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)}

Mutual information is inconvenient to use directly for feature selection:

It is not a metric and cannot be normalized, so results on different data sets cannot be compared;

It is inconvenient to compute for continuous variables (X and Y are sets, and x, y are discrete values), so variables usually have to be discretized first, and the mutual information result is very sensitive to the discretization.
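The sensitivity to discretization can be seen with a simple plug-in estimate. The sketch below is an illustration under stated assumptions: the helper mutual_information and the equal-width binning are choices made here, not part of any library discussed in this article.

```python
import numpy as np

def mutual_information(x, y, bins):
    """Plug-in estimate of I(X; Y) in nats after equal-width binning."""
    counts, _, _ = np.histogram2d(x, y, bins=bins)
    p_xy = counts / counts.sum()              # joint distribution
    p_x = p_xy.sum(axis=1, keepdims=True)     # marginal of x, shape (bins, 1)
    p_y = p_xy.sum(axis=0, keepdims=True)     # marginal of y, shape (1, bins)
    nz = p_xy > 0                             # empty cells contribute 0
    return float(np.sum(p_xy[nz] * np.log(p_xy[nz] / (p_x @ p_y)[nz])))

rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 10000)
mi_coarse = mutual_information(x, x ** 2, bins=5)
mi_fine = mutual_information(x, x ** 2, bins=50)
mi_noise = mutual_information(x, rng.uniform(-1, 1, 10000), bins=5)
print(mi_coarse, mi_fine, mi_noise)
```

The same pair of variables gets a noticeably different score with 5 bins than with 50, which is exactly why raw mutual information is hard to compare across features.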

The maximal information coefficient overcomes both problems. It first searches for an optimal discretization and then converts the mutual information value into a normalized measure with values in [0, 1]. minepy provides an MIC implementation.

Returning to the y = x^2 example, MIC computes a value of 1, the maximum.

from minepy import MINE

m = MINE()

x = np.random.uniform(-1, 1, 10000)

m.compute_score(x, x**2)

print(m.mic())

1.0

The statistical power of MIC has been questioned: when the null hypothesis does not hold, the MIC statistic can be affected. The problem does not occur on some data sets but does on others.

2.3 Distance correlation

Distance correlation was designed to overcome this weakness of the Pearson correlation coefficient. In the x and x^2 example, a Pearson correlation of 0 does not let us conclude that the two variables are independent (they may be nonlinearly related); but if the distance correlation is 0, the two variables are independent.

The energy package in R provides an implementation of distance correlation, and a Python implementation is available as a gist.

# R code
> library(energy)
> x = runif(1000, -1, 1)
> dcor(x, x**2)
[1] 0.4943864

Although MIC and distance correlation exist, the Pearson correlation coefficient remains irreplaceable when the relationship between variables is close to linear. First, it is fast to compute, which matters on large data sets. Second, its range is [-1, 1], whereas MIC and distance correlation lie in [0, 1]. This lets Pearson correlation express richer relationships: the sign gives the direction of the relationship and the absolute value gives its strength. Of course, Pearson correlation is only meaningful when the relationship between the two variables is monotonic.
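For readers working in Python, distance correlation for the x and x^2 example can be sketched directly in NumPy. This is a minimal O(n^2) illustration written for this article, not the gist mentioned above and not the energy package's code; the function name distance_correlation is an assumption.

```python
import numpy as np

def distance_correlation(x, y):
    """Sample distance correlation of two 1-D arrays of equal length."""
    x = np.asarray(x, dtype=float)[:, None]
    y = np.asarray(y, dtype=float)[:, None]
    a = np.abs(x - x.T)   # pairwise distances within x
    b = np.abs(y - y.T)   # pairwise distances within y
    # Double-center each distance matrix (subtract row and column means).
    A = a - a.mean(axis=0) - a.mean(axis=1)[:, None] + a.mean()
    B = b - b.mean(axis=0) - b.mean(axis=1)[:, None] + b.mean()
    dcov2_xy = (A * B).mean()   # squared distance covariance
    dvar2_x = (A * A).mean()    # squared distance variance of x
    dvar2_y = (B * B).mean()
    return float(np.sqrt(dcov2_xy / np.sqrt(dvar2_x * dvar2_y)))

rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 1000)
dcor_value = distance_correlation(x, x ** 2)
pearson_value = np.corrcoef(x, x ** 2)[0, 1]
print(dcor_value, pearson_value)
```

The Pearson value stays near 0 while the distance correlation is clearly positive, in line with the R result above.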

2.4 Model-based ranking

The idea is to take the machine learning algorithm you intend to use and build a predictive model for each individual feature against the response variable. In fact, the Pearson correlation coefficient is equivalent to the standardized regression coefficient in linear regression. If the relationship between a feature and the response variable is nonlinear, you can use a tree-based method (decision tree, random forest) or a linear model with basis expansion. Tree-based methods are the easier option because they model nonlinear relationships well and need little tuning. The main thing to watch for is overfitting, so the trees should not be too deep, and cross-validation should be used.

The following example performs univariate selection with sklearn's random forest regressor on the Boston housing data set:

import numpy as np
# sklearn.cross_validation was removed in scikit-learn 0.20; use model_selection
from sklearn.model_selection import cross_val_score, ShuffleSplit
# Note: load_boston was removed in scikit-learn 1.2, so this needs an older release
from sklearn.datasets import load_boston
from sklearn.ensemble import RandomForestRegressor

# Load the Boston housing data set as an example
boston = load_boston()
X = boston["data"]
Y = boston["target"]
names = boston["feature_names"]

rf = RandomForestRegressor(n_estimators=20, max_depth=4)
scores = []
# Score each feature on its own with cross-validated R^2
for i in range(X.shape[1]):
    score = cross_val_score(rf, X[:, i:i+1], Y, scoring="r2",
                            cv=ShuffleSplit(n_splits=3, test_size=.3))
    scores.append((round(np.mean(score), 3), names[i]))
print(sorted(scores, reverse=True))
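The same per-feature ranking can be sketched on synthetic data, which avoids the dependency on the Boston data set. The data below is hypothetical: the response depends on x0 only, and nonlinearly, so a linear correlation would miss it while a shallow forest should not.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import ShuffleSplit, cross_val_score

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, (300, 3))
# Hypothetical target: quadratic in x0, independent of x1 and x2.
y = X[:, 0] ** 2 + rng.normal(0, 0.05, 300)

rf = RandomForestRegressor(n_estimators=20, max_depth=4, random_state=0)
cv = ShuffleSplit(n_splits=3, test_size=0.3, random_state=0)
scores = []
for i in range(X.shape[1]):
    # Fit and score a model that sees only feature i.
    r2 = cross_val_score(rf, X[:, i:i + 1], y, scoring="r2", cv=cv)
    scores.append((round(float(np.mean(r2)), 3), "x%d" % i))
ranking = sorted(scores, reverse=True)
print(ranking)
```

Limiting max_depth and scoring on held-out splits are the two safeguards against overfitting mentioned above.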

03

Linear models and regularization

Univariate feature selection measures the relationship between each feature and the response variable independently. The other mainstream approach is based on machine learning models. Some learning methods have a built-in mechanism for scoring features, or can easily be applied to feature selection: regression models, SVMs, decision trees, random forests, and so on. As an aside, this approach is called the wrapper type in some places, presumably meaning that the feature ranking model and the learning model are coupled together; the corresponding non-wrapper approach is called the filter type.

The following shows how to use the coefficients of a regression model to select features. The more important a feature, the larger its coefficient in the model; the less related a feature is to the output variable, the closer its coefficient is to 0. On data with little noise, or when the number of samples is much larger than the number of features, and the features are relatively independent, even the simplest linear regression model achieves very good results.

from sklearn.linear_model import LinearRegression

import numpy as np

np.random.seed(0)

size = 5000

#A dataset with 3 features

X = np.random.normal(0, 1, (size, 3))

#Y = X0 + 2*X1 + noise

Y = X[:,0] + 2*X[:,1] + np.random.normal(0, 2, size)

lr = LinearRegression()

lr.fit(X, Y)

# A helper method for pretty-printing linear models
def pretty_print_linear(coefs, names=None, sort=False):
    if names is None:
        names = ["X%s" % x for x in range(len(coefs))]
    lst = zip(coefs, names)
    if sort:
        # Largest absolute coefficient first
        lst = sorted(lst, key=lambda x: -np.abs(x[0]))
    return " + ".join("%s * %s" % (round(coef, 3), name)
                      for coef, name in lst)

print("Linear model:", pretty_print_linear(lr.coef_))

In this example, despite some noise in the data, the model reflects the underlying structure of the data well. Of course, this is also because the problem is well suited to a linear model: the relationships between the features and the response variable are all linear, and the features are independent.

In many real data sets there are multiple correlated features. The model then becomes unstable: small changes in the data can cause large changes in the model (that is, in its coefficients or parameters, W), which makes the model hard to interpret. This phenomenon is called multicollinearity. For example, suppose the true model of a data set is Y = X1 + X2, and what we observe is Y' = X1 + X2 + e, where e is noise. If X1 and X2 are linearly related, say X1 is approximately equal to X2, then because of the noise e the model we learn may not be Y = X1 + X2 but Y = 2X1 or Y = -X1 + 3X2.

In the following example, three strongly correlated features are built by adding a little noise to the same underlying variable, and a linear regression is fitted to them.

from sklearn.linear_model import LinearRegression

size = 100

np.random.seed(seed=5)

X_seed = np.random.normal(0, 1, size)

X1 = X_seed + np.random.normal(0, .1, size)

X2 = X_seed + np.random.normal(0, .1, size)

X3 = X_seed + np.random.normal(0, .1, size)

Y = X1 + X2 + X3 + np.random.normal(0,1, size)

X = np.array([X1, X2, X3]).T

lr = LinearRegression()

lr.fit(X,Y)

print("Linear model:", pretty_print_linear(lr.coef_))

The coefficients sum to roughly 3, so the learned model should still predict well. However, if we read feature importance literally from the coefficients, X3 appears to have a strong positive effect on the output and X1 a negative one, when in fact all three features influence the output equally.

The same method and routine apply to similar linear models, such as logistic regression.

3.1 Regularized models

Regularization adds extra constraints or penalty terms to an existing model (its loss function) to prevent overfitting and improve generalization. The loss function changes from E(X,Y) to E(X,Y) + alpha||w||, where w is the vector of model coefficients (also called parameters in some places), ||·|| is usually the L1 or L2 norm, and alpha is a tunable parameter that controls the strength of regularization. When applied to linear models, L1 and L2 regularization are also known as Lasso and Ridge.

3.2 L1 regularization / Lasso

L1 regularization adds the l1 norm of the coefficient vector w to the loss function as a penalty term. Because every non-zero coefficient adds to the penalty, the coefficients of weak features are forced to 0. L1 regularization therefore tends to produce very sparse models (many coefficients w are exactly 0), which makes it a good feature selection method.

Scikit-learn provides Lasso for regression and L1-penalized logistic regression for classification.

The following example runs Lasso on the Boston housing data, with the parameter alpha chosen by grid search.

from sklearn.linear_model import Lasso

from sklearn.preprocessing import StandardScaler

from sklearn.datasets import load_boston

boston = load_boston()

scaler = StandardScaler()

X = scaler.fit_transform(boston["data"])

Y = boston["target"]

names = boston["feature_names"]

lasso = Lasso(alpha=.3)

lasso.fit(X, Y)

print("Lasso model: ", pretty_print_linear(lasso.coef_, names, sort = True))

As you can see, the coefficients of many features are 0. Increasing alpha further makes the model sparser and sparser, that is, more and more coefficients become 0.
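The effect of alpha on sparsity can be sketched on synthetic data. The data set (two informative features out of ten) and the three alpha values below are assumptions chosen for illustration, not tuned settings.

```python
import numpy as np
from sklearn.linear_model import Lasso

rng = np.random.default_rng(0)
X = rng.normal(0, 1, (200, 10))
# Hypothetical response: only x0 and x1 matter.
y = 3 * X[:, 0] + 2 * X[:, 1] + rng.normal(0, 1, 200)

nonzero_counts = {}
for alpha in [0.01, 0.1, 1.0]:
    lasso = Lasso(alpha=alpha).fit(X, y)
    # Count the coefficients that survive the L1 penalty.
    nonzero_counts[alpha] = int(np.sum(lasso.coef_ != 0))
    print("alpha=%s non-zero coefficients: %d" % (alpha, nonzero_counts[alpha]))
```

With a weak penalty most coefficients stay non-zero; with a strong one only the strongest features remain.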

However, like unregularized linear models, L1 regularization is unstable: when the feature set contains correlated features, small changes in the data can lead to very different models.

3.3 L2 regularization / Ridge regression

L2 regularization adds the L2 norm of the coefficient vector to the loss function. Because the coefficients enter the penalty quadratically, L2 differs from L1 in many ways. Most notably, L2 regularization spreads the coefficient values out more evenly, so correlated features receive similar coefficients. Take Y = X1 + X2 again and assume X1 and X2 are strongly correlated. Under L1 regularization, the penalty is the same, 2 alpha, whether the learned model is Y = X1 + X2 or Y = 2X1. Under L2, the penalty is 2 alpha for the first model but 4 alpha for the second. So when the sum of the coefficients is fixed, the penalty is smallest when the coefficients are equal, which is why L2 pushes the coefficients toward the same value.
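The penalty arithmetic above can be checked in a few lines. The helper names l1_penalty and l2_penalty are introduced here for illustration, with alpha set to 1 and the L2 penalty taken as the squared norm.

```python
import numpy as np

alpha = 1.0
w_shared = np.array([1.0, 1.0])   # model Y = X1 + X2
w_single = np.array([2.0, 0.0])   # model Y = 2 * X1

def l1_penalty(w, alpha):
    # alpha times the sum of absolute coefficients
    return alpha * np.sum(np.abs(w))

def l2_penalty(w, alpha):
    # alpha times the sum of squared coefficients
    return alpha * np.sum(w ** 2)

print("L1:", l1_penalty(w_shared, alpha), l1_penalty(w_single, alpha))
print("L2:", l2_penalty(w_shared, alpha), l2_penalty(w_single, alpha))
```

L1 cannot tell the two models apart, while L2 charges twice as much for putting all the weight on one feature.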

L2 regularization is thus a stable model for feature selection: unlike with L1, the coefficients do not fluctuate with small changes in the data. The value L2 offers therefore differs from that of L1: it is more useful for feature understanding, since features with strong predictive power receive non-zero coefficients.

Returning to the example of 3 correlated features, we run it 10 times with different random seeds to compare the stability of unregularized linear regression and L2 regularization.

import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

size = 100
# We run the method 10 times with different random seeds
for i in range(10):
    print("Random seed %s" % i)
    np.random.seed(seed=i)
    X_seed = np.random.normal(0, 1, size)
    X1 = X_seed + np.random.normal(0, .1, size)
    X2 = X_seed + np.random.normal(0, .1, size)
    X3 = X_seed + np.random.normal(0, .1, size)
    Y = X1 + X2 + X3 + np.random.normal(0, 1, size)
    X = np.array([X1, X2, X3]).T

    lr = LinearRegression()
    lr.fit(X, Y)
    print("Linear model:", pretty_print_linear(lr.coef_))

    ridge = Ridge(alpha=10)
    ridge.fit(X, Y)
    print("Ridge model:", pretty_print_linear(ridge.coef_))

As you can see, the models (coefficients) obtained by linear regression vary a lot across the data sets, whereas for the L2-regularized model the coefficients are very stable, differ little, and are all close to 1, reflecting the underlying structure of the data.

04

Random forests

Random forests are accurate, robust, and easy to use, which makes them one of the most popular machine learning algorithms. They provide two methods of feature selection: mean decrease impurity and mean decrease accuracy.

4.1 Mean decrease impurity

A random forest consists of multiple decision trees. Each node in a decision tree is a condition on a single feature, intended to split the data set in two so that similar response values end up in the same subset. The (locally optimal) condition is chosen using impurity. For classification, Gini impurity or information gain is typically used; for regression, variance or a least-squares fit. When training a decision tree, we can compute how much each feature decreases the tree's impurity. For a forest, the impurity decrease of each feature can be averaged over the trees, and this average is used as the feature's score.
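To make the impurity decrease of a single split concrete, here is a small worked example with Gini impurity. The helper names gini and impurity_decrease and the four-sample data set are assumptions made for illustration; a real forest averages such decreases over many nodes and trees.

```python
import numpy as np

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def impurity_decrease(labels, goes_left):
    """Parent impurity minus the size-weighted impurity of the two children."""
    n = len(labels)
    left, right = labels[goes_left], labels[~goes_left]
    weighted = len(left) / n * gini(left) + len(right) / n * gini(right)
    return gini(labels) - weighted

labels = np.array([0, 0, 1, 1])
perfect_split = np.array([True, True, False, False])   # separates the classes
useless_split = np.array([True, False, True, False])   # mixes them equally

print(impurity_decrease(labels, perfect_split))  # 0.5
print(impurity_decrease(labels, useless_split))  # 0.0
```

A feature whose splits look like the first case accumulates a high score; one whose splits look like the second accumulates almost none.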

The following example uses the random-forest-based feature importance measure in sklearn:

from sklearn.datasets import load_boston

from sklearn.ensemble import RandomForestRegressor

import numpy as np

#Load boston housing dataset as an example

boston = load_boston()

X = boston["data"]

Y = boston["target"]

names = boston["feature_names"]

rf = RandomForestRegressor()

rf.fit(X, Y)

print("Features sorted by their score:")

print(sorted(zip(map(lambda x: round(x, 4), rf.feature_importances_), names),

reverse=True))

The feature scores here are in fact the Gini importance. When using impurity-based methods, keep in mind:

The method is biased in favor of variables with more categories;

When several features are correlated, any one of them can serve as the predictor (a good feature). Once one of them is selected, the importance of the others drops sharply, because the impurity they could remove has already been removed by the selected feature. As a result, only the feature selected first gets a high importance, and the other correlated features often get low importance.

When interpreting the data, this can lead to the wrong conclusion that the first selected feature is very important and the rest are not, when in fact their effects on the response variable are very similar (much as with Lasso).

Random selection of features at each node alleviates this problem somewhat, but does not solve it completely. In the following example, X0, X1, and X2 are three correlated variables, and the output is their sum with no noise.

size = 10000

np.random.seed(seed=10)

X_seed = np.random.normal(0, 1, size)

X0 = X_seed + np.random.normal(0, .1, size)

X1 = X_seed + np.random.normal(0, .1, size)

X2 = X_seed + np.random.normal(0, .1, size)

X = np.array([X0, X1, X2]).T

Y = X0 + X1 + X2

rf = RandomForestRegressor(n_estimators=20, max_features=2)

rf.fit(X, Y)

# Build a list so that the scores are printed (map() is lazy in Python 3)
print("Scores for X0, X1, X2:",
      [round(x, 3) for x in rf.feature_importances_])

In the computed importances, X1 comes out more than 10 times as important as X2, although their true importance is the same. This happens even though the data set is large and noise-free and 20 trees with random feature selection are used.

Note that unstable scores for correlated features are not unique to random forests; most model-based feature selection methods have this problem.

4.2 Mean decrease accuracy

Another common feature selection method measures the effect of each feature on model accuracy directly. The idea is to permute the values of each feature and measure how much the permutation reduces the accuracy of the model. For unimportant variables, permuting has little effect on accuracy; for important variables, it reduces accuracy noticeably.

Older versions of sklearn do not provide this method directly, but it is easy to implement. We continue with the Boston housing data set.

import numpy as np
from collections import defaultdict
# sklearn.cross_validation was removed in scikit-learn 0.20; use model_selection
from sklearn.model_selection import ShuffleSplit
from sklearn.metrics import r2_score

X = boston["data"]
Y = boston["target"]
rf = RandomForestRegressor()
scores = defaultdict(list)

# Cross-validate the scores on a number of different random splits of the data
for train_idx, test_idx in ShuffleSplit(n_splits=100, test_size=.3).split(X):
    X_train, X_test = X[train_idx], X[test_idx]
    Y_train, Y_test = Y[train_idx], Y[test_idx]
    rf.fit(X_train, Y_train)
    acc = r2_score(Y_test, rf.predict(X_test))
    for i in range(X.shape[1]):
        # Shuffle one column of the test set and record the relative drop in R^2
        X_t = X_test.copy()
        np.random.shuffle(X_t[:, i])
        shuff_acc = r2_score(Y_test, rf.predict(X_t))
        scores[names[i]].append((acc - shuff_acc) / acc)

print("Features sorted by their score:")
print(sorted([(round(np.mean(score), 4), feat)
              for feat, score in scores.items()], reverse=True))

In this example, LSTAT and RM have a large effect on model performance: permuting their values reduces performance by 73% and 57% respectively. Note that although these importances were measured on a model trained with all features, this does not mean that dropping one or more of the important features would reduce performance by as much, because the remaining correlated features can take over the role of a removed feature and keep performance almost unchanged.
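Newer scikit-learn releases (0.22 and later) ship sklearn.inspection.permutation_importance, which implements the same shuffle-and-rescore idea. The sketch below uses hypothetical synthetic data rather than the Boston data set, so the numbers are not comparable with the results above.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
X = rng.normal(0, 1, (400, 3))
# Hypothetical response: x0 dominates, x1 matters a little, x2 is noise.
y = 5 * X[:, 0] + X[:, 1] + rng.normal(0, 0.5, 400)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0)
rf = RandomForestRegressor(n_estimators=30, random_state=0).fit(X_train, y_train)

# Shuffle each column of the held-out data 10 times and average the score drop.
result = permutation_importance(rf, X_test, y_test, n_repeats=10, random_state=0)
for i in np.argsort(result.importances_mean)[::-1]:
    print("x%d" % i, round(float(result.importances_mean[i]), 3))
```

Measuring on held-out data, as here, keeps the importance from rewarding features the model merely memorized.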

05

Two top-level feature selection algorithms

They are called top-level because both are built on top of model-based feature selection methods such as regression and SVM: they build models on different subsets and then aggregate the results to determine the final feature scores.

5.1 Stability selection

Stability selection is a relatively new method that combines subsampling with a selection algorithm, which can be regression, SVM, or a similar method. The main idea is to run the feature selection algorithm on different subsets of the data and of the features, repeat this many times, and aggregate the selection results, for example by counting how often a feature is judged important (the number of times it is selected divided by the number of times it appeared in a tested subset). Ideally, the score of an important feature is close to 100%, somewhat weaker features get non-zero scores, and the most useless features get scores close to 0.

sklearn implements stability selection in randomized Lasso and randomized logistic regression.

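# Note: RandomizedLasso was deprecated in scikit-learn 0.19 and removed in 0.21,
# so this example needs an older release
from sklearn.linear_model import RandomizedLasso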

from sklearn.datasets import load_boston

boston = load_boston()

#using the Boston housing data.

#Data gets scaled automatically by sklearn's implementation

X = boston["data"]

Y = boston["target"]

names = boston["feature_names"]

rlasso = RandomizedLasso(alpha=0.025)

rlasso.fit(X, Y)

print("Features sorted by their score:")

print(sorted(zip(map(lambda x: round(x, 4), rlasso.scores_),

names), reverse=True))

In the example above, the top 3 features have a score of 1.0, meaning they are always selected as useful features (the scores are of course affected by the regularization parameter alpha, but sklearn's randomized Lasso can choose a good alpha automatically). The scores of the following features decrease, but not as sharply as with plain Lasso or with random forests. Stability selection is thus useful both for overcoming overfitting and for understanding the data: in general, good features do not get a score of 0 just because similar, correlated features are present, which is different from Lasso. For feature selection, stability selection is often among the best-performing methods across many data sets and settings.
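The core idea of stability selection can be sketched by hand with plain Lasso on random subsamples. This is a simplified illustration, not a reimplementation of RandomizedLasso (which also randomizes the penalty per feature); the data, alpha=0.2, the half-size subsamples, and the 50 rounds are all assumptions.

```python
import numpy as np
from sklearn.linear_model import Lasso

rng = np.random.default_rng(0)
n_samples, n_features = 200, 6
X = rng.normal(0, 1, (n_samples, n_features))
# Hypothetical response: only x0 and x1 matter.
y = 3 * X[:, 0] + 2 * X[:, 1] + rng.normal(0, 1, n_samples)

n_rounds = 50
selected = np.zeros(n_features)
for _ in range(n_rounds):
    # Fit Lasso on a random half of the data and record which features survive.
    idx = rng.choice(n_samples, size=n_samples // 2, replace=False)
    coef = Lasso(alpha=0.2).fit(X[idx], y[idx]).coef_
    selected += (coef != 0)

# Selection frequency per feature, between 0 and 1.
frequency = selected / n_rounds
print(np.round(frequency, 2))
```

Features that are picked in almost every round are the stable ones; features that appear only occasionally are likely noise.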

5.2 Recursive feature elimination

The main idea of recursive feature elimination (RFE) is to build a model repeatedly (such as an SVM or a regression model), pick the best (or worst) feature each time (for example based on the coefficients), set that feature aside, and repeat the process on the remaining features until all features have been used. The order in which features are eliminated gives their ranking. RFE is therefore a greedy algorithm for finding the best feature subset.

The stability of RFE depends largely on the model used at each iteration. If RFE uses ordinary regression, which is unstable without regularization, then RFE is unstable; if it uses Ridge, whose regularized regression is stable, then RFE is stable.

Sklearn provides RFE for recursive feature elimination, as well as RFECV, which ranks features using cross-validation.

from sklearn.feature_selection import RFE

from sklearn.linear_model import LinearRegression

boston = load_boston()

X = boston["data"]

Y = boston["target"]

names = boston["feature_names"]

#use linear regression as the model

lr = LinearRegression()

#rank all features, i.e continue the elimination until the last one

rfe = RFE(lr, n_features_to_select=1)

rfe.fit(X,Y)

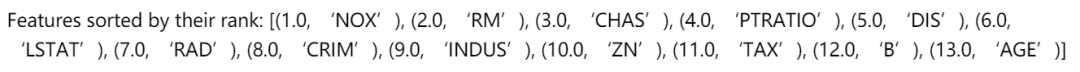

print("Features sorted by their rank:")

print(sorted(zip(map(lambda x: round(x, 4), rfe.ranking_), names)))
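The elimination order is easiest to see on synthetic data where the true coefficients are known. The data below is hypothetical: three features with decreasing influence and one pure noise feature.

```python
import numpy as np
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression

rng = np.random.default_rng(0)
X = rng.normal(0, 1, (500, 4))
# Hypothetical response: x0 > x1 > x2 in influence, x3 is noise.
y = 5 * X[:, 0] + 2 * X[:, 1] + 0.5 * X[:, 2] + rng.normal(0, 0.1, 500)

# Eliminate one feature per iteration until a single feature is left.
rfe = RFE(LinearRegression(), n_features_to_select=1)
rfe.fit(X, y)

# ranking_ is 1 for the last surviving feature; larger means eliminated earlier.
ranking = [(int(r), name) for r, name in zip(rfe.ranking_, ["x0", "x1", "x2", "x3"])]
print(sorted(ranking))
```

Because the features here are independent and on the same scale, the coefficient-based elimination recovers the true order; with correlated features the order can be much less reliable, as discussed above.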

06

A complete example

Next we compare all the methods discussed in this article on the Friedman #1 regression data set. The data is generated by the formula:

y = 10 \sin(\pi x_1 x_2) + 20 (x_3 - 0.5)^2 + 10 x_4 + 5 x_5 + e

X1 to X5 are drawn from a uniform distribution, and e is a standard normal variable N(0,1). The original data set also contains 5 noise variables X6,…,X10 that are independent of the response variable. We add 4 further variables X11,…,X14, correlated with X1,…,X4 respectively and generated by f(x) = x + N(0, 0.01), which yields correlation coefficients greater than 0.999. Data generated this way shows how the different feature ranking methods behave in the presence of correlated features.

All the feature selection methods are then run on this data, and the scores from each method are normalized to lie between 0 and 1. Since RFE returns a ranking rather than scores, the top 5 features are given a score of 1 and the scores of the remaining features are spread evenly between 0 and 1.

# Note: RandomizedLasso (removed in scikit-learn 0.21) and LinearRegression's
# normalize argument (removed in 1.2) require an older scikit-learn release
from sklearn.linear_model import (LinearRegression, Ridge,
                                  Lasso, RandomizedLasso)
from sklearn.feature_selection import RFE, f_regression
from sklearn.preprocessing import MinMaxScaler
from sklearn.ensemble import RandomForestRegressor
import numpy as np
from minepy import MINE

np.random.seed(0)
size = 750
X = np.random.uniform(0, 1, (size, 14))

# "Friedman #1" regression problem, with one noise draw per sample
Y = (10 * np.sin(np.pi*X[:,0]*X[:,1]) + 20*(X[:,2] - .5)**2 +
     10*X[:,3] + 5*X[:,4] + np.random.normal(0, 1, size))
# Add 4 additional correlated variables (correlated with X1-X4)
X[:,10:] = X[:,:4] + np.random.normal(0, .025, (size,4))

names = ["x%s" % i for i in range(1,15)]
ranks = {}

def rank_to_dict(ranks, names, order=1):
    # Scale the scores of one method to [0, 1] and key them by feature name
    minmax = MinMaxScaler()
    ranks = minmax.fit_transform(order*np.array([ranks]).T).T[0]
    ranks = [round(x, 2) for x in ranks]
    return dict(zip(names, ranks))

lr = LinearRegression(normalize=True)
lr.fit(X, Y)
ranks["Linear reg"] = rank_to_dict(np.abs(lr.coef_), names)

ridge = Ridge(alpha=7)
ridge.fit(X, Y)
ranks["Ridge"] = rank_to_dict(np.abs(ridge.coef_), names)

lasso = Lasso(alpha=.05)
lasso.fit(X, Y)
ranks["Lasso"] = rank_to_dict(np.abs(lasso.coef_), names)

rlasso = RandomizedLasso(alpha=0.04)
rlasso.fit(X, Y)
ranks["Stability"] = rank_to_dict(np.abs(rlasso.scores_), names)

# Stop the search when 5 features are left (they will get equal scores)
rfe = RFE(lr, n_features_to_select=5)
rfe.fit(X, Y)
ranks["RFE"] = rank_to_dict(list(map(float, rfe.ranking_)), names, order=-1)

rf = RandomForestRegressor()
rf.fit(X, Y)
ranks["RF"] = rank_to_dict(rf.feature_importances_, names)

f, pval = f_regression(X, Y, center=True)
ranks["Corr."] = rank_to_dict(f, names)

mine = MINE()
mic_scores = []
for i in range(X.shape[1]):
    mine.compute_score(X[:,i], Y)
    m = mine.mic()
    mic_scores.append(m)
ranks["MIC"] = rank_to_dict(mic_scores, names)

# Average the normalized scores of all methods for each feature
r = {}
for name in names:
    r[name] = round(np.mean([ranks[method][name]
                             for method in ranks.keys()]), 2)

methods = sorted(ranks.keys())
ranks["Mean"] = r
methods.append("Mean")

# Print one tab-separated row per feature
print("\t%s" % "\t".join(methods))
for name in names:
    print("%s\t%s" % (name, "\t".join(map(str, [ranks[method][name] for method in methods]))))

Some interesting findings can be found from the above results :

There is a linear correlation between features , Each feature is evaluated independently , therefore X1,…X4 Score and X11,…X14 Your score is very close , And the noise characteristics X5,…,X10 As expected, there is little relationship between and response variables . Because of the variable X3 It's twice , therefore X3 And response variables ( except MIC outside , No other way to find the relationship ). This method can measure the linear relationship between characteristics and response variables , However, if you want to select high-quality features to improve the generalization ability of the model , This method is not particularly awesome , Because all the high-quality features will inevitably be selected twice .

Lasso Be able to pick out some high-quality features , At the same time, let the coefficients of other features tend to 0. It's useful when you need to reduce the number of features , But it is not easy to use for data understanding .( For example, in the result table ,X11,X12,X13 All the scores are good 0, It seems that they have no strong connection with the output variables , But it's not )

MIC treats all features "equally", much like the correlation coefficient. In addition, it is able to find the relationship between X3 and the response variable.

The impurity-based ranking from random forest is aggressive: scores drop sharply after the top few features. In the table, the third-ranked feature already scores about 4 times lower than the top one. The other feature selection methods do not drop off so steeply.

Ridge regression spreads the coefficients evenly across correlated variables. In the table, the scores of X11,…,X14 are very close to those of X1,…,X4.
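Why Ridge spreads the weight can be seen from its closed-form solution w = (XᵀX + λI)⁻¹Xᵀy. The sketch below is an illustrative toy case, not the experiment from the table: it assumes two identical copies of one feature, no intercept, and a noiseless response y = 4x. The names `lam`, `w_a`, and `w_b` are made up for the example.

```python
import random

rng = random.Random(0)  # fixed seed so the run is repeatable
x = [rng.gauss(0.0, 1.0) for _ in range(200)]
y = [4.0 * v for v in x]  # the true coefficient on x is 4

# Two identical columns x_a = x_b = x, so every entry of X^T X equals s
# and both entries of X^T y equal t.
s = sum(v * v for v in x)
t = sum(v * w for v, w in zip(x, y))
lam = 1.0  # ridge penalty strength (assumed value)

# Solve (X^T X + lam * I) w = X^T y for the 2x2 case with Cramer's rule.
a, b = s + lam, s
det = a * a - b * b
w_a = (t * a - b * t) / det
w_b = (a * t - b * t) / det

print("w_a = %.3f, w_b = %.3f" % (w_a, w_b))
```

By symmetry both copies receive the same coefficient, each a little under half of the true value 4. For a fixed total weight, the L2 penalty is smallest when the weight is split evenly, which matches the near-identical scores of X1,…,X4 and X11,…,X14.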

Stability selection often offers a good compromise between understanding the data and picking out high-quality features, as the result table shows. Like Lasso, it finds the best-performing features (X1, X2, X4, X5); at the same time, variables that are strongly correlated with them also receive relatively high scores.

07

Summary

For understanding the data, its structure, and its characteristics, univariate feature selection is a very good choice. It can also be used to rank features for model optimization, but it cannot detect redundancy (for example, it cannot pick only the best feature out of a subset of strongly correlated features), so other methods are better suited to that task.
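The redundancy problem can be shown with a small synthetic case. This is a sketch under stated assumptions rather than a general result: the features `a`, `a_copy`, and `b`, the response coefficients, and the top-2 cutoff are all invented for the illustration, and the score is the absolute Pearson correlation computed by a hand-written helper.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(1)  # fixed seed so the run is repeatable
n = 1000
a = [rng.gauss(0, 1) for _ in range(n)]
a_copy = [v + 0.01 * rng.gauss(0, 1) for v in a]  # near-duplicate of a
b = [rng.gauss(0, 1) for _ in range(n)]           # independent and informative
y = [3 * va + 2 * vb + 0.5 * rng.gauss(0, 1) for va, vb in zip(a, b)]

# Score every feature on its own, then keep the two best.
features = {"a": a, "a_copy": a_copy, "b": b}
scores = {name: abs(pearson(col, y)) for name, col in features.items()}
top2 = sorted(scores, key=scores.get, reverse=True)[:2]

print(scores)
print("top 2 by univariate score:", top2)
```

Both slots go to `a` and its near-duplicate, while `b`, which carries information the other two do not, is left out. Scoring each feature in isolation gives the method no way to notice that `a_copy` adds nothing once `a` is selected.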

Regularized linear models are a powerful tool for both feature understanding and feature selection. L1 regularization produces sparse models, which is very useful for selecting a feature subset. L2 regularization is more stable than L1, and because useful features tend to receive non-zero coefficients, it is well suited to understanding the data. Since the relationship between the response variable and the features is often nonlinear, basis expansion can be used to transform the features into a more suitable space, after which a simple linear model can be considered.

Random forest is a very popular feature selection method. It is easy to use and generally needs no feature engineering, parameter tuning, or other tedious steps, and many toolkits provide the mean decrease impurity measure. It has two main problems: first, important features may receive very low scores (the correlated-features problem); second, the method favors features with more categories (the bias problem). Even so, it is well worth trying in your application.

Feature selection is useful in many machine learning and data mining scenarios. Before using it, be clear about your goal, and then find the method that fits your task. When selecting features to improve model performance, cross-validation can be used to check whether one method is better than another.

When using feature selection to understand data, be careful: the stability of the feature selection model is very important, and an unstable model can easily lead to wrong conclusions. It helps to subsample the data and run the feature selection algorithm on each subset. If the results are consistent across subsets, the conclusions drawn from the data set can be considered credible, and the results of the feature selection model can be used to understand the data.
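The resampling check described above can be sketched as follows. This is a simplified illustration, not the stability selection procedure used for the table: the selector is a plain top-k ranking by absolute Pearson correlation, and the data, the helper `top_k_features`, the number of rounds, and the half-size subsamples are all assumptions made for the example.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def top_k_features(rows, targets, k):
    """Indices of the k features with the largest |Pearson r| to the target."""
    scores = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        scores.append((abs(pearson(column, targets)), j))
    scores.sort(reverse=True)
    return {j for _, j in scores[:k]}


rng = random.Random(42)  # fixed seed so the run is repeatable
n_samples, n_features = 300, 6
X = [[rng.random() for _ in range(n_features)] for _ in range(n_samples)]
# Hypothetical data: only features 0 and 1 drive the response.
y = [3 * row[0] + 2 * row[1] + rng.gauss(0, 0.5) for row in X]

n_rounds, k = 30, 2
counts = [0] * n_features
for _ in range(n_rounds):
    # Draw a random half of the samples without replacement.
    idx = rng.sample(range(n_samples), n_samples // 2)
    selected = top_k_features([X[i] for i in idx], [y[i] for i in idx], k)
    for j in selected:
        counts[j] += 1

# Fraction of rounds in which each feature was selected.
freq = [c / n_rounds for c in counts]
print("selection frequency per feature:", freq)
```

Features that are selected in nearly every round can be trusted more than features that appear only occasionally. If the frequencies were spread thinly across many features, that would be a sign that the selector is unstable on this data.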
