当前位置:网站首页>Elastic stack Best Practices Series: a filebeat memory leak analysis and tuning

Elastic stack Best Practices Series: a filebeat memory leak analysis and tuning

2022-06-21 15:36:00 【Three ignition cycles】

ELK From release 5.0 Then I joined beats After the kit , It's called elastic stack 了 .beats It's a lightweight set of software , It provides us with a simple , Quick way to collect in real time 、 More data to support our analysis . But because of beats All need to be installed in ELK Outside the cluster , On host , Its impact on the performance of the host often becomes the key to consider whether it can be used , Rather than what it provides . Because the stable operation of the business is the core KPI, Other data generated due to operation and maintenance is always a lower priority . There may be many aspects that affect the performance of the host , such as CPU Occupancy rate , Network throughput occupancy , disk IO, Memory, etc. , Here we discuss the problem of memory leakage in detail

filebeat yes beats One of the core components of the suite ( Another core is metricbeat), It is generally used to generate the collected files ( It's mainly the journal ) The program is installed in one place , According to the official advice , yes filebeat It is not recommended to collect NFS( Network shared disk ) Of the data on . When filebeat After running , Must be right cpu, Memory , The network and other resources are consumed , When this consumption can be limited to an acceptable range , I don't think anyone will restrict your use in the production environment filebeat. But if something unexpected happens , For example, it takes up a lot of memory , Then the operation and maintenance team must give priority to the resources to ensure the core business , hold filebeat The process was killed . It's a pity , Memory leaks , from filebeat The birth of has not been completely solved until now , No matter what version ( Abreast of the times 6.5 The version has not been observed yet ) In different scenarios and configurations , There is a problem of too much memory . ad locum , I mainly describe what I met in filebeat 6.0 Problems encountered on .

Problem scenarios and configurations

We use a unified simple configuration to monitor many hosts , It is this simple, undifferentiated configuration , It creates problems . It's not right , It's not right , It's not right !!! The reasonable way is to analyze specific problems , Customized configurations are made for different scenarios .

multiline, Multiline configuration , When the log file does not conform to the specification , Lots of matches pattern When , Memory leaks can occurmax_procs, Limit filebeat The number of processes , It's actually the number of cores , It is recommended to manually set to 1

filebeat.prospectors:

- type: log

enabled: true

paths:

- /qhapp/*/*.log

tail_files: true

multiline.pattern: '^[[:space:]]+|^Caused by:|^.+Exception:|^\d+\serror'

multiline.negate: false

multiline.match: after

fields:

app_id: bi_lass

service: "{{ hostvars[inventory_hostname]['service'] }}"

ip_address: "{{ hostvars[inventory_hostname]['ansible_host'] }}"

topic: qh_app_raw_log

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: false

setup.template.settings:

index.number_of_shards: 3

#index.codec: best_compression

#_source.enabled: false

output.kafka:

enabled: true

hosts: [{{kafka_url}}]

topic: '%{[fields][topic]}'

max_procs: 1Be careful , In the above configuration , Just right cpu The number of cores is limited , There are no special restrictions on memory usage . At the configuration level , influence filebeat There are two main indicators of memory usage :

queue.mem.eventsThe size of the message queue , The default value is 4096, This parameter is in 6.0 The previous version wasspool-size, From the command line , Configure at startupmax_message_bytesThe size of a single message , The default value is 10M

filebeat The largest possible memory footprint is max_message_bytes * queue.mem.events = 40G, Considering this queue It's for storage encode Data from the past ,raw Data is also stored , therefore , Without limiting memory , The maximum memory usage can reach more than 80G.

therefore , The suggestion is to simultaneously filebeat Of CPU And memory .

below , Let's see. , Using the above configuration, under what conditions will memory leaks be observed

Too many monitoring files

For files that generate large amounts of content in real time , Like logs , The common practice is to change the log file rotate, Depending on the strategy , Every once in a while or after reaching a fixed size , Log rotate.

such , A large number of log files may be generated in the file directory .

If we use wildcards , To monitor the directory , be filebeat Will start a lot of harvester Instance to collect files . however , please remember , I'm not saying that this is bound to cause memory leaks , Just a memory leak was observed here , This is not to say that this is the cause of the memory leak .

When filebeat After a few months of operation , Occupied more than 10 individual G Of memory

Very often rotate journal

Another possibility is ,filebeat Only one file is configured for monitoring , such as test2.log, But because of test2.log Ceaseless rotate Issue new files , Although no wildcard is used to collect all the files in this directory , But because linux System is used inode number To uniquely identify the document ,rotate The new document has not changed its inode number, therefore , On the time filebeat It also enables monitoring of multiple files at the same time .

because multiline This leads to excessive memory consumption

multiline.pattern: '^[[:space:]]+|^Caused by:|^.+Exception:|^\d+\serror, For example, this configuration , Beginning with a space or tab line Is an addition to the previous line , It needs to be a multiline mode , Store in the same event among . When the file you are monitoring happens to have a space on each line of the file , Match multiple rows incorrectly , cause filebeat After the analysis , Single event The number of lines reached thousands , The size has reached 10M, And regular expressions are used in this process , Every one of them event The processing of will consume a lot of memory . Because most of the filebeat output It needs to be answered ,buffer these event Will inevitably consume a lot of memory .

Simulation scenario

I won't go into that , Let's make it simple python Code for :

[loggers]

keys=root

[handlers]

keys=NormalHandler

[formatters]

keys=formatter

[logger_root]

level=DEBUG

handlers=NormalHandler

[handler_NormalHandler]

class=logging.handlers.TimedRotatingFileHandler

formatter=formatter

args=('./test2.log', 'S', 10, 200)

[formatter_formatter]

format=%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)sabove , every other 10 second ('S', 'M' = minute ,'D'= God )rotate A file , All in all rotate 200 File .

then , Just look for a log , Keep playing , Here are 330 strip / second

import logging

from logging.config import fileConfig

import os

import time

CURRENT_FOLDER = os.path.dirname(os.path.realpath(__file__))

fileConfig(CURRENT_FOLDER + '/logging.ini')

logger = logging.getLogger()

while True:

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task !")

logger.debug("DEBUG 2018-11-26 09:31:35 com.sunyard.insurance.server.GetImage 43 - Resource request :date=20181126&file_name=/imagedata/imv2/pool1/images/GXTB/2017/11/14/57/06b6bcdd31763b70b20f56c689e51f5e_1/06b6bcdd31763b70b20f56c689e51f5e_2.syd&file_encrypt=0&token=HUtGGG20GH4GAqq209R9tc9UGtAURR0b DEBUG 2018-11-26 09:31:40 com.sunyard.insurance.scheduler.job.DbEroorHandleJob 26 - [ Database operation exception handling JOB] Handling exception files , This machine does not run , Exit task [email protected]#[email protected]#[email protected]#[email protected]#[email protected]#[email protected]#[email protected]#[email protected]#[email protected]#[email protected]#[email protected]#!#@[email protected]##########################################################################################################################################################")

time.sleep(0.03)How to observe filebeat Of memory

stay 6.5 Before the release , We can't get through xpack Of monitoring Function to observe beats The performance of the suite . therefore , What is discussed here is no monitoring when , How do we detect filebeat Performance of . Of course , The simple way is through top,ps Wait for the command of the operating system to view , But these are real-time , Unable to make trend observation , And they are all process level , Can't see filebeat The internal situation is really . therefore , Here's how to pass filebeat 's logs and pprof This tool is used to observe memory usage

adopt filebeat Log

filebeat Document interpretation

Actually filebeat Log , Many parameters have been included for real-time observation filebeat Resource usage of , Here are filebeat A log fragment of ( The log fragment here is 6.0 Version of ,6.5 After the version , The entire log format has changed , from kv The format becomes json Object format ,xpack You can directly log filebeat Of monitoring):

2018-11-02T17:40:01+08:00 INFO Non-zero metrics in the last 30s: beat.memstats.gc_next=623475680 beat.memstats.memory_alloc=391032232 beat.memstats.memory_total=155885103371024 filebeat.events.active=-402 filebeat.events.added=13279 filebeat.events.done=13681 filebeat.harvester.closed=1 filebeat.harvester.open_files=7 filebeat.harvester.running=7 filebeat.harvester.started=2 libbeat.config.module.running=0 libbeat.output.events.acked=13677 libbeat.output.events.batches=28 libbeat.output.events.total=13677 libbeat.outputs.kafka.bytes_read=12112 libbeat.outputs.kafka.bytes_write=1043381 libbeat.pipeline.clients=1 libbeat.pipeline.events.active=0 libbeat.pipeline.events.filtered=4 libbeat.pipeline.events.published=13275 libbeat.pipeline.events.total=13279 libbeat.pipeline.queue.acked=13677 registrar.states.cleanup=1 registrar.states.current=8 registrar.states.update=13681 registrar.writes=28

The parameters are mainly divided into three parts :

beat.*, contain memstats.gc_next,memstats.memory_alloc,memstats.memory_total, This is all beat Indicators for all components , yes filebeat inherited , Mainly memory related , We pay special attention tomemstats.memory_alloc,alloc The more , The more memory it takesfilebeat.*, This part is filebeat Unique indicators , adopt event Relevant indicators , We know huff and puff , adopt harvester, We know how many files are being monitored , Not consumed event The more they pile up ,havester The more you create , The more memory is consumedlibbeat.*, It's also beats Common metrics for components , contain outputs and pipeline Etc . It's important here When outputs When there is a jam , It will directly affect queue Inside event Consumption , Cause memory accumulationregistrar,filebeat Put the status of the monitoring file in registry In the document , When there are many monitoring files , such as 10 m , And there is no reasonable settingclose_inactiveParameters , This file can achieve 100M, After loading memory , Take up memory directly

filebeat Log parsing

Of course , We can't read this log directly , Since we use ELK, It must be ELK Read it . the reason being that kv Format , Very convenient , use logstash Of kv plugin:

filter {

kv {}

}kv Can't specify properties Of type, therefore , We need to specify the template of the index slightly :

PUT _template/template_1

{

"index_patterns": ["filebeat*"],

"settings": {

"number_of_shards": 1

},

"mappings": {

"doc": {

"_source": {

"enabled": false

},

"dynamic_templates": [

{

"longs_as_strings": {

"match_mapping_type": "string",

"path_match": "*beat.*",

"path_unmatch": "*.*name",

"mapping": {

"type": "long"

}

}

}

]

}

}

} The template above , take kv It was resolved that properties all mapping To long type , But pay attention to "path_match": "*beat.*" unable match To registrar Indicators of , Readers can write a more perfect mapping.

such , We can go through kibana Visualization components , Clearly see the process of memory leakage

And the use of resources :

After visualizing the information , We can clearly see that , Memory mutations and ERR It happens at the same time

That is, the following error:

2018-11-27T09:05:44+08:00 ERR Harvester could not be started on new file: /qhapp/logs/bd-etl/logs/test2.log, Err: Error setting up harvester: Harvester setup failed. Unexpected file opening error: file info is not identical with opened file. Aborting harvesting and retrying file later again

It can lead to filebeat Suddenly requested additional memory . Please check issue

adopt pprof

as everyone knows ,filebeat Yes, it is go The realization of language , and go The basic library of the language itself contains pprof This extremely powerful performance analysis tool , This tool is just for debug Of , In normal mode ,filebeat It will not start the selection process , And I'm sorry , There is no mention in the official documents that we can use pprof To observe filebeat. We can then pass 6.3 A memory leak fixed on issue, To learn how to use pprof Analyze

start-up pprof monitoring

First , Need make filebeat Run at startup pprof, The specific method is to add parameters during startup -httpprof localhost:6060, namely /usr/share/filebeat/bin/filebeat -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat -httpprof localhost:6060. There is only binding localhost, Unable to access remotely , If you want remote access , You should use 0.0.0.0.

At this time , You can go through curl http://localhost:6060/debug/pprof/heap > profile.txt Wait for the order , obtain filebeat Real time stack information .

Remote connection

Of course , You can also install on your local computer go, And then through go tool Remote connection pprof.

Because we need to study the memory problem , So the following connection accesses /heap Subpath

go tool pprof http://10.60.x.x:6060/debug/pprof/heap

top command

After the connection , You can go through top command , View the instances that consume the most memory :

33159.58kB of 33159.58kB total ( 100%)

Dropped 308 nodes (cum <= 165.80kB)

Showing top 10 nodes out of 51 (cum >= 512.04kB)

flat flat% sum% cum cum%

19975.92kB 60.24% 60.24% 19975.92kB 60.24% runtime.malg

7680.66kB 23.16% 83.40% 7680.66kB 23.16% github.com/elastic/beats/filebeat/channel.SubOutlet

2048.19kB 6.18% 89.58% 2048.19kB 6.18% github.com/elastic/beats/filebeat/prospector/log.NewHarvester

1357.91kB 4.10% 93.68% 1357.91kB 4.10% runtime.allgadd

1024.08kB 3.09% 96.76% 1024.08kB 3.09% runtime.acquireSudog

544.67kB 1.64% 98.41% 544.67kB 1.64% github.com/elastic/beats/libbeat/publisher/queue/memqueue.NewBroker

528.17kB 1.59% 100% 528.17kB 1.59% regexp.(*bitState).reset

0 0% 100% 528.17kB 1.59% github.com/elastic/beats/filebeat/beater.(*Filebeat).Run

0 0% 100% 512.04kB 1.54% github.com/elastic/beats/filebeat/channel.CloseOnSignal.func1

0 0% 100% 512.04kB 1.54% github.com/elastic/beats/filebeat/channel.SubOutlet.func1View the stack call graph

Input web command , Will produce stack call relationships svg chart , In this svg In the figure , You can combine top Commands , stay top in , We already know github.com/elastic/beats/filebeat/channel.SubOutlet It takes up a lot of memory , In the picture , It shows the call relation stack , You can see how this class is instantiated , And in the whole heap , How memory is distributed . The most intuitive is , The larger the rectangular area of the instance , Represents the more memory used .:

View source code

adopt list command , You can quickly view the problem source code of an instance , Like before top10 In command , We've seen github.com/elastic/beats/filebeat/channel.SubOutlet Instances of this class take up a lot of memory , We can go through list Do further analysis , See which statement in this class begins to occupy memory :

(pprof) list SubOutlet

Total: 32.38MB

ROUTINE ======================== github.com/elastic/beats/filebeat/channel.SubOutlet in /home/jeremy/src/go/src/github.com/elastic/beats/filebeat/channel/util.go

7.50MB 7.50MB (flat, cum) 23.16% of Total

. . 15:// SubOutlet create a sub-outlet, which can be closed individually, without closing the

. . 16:// underlying outlet.

. . 17:func SubOutlet(out Outleter) Outleter {

. . 18: s := &subOutlet{

. . 19: isOpen: atomic.MakeBool(true),

1MB 1MB 20: done: make(chan struct{}),

2MB 2MB 21: ch: make(chan *util.Data),

4.50MB 4.50MB 22: res: make(chan bool, 1),

. . 23: }

. . 24:

. . 25: go func() {

. . 26: for event := range s.ch {

. . 27: s.res <- out.OnEvent(event) How to tune

In fact, the process of tuning is the process of adjusting parameters , I said before , Memory related parameters , max_message_bytes,queue.mem.events,queue.mem.flush.min_events, And the formula of the memory occupied by the queue :max_message_bytes * queue.mem.events

output.kafka:

enabled: true

# max_message_bytes: 1000000

hosts: ["10.60.x.x:9092"]

topic: '%{[fields][topic]}'

max_procs: 1

#queue.mem.events: 256

#queue.mem.flush.min_events: 128But in fact , In different circumstances , Different reasons may cause filebeat Too much memory used , here , You need to carefully identify your context :

- Is it because of wildcard characters , Cause a huge number of files to be monitored at the same time , In this case, you should avoid using wildcards to monitor useless files .

- Whether the single line content of the file is huge , Determine whether the contents of the document need to be modified , Or filter it

- Whether there are too many matches multiline Of pattern, And multi line event Whether the volume of a single strip is too large . At this time , It needs to be closed temporarily multiline, Modify the contents of the file or multiline Of pattern.

- whether output Frequent blockage ,event queue It is always cached event. At this time, check whether your network environment or message queue middleware is normal

边栏推荐

- AAAI 2022 | sasa: rethinking point cloud sampling in 3D object detection

- 階乘求和

- Select everything between matching brackets in vs Code - select everything between matching brackets in vs Code

- The application of RPC technology and its framework sekiro in crawler reverse, encrypting data is a shuttle!

- Interview - difference between pointer and reference

- 泰山OFFICE技术讲座:域在MODEL的存储结构

- Basic concepts of database

- Is it safe to open a securities account by downloading the app of qiniu school? Is there a risk?

- I don't really want to open an account online. Is it safe to open an account online

- Link storage structure of simulated design disk file

猜你喜欢

Someone is storing credit card data - how do they do it- Somebody is storing credit card data - how are they doing it?

Fundamentals of C language 13: file input / output

2022 Fujian latest fire protection facility operator simulation test question bank and answers

Defcampctf2122 Forensics

Alibaba cloud energy consumption treasure will be released soon to help SMEs' green upgrading and participate in the carbon neutral trillion market

Metric win computer application

Several common implementation methods of mock interface test

Based on Transformer's artificial neural network, the image of organic structure is transformed into molecular structure

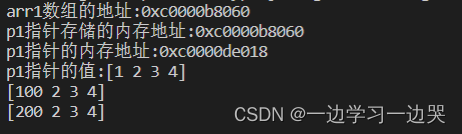

GO语言-指针

Redis introduction and Practice (with source code)

随机推荐

2022 latest MySQL interview questions

Build an efficient and scalable result cache

Factorial summation

Is pension insurance a financial product? Is the fund safe?

Reasonably set the number of threads 【 rpm 】

Alibaba cloud energy consumption treasure will be released soon to help SMEs' green upgrading and participate in the carbon neutral trillion market

养老年金险是理财产品吗?资金安全吗?

Merge two ordered linked lists

Integration of sparkstreaming and sparksql

Windows系统下C语言连接MySQL

Word thesis typesetting tutorial

Research Report on the overall scale, major producers, major regions, products and application segments of active aluminum chloride in the global market in 2022

[Yugong series] February 2022 wechat applet -app Subpackages and preloadrule of JSON configuration attribute

[Yugong series] February 2022 wechat applet -app Debug JSON configuration attribute

Leetcode: number of good subsets (backtracking + state compression +dfs + pruning)

Somme factorielle

Non local network: early human attempts to tame transformer in CV | CVPR 2018

Phantom star VR product details 31: escape from the secret room

[Yugong series] February 2022 wechat applet -app Networktimeout of JSON configuration attribute

Best practice | how to use Tencent cloud micro build to develop enterprise portal applications from 0 to 1