
[AI4Code Final Chapter] AlphaCode: Competition-Level Code Generation with AlphaCode (DeepMind)

2022-07-25 13:08:00 【chad_lee】

AlphaCode (DeepMind)

14 joint first authors and a 74-page paper.

Codex essentially tackled a fairly simple natural-language-to-programming-language translation task; AlphaCode takes on a harder one, competitive programming. The input is a problem statement written in natural language, and the output is a complete program that solves it.

Method

Pipeline

Training is split into pre-training and fine-tuning. At prediction time, the model samples on a massive scale (the "recall" stage) to obtain about one million candidate programs. Filtering then narrows these to about 1,000 (coarse ranking), and clustering selects 10 of them for submission (fine ranking).

Datasets

Open-source code was first collected from GitHub; after preprocessing and cleaning it amounts to 715 GB and serves as the pre-training dataset. The model is then fine-tuned on the CodeContests dataset, whose format is shown in the figure above.

Model architecture

The paper gives no model diagram. Unlike Codex, which is a GPT-style decoder-only Transformer, AlphaCode uses a full Transformer with both an encoder and a decoder. The smallest model has about 300 million parameters and the largest about 41 billion.

Also worth noting: the attention is multi-query attention, i.e. there are multiple query heads, but all heads share the same keys and values (K and V).
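A minimal pure-Python sketch of multi-query attention, to make "many queries, one shared K/V" concrete. It assumes plain lists of lists as matrices, no causal mask (as in encoder self-attention) and no output projection; the function and parameter names are illustrative, not taken from the AlphaCode code base.

```python
import math


def matmul(a, b):
    """Multiply an (n x m) matrix by an (m x p) matrix, both as lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def multi_query_attention(x, w_q_heads, w_k, w_v):
    """Multi-query attention over x (seq_len x d_model), without masking.

    w_q_heads: one query projection per head (each d_model x d_head).
    w_k, w_v:  a single key projection and a single value projection
               that are shared by every head.
    Returns one (seq_len x d_head) output per head.
    """
    k = matmul(x, w_k)  # computed once, shared by all heads
    v = matmul(x, w_v)  # computed once, shared by all heads
    d_head = len(w_k[0])
    outputs = []
    for w_q in w_q_heads:
        q = matmul(x, w_q)  # only the queries are per-head
        # scores[i][j] = (q_i . k_j) / sqrt(d_head)
        scores = [[sum(qi[t] * kj[t] for t in range(d_head)) / math.sqrt(d_head)
                   for kj in k] for qi in q]
        weights = [softmax(row) for row in scores]
        outputs.append(matmul(weights, v))
    return outputs


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    swap = [[0.0, 1.0], [1.0, 0.0]]
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    out = multi_query_attention(x, [identity, swap], identity, identity)
    print(len(out), len(out[0]), len(out[0][0]))  # 2 3 2
```

Compared with standard multi-head attention, only the query projection is repeated per head, so the keys and values that must be cached during decoding shrink by a factor of the head count; that is the usual argument for why this variant makes large-scale sampling cheaper.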

Fine-tuning

The encoder input is the problem description (together with the problem's tags, the language the solution is written in, and the examples from the problem statement); the decoder's ground-truth target is a solution, which may be either correct or incorrect.
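A hedged sketch of how one fine-tuning pair could be assembled from a problem and one of its solutions. The `Problem` record, the metadata line format, and the `CORRECT SOLUTION` / `INCORRECT SOLUTION` marker are all assumptions for illustration; the text above only says that the encoder sees the description plus tags, language and examples, and that targets may be right or wrong. Marking correctness in the input would let one condition on "correct" at sampling time.

```python
from dataclasses import dataclass, field


@dataclass
class Problem:
    """Hypothetical, simplified problem record (field names are illustrative)."""
    description: str
    tags: list = field(default_factory=list)
    examples: list = field(default_factory=list)  # (input, output) pairs from the statement


def build_encoder_input(problem, language, correct=True):
    """Metadata lines first, then the statement, then its worked examples."""
    lines = []
    if problem.tags:
        lines.append("TAGS: " + ", ".join(problem.tags))
    lines.append("LANGUAGE: " + language)
    # Assumed correctness marker, so correct and incorrect targets can be told apart.
    lines.append("CORRECT SOLUTION" if correct else "INCORRECT SOLUTION")
    lines.append(problem.description)
    for i, (inp, out) in enumerate(problem.examples, 1):
        lines.append(f"Example {i} input:\n{inp}")
        lines.append(f"Example {i} output:\n{out}")
    return "\n".join(lines)


def training_pairs(problem, solutions):
    """Yield (encoder_input, decoder_target) for each (code, language, is_correct)."""
    for code, language, is_correct in solutions:
        yield build_encoder_input(problem, language, is_correct), code


if __name__ == "__main__":
    p = Problem("Read two integers and print their sum.", ["math"], [("1 2", "3")])
    for enc, dec in training_pairs(p, [("print(sum(map(int, input().split())))", "Python 3", True)]):
        print(enc)
        print("---")
        print(dec)
```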

Sampling & Evaluation: Massive trial and error

- Step 1: Feed the problem description into Model 1 and sample one million candidate programs from it.

- Step 2: The vast majority (over 99%) of these million samples either fail to run or produce wrong answers. Running every sample on the example test cases included in the problem statement filters out these broken or incorrect programs, leaving about 1,000 programs that run and pass the examples. (Coarse ranking: one million → about one thousand)

- Step 3: AlphaCode introduces an additional Model 2. Model 2 starts from the same pre-trained model as Model 1, but it is fine-tuned to take the problem description as input and output test inputs. The test inputs Model 2 generates are not guaranteed to be valid; they are used only for the clustering step that follows. For each problem, Model 2 generates 50 test inputs.

- Step 4: Run each of the ~1,000 remaining programs on the 50 generated test inputs. If some programs produce (almost) the same outputs, the algorithms or logic behind them are probably similar, so they can be grouped into one cluster. After clustering, keep 10 clusters and submit one program from each, starting with the largest, since programs from large clusters are more likely to be correct. (Fine ranking: one thousand → 10)
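Steps 2 and 4 can be sketched with toy candidates. This is a simplification under stated assumptions: plain Python callables stand in for sampled programs (a real system would run compiled code in a sandbox with time and memory limits), the generated inputs are hard-coded rather than produced by Model 2, and programs are clustered only when their outputs are exactly identical rather than "almost" the same.

```python
from collections import defaultdict


def filter_by_examples(programs, examples):
    """Step 2: keep programs that reproduce every example output from the statement."""
    kept = []
    for prog in programs:
        try:
            if all(prog(inp) == out for inp, out in examples):
                kept.append(prog)
        except Exception:
            pass  # a crash counts as a failed example
    return kept


def behaviour_signature(prog, test_inputs):
    """Outputs on the generated test inputs; crashes become an explicit marker."""
    sig = []
    for inp in test_inputs:
        try:
            sig.append(repr(prog(inp)))
        except Exception:
            sig.append("<error>")
    return tuple(sig)


def cluster_and_select(programs, test_inputs, n_submit=10):
    """Step 4: group identical behaviour, then take one program per cluster, largest first."""
    clusters = defaultdict(list)
    for prog in programs:
        clusters[behaviour_signature(prog, test_inputs)].append(prog)
    ordered = sorted(clusters.values(), key=len, reverse=True)
    return [members[0] for members in ordered[:n_submit]]


# Toy stand-ins for sampled "a + b" solutions.
candidates = [
    lambda s: sum(map(int, s.split())),        # correct
    lambda s: sum(int(t) for t in s.split()),  # correct, written differently
    lambda s: int(s.split()[0]) * 2,           # passes the example only by luck
    lambda s: 1 / 0,                           # crashes
]
examples = [("1 1", 2)]
generated_inputs = ["3 4", "10 -2", "0 0"]

survivors = filter_by_examples(candidates, examples)
picked = cluster_and_select(survivors, generated_inputs)
print(len(survivors), len(picked))  # 3 2
```

Here the two correct programs agree on every generated input and form the larger cluster, so one of them is submitted first; the lucky program ends up alone in a smaller cluster even though it passed the example test.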

Experimental results

Competition results

Results on 10 programming contests. AlphaCode did not actually compete: its rankings are estimates, and because contests apply penalties, those penalties could only be estimated as well. Overall, AlphaCode performs at roughly a median level in Codeforces contests.

Evaluation metrics

- 10@k: sample k programs in Step 1, then filter and cluster them and submit 10; the problem counts as solved if any of the 10 submissions is correct.

- pass@k: sample k programs; the problem counts as solved if any one of them is correct.
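Both metrics can be written down in a few lines. For pass@k this sketch uses the unbiased estimator popularized by the Codex paper, computed from n ≥ k samples of which c are correct; whether AlphaCode estimates its numbers exactly this way is an assumption. `n_at_k` simply scores one problem given the correctness of the submissions in the order they were selected.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate from n samples, c of them correct (Codex-style estimator)."""
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def n_at_k(selected_is_correct, n=10):
    """n@k for one problem: 1 if any of the first n selected submissions is correct, else 0."""
    return int(any(selected_is_correct[:n]))


if __name__ == "__main__":
    print(pass_at_k(10, 1, 10))                  # 1.0
    print(pass_at_k(1000, 3, 10))
    print(n_at_k([False, True] + [False] * 8))   # 1
```

Because the 10 submissions counted by 10@k are drawn from the same k samples that pass@k looks at, 10@k can never exceed pass@k for the same k; the gap between the two measures how good the filtering and clustering are at choosing.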

Effect of the number of samples

The more programs sampled in Step 1, the better the results. Comparing the left and right figures shows that selecting programs after filtering and clustering works best. The comparison in the following figure makes this especially clear:

Picking at random from the K samples is of little use; clustering is slightly better than filtering alone; and filtering + clustering comes fairly close to the oracle upper bound.

Effect of compute budget

The longer the model is trained and the more samples are drawn, the better the results.

Safety discussion

This gets only one page (out of the paper's 74), and it is essentially a subset of the Codex paper's discussion.

边栏推荐

- 零基础学习CANoe Panel(15)—— 文本输出(CAPL Output View )

- Leetcode 1184. distance between bus stops

- Mysql 远程连接权限错误1045问题

- Chapter5 : Deep Learning and Computational Chemistry

- "Autobiography of Franklin" cultivation

- Selenium uses -- XPath and analog input and analog click collaboration

- 《富兰克林自传》修身

- Make a general cascade dictionary selection control based on jeecg -dictcascadeuniversal

- Redis可视化工具RDM安装包分享

- Connotation and application of industrial Internet

猜你喜欢

Clickhouse notes 03-- grafana accesses Clickhouse

【问题解决】org.apache.ibatis.exceptions.PersistenceException: Error building SqlSession.1 字节的 UTF-8 序列的字

Date and time function of MySQL function summary

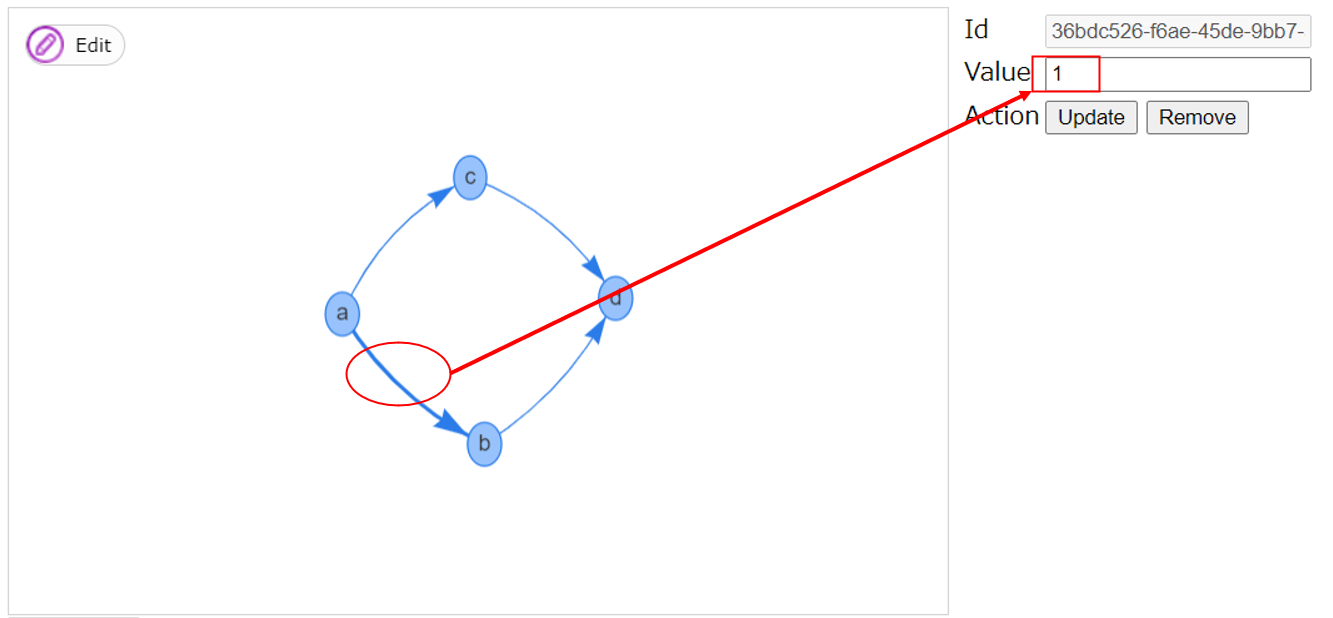

Business visualization - make your flowchart'run'(3. Branch selection & cross language distributed operation node)

JS convert pseudo array to array

mysql函数汇总之日期和时间函数

感动中国人物刘盛兰

Connotation and application of industrial Internet

“蔚来杯“2022牛客暑期多校训练营2 补题题解(G、J、K、L)

Docekr学习 - MySQL8主从复制搭建部署

随机推荐

Mysql 远程连接权限错误1045问题

I want to ask whether DMS has the function of regularly backing up a database?

如何理解Keras中的指标Metrics

卷积神经网络模型之——VGG-16网络结构与代码实现

吕蒙正《破窑赋》

Selenium use -- installation and testing

Selenium uses -- XPath and analog input and analog click collaboration

A turbulent life

[300 opencv routines] 239. accurate positioning of Harris corner detection (cornersubpix)

艰辛的旅程

【AI4Code】《Contrastive Code Representation Learning》 (EMNLP 2021)

【问题解决】org.apache.ibatis.exceptions.PersistenceException: Error building SqlSession.1 字节的 UTF-8 序列的字

[rust] reference and borrowing, string slice type (& STR) - rust language foundation 12

485通讯( 详解 )

2022 年中回顾 | 大模型技术最新进展 澜舟科技

JS convert pseudo array to array

Eccv2022 | transclassp class level grab posture migration

交换机链路聚合详解【华为eNSP】

Machine learning strong foundation program 0-4: popular understanding of Occam razor and no free lunch theorem

cv2.resize函数报错:error: (-215:Assertion failed) func != 0 in function ‘cv::hal::resize‘