Spark shuffle tuning

2022-08-01 21:33:00 【xiexiexie0520】

spark.shuffle.file.buffer

- Default: 32k

- Parameter description: Sets the buffer size of the BufferedOutputStream used by each shuffle write task. Data is written to this buffer first and is flushed to the disk file only when the buffer is full.

- Tuning suggestion: If the job has enough memory available, increase this value moderately (for example, to 64k). A larger buffer means fewer flushes to disk during shuffle write and therefore fewer disk I/O operations, which improves performance. In practice, a reasonable adjustment of this parameter has been found to improve performance by 1% to 5%.
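
As a rough illustration of why a larger buffer means fewer disk writes, the sketch below counts how many times a buffer of a given size would fill up for a given amount of shuffle output. The 512 MiB task output and the function name `estimated_flushes` are assumptions made for this example; real flush counts also depend on serialization and compression.

```python
KIB = 1024
MIB = 1024 * KIB


def estimated_flushes(bytes_written: int, buffer_bytes: int) -> int:
    """Rough flush count: one flush each time the buffer fills (ceiling division)."""
    return -(-bytes_written // buffer_bytes)


# Hypothetical output size of a single shuffle write task.
task_output = 512 * MIB

for label, size in (("32k", 32 * KIB), ("64k", 64 * KIB)):
    print(label, estimated_flushes(task_output, size))
```

Doubling the buffer halves the estimated number of flushes. The value itself can be passed at submit time with `--conf spark.shuffle.file.buffer=64k`.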

spark.reducer.maxSizeInFlight

- Default: 48m

- Parameter description: Sets the buffer size of each shuffle read task. This buffer determines how much data can be pulled at a time.

- Tuning suggestion: If the job has enough memory available, increase this value moderately (for example, to 96m). A larger buffer means fewer pull rounds and therefore fewer network transfers, which improves performance. In practice, a reasonable adjustment of this parameter has been found to improve performance by 1% to 5%.

- Error: reduce-side OOM

The reduce task pulls data from the map side and aggregates it while pulling. The reduce side has only a limited amount of memory for aggregation (executor memory * 0.2 by default), so pulling too much data at once can lead to an out-of-memory error.

- Solution:

- Increase the proportion of memory used for reduce-side aggregation: set spark.shuffle.memoryFraction to a higher value.

- Increase the executor memory, for example --executor-memory 5G.

- Reduce the amount of data each reduce task pulls at a time, for example set spark.reducer.maxSizeInFlight to 24m.
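
The three mitigations above can be combined in one `spark-submit` invocation. The following is a minimal sketch that only builds the command line; the helper `to_submit_args`, the script name `your_job.py`, and the value 0.3 for spark.shuffle.memoryFraction are assumptions for illustration. Note that spark.shuffle.memoryFraction is a legacy setting, so whether it has any effect depends on the Spark version in use.

```python
from typing import Dict, List


def to_submit_args(conf: Dict[str, str]) -> List[str]:
    """Turn a dict of Spark properties into repeated '--conf key=value' arguments."""
    args: List[str] = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


# Settings taken from the list above; 0.3 is a hypothetical value.
oom_mitigation = {
    "spark.reducer.maxSizeInFlight": "24m",
    "spark.shuffle.memoryFraction": "0.3",
}

command = [
    "spark-submit",
    "--executor-memory", "5G",
    *to_submit_args(oom_mitigation),
    "your_job.py",  # hypothetical application script
]
print(" ".join(command))
```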

spark.shuffle.io.maxRetries

- Default: 3

- Parameter description: When a shuffle read task pulls its data from the node where the shuffle write task ran, a pull that fails because of a network problem is retried automatically. This parameter sets the maximum number of retries. If the pull still has not succeeded after that many attempts, the job may fail.

- Tuning suggestion: For jobs with particularly time-consuming shuffle operations, increase the maximum number of retries (for example, to 60) to avoid data pull failures caused by JVM full GC or network instability. In practice, for shuffles over very large data volumes (several billion to tens of billions), adjusting this parameter has been found to improve stability considerably.

- Error: shuffle file not found. When a shuffle fetch fails, the TaskScheduler does not retry the task; instead the DAGScheduler retries the stage.

spark.shuffle.io.retryWait

- Default: 5s

- Parameter description: Works together with spark.shuffle.io.maxRetries. This parameter sets the wait interval between retries when pulling data; the default is 5s.

- Tuning suggestion: Increase the interval (for example, to 60s) to make the shuffle operation more stable.
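
Taken together, the two retry parameters bound how long a shuffle read task keeps waiting before giving up: the maximum delay is roughly maxRetries * retryWait. The sketch below works this out for the defaults and for the values suggested above; the function name is an assumption for this example.

```python
def max_retry_delay_seconds(max_retries: int, retry_wait_seconds: int) -> int:
    """Upper bound on time spent waiting between fetch retries."""
    return max_retries * retry_wait_seconds


# Defaults: spark.shuffle.io.maxRetries=3, spark.shuffle.io.retryWait=5s
default_delay = max_retry_delay_seconds(3, 5)

# Suggested values from the text: 60 retries, 60s between retries
tuned_delay = max_retry_delay_seconds(60, 60)

print(f"default: {default_delay}s, tuned: {tuned_delay}s ({tuned_delay // 60} min)")
```

With both values raised to 60, a single stuck fetch can wait up to an hour before failing, so the larger settings trade fast failure for tolerance of long GC pauses or network hiccups.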

spark.shuffle.memoryFraction

- Default: 0.2

- Parameter description: The proportion of Executor memory allocated to shuffle read tasks for aggregation operations. The default is 20%. This is a legacy setting: from Spark 1.6 on it is only read when spark.memory.useLegacyMode is enabled.

- Tuning suggestion: This parameter is explained in Resource Parameter Tuning. If memory is sufficient and persist operations are rarely used, increase this ratio to give more memory to shuffle read aggregation, so that the aggregation does not have to read and write disk frequently because memory runs short. In practice, a reasonable adjustment of this parameter has been found to improve performance by about 10%.
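
To make the ratio concrete, the sketch below computes the aggregation memory as executor memory multiplied by the fraction, which is the simplification used in this article. The function name and the 5 GB executor are assumptions for this example, and the calculation ignores any safety margin Spark applies internally.

```python
def aggregation_memory_mb(executor_memory_mb: int, memory_fraction: float) -> float:
    """Approximate memory available for shuffle read aggregation, in MB."""
    return executor_memory_mb * memory_fraction


executor_memory_mb = 5 * 1024  # hypothetical executor started with --executor-memory 5G

for fraction in (0.2, 0.3):
    mb = aggregation_memory_mb(executor_memory_mb, fraction)
    print(f"fraction={fraction}: about {mb:.0f} MB for aggregation")
```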

spark.shuffle.manager

- Default: sort

- Parameter description: Sets the type of ShuffleManager. As of Spark 1.5 there are three options: hash, sort, and tungsten-sort. HashShuffleManager was the default before Spark 1.2; Spark 1.2 and later default to SortShuffleManager. tungsten-sort is similar to sort but uses the off-heap memory management mechanism of Project Tungsten, which is more memory efficient. These options apply to Spark 1.x; HashShuffleManager was removed in Spark 2.0.

- Tuning suggestion: SortShuffleManager sorts data by default. If your business logic needs this sorting, use the default SortShuffleManager. If it does not, refer to the parameters below to avoid sorting through the bypass mechanism or the optimized HashShuffleManager, which also gives better disk read and write performance. Use tungsten-sort with caution, because bugs have been found in it before.

spark.shuffle.sort.bypassMergeThreshold

- Default: 200

- Parameter description: When the ShuffleManager is SortShuffleManager and the number of shuffle read tasks does not exceed this threshold (200 by default), the shuffle write process skips sorting and writes data directly in the manner of the unoptimized HashShuffleManager. At the end, however, all temporary disk files produced by each task are merged into a single file, and a separate index file is created.

- Tuning suggestion: If you use SortShuffleManager and do not need sorting, raise this parameter above the number of shuffle read tasks. The bypass mechanism is then enabled automatically and the map side no longer sorts, which removes the sorting overhead. However, a large number of temporary disk files are still generated this way, so shuffle write performance still leaves room for improvement.
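
The bypass decision can be sketched as a simple predicate. This assumes the condition described in the Spark configuration documentation: there is no map-side aggregation and the number of reduce partitions is at most the threshold. The function and parameter names are made up for this example and are not Spark's internal API.

```python
def uses_bypass(
    num_reduce_partitions: int,
    map_side_combine: bool,
    bypass_merge_threshold: int = 200,
) -> bool:
    """True if the sort-based shuffle would skip sorting for this shuffle."""
    return (not map_side_combine) and num_reduce_partitions <= bypass_merge_threshold


# 400 reduce partitions exceed the default threshold of 200, so sorting is used.
print(uses_bypass(400, map_side_combine=False))

# Raising spark.shuffle.sort.bypassMergeThreshold above 400 enables the bypass path.
print(uses_bypass(400, map_side_combine=False, bypass_merge_threshold=500))

# Operators that aggregate on the map side never take the bypass path.
print(uses_bypass(100, map_side_combine=True))
```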

spark.shuffle.consolidateFiles

- Default: false

- Parameter description: Takes effect only when HashShuffleManager is used. If set to true, the consolidate mechanism is enabled and the output files of shuffle write are merged aggressively. When the number of shuffle read tasks is especially large, this can greatly reduce disk I/O overhead and improve performance.

- Tuning suggestion: If you really do not need the sorting mechanism of SortShuffleManager, then besides the bypass mechanism you can also set the spark.shuffle.manager parameter to hash to use HashShuffleManager, and enable the consolidate mechanism at the same time. In my own tests, this performed 10% to 30% better than SortShuffleManager with the bypass mechanism enabled.
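
To see why consolidation matters, it helps to compare how many shuffle files each mechanism leaves on disk. The formulas below are commonly cited approximations and are not stated in this article: the unoptimized hash shuffle writes one file per map task per reduce task, the consolidated hash shuffle writes one file per concurrently running core per reduce task, and the sort shuffle writes one data file plus one index file per map task. The function names and the task and core counts are assumptions for this example.

```python
def hash_shuffle_files(map_tasks: int, reduce_tasks: int) -> int:
    """Unoptimized hash shuffle: every map task writes one file per reduce task."""
    return map_tasks * reduce_tasks


def consolidated_hash_shuffle_files(total_cores: int, reduce_tasks: int) -> int:
    """Consolidated hash shuffle: map tasks running on the same core reuse files."""
    return total_cores * reduce_tasks


def sort_shuffle_files(map_tasks: int) -> int:
    """Sort shuffle: one merged data file and one index file per map task."""
    return 2 * map_tasks


# Hypothetical job: 1000 map tasks, 1000 reduce tasks, 100 cores in total.
map_tasks, reduce_tasks, total_cores = 1000, 1000, 100

print("hash:", hash_shuffle_files(map_tasks, reduce_tasks))
print("hash + consolidate:", consolidated_hash_shuffle_files(total_cores, reduce_tasks))
print("sort:", sort_shuffle_files(map_tasks))
```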

边栏推荐

猜你喜欢

随机推荐

图的邻接矩阵存储

Realize the superposition display analysis of DWG drawing with CAD in Cesium

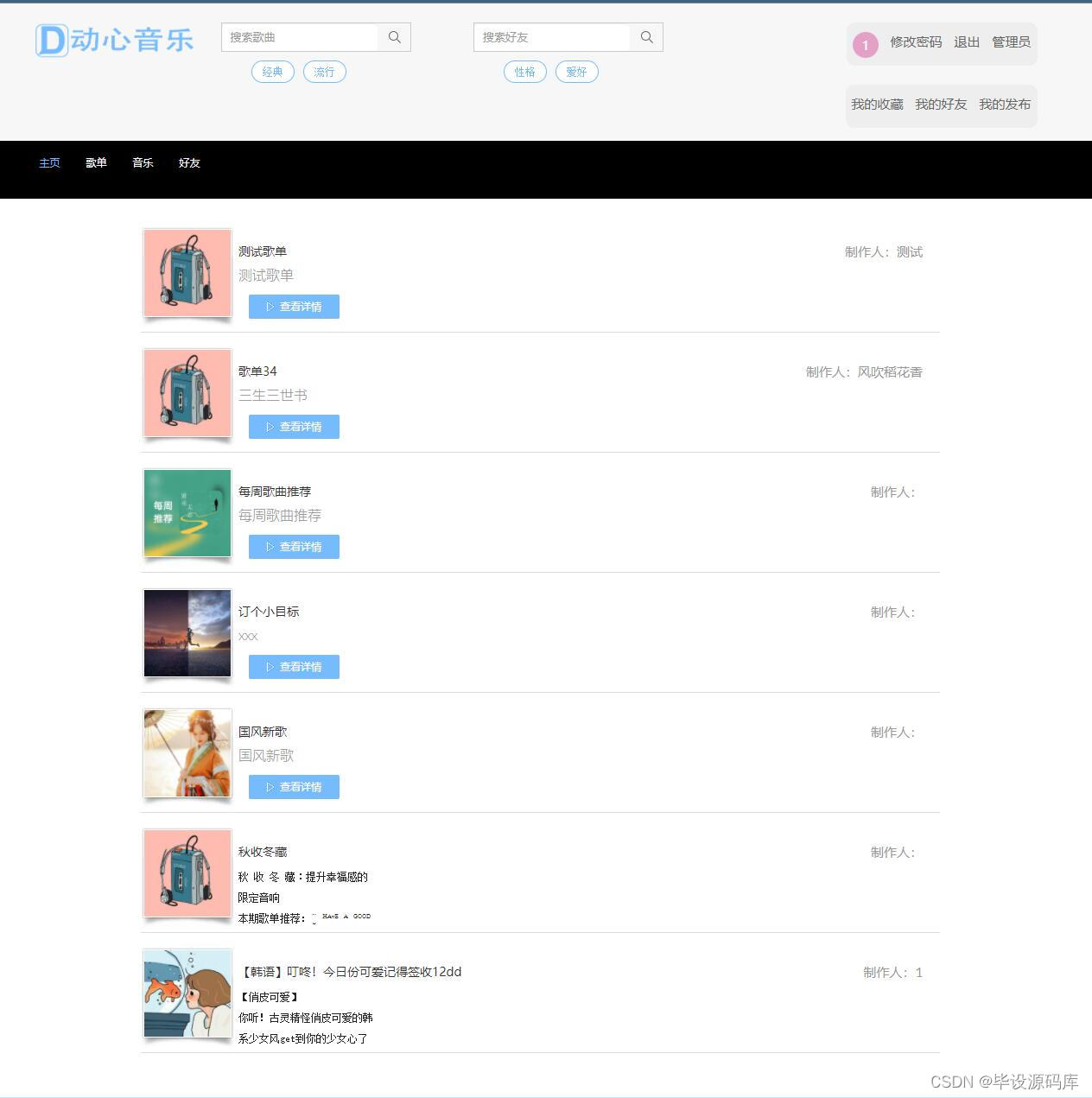

基于php湘西旅游网站管理系统获取(php毕业设计)

Based on php film and television information website management system acquisition (php graduation design)

淘宝获取收货地址列表的 API

C语言_联合体共用体引入

HCIP---多生成树协议相关知识点

如何封装 cookie/localStorage/sessionStorage hook?

数据库练习

JSD-2204-Knife4j框架-处理响应结果-Day07

一个关于操作数据库的建议—用户密码

深拷贝浅拷贝

JS提升:如何中断Promise的链式调用

空间数据库开源路,超图+openGauss风起禹贡

C专家编程 第1章 C:穿越时空的迷雾 1.4 K&R C

PX4模块设计之十五:PX4 Log设计

C陷阱与缺陷 第7章 可移植性缺陷 7.6 内存位置0

软考 ----- UML设计与分析(上)

C专家编程 前言

render-props和高阶组件