
Detailed data governance knowledge system

2022-07-01 02:25:00 【Zhuojiu South Street】

Catalog

- 01 What are the common misconceptions about data governance?

- Mistake 1: Unclear customer needs

- Mistake 2: Data governance is the technology department's job

- Mistake 3: Large and all-encompassing data governance

- Mistake 4: Tools are omnipotent

- Mistake 5: Data standards are hard to put into practice

- Mistake 6: Data quality problems have been found. So what?

- Mistake 7: You seem to have done nothing?

- 02 Metadata management of data governance

- 03 Data quality management of data governance

- 04 Data standard management of data governance

Across the industry, many practitioners are unsure how to do data governance well. Data governance must start by taking stock of the data, then planning a roadmap, and only then acting.

This article summarizes practical experience in data governance from four angles: common misconceptions, metadata management, data quality management, and data standards management. It is meant as a reference for colleagues working on data governance.

01 What are the common misconceptions about data governance?

In the big data era, data has become a valuable asset for society and for organizations. It powers everything, much as oil and electricity did in the industrial age. But if the oil contains too many impurities or the voltage is unstable, its value is greatly reduced; in the same way, poor data may be unusable, or nobody dares to use it. Data governance is therefore unavoidable if we want to make good use of massive data in the big data era.

But as we all know, data governance is long-term, complicated work; it can fairly be called the dirty work of the big data field. Vendors often do a great deal of work, yet the customer feels it has seen no results. Most data governance consulting projects can deliver a result that satisfies the customer, but when the consulting results are put into practice, the outcome may look quite different, for a variety of reasons. How to avoid this is worth careful thought for every enterprise doing data governance.

It is fair to say that, across the industry, everyone is unsure how to do data governance well.

The author has worked in big data governance for more than six years, on data governance projects for government, the military, aviation, and large and medium-sized manufacturing enterprises. Some of these projects succeeded, and of course many taught lessons through failure. Throughout, the author has kept asking: what is big data governance? What is a reasonable goal for it? How can detours along the way be avoided? The pitfalls below are ones the author has fallen into; I hope they serve as a useful reference.

Mistake 1: Unclear customer needs

When a customer asks a vendor to help with data governance, the customer has certainly seen that its data has problems. But what to do, how to do it, how large the scope should be, what to do first and what later, what goal to reach, and how the business units, the technology department, and the vendor should cooperate... many customers have not actually thought through the problem they really want to solve. For them, it is hard to find a starting point for data governance.

In my experience, if the customer has not yet thought the requirements through, it is advisable to have the vendor run a small consulting project first, so that a professional team can help find the starting point. The focus of this consulting project should be a survey of the current state of the data: the data architecture, the existing data standards and how well they are followed, the current state and pain points of data quality, and the data governance capabilities the customer already has. The aim is to take stock of the data.

Once this stock-taking is done, a professional data governance team helps the customer design a practical data governance roadmap. After both sides agree on it, data governance proceeds according to the roadmap.

In fact, customers usually do have needs; the needs are just general, vague, and unclear. Both parties can spend some time and energy finding the real goal. As the saying goes, sharpening the axe does not delay the cutting of firewood. Doing this up front avoids paying more in “tuition” later.

Summary: In data governance, be sure to take stock of the data first and plan the roadmap. Never start by building a platform.

Mistake 2: Data governance is the technology department's job

In the big data era, many organizations have recognized the value of data and set up dedicated teams to manage it. Some are called the data management division, some the big data center, some the data application department; the names vary. These teams are usually made up of technical staff and are positioned as technical departments. What they have in common is that they are strong on technology and weak on the business side. When a data governance project needs to be carried out, it is usually these technical departments that take the lead. Most of them start from the data center or the big data platform. Constrained by their organizational remit, they do not want to extend into business systems and only want to manage the area they are responsible for.

But the causes of data problems lie more often in the business than in the technology. Most data quality problems originate on the business side. For example, there are many data sources and responsibilities are unclear, so the same data is expressed differently in different information systems; or business requirements are unclear, so data is entered irregularly or left missing. Many problems that look technical, such as an ETL code change that causes a processing error and corrupts the data in a report, are in essence still failures of business management discipline.

When I talk with customers about data governance, I find that most of them have not recognized the root cause of data quality problems and want to solve them from the technical side alone. As a result, when they plan data governance they do not consider establishing a strong organizational structure that covers both the technical and business groups, or rules and processes that can actually be enforced, and the final result falls well short.

Summary: Data governance is the business of both the technology department and the business departments. Only with an organizational structure and rules and processes that involve all parties can data governance work be assigned to specific people rather than staying on the surface.

Mistake 3: Large and all-encompassing data governance

With return on investment in mind, customers tend to want a large, all-encompassing data governance project that spans the whole business and technical domain. From data creation through processing and application to destruction, they hope to govern the entire data life cycle. From business systems to the data center to data applications, they hope every piece of data is brought within the scope of governance.

However, data governance in the broad sense is a large concept that covers a great deal. It usually cannot be completed in one project and must be carried out in stages and batches. If the vendor simply gives in to the customer's idea, the project is likely to fail in the end. We therefore need to guide customers to begin data governance with the core systems and the most important data.

How do we guide customers? Here we bring in a well-known concept: the 80/20 principle. It applies to data governance too: 80% of data services are actually supported by 20% of the data; likewise, 80% of data quality problems are actually produced by 20% of the systems and people. If, in the course of data governance, we can find this 20% of the data and this 20% of the systems and people, we will undoubtedly get twice the result with half the effort.

But how do we convince customers to start with the most important data? This goes back to Mistake 1: do not start work rashly before you understand the data. Through investigation and analysis, find that 20% of the data and that 20% of the systems and people, and provide a truthful, reliable analysis report. Only then is it possible to persuade customers to start with the core systems and core data and gradually extend to other areas.
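As a rough illustration of the 80/20 analysis described above, the following Python sketch ranks source systems by the number of data quality issues attributed to them and returns the smallest group that accounts for 80% of all issues. The function name `pareto_core`, the system names, and the counts are all hypothetical; a real survey would supply its own figures.

```python
from typing import Dict, List, Tuple


def pareto_core(issue_counts: Dict[str, int], share: float = 0.8) -> List[Tuple[str, int]]:
    """Return the smallest set of top contributors covering `share` of all issues."""
    total = sum(issue_counts.values())
    if total == 0:
        return []
    # Largest contributors first.
    ranked = sorted(issue_counts.items(), key=lambda kv: kv[1], reverse=True)
    core: List[Tuple[str, int]] = []
    covered = 0
    for name, count in ranked:
        core.append((name, count))
        covered += count
        if covered >= share * total:
            break
    return core


# Hypothetical counts of data quality issues per source system.
issues_by_system = {
    "crm": 450, "billing": 380, "erp": 60, "hr": 40, "oa": 30,
    "logistics": 25, "portal": 20, "archive": 15, "survey": 5, "wiki": 5,
}
core_systems = pareto_core(issues_by_system)
print(core_systems)
```

With these made-up numbers, two of the ten systems account for more than 80% of the issues, which is the kind of evidence an analysis report can put in front of the customer.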

Summary: In data governance, do not be greedy for completeness; start with the core systems and the important data.

Mistake 4: Tools are omnipotent

Many customers think data governance means spending money on some tools. They think of a tool as a filter: once the filter is in place, data passes through it and comes out clean. The result is that, on one hand, the tool accumulates more and more functions, and on the other, once it goes live, the functions are so complex that users are reluctant to use it.

This is oversimplified thinking. Data governance itself covers a great deal: organizational structure, rules and processes, mature tools, and on-site implementation and operations. All four are indispensable; tools are only one part. The most easily overlooked part of data governance is organizational structure and staffing. Yet every workflow and every rule needs people to execute, implement, and promote it. Without assigned personnel, follow-up work is hard to guarantee.

On one hand, if no one manages and promotes the work, there is no guarantee the processes will be followed consistently. On the other hand, without data governance training, people pay no attention to data governance and assume it has nothing to do with them, and the whole project is doomed to fail. I recommend putting organizational structure first in data governance. Once an organization exists, someone will think about the work, about how to push it forward, and about how to keep doing it well. People-centered data governance is easier to promote.

A foreign data governance expert put it well: “Data Governance is governance of people; Data behaves what people behave.” In other words, data governance is the governance of human behavior. For any organization, whether an enterprise or a government body, data governance is essentially a “change management” effort about data that covers all employees, and it involves changes to organizational structure and management processes.

Of course, that is the ideal state. Coming back to the domestic situation: in the financial industry and some large enterprises, a dedicated organization may be established to take charge of data governance, but some government bodies and small and medium-sized enterprises, for cost reasons, often have no budget for this. That is when a compromise is needed: have people who already hold other posts take part-time responsibility for a data governance process or function. This increases the workload of existing staff, but it is a workable compromise. The point is that responsibility is assigned to specific people.

On-site implementation and operations are also very important. Although data governance is trending toward automation, so far it is more a service than a set of products. Deploying sufficiently strong implementation consultants and implementation staff to help customers gradually build their own data governance capability is therefore very important work.

Summary: Remember, doing data governance is not a trip to the shopping mall where you pick out a few handy tools and everything is fine when you get back. Good data governance does not rest on blind faith in tools. Organizational structure, rules and processes, and on-site implementation and operations are also very important; none of them can be missing.

Mistake 5: Data standards are hard to put into practice

Many customers, when talking about data governance, say: we have plenty of data standards, but they have never been implemented, so we need to implement the data standards first. Once the standards are really implemented, data quality will naturally be good.

But this view confuses data standards with data standardization. The first thing to understand is this: data standards must be produced, but data standardization, that is, putting the data standards into practice, has to be carried out according to the situation.

To produce data standards, the existing standards must first be comprehensively sorted out. This covers a wide range, including national standards, industry standards, and standards within the organization. It takes a great deal of effort and can even be a project in its own right. So the first step is to let customers see how broad and difficult sorting out data standards is.

Second, even after a great deal of effort, the results are hard to see. Often the customer sees only a pile of Word and Excel files, and after a while no one cares about these old documents anymore. This is the most common problem.

In the financial sector and in special industries such as national security, data standards are implemented fairly well. In ordinary enterprises, data standards are basically decoration.

There are two reasons for this problem:

First, data standards work is not taken seriously.

Second, when domestic enterprises produce data standards, the motivation is often not to do data governance well but to pass inspections by higher authorities. Many of these standards are produced by consulting companies that localize the standards of other enterprises in the same industry. Once the consulting company leaves, the enterprise itself has no ability to implement the standards.

But the implementation of data standards, that is, data standardization, really does have to take the situation into account. There are at least two cases:

The first is systems already in operation. For these information systems, for historical reasons, it is difficult to implement data standards, because modifying an existing system, quite apart from the cost, often brings large unknown risks.

The second is newly launched systems. It is entirely possible to require their data items to follow the data standards strictly.

Of course, whether data standards can be implemented successfully also depends directly on the authority granted to the department responsible for data governance. Without authorization and strong support from leadership, you cannot push through “a common script for writing and a common track width for carts”. To do that, make sure a Qin Shihuang stands behind you, or that you are Qin Shihuang yourself. Don't complain; this is the reality every data governance team faces.

Summary: Difficulty in implementing data standards is a universal problem in data governance. Implementation needs to distinguish legacy systems from new systems and apply a different strategy to each.

Mistake 6: Data quality problems have been found. So what?

After the hard work of building a platform, with business and technical staff working together, the data quality check rules are configured and many data quality problems are found. So what? Six months later, a year later, the same data quality problems are still there.

The root cause is that there is no closed loop of accountability for data quality. To hold someone accountable for data quality problems, we first need to determine who is responsible. The basic principle is: whoever produces the data is responsible for it. Whoever the data came from handles its data quality problems.

This closed loop does not have to run through an online workflow, but every problem must have a responsible person, and every problem must receive feedback on how it was handled. Ideally the handling results feed into performance evaluation, for example a ranking, to urge the responsible people and departments to deal with data quality problems.
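The closed loop described above can be sketched in a few lines of Python. This is only a minimal illustration under assumed names: `QualityIssue`, `close_issue`, and `open_issue_ranking` are hypothetical, and the departments and counts are invented. Each issue is assigned to the department that produced the data, an issue cannot be closed without a resolution note, and open issues are ranked by department for use in performance evaluation.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class QualityIssue:
    issue_id: int
    source_department: str     # whoever produces the data owns the issue
    resolved: bool = False
    resolution_note: str = ""  # feedback required to close the loop


def close_issue(issue: QualityIssue, note: str) -> None:
    """Close an issue; a non-empty resolution note is mandatory."""
    if not note.strip():
        raise ValueError("a resolution note is required to close an issue")
    issue.resolved = True
    issue.resolution_note = note


def open_issue_ranking(issues: List[QualityIssue]) -> List[Tuple[str, int]]:
    """Rank departments by number of unresolved issues, worst first."""
    counts = Counter(i.source_department for i in issues if not i.resolved)
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical issues found by the data quality checks.
issues = [
    QualityIssue(1, "sales"), QualityIssue(2, "sales"), QualityIssue(3, "sales"),
    QualityIssue(4, "finance"), QualityIssue(5, "hr"), QualityIssue(6, "hr"),
]
close_issue(issues[5], "duplicate employee records merged at source")
ranking = open_issue_ranking(issues)
print(ranking)
```

The ranking is deliberately simple; what matters for the closed loop is that every issue carries an owner and that closing it leaves a record of how it was handled.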

This traces back to Mistake 2: an organizational structure and rules and processes must be established; otherwise, no one is responsible for, and no one does, the various tasks of data governance.

Summary: To solve data quality problems, establish a closed-loop accountability and feedback mechanism that identifies the responsible person at every step.

Mistake 7: You seem to have done nothing?

Many data governance projects are hard to get accepted. Customers often ask: what have you actually done in data governance? Your report says you did a lot, so why can't we see any of it? One cause is the unclear customer needs described in Mistake 1; another is the large, all-encompassing scope described in Mistake 3, which makes a project hard to wrap up. But there is one more cause that cannot be ignored: the customer cannot perceive the results of data governance. When users, and especially the leadership and decision-makers on the customer side, cannot perceive the results, data governance lacks visibility, and naturally the project will not be accepted readily.

In this situation, saying “the baby is suffering, but the baby won't say so” will not help. From sales and pre-sales through to assembling a team for implementation, many people have worked hard on a project. What matters is making the customer recognize the project's value, so that in the end the customer pays for the effort everyone has put in.

In my opinion, in the requirements phase of a data governance project we should stay oriented toward business value. The purpose of data governance is to manage data assets effectively, ensuring they are accurate, trustworthy, perceptible, understandable, and easy to access, and to provide data support for big data applications and leadership decision-making. Along the way, we must pay attention to, and design, the visual presentation of data governance results, for example:

How much metadata is managed? Should it be displayed attractively with a data asset map?

How many data assets are managed, from which origins, on what topics, and from which data sources? Should they be displayed in a data asset portal?

How do data assets serve upper-layer applications, how are these external services controlled, who has used the data, and how much data has been used? Should these be tallied and presented graphically?

How many data cleansing rules have been established, and how many categories of data have been cleansed? Should this be shown in a chart?

How many problem records have been found, and how many have been handled? Should a continuously updated statistic show this?

Are data quality problems decreasing month by month? Should this be shown with a trend chart?

Should the ranking of data quality problems by department and by system be added to the data quality report and submitted to decision-makers, to help the customer with performance appraisal?

For data analysis, reports, and other applications, should the monthly decline in the number of times a data problem forces a trace back to the source and processing steps be counted? Comparing the old tracing approach with the current ability to pinpoint, through lineage management, the step where problem data arises, should there be a fair assessment of how much time and effort the customer has saved, submitted to the customer?

Comparing the average time users used to spend finding data with the average time it takes now, can we reach a fair conclusion through interviews and submit it to the customer?

……

These are all ways to increase the visibility of data governance. Beyond these, organizing communication and training from time to time, guiding customers to recognize the importance of data governance, letting them truly see how it advances their business, and gradually transferring data governance capability to them are all tasks that deserve regular attention.

Summary: Traditional data governance pays little attention to presenting results. When we do data governance, we must start from the requirements stage and try to let customers see the results intuitively.

In fierce market competition, big data vendors have put forward all sorts of data governance concepts: some propose governance covering the whole data life cycle, some propose user-centered self-service data governance, and some propose AI-based automated data governance that reduces manual intervention and saves cost. Faced with these concepts, we should on one hand understand our current data situation clearly and have clear goals for data governance, and on the other hand know the common misconceptions in data governance and step past these traps. Only then can data governance truly be put into practice and the project succeed, making data more accurate, easier to obtain, and better used, and genuinely using data to raise the level of the business.

02 Metadata management of data governance

This part starts with three concepts about metadata, then covers where metadata is distributed and how to obtain it, and finally walks through several common applications to discuss practical metadata scenarios.

One. What is metadata?

Metadata is a rather abstract, hard-to-understand concept, so this first chapter works out what metadata is. It puts forward three concepts.

1. Metadata (Meta Data) is data that describes data.

This is the standard definition of metadata, but it is somewhat abstract. Readers with a technical background can follow it, but an audience without one may be confused on the spot. The root of the problem is the curse of knowledge: once we know something, it is hard to explain it clearly to someone who does not.

To break this curse, we can use a metaphor: metadata is the household register (hukou) of data. Think about what a person's hukou is: it is that person's information register. It records the person's name, age, gender, ID card number, address, place of origin, and when and from where they moved in. Beyond these basic descriptions, it also records the person's blood relationships with family members, such as father and son or brother and sister. Taken together, this information forms a comprehensive description of the person, and we can call all of it the person's metadata.

Likewise, to describe a real piece of data, take a table as an example: we need to know the table name, the table alias, the table owner, the physical storage location of the data, the primary key, the indexes, the fields in the table, the relationships between this table and other tables, and so on. Taken together, this information is the table's metadata.

With this analogy, the concept of metadata should be much clearer: metadata is the household register of data.
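To make the "household register" concrete, here is a minimal Python sketch of a record holding the table attributes listed above. The class names `TableMetadata` and `ColumnMetadata`, the field names, and the sample `customer` table are all illustrative assumptions, not the schema of any particular metadata tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ColumnMetadata:
    name: str
    data_type: str
    nullable: bool = True


@dataclass
class TableMetadata:
    name: str                     # table name
    alias: str                    # human-readable alias
    owner: str                    # who is responsible for the table
    storage_location: str         # physical location of the data
    primary_key: List[str]
    indexes: List[str] = field(default_factory=list)
    columns: List[ColumnMetadata] = field(default_factory=list)
    related_tables: List[str] = field(default_factory=list)


# A hypothetical table described entirely by its metadata.
customer = TableMetadata(
    name="customer",
    alias="Customer master data",
    owner="sales_department",
    storage_location="warehouse/dw/customer",
    primary_key=["customer_id"],
    indexes=["idx_customer_name"],
    columns=[
        ColumnMetadata("customer_id", "INTEGER", nullable=False),
        ColumnMetadata("name", "VARCHAR(100)", nullable=False),
        ColumnMetadata("city", "VARCHAR(50)"),
    ],
    related_tables=["orders"],
)
print(customer.name, [c.name for c in customer.columns])
```

None of the rows in the `customer` table appear here; the record only describes the table, which is exactly the distinction between data and metadata.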

2. Metadata management is the core and foundation of data governance.

Why do we say that metadata management is the core and foundation of data governance? Why must metadata management come first when doing data governance? Why is its status so special?

Imagine a general going to war. What is the one indispensable thing he must have in hand? A map of the battlefield. It is hard to believe that a general without a military map can win a war. Metadata is the map of all the data.

From this map of data, we can learn:

What data do we have?

Where is the data distributed?

What types of data are there?

What are the relationships among the data?

Which data is referenced often? Which data does nobody use?

……

All of this information can be found in metadata. If we try to do data governance without this map in hand, we are like the blind men feeling the elephant. Data asset management and knowledge graphs, which later articles will cover, are in fact also largely built on metadata. That is why we say metadata is the map of an organization's data and the core and foundation of data governance.

3. Metadata is data that describes data. Is there data that describes metadata?

Yes. The data that describes metadata is called a metamodel (Meta Model). The relationship is layered: the metamodel describes metadata, and metadata describes data. It can be illustrated with the picture below.

We will not discuss the concept of the metamodel in depth. We only need to know the following:

The data structure of metadata itself also needs to be defined and standardized, and what defines and standardizes metadata is the metamodel. The international standard for metamodels is CWM (Common Warehouse Metamodel); a mature metadata management tool needs to support the CWM standard.

Two. Where does metadata come from?

On a big data platform, metadata runs through the platform's entire data flow. It mainly includes data source metadata, data processing metadata, subject database metadata, service layer metadata, application layer metadata, and so on. The figure below takes a data center as an example and shows how metadata is distributed:

The industry generally divides metadata into the following types:

Technical metadata: database and table structures, field constraints, data models, ETL programs, SQL programs, and so on.

Business metadata: business indicators, business codes, business terms, and so on.

Management metadata: data owner, data quality accountability, data security level, and so on.

Metadata collection means obtaining metadata throughout the data life cycle, organizing it, and then writing it to a database.

There are many ways to obtain metadata. In terms of collection methods, technical means such as direct database connections, interfaces, and log files are used to collect, automatically and manually, the data dictionaries of structured data, the metadata of unstructured data, and metadata such as business indicators, codes, and data processing procedures.

Once metadata collection is complete, the metadata is organized into a structure that conforms to the CWM model and stored in a relational database.
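The "direct database connection" route can be illustrated with Python's built-in `sqlite3` module. This is a minimal sketch under clear assumptions: the source is an in-memory SQLite database with a hypothetical `customer` table, and the repository table `column_metadata` is a simplified stand-in that does not attempt to follow the CWM structure.

```python
import sqlite3


def collect_table_metadata(source: sqlite3.Connection, repo: sqlite3.Connection) -> int:
    """Read column definitions from `source` and store them in `repo`.

    Returns the number of column records written.
    """
    repo.execute(
        """CREATE TABLE IF NOT EXISTS column_metadata (
               table_name TEXT, column_name TEXT, data_type TEXT,
               not_null INTEGER, is_primary_key INTEGER)"""
    )
    # sqlite_master is SQLite's own data dictionary.
    tables = [row[0] for row in source.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name")]
    written = 0
    for table in tables:
        # PRAGMA table_info yields: cid, name, type, notnull, dflt_value, pk
        for _cid, name, dtype, notnull, _default, pk in source.execute(
                f'PRAGMA table_info("{table}")'):
            repo.execute(
                "INSERT INTO column_metadata VALUES (?, ?, ?, ?, ?)",
                (table, name, dtype, notnull, int(pk > 0)))
            written += 1
    repo.commit()
    return written


# Hypothetical source system and metadata repository, both in memory.
source_db = sqlite3.connect(":memory:")
source_db.execute(
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, "
    "name TEXT NOT NULL, city TEXT)")
repo_db = sqlite3.connect(":memory:")

collected = collect_table_metadata(source_db, repo_db)
rows = repo_db.execute(
    "SELECT column_name, data_type, is_primary_key "
    "FROM column_metadata ORDER BY rowid").fetchall()
print(collected, rows)
```

Other databases expose their data dictionaries differently, so a real collector would need one adapter per source type; the store-in-a-relational-table step stays the same.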

Three. With metadata, what can we do?

This chapter covers several typical applications of metadata.

First, look at the overall functional architecture of metadata management. What we can do with metadata is clear from this picture:

1. Metadata view

Metadata is generally organized in a tree structure and can be browsed and retrieved by type. For example, we can browse table structures, field information, data models, index information, and so on. With sensible permission assignment, metadata viewing can greatly improve information sharing within the organization.

2. Data lineage and impact analysis

Data lineage (blood relationship) analysis and impact analysis mainly answer the question “what are the relationships among the data?” Because of their value, some vendors pull them out of metadata management as an independent, important function. The author, however, considers that lineage and impact analysis both derive from metadata, so they are still described here under metadata management.

Lineage analysis examines where data comes from: it records the sources and processing steps of the data as historical facts.

Take the lineage of a table as an example. Lineage analysis shows the following information:

Data lineage analysis is very valuable to users. For example, when problem data is found during data analysis, one can follow the lineage back to the root, quickly locate the source and processing steps of the problem data, and reduce the time and difficulty of analysis.

A typical application scenario for data lineage analysis: a business user finds quality problems in the data of the “Monthly Marketing Analysis” report and raises the issue with the IT department. Through metadata lineage analysis, the technicians find that the “Monthly Marketing Analysis” report is affected by four different data tables in the upstream FDM layer, quickly locate the source of the problem, and resolve it at low cost.

Besides lineage analysis there is impact analysis, which analyzes the downstream flow of data. When a system is upgraded and metadata such as data structures or ETL programs is modified, impact analysis can quickly identify which downstream systems the change will affect, reducing the risk of the upgrade. As this description shows, impact analysis and lineage analysis run in opposite directions: lineage analysis points to the upstream sources of data, and impact analysis points downstream.

A typical application scenario for impact analysis: an organization upgrading its business system modifies a field in the “FINAL_ZENT” table: the length of TRADE_ACCORD is changed from 8 to 64. The impact of this upgrade on subsequent related systems needs to be analyzed. Impact analysis on the metadata of “FINAL_ZENT” finds that related tables and ETL programs in the downstream DW layer are affected. Having located the impact, the IT department modifies the downstream programs and table structures in time to avoid problems. Data impact analysis thus helps pin down quickly the effects of a metadata change and nip possible problems in the bud.
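Both analyses are traversals of the same dependency graph in opposite directions, which the following Python sketch tries to show. Only the table name `FINAL_ZENT` comes from the scenario above; the class `LineageGraph`, the other node names, and the edges are hypothetical and stand in for relationships that a metadata tool would extract from ETL definitions.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple


class LineageGraph:
    """Directed graph of 'source feeds target' relationships."""

    def __init__(self, edges: Iterable[Tuple[str, str]]) -> None:
        self.downstream: Dict[str, Set[str]] = defaultdict(set)
        self.upstream: Dict[str, Set[str]] = defaultdict(set)
        for src, dst in edges:
            self.downstream[src].add(dst)
            self.upstream[dst].add(src)

    @staticmethod
    def _reach(start: str, neighbors: Dict[str, Set[str]]) -> List[str]:
        # Breadth-first search collecting every node reachable from `start`.
        seen: Set[str] = set()
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in neighbors.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return sorted(seen)

    def lineage(self, node: str) -> List[str]:
        """Upstream sources that feed `node` (lineage analysis)."""
        return self._reach(node, self.upstream)

    def impact(self, node: str) -> List[str]:
        """Downstream objects affected by a change to `node` (impact analysis)."""
        return self._reach(node, self.downstream)


# Hypothetical dependencies between a source table, DW tables, and a report.
graph = LineageGraph([
    ("FINAL_ZENT", "dw_trade_detail"),
    ("dw_trade_detail", "dw_trade_summary"),
    ("crm_customer", "dw_trade_summary"),
    ("dw_trade_summary", "monthly_marketing_report"),
])
print("impact of FINAL_ZENT:", graph.impact("FINAL_ZENT"))
print("lineage of report:", graph.lineage("monthly_marketing_report"))
```

Keeping both an upstream and a downstream index costs a little memory but makes each question a single traversal, which matters when the graph covers thousands of tables and jobs.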

3. Hot and cold data analysis

Hot and cold analysis mainly compiles statistics on how data tables are used, such as the relationships between tables and ETL programs, between tables and analytical applications, and between tables and other tables. From the perspectives of access frequency and business need, it analyzes how hot or cold the data is and shows an importance index for each table in charts.

Hot and cold data analysis is valuable to users. A typical application scenario: we observe that some data resources sit idle for a long time, not called by any application and not used by any other program. The user can then refer to the hot and cold data report and, combined with manual analysis, apply tiered storage to data of different temperatures to make better use of HDFS resources, or evaluate whether to take data that has lost its value offline to save storage space.
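A hot and cold report can start from very simple usage statistics. The Python sketch below classifies tables from two assumed inputs, the number of reads in the last 90 days and the date of last access. The thresholds, the `TableUsage` type, and the table names are illustrative assumptions; a real report would also weigh business requirements, as noted above.

```python
from datetime import date
from typing import Dict, NamedTuple


class TableUsage(NamedTuple):
    reads_last_90_days: int
    last_access: date


def classify_temperature(usage: TableUsage, today: date,
                         hot_reads: int = 100, cold_days: int = 180) -> str:
    """Label a table 'hot', 'warm', or 'cold' from simple usage statistics."""
    idle_days = (today - usage.last_access).days
    if usage.reads_last_90_days >= hot_reads:
        return "hot"
    if usage.reads_last_90_days == 0 and idle_days >= cold_days:
        return "cold"   # candidate for cheaper storage or taking offline
    return "warm"


# Hypothetical usage statistics gathered from access logs.
today = date(2022, 7, 1)
usage_stats: Dict[str, TableUsage] = {
    "dw_trade_summary": TableUsage(1500, date(2022, 6, 30)),
    "dw_branch_dim": TableUsage(12, date(2022, 6, 1)),
    "ods_survey_2019": TableUsage(0, date(2021, 3, 1)),
}
report = {name: classify_temperature(u, today) for name, u in usage_stats.items()}
print(report)
```

Tables labeled "cold" are only candidates; as the scenario above says, the decision to move or retire them still needs manual review.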

4. Data asset map

Processing metadata also makes applications such as the data asset map possible. A data asset map organizes information at the macro level: it merges and arranges information from a global perspective and shows data volumes, data changes, data storage, overall data quality, and similar information, giving the data management department and decision makers a reference.

5. Other applications of metadata management

Metadata management has other important functions, such as:

Metadata change management. Query the change history of metadata and compare the versions before and after a change.

Metadata comparative analysis. Compare similar pieces of metadata.

Metadata statistical analysis. Count the various kinds of metadata, for example by data type and quantity, so that users can grasp summary information about the metadata.

There are more applications of this kind than space allows us to list.
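Metadata change management, the first function listed above, comes down to comparing two snapshots of the same object. The sketch below assumes each snapshot is a plain dictionary of column name to (type, length). The TRADE_ACCORD lengths come from the earlier example, while the column types and the other columns are assumptions.

```python
def diff_columns(old, new):
    """Compare two {column: (type, length)} snapshots of one table."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    changed = {
        col: (old[col], new[col])
        for col in sorted(set(old) & set(new))
        if old[col] != new[col]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots before and after the upgrade.
BEFORE = {"TRADE_ID": ("VARCHAR", 32), "TRADE_ACCORD": ("VARCHAR", 8)}
AFTER = {
    "TRADE_ID": ("VARCHAR", 32),
    "TRADE_ACCORD": ("VARCHAR", 64),
    "TRADE_CHANNEL": ("VARCHAR", 16),
}

if __name__ == "__main__":
    print(diff_columns(BEFORE, AFTER))
```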

Four. Summary

Metadata is the household register and map of data, and it is the core and foundation of data governance.

Metadata is produced along the whole path from data production, data access, and data processing to data services and data applications. Overall it falls into three categories: technical metadata, business metadata, and management metadata.

Once metadata has been collected and stored, it supports applications such as hot and cold analysis, lineage analysis, impact analysis, and the data asset map. Metadata management lets data be described more clearly, understood more easily, and traced, and makes its value and impact easier to assess. It also greatly facilitates the sharing of information inside and outside the organization.

03 Data quality management of data governance

The theory and practice of data governance keep developing, but data quality management has always been the original intention of data governance and remains its most important purpose. The following sections cover the goal of data quality management, the root cause of quality problems, the criteria for assessing quality, the quality management process, and the trade-offs involved.

One. The goal of data quality management

Data quality management mainly answers four questions: how good is the data quality, who can improve it, how can it be improved, and how should it be assessed.

In the early era of relational databases, the main purpose of data governance was to improve data quality so that reports, analyses, and applications would be more accurate. Today the scope of data governance has expanded considerably to include data asset management, knowledge graphs, automated data governance, and more, but improving data quality remains one of its most important goals.

Why is data quality so important?

Because whether data can deliver its value depends on its quality. High-quality data is the foundation of every data application.

If an organization analyzes its business and makes decisions on the basis of poor data, it would be better off with no data at all, because analysis built on wrong data tends to produce "precise misdirection", and for any organization precise misdirection is a disaster.

According to statistics, data scientists and data analysts spend 30% of their time working out whether data is "bad data". In an environment where data quality is poor, doing data analysis is a nerve-racking business. Data quality problems have clearly begun to interfere with the normal running of the organization's business, and continuously improving data quality through disciplined data quality management has become an urgent priority.

Two. The root cause of data quality problems

To manage data quality, we first need to find out what causes the problems. There are many causes, spread across technology, management, and process. Fundamentally, though, most data quality problems originate in the business, that is, in poor management. Many problems that look technical on the surface turn out, on closer inspection, to be business problems.

When providing data governance consulting, the author has found that many customers do not recognize the root cause of their data quality problems. They try to solve the problem from a purely technical angle and hope that buying a tool will fix it, which naturally does not achieve the desired effect. After discussion and joint analysis, most organizations come to recognize the real root cause and start tackling data quality from the business side.

Solving data quality problems from the business side requires a sound, workable set of data quality assessment criteria and a management process.

Three. Criteria for data quality assessment

Data quality management needs assessment criteria. Only with criteria can we evaluate and quantify data quality, know in which direction to improve, and compare results before and after improvement.

The data quality dimensions currently recognized by the industry are:

Accuracy: whether the data matches the characteristics of the real-world entity it describes.

Completeness: whether records or fields are missing.

Consistency: whether the values of the same attribute of the same entity agree across different systems.

Validity: whether the data satisfies user-defined conditions or falls within a given value domain.

Uniqueness: whether the data contains duplicate records.

Timeliness: whether the data is produced and delivered in time.

Stability: whether fluctuations in the data are stable and stay within the valid range.

These dimensions are only general rules. They can be extended to suit the actual data and business requirements, for example with cross-table checks.
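Several of the dimensions above can be turned into simple measurable ratios. The sketch below covers completeness, uniqueness, and validity only. The sample records, field names, and the 0 to 120 age range are assumptions chosen for illustration, and real checks would follow the organization's own rules.

```python
# Hypothetical customer records containing typical quality defects.
RECORDS = [
    {"customer_id": "C001", "age": 34, "email": "a@example.com"},
    {"customer_id": "C002", "age": None, "email": "b@example.com"},
    {"customer_id": "C002", "age": 151, "email": ""},
    {"customer_id": "C003", "age": 27, "email": "c@example.com"},
]


def completeness(records, field):
    """Share of records in which `field` is neither None nor empty."""
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records, key):
    """Share of distinct values of `key`; 1.0 means no duplicates."""
    values = [r[key] for r in records]
    return len(set(values)) / len(values)


def validity(records, field, low, high):
    """Share of non-missing values of `field` inside [low, high]."""
    present = [r[field] for r in records if r.get(field) is not None]
    if not present:
        return 1.0
    return sum(1 for v in present if low <= v <= high) / len(present)


if __name__ == "__main__":
    print("completeness(age):", completeness(RECORDS, "age"))
    print("uniqueness(customer_id):", uniqueness(RECORDS, "customer_id"))
    print("validity(age):", validity(RECORDS, "age", 0, 120))
```

Expressing each dimension as a ratio between 0 and 1 makes it possible to compare quality before and after an improvement, which is the purpose of having criteria in the first place.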

Four. Data quality management process

Improving data quality starts with the problem data and centers on analyzing problems, solving them, tracking them, optimizing continuously, and accumulating knowledge, so that a closed loop of continuous improvement is formed.

First, sort out and analyze the data quality problems to understand the current state of data quality. Next, choose a suitable approach for each kind of problem and draw up a detailed solution. Then assign accountability, track how the solution performs, supervise and inspect, and keep optimizing. Finally, build a knowledge base of solved data quality problems for future reference. These steps are iterated and together form the closed loop of data quality management.

Clearly, tool support alone is not enough to manage data quality. An organizational structure and institutional processes must be involved so that someone is responsible for the data and can be held accountable for it.

Five. Trade-offs in data quality management

Neither enterprises nor governments live in a vacuum. They are tightly embedded in society, and solving any hard problem means taking social factors into account and making the right trade-offs.

The first trade-off: the data quality management process. The process described above is a fairly ideal state, and in practice different organizations enforce it with different rigor. Take data accountability: it is feasible inside an enterprise but hard to apply in government, because in a government big data project, whichever department leads the project is unlikely to have the authority to hold other departments accountable.

Faced with this kind of problem, we can only work around it and try to compensate for the missing link, for example by agreeing data cleaning rules with the data providers and cleaning the source data until it meets a usable standard.

The second trade-off: data from different time periods should be handled differently. Along the time dimension there are three kinds of data: future data, current data, and historical data. Each kind calls for its own trade-offs and its own approach to quality problems.

1. Historical data

When you take a pile of problematic historical data to the owner of an information system and ask for it to be fixed, you usually get a cold reception. The owner may keep you at arm's length with a reason such as "we cannot even solve the current data problems, so there is no time for your historical data". Asking a leader to coordinate generally does not help much either, because this is simply the reality: an organization's historical data has accumulated over many years, is already massive, and is hard to fix record by record.

Is there no better way? For historical data we can draw on the strengths of the technical staff and use data cleaning. For data that truly cannot be cleaned, the decision maker should weigh the cost against the benefit, and the outcome is often to accept the status quo.

Looked at from another angle, data of different freshness has different value. Generally, the older the historical data, the lower its value. So the most important resources should not go into improving the quality of historical data. The focus should be on data being generated now and data that will be generated in the future.

2. Current data

Problems with current data should be handled through the process described in section Four: sort out and identify the problems, analyze them, solve them, assign accountability, and track and evaluate the results. The process must be followed strictly so that dirty data does not keep flowing into data analysis and applications.

3. Future data

Managing future data must start with data planning. From the perspective of the whole organization's information systems, plan a unified data architecture and develop unified data standards. Use the opportunity of building, renovating, or rebuilding a business system to make physical modeling, table creation, ETL development, data services, and data use all follow the unified standards, improving data quality at the root. This is the ideal and most effective mode of data quality management.

In this way, by handling data from different periods differently, problems can be prevented beforehand, monitored in process, and corrected afterwards, solving the data quality problem at its root.

Summary

Improving data quality is one of the most important goals of data governance. To manage data quality, first recognize that the root cause of data quality problems lies in business management.

Second, establish a set of data quality assessment criteria and a data quality management process that fit the organizational structure.

Finally, take the current situation fully into account during data quality management and adopt different strategies for historical data, current data, and future data.

04 Data standard management of data governance

One. Big data standard system

According to the big data standard system drawn up by the big data standard working group of the National Information Technology Standardization Technical Committee, the framework consists of seven categories of standards: basic standards, data standards, technical standards, platform and tool standards, management standards, security and privacy standards, and industry application standards. This article deals with the second category, data standards.

Two. Several misunderstandings about data standards

The term "data standard" first appeared in the financial industry, especially in data governance for banking. Data standards have always been a basic and important part of data governance, but opinions about them differ.

Some people think data standards are extremely important: once the standards are established and all data-related work follows them, most data governance goals will be met as a matter of course.

Others believe data standards are of little use: a great deal of sorting is done and a comprehensive set of standards is built, only for it to be shelved and forgotten with hardly any effect.

The author's view is that both opinions are wrong, or at least one-sided. Data standardization is complex, wide-ranging, systematic, long-term work. It does not pay off quickly and will not solve most data governance problems overnight. Nor is it useless, leaving behind nothing but a pile of documents. If that is how a data standards effort ends, it only shows that the work was done badly and the standards were never implemented. The purpose of this article is to analyze why that happens and how to deal with it. The first step is to clarify what a data standard is.

Three. Definition of data standards

There is no unified definition of a data standard that all relevant organizations agree on. Drawing on the various descriptions and taking the data governance perspective, the author offers this definition: a data standard is a consistent agreement on how data is expressed, formatted, and defined, including unified definitions of its business attributes, technical attributes, and management attributes. Its purpose is to make the data used and exchanged inside and outside the organization consistent and accurate.
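One way to picture this definition is as a record that groups the three kinds of attributes. The sketch below is only an illustration: the class, its field names, and the `customer_no` example are assumptions, and the conformance check covers string length alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataStandard:
    """One data standard entry (field names are hypothetical)."""
    # Business attributes
    name: str
    business_definition: str
    # Technical attributes
    data_type: str
    max_length: int
    # Management attributes
    owner: str
    version: str

    def conforms(self, value):
        """Check a value against the technical attributes (length only)."""
        return isinstance(value, str) and 0 < len(value) <= self.max_length


# Hypothetical standard for a customer number.
CUSTOMER_NO = DataStandard(
    name="customer_no",
    business_definition="Unique number assigned to a customer",
    data_type="VARCHAR",
    max_length=32,
    owner="Data management department",
    version="1.0",
)

if __name__ == "__main__":
    print(CUSTOMER_NO.conforms("C0001"))
```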

Four. How to formulate data standards

For governments, data standard management measures are generally issued by national or local authorities and specify the relevant data standards in detail. This section therefore concentrates on how enterprises formulate data standards.

An enterprise's data standards draw on many sources: external regulatory requirements, general industry standards, and the actual state of the enterprise's internal data, from which business indicators, data items, codes, and so on are sorted out. Not everything from these sources needs to go into the data standards. Their scope should focus on the core data of the enterprise's business, which some enterprises call key business data or core data. Once standards for this core data are in place, they can support data quality, master data management, data analysis, and similar work.

Five. The difficulties of data standardization

Data standards are easy to formulate but much harder to implement. Data standardization has been developing in China for many years, and organizations in every industry are building their own data standards, yet one rarely hears of an organization that has promoted its standards well. In other words, there are few cases in which data standards have delivered significant results. There are two main reasons.

The first is that the standards themselves are flawed. Some standards blindly pursue sophistication and try to keep up with industry leaders. They are large and all-encompassing but divorced from the actual data, which makes them hard to put into practice.

The second reason lies in how standardization is promoted. This is the one we focus on, and it mainly takes the following forms:

The purpose of building data standards is unclear. Some organizations build data standards not to guide information system construction, improve data quality, or make data easier to process and exchange, but to get through regulatory inspections. What they need is a pile of standards and policy documents, with no intention of implementing them.

Over-reliance on consulting firms. Some organizations lack the capacity to build data standards and bring in a consulting company to plan and implement them. Once the consultants leave, the organization still lacks the ability and conditions to carry the standards out.

The difficulty of data standardization is underestimated. Many companies say they need data standards without realizing how large the scope is. Standardization is hard to complete in a single project and is instead a long-term process of continual advancement. As a result, the further customers go, the more resistance they meet and the harder it becomes, until they lose confidence and shelve the results produced in the early stages. This is the most common problem.

No system or process planning for implementation. Implementing data standards requires cooperation across multiple systems and departments. If the standards are sorted out but there is no concrete plan for putting them into practice, and there is no support from technical staff, business units, and system developers, above all no support from leadership, implementation is impossible.

Insufficient organizational and management capability. Because implementing data standards is long-term, complex, and systematic, the organization driving it must keep its management capability at a high level and its structure stable in order to move forward in an orderly way.

These are the reasons data standardization is hard to carry out and even harder to make effective. Difficulty in implementing data standards is the current reality of the data governance industry and cannot be avoided.

Six. How to deal with these problems

The most economical and ideal way to deal with these problems is, of course, to set the standards first when building big data capabilities, and only then build the big data platform, the data warehouse, and so on. But few organizations have this awareness at the outset. Most of the time we build first and govern later: the information systems and data center are built, and only when the lack of standards and poor quality cause trouble are data standards drawn up. By then the work is remedial, and part of the customer's investment is inevitably wasted.

Because the ideal model is so idealistic, it is almost never seen. In practice we more often have to think about how to implement data standards in existing systems and big data platforms.

Data standards can be put into practice in three ways:

Source system transformation: modifying the source systems is the most direct way to implement data standards and helps control the quality of future data, but the workload and difficulty are high, so in practice this route is often avoided. Take a customer number field that is used in multiple systems: its reach is wide, its importance high, and its influence large, and once it is modified every related system has to be modified too. The route is not completely infeasible, however. The opportunity of a system renovation or relaunch can be used to bring part of the source system data in line with the standards.

Data center implementation: build a data center (or data warehouse) according to the data standards and map the source system data into it, ensuring that the data delivered to the data center is standardized. This approach is highly feasible and is the choice of most organizations.

Data interface standardization: modify the existing data transfer interfaces between systems so that all data passed between systems follows the data standards. This is also a feasible method.
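The data center approach described above amounts to mapping each source system's values onto the standard values at load time. The sketch below assumes a hypothetical gender code standard and two invented source systems. None of the codes or system names come from the article.

```python
# Hypothetical standard code set and per-system mappings onto it.
STANDARD_GENDER = {"M", "F", "U"}  # U = unknown
SOURCE_CODE_MAPS = {
    "crm": {"1": "M", "2": "F"},
    "billing": {"male": "M", "female": "F"},
}


def standardize(system, record, field="gender"):
    """Return a copy of `record` with `field` mapped to the standard code.

    Values that the source system's mapping does not cover become "U",
    so the data center never receives a non-standard code.
    """
    mapping = SOURCE_CODE_MAPS[system]
    raw = str(record.get(field, "")).strip().lower()
    standardized = dict(record)
    standardized[field] = mapping.get(raw, "U")
    return standardized


if __name__ == "__main__":
    print(standardize("crm", {"id": 1, "gender": "1"}))
    print(standardize("billing", {"id": 2, "gender": " Female "}))
```

Returning a copy leaves the source record untouched, which matches the idea that the source systems stay as they are while only the data center holds standardized data.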

Six things need to be done well when implementing data standards, as shown in the figure below:

Determine the implementation scope in advance: which data standards are to be implemented and which IT systems are involved must be decided beforehand.

Analyze the differences in advance: work out where the existing data differs from the data standards and what those differences are.

Analyze the impact in advance: if these data standards are implemented, what effect will they have on the related systems, and is that effect controllable? Impact analysis in metadata management can help determine the scope of the impact.

Draw up the implementation plan: the plan should focus on being practicable, because a plan that cannot be carried out will end up abandoned. A practicable plan must include an organizational structure and division of labor, who is responsible for what, how results are assessed, and how the work is supervised.

Carry out the plan: implement the data standards according to the plan.

Evaluate afterwards: follow up and evaluate the effect of the implementation, covering what was done right, what fell short, and how to improve.
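The difference analysis step in the list above can be sketched as a comparison between the standard definitions and each system's actual column definitions. The standard entries, system names, and column attributes below are all hypothetical.

```python
# Hypothetical data standard: column name -> required attributes.
STANDARD = {
    "customer_no": {"type": "VARCHAR", "length": 32},
    "trade_date": {"type": "DATE", "length": None},
}

# Hypothetical current definitions in two source systems.
SYSTEM_COLUMNS = {
    "crm": {
        "customer_no": {"type": "VARCHAR", "length": 20},
        "trade_date": {"type": "DATE", "length": None},
    },
    "billing": {
        "customer_no": {"type": "CHAR", "length": 32},
    },
}


def gap_analysis(standard, systems):
    """List (system, column, attribute, expected, actual) for every gap."""
    gaps = []
    for system in sorted(systems):
        columns = systems[system]
        for name in sorted(standard):
            expected = standard[name]
            actual = columns.get(name)
            if actual is None:
                # The system does not hold this standard column at all.
                gaps.append((system, name, "missing", None, None))
                continue
            for attr in sorted(expected):
                if actual.get(attr) != expected[attr]:
                    gaps.append(
                        (system, name, attr, expected[attr], actual.get(attr))
                    )
    return gaps


if __name__ == "__main__":
    for gap in gap_analysis(STANDARD, SYSTEM_COLUMNS):
        print(gap)
```

The resulting list can feed the next steps: each gap identifies a system and column whose downstream impact has to be assessed before the implementation plan is written.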

Seven. Summary

Building data standards can be roughly divided into two stages:

1. Sorting out and formulating the data standards.

2. Implementing the data standards.

The second stage is the recognized difficulty. This article has analyzed the reasons and offered some methods for putting data standards into practice faster and more effectively.

边栏推荐

猜你喜欢

![[JS] [Nuggets] get people who are not followers](/img/cc/bc897cf3dc1dc57227dbcd8983cd06.png)

[JS] [Nuggets] get people who are not followers

Desai wisdom number - other charts (parallel coordinate chart): employment of fresh majors in 2021

最新微信ipad协议 CODE获取 公众号授权等

(translation) reasons why real-time inline verification is easier for users to make mistakes

FL Studio20.9水果软件高级中文版电音编曲

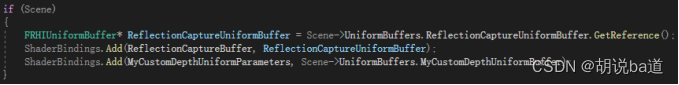

UE4渲染管线学习笔记

Pulsar Geo Replication/灾备/地域复制

VirtualBox installation enhancements

CorelDRAW 2022中文精简64位直装版下载

@ConfigurationProperties和@Value的区别

随机推荐

Rocketqa: cross batch negatives, de noised hard negative sampling and data augmentation

SAP ALV汇总跟导出Excel 汇总数据不一致

map数组函数

import tensorflow.contrib.slim as slim报错

如何学习和阅读代码

js中的图片预加载

如何在智汀中实现智能锁与灯、智能窗帘电机场景联动?

[graduation season · advanced technology Er] - summary from graduation to work

I want to know how to open a stock account? Is it safe to open an account online?

C # generates PPK files in putty format (supports passphrase)

手机上怎么开户?还有,在线开户安全么?

How does ZABBIX configure alarm SMS? (alert SMS notification setting process)

(translation) reasons why real-time inline verification is easier for users to make mistakes

URL和URI

SWT/ANR问题--ANR/JE引发SWT

Applet custom top navigation bar, uni app wechat applet custom top navigation bar

7_ Openresty installation

UE4渲染管线学习笔记

AI edge computing platform - beaglebone AI 64 introduction

Go import self built package