
My NVIDIA Developer Journey: Jetson Nano 2GB Teaches You How to Train a Model (A Complete Model Training Routine)

2022-06-28 12:26:00 【Driving without a license】

The "My NVIDIA Developer Journey" essay contest is in progress.

Model saving and loading

I won't cover how to install PyTorch here: it is recorded in my previous articles, and the information on NVIDIA's official website is already very detailed. Make sure the PyTorch build you install matches your JetPack version, because that is where most mysterious problems come from.
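Before training, it helps to confirm which PyTorch build is actually installed and whether it can see the GPU. The sketch below is a minimal check under a few assumptions: `environment_report` is a hypothetical helper name, and `/etc/nv_tegra_release` is the file where Jetson (L4T) systems typically record the L4T release, which you would then map to a JetPack version yourself. On other machines that file is simply skipped.

```python
import os

import torch


def environment_report(release_file="/etc/nv_tegra_release"):
    """Hypothetical helper: collect version info useful when matching PyTorch to JetPack."""
    report = {
        "torch": torch.__version__,
        "cuda_build": torch.version.cuda,            # None for a CPU-only PyTorch build
        "cuda_available": torch.cuda.is_available(),
        "cudnn": torch.backends.cudnn.version(),     # None when cuDNN is unavailable
    }
    # Assumption: on Jetson (L4T) systems this file's first line names the L4T release
    if os.path.exists(release_file):
        with open(release_file) as f:
            report["l4t_release"] = f.readline().strip()
    return report


for key, value in environment_report().items():
    print(f"{key}: {value}")
```

If `cuda_available` prints `False` on a Jetson, the installed wheel most likely does not match the JetPack release.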

How to install PyCharm is also fully documented in my earlier articles; together these make up the basic environment. Today we use PyTorch.

Training a neural network generally involves the following steps:

1. Load the dataset and preprocess it.
2. Split the preprocessed data into features and labels: the features are fed to the model, and the labels serve as the ground truth.
3. The model takes the features as input and, through a series of operations, outputs a prediction.
4. Build a loss function from the prediction and the ground truth; its value measures the gap between the two.
5. Create an optimizer whose objective is to make the loss as small as possible. Generally, a smaller loss means the model's predictions are more accurate.
6. During optimization, the model updates its parameter weights according to the optimizer's update rule. This is an iterative process that continues until the loss stabilizes and stops decreasing.
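To map these six steps onto PyTorch calls, here is a minimal sketch on made-up data. The toy dataset (points on the line y = 3x + 1), the single `nn.Linear` layer, the MSE loss, and the hyperparameters are all assumptions chosen for illustration; they are not part of the CIFAR-10 script.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Steps 1-2: toy dataset; features go into the model, labels are the ground truth
features = torch.linspace(-1, 1, 100).unsqueeze(1)   # shape (100, 1)
labels = 3 * features + 1                            # ground truth for each feature

# Step 3: the model turns features into predictions
model = nn.Linear(1, 1)

# Step 4: the loss measures the gap between prediction and ground truth
loss_fn = nn.MSELoss()

# Step 5: the optimizer's objective is to make the loss small
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# Step 6: iterate, letting the optimizer adjust the weights until the loss stops shrinking
losses = []
for step in range(200):
    predict = model(features)
    loss = loss_fn(predict, labels)
    optimizer.zero_grad()   # clear gradients from the previous step
    loss.backward()         # compute gradients of the loss w.r.t. the weights
    optimizer.step()        # update the weights
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.8f}")
print(f"learned weight {model.weight.item():.3f}, bias {model.bias.item():.3f}")  # close to 3 and 1
```

The CIFAR-10 script follows the same pattern, with a convolutional network in place of `nn.Linear` and cross-entropy in place of MSE.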

CIFAR-10 and CIFAR-100 are labeled subsets of the 80 Million Tiny Images dataset. They were collected by Alex Krizhevsky, Vinod Nair, and Geoffrey Hinton.

CIFAR-10 dataset

The CIFAR-10 dataset consists of 60,000 32x32 color images in 10 classes, with 6,000 images per class. There are 50,000 training images and 10,000 test images.

The dataset is divided into five training batches and one test batch, each containing 10,000 images. The test batch contains exactly 1,000 randomly selected images from each class. The training batches contain the remaining images in random order, so an individual training batch may contain more images from one class than from another. Taken together, however, the training batches contain exactly 5,000 images from each class.
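The bookkeeping of this split can be checked with a toy simulation. This is only a sketch of the counting, not how the real CIFAR-10 files were produced: integer image ids stand in for images, and `split_like_cifar10` is a hypothetical helper name.

```python
import random
from collections import Counter

NUM_CLASSES = 10
IMAGES_PER_CLASS = 6000
TEST_PER_CLASS = 1000
NUM_TRAIN_BATCHES = 5
BATCH_SIZE = 10000


def split_like_cifar10(seed=0):
    """Toy reconstruction of the CIFAR-10 split using image ids instead of pixels."""
    rng = random.Random(seed)
    # Group image ids by class: 6,000 ids per class
    by_class = {c: [] for c in range(NUM_CLASSES)}
    for image_id in range(NUM_CLASSES * IMAGES_PER_CLASS):
        by_class[image_id % NUM_CLASSES].append(image_id)

    test_batch, remaining = [], []
    for c, ids in by_class.items():
        rng.shuffle(ids)
        # Exactly 1,000 randomly chosen images of each class go to the test batch
        test_batch += [(i, c) for i in ids[:TEST_PER_CLASS]]
        remaining += [(i, c) for i in ids[TEST_PER_CLASS:]]

    # The other 50,000 images are shuffled and cut into five batches of 10,000
    rng.shuffle(remaining)
    train_batches = [remaining[k * BATCH_SIZE:(k + 1) * BATCH_SIZE]
                     for k in range(NUM_TRAIN_BATCHES)]
    return train_batches, test_batch


train_batches, test_batch = split_like_cifar10()
print("test batch, class 0:", Counter(c for _, c in test_batch)[0])
print("training batch 1, class 0:", Counter(c for _, c in train_batches[0])[0])  # varies around 1000
total = Counter(c for batch in train_batches for _, c in batch)
print("all training batches, per class:", set(total.values()))
```

The per-class count inside a single training batch fluctuates, while the totals across all five batches always come out to 5,000 per class, matching the description above.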

When the training script is run, the dataset is downloaded automatically and stored in the data folder under the current directory.


```python
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader

from model import Tudui  # the network defined in model.py

# Check that PyTorch can see the Jetson's GPU
print('CUDA available: ' + str(torch.cuda.is_available()))
if torch.cuda.is_available():
    a = torch.zeros(2, device='cuda')
    print('Tensor a = ' + str(a))
    b = torch.randn(2).cuda()
    print('Tensor b = ' + str(b))
    c = a + b
    print('Tensor c = ' + str(c))

# Prepare the datasets (downloaded into ./data on the first run)
train_data = torchvision.datasets.CIFAR10(root="./data", train=True,
                                          transform=torchvision.transforms.ToTensor(),
                                          download=True)
test_data = torchvision.datasets.CIFAR10(root="./data", train=False,
                                         transform=torchvision.transforms.ToTensor(),
                                         download=True)

# Dataset sizes (e.g. if train_data_size were 10, the training set would hold 10 samples)
train_data_size = len(train_data)
test_data_size = len(test_data)
print("Training set size: {}".format(train_data_size))
print("Test set size: {}".format(test_data_size))

# Load the datasets with DataLoader
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# Build the neural network
tudui = Tudui()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer; 1e-2 = 1 x 10^(-2) = 1/100 = 0.01
learning_rate = 1e-2
optimizer = torch.optim.SGD(tudui.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0  # number of training steps so far
total_test_step = 0   # number of test steps so far
epoch = 10            # number of training epochs

for i in range(epoch):
    print("---------- Epoch {} training starts ----------".format(i + 1))

    # Training
    for data in train_dataloader:
        imgs, targets = data
        outputs = tudui(imgs)
        loss = loss_fn(outputs, targets)

        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step = total_train_step + 1
        print("Training step: {}, loss: {}".format(total_train_step, loss.item()))
```

The difference between an epoch and an iteration: an iteration is one training step on a single mini-batch, while the number of iterations in an epoch depends on the size of the dataset and the batch size.

The CIFAR-10 training set has 50,000 images. With a batch size of 100, it takes 500 iterations to finish one epoch.

An epoch can roughly be thought of as the neural network going through every image in the training set once, from start to finish.
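The same arithmetic applies to the script's own setting of `batch_size=64`. The helper below is hypothetical; its `drop_last` flag mirrors the parameter of the same name on `torch.utils.data.DataLoader`, which decides whether an incomplete final batch is kept.

```python
import math


def iterations_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of mini-batches (iterations) needed to see every sample once."""
    if drop_last:
        # The incomplete final batch is discarded
        return num_samples // batch_size
    # The final batch may be smaller than batch_size
    return math.ceil(num_samples / batch_size)


print(iterations_per_epoch(50000, 100))                 # 500
print(iterations_per_epoch(50000, 64))                  # 782; the last batch holds 16 images
print(iterations_per_epoch(50000, 64, drop_last=True))  # 781
print(10 * iterations_per_epoch(50000, 64))             # 7820 iterations for epoch = 10
```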

The results of running the training script are as follows.

```python
# ~/PycharmProjects/pythonProject/model.py
import torch
from torch import nn


class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


if __name__ == '__main__':
    # Sanity check: a batch of 64 CIFAR-10-sized images should give 64 x 10 outputs
    tudui = Tudui()
    input = torch.ones((64, 3, 32, 32))
    output = tudui(input)
    print(output.shape)  # torch.Size([64, 10])
```
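Where does `64 * 4 * 4` come from? Each `Conv2d(..., 5, 1, 2)` (kernel 5, stride 1, padding 2) keeps the spatial size, and each `MaxPool2d(2)` (whose stride defaults to its kernel size) halves it, so 32 becomes 16, then 8, then 4. The sketch below traces this with the standard output-size formula for dilation 1 and no ceil mode; `out_size` is a hypothetical helper and the layer table is copied by hand from `model.py`.

```python
def out_size(size, kernel, stride, padding=0):
    """Spatial output size of Conv2d / MaxPool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


# (layer, out_channels or None if unchanged, kernel, stride, padding), taken from Tudui
layers = [
    ("Conv2d(3, 32, 5, 1, 2)", 32, 5, 1, 2),
    ("MaxPool2d(2)", None, 2, 2, 0),
    ("Conv2d(32, 32, 5, 1, 2)", 32, 5, 1, 2),
    ("MaxPool2d(2)", None, 2, 2, 0),
    ("Conv2d(32, 64, 5, 1, 2)", 64, 5, 1, 2),
    ("MaxPool2d(2)", None, 2, 2, 0),
]

channels, size = 3, 32  # one CIFAR-10 image: 3 x 32 x 32
for name, out_channels, kernel, stride, padding in layers:
    size = out_size(size, kernel, stride, padding)
    if out_channels is not None:
        channels = out_channels
    print(f"{name:<24} -> {channels} x {size} x {size}")

flattened = channels * size * size
print("Flatten ->", flattened)  # 1024, the in_features of nn.Linear(64 * 4 * 4, 64)
```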

Training this on a Jetson Nano is tough going; if you can, try a more powerful Jetson NX or Orin instead.
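Whichever board you use, the training loop above runs on the CPU unless the model and each batch are moved to the GPU. Below is a minimal sketch of that change, assuming random stand-in data with CIFAR-10 shapes and a tiny stand-in model; `train_one_epoch` is a hypothetical helper, not part of the original script. It falls back to the CPU when CUDA is not available.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# Train on the GPU when PyTorch can see it, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def train_one_epoch(model, dataloader, loss_fn, optimizer, device):
    """Hypothetical helper: one pass over dataloader, returns the last batch's loss."""
    model.train()
    last_loss = None
    for imgs, targets in dataloader:
        # Each batch must be on the same device as the model's parameters
        imgs, targets = imgs.to(device), targets.to(device)
        outputs = model(imgs)
        loss = loss_fn(outputs, targets)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        last_loss = loss.item()
    return last_loss


torch.manual_seed(0)
# Random stand-in data with CIFAR-10 shapes (3 x 32 x 32 images, 10 classes)
x = torch.randn(256, 3, 32, 32)
y = torch.randint(0, 10, (256,))
loader = DataLoader(TensorDataset(x, y), batch_size=64)

# Stand-in model; move it to the device BEFORE constructing the optimizer
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=1e-2)

last_loss = train_one_epoch(model, loader, loss_fn, optimizer, device)
print(f"device: {device}, last batch loss: {last_loss:.4f}")
```

In the original script the equivalent changes would be `tudui = Tudui().to(device)` before creating the optimizer, plus `imgs, targets = imgs.to(device), targets.to(device)` inside the training loop.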

边栏推荐

- IDEA全局搜索快捷设置

- Url追加参数方法,考虑#、?、$的情况

- [C language] use of nested secondary pointer of structure

- 【附源码+代码注释】误差状态卡尔曼滤波(error-state Kalman Filter),扩展卡尔曼滤波,实现GPS+IMU融合,EKF ESKF GPS+IMU

- JNI confusion of Android Application Security

- AcWing 607. Average 2 (implemented in C language)

- AcWing 604. Area of circle (implemented in C language)

- 【C语言】判断三角形

- 智联招聘基于 Nebula Graph 的推荐实践分享

- Levels – virtual engine scene production "suggestions collection"

猜你喜欢

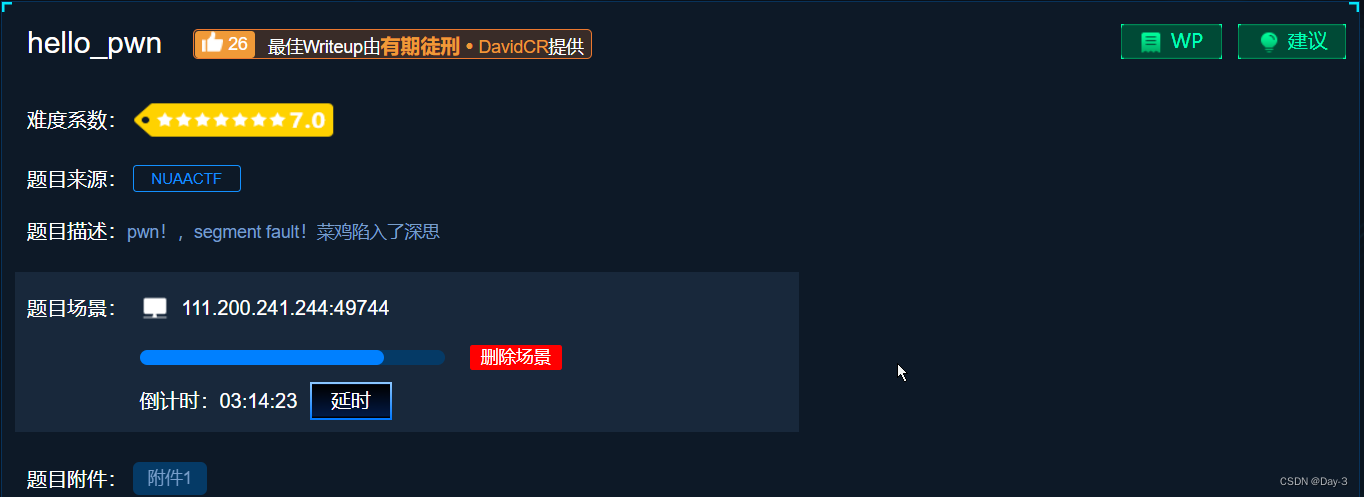

攻防世界新手入门hello_pwn

Unity Editor Extension Foundation, GUI

Multi dimensional monitoring: the data base of intelligent monitoring

In less than an hour, apple destroyed 15 startups

为什么CAD导出PDF没有颜色

Leetcode 705. 设计哈希集合

Function and principle of remoteviews

![[unity Editor Extension practice], find all prefabrications through code](/img/0b/10fec4e4d67dfc65bd94f7f9d7dbe7.png)

[unity Editor Extension practice], find all prefabrications through code

Bytev builds a dynamic digital twin network security platform -- helping network security development

最新!基于Open3D的点云处理入门与实战教程

随机推荐

2022年理财产品的常见模式有哪些?

Unity加载设置:Application.backgroundLoadingPriority

fatal: unsafe repository (‘/home/anji/gopath/src/gateway‘ is owned by someone else)

[source code + code comments] error state Kalman filter, extended Kalman filter, gps+imu fusion, EKF eskf gps+imu

设置Canvas的 overrideSorting不生效

NFT数字藏品系统开发(3D建模经济模型开发案例)

【Unity编辑器扩展实践】、查找所有引用该图片的预制体

【C语言】结构体嵌套二级指针的使用

【C语言】关于scanf()与scanf_s()的一些问题

杰理之wif 干扰蓝牙【篇】

不到一小时,苹果摧毁了15家初创公司

Map排序工具类

Url追加参数方法,考虑#、?、$的情况

Unity导入资源后还手动修改资源的属性?这段代码可以给你节约很多时间:AssetPostprocessor

杰理之wif 干扰蓝牙【篇】

Prefix and (2D)

UDP传输rtp数据包丢帧

UGUI使用小技巧(六)Unity实现字符串竖行显示

EMC RS485 interface EMC circuit design scheme

结构光之相移法+多频外差的数学原理推导