
Redis series (3) - Sentinel high availability

2022-06-24 17:56:00 【bojiangzhou】

The Sentinel cluster

The previous article introduced the Redis master-replica architecture. In that mode the master is a single point of failure: if it fails, clients can no longer send write requests, and the replicas can no longer replicate and synchronize data from it. So when Redis is deployed in master-replica mode, a Sentinel cluster is usually deployed as well to keep Redis highly available. When the master fails, Sentinel performs an automatic failover and promotes a replica to be the new master. This article looks at how to deploy a Sentinel cluster and how it works.

Sentinel deployment architecture

The Sentinel mechanism is the official Redis high-availability solution. Sentinel itself is deployed as a distributed cluster and needs at least 3 nodes to work properly.

In the last article, we deployed one master and two slaves , On top of that , We start another sentinel instance on each server , A group of three sentinels to monitor Redis Master-slave cluster , The deployment architecture is shown in the figure below , This is also a classic 3 Node sentry cluster .

Deploying the Sentinels

A Sentinel is really a Redis process running in a special mode, so Redis must be installed before deploying Sentinels. For the installation steps, see Redis series (1) - Stand-alone installation and data persistence.

After installing Redis, copy the Sentinel configuration file to /etc/redis/:

# cp /usr/local/src/redis-6.2.5/sentinel.conf /etc/redis/

Then modify the following settings in the configuration file:

| Setting | Value | Description |
|---|---|---|
| bind | <ip> | Bind to this machine's IP |
| port | 26379 | Sentinel port |
| daemonize | yes | Run in the background |
| pidfile | /var/run/redis-sentinel.pid | PID file |
| logfile | /var/redis/log/26379.log | Log file |
| dir | /var/redis/26379 | Working directory |
| sentinel monitor | <master-name> <ip> <redis-port> <quorum> | Master to monitor |

The sentinel monitor <master-name> <ip> <redis-port> <quorum> directive can appear multiple times, because one Sentinel cluster can monitor several Redis master-replica clusters. For example:

sentinel monitor mymaster 172.17.0.2 6379 2
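Because several sentinel monitor lines may appear in one file, here is a minimal Python sketch (a hypothetical helper, not part of Redis) that collects them into one entry per master name. It only models the four-argument syntax shown above, and the second master in the sample configuration is made up for illustration.

```python
from typing import Dict, NamedTuple


class MonitoredMaster(NamedTuple):
    ip: str
    port: int
    quorum: int


def parse_sentinel_monitors(conf_text: str) -> Dict[str, MonitoredMaster]:
    """Collect every 'sentinel monitor <master-name> <ip> <redis-port> <quorum>' line."""
    masters: Dict[str, MonitoredMaster] = {}
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split()
        if len(parts) == 6 and parts[0].lower() == "sentinel" and parts[1].lower() == "monitor":
            name, ip, port, quorum = parts[2:]
            masters[name] = MonitoredMaster(ip, int(port), int(quorum))
    return masters


# Sample configuration; "othermaster" and 10.0.0.5 are hypothetical.
conf = """
port 26379
sentinel monitor mymaster 172.17.0.2 6379 2
sentinel monitor othermaster 10.0.0.5 6380 3
"""
print(parse_sentinel_monitors(conf))
```

Keying the result by master name mirrors how each monitored master is addressed by name in later commands such as sentinel master mymaster.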

Create the working directory:

# mkdir -p /var/redis/26379

Switch to the Redis source directory:

# cd /usr/local/src/redis-6.2.5/src

Start the Sentinel on each machine:

./redis-sentinel /etc/redis/sentinel.conf

Check whether the Sentinel started successfully:

# ps -ef | grep redis
root 185 1 0 03:05 ? 00:00:05 /usr/local/bin/redis-server 172.17.0.4:6379
root 213 1 0 03:37 ? 00:00:00 ./redis-sentinel 172.17.0.4:26379 [sentinel]

Checking the Sentinel status

After startup, check the Sentinel log. It shows which master each Sentinel monitors, and that the Sentinel has automatically discovered the corresponding replicas and the other Sentinels.

# cat /var/redis/log/26379.log
213:X 28 Dec 2021 03:37:46.227 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
213:X 28 Dec 2021 03:37:46.227 # Redis version=6.2.5, bits=64, commit=00000000, modified=0, pid=213, just started
213:X 28 Dec 2021 03:37:46.227 # Configuration loaded
213:X 28 Dec 2021 03:37:46.227 * monotonic clock: POSIX clock_gettime
213:X 28 Dec 2021 03:37:46.228 * Running mode=sentinel, port=26379.
213:X 28 Dec 2021 03:37:46.228 # Sentinel ID is f43d3d4bca3f809be708c17e4c1c8951de5c6d9d
213:X 28 Dec 2021 03:37:46.228 # +monitor master mymaster 172.17.0.2 6379 quorum 2
213:X 28 Dec 2021 03:37:46.230 * +slave slave 172.17.0.4:6379 172.17.0.4 6379 @ mymaster 172.17.0.2 6379
213:X 28 Dec 2021 03:37:46.234 * +slave slave 172.17.0.3:6379 172.17.0.3 6379 @ mymaster 172.17.0.2 6379
213:X 28 Dec 2021 03:37:47.955 * +sentinel sentinel fbeaca3b7cc3a7fee7660dd2e239683021861d69 172.17.0.3 26379 @ mymaster 172.17.0.2 6379
213:X 28 Dec 2021 03:37:48.063 * +sentinel sentinel 018048f0adc0523b4b13d8ddde04c8882bb3d9af 172.17.0.2 26379 @ mymaster 172.17.0.2 6379

You can then connect to the Sentinel:

redis-cli -h 172.17.0.4 -p 26379

Then check the Sentinel's state with the following commands:

sentinel master mymaster
sentinel slaves mymaster
sentinel sentinels mymaster
sentinel get-master-addr-by-name mymaster

Removing a Sentinel node

When a Sentinel node is added, the cluster discovers the new instance automatically.

To remove a Sentinel, follow these steps:

- Stop the Sentinel process you want to remove.

- On all remaining Sentinels, run SENTINEL RESET * to clear the saved master state.

- On all Sentinels, run SENTINEL MASTER mymaster and check that they all agree on the number of Sentinels.

How Sentinel is organized

Basic operating mechanism

As mentioned above, a Sentinel is a Redis process running in a special mode, and it is a key component of the Redis cluster architecture. Sentinel is mainly responsible for three tasks: monitoring, master selection, and notification.

- Monitoring: checks whether the Redis master and replica processes are working properly.

- Master selection: if the master goes down, elects a new master from the replicas.

- Notification: if a failover happens, tells clients the address of the new master.

While running, a Sentinel periodically sends PING commands to the master and all replicas to check whether they are online. If a replica does not respond to the PING within the configured time, the Sentinel marks it as offline; if the master does not respond within that time, the Sentinel decides the master is offline and starts the automatic master switchover.

The first step of the automatic switchover is master selection: after the master goes down, Sentinel picks one of the replicas according to certain rules and promotes it, so the cluster has a new master.

After selecting the new master, Sentinel sends its connection information to the other replicas and has them execute the replicaof command to connect to the new master and replicate its data. At the same time, Sentinel notifies clients of the new master's connection information so that they send their requests to it.

Cluster discovery mechanism

The minimum configuration needed to start a Sentinel is:

sentinel monitor <master-name> <ip> <redis-port> <quorum>

Once started, a Sentinel can connect to the master. But how does it find out about the replicas?

Sentinel sends the INFO command to the master, and the reply includes information about both the master and its replicas. With the replica information, Sentinel can connect to each replica and periodically send PING commands to check the health of the master and replicas.
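Replica entries in the INFO replication section have the form slaveN:ip=...,port=...,state=...,offset=...,lag=.... The Python sketch below only illustrates what a Sentinel extracts from that text; the real parsing is done inside Sentinel's C code, and the sample reply (including its offsets) is made up.

```python
from typing import Dict, List


def parse_replicas(info_text: str) -> List[Dict[str, str]]:
    """Return one dict per 'slaveN:ip=...,port=...' line of INFO replication output."""
    replicas = []
    for line in info_text.splitlines():
        key, sep, value = line.strip().partition(":")
        # Only slave0, slave1, ... are replica entries; fields such as
        # connected_slaves or slave_repl_offset are skipped.
        if sep and key.startswith("slave") and key[5:].isdigit():
            replicas.append(dict(item.split("=", 1) for item in value.split(",")))
    return replicas


# Made-up sample in the INFO replication layout (offsets are illustrative).
sample = (
    "# Replication\r\n"
    "role:master\r\n"
    "connected_slaves:2\r\n"
    "slave0:ip=172.17.0.3,port=6379,state=online,offset=1000,lag=0\r\n"
    "slave1:ip=172.17.0.4,port=6379,state=online,offset=998,lag=1\r\n"
    "master_repl_offset:1000\r\n"
)
for r in parse_replicas(sample):
    print(r["ip"], r["port"], r["offset"])
```

The ip and port fields are what a Sentinel needs to open its own connection to each replica; the offset field becomes relevant later when a new master is chosen.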

The replica information in the INFO output:

Sentinel discovery mechanism

A Sentinel is configured only with the master's address, so how does it learn about the other Sentinels in the cluster? Sentinels need to discover each other because, when judging whether the master is down and when electing a leader, they must vote together.

Sentinels discover each other through the Redis pub/sub (publish/subscribe) mechanism. Every 2 seconds, each Sentinel publishes a message to the __sentinel__:hello channel on the monitored master, containing its own IP, port, instance ID, and other details. The other Sentinels subscribed to the channel receive the message, learn about that Sentinel, and establish a connection with it.

Each Sentinel also includes its current configuration for the master in the message, so Sentinels exchange and synchronize their master configurations with each other.
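To make the hello exchange concrete, here is a Python sketch that parses a hello payload and records the peer. The eight-field layout (Sentinel IP, port, run ID, current epoch, then master name, IP, port, and config epoch) is my reading of the Redis source and should be treated as an assumption; on_hello and known_sentinels are hypothetical names for illustration only.

```python
from typing import Dict, NamedTuple, Tuple


class Hello(NamedTuple):
    sentinel_ip: str
    sentinel_port: int
    sentinel_runid: str
    current_epoch: int
    master_name: str
    master_ip: str
    master_port: int
    master_config_epoch: int


def parse_hello(payload: str) -> Hello:
    """Split a __sentinel__:hello payload into eight comma-separated fields (assumed layout)."""
    parts = payload.split(",")
    if len(parts) != 8:
        raise ValueError(f"expected 8 fields, got {len(parts)}")
    ip, port, runid, cur_epoch, name, m_ip, m_port, m_epoch = parts
    return Hello(ip, int(port), runid, int(cur_epoch), name, m_ip, int(m_port), int(m_epoch))


# Hypothetical peer table: run ID -> (ip, port) of every other Sentinel seen so far.
known_sentinels: Dict[str, Tuple[str, int]] = {}


def on_hello(payload: str, my_runid: str) -> None:
    hello = parse_hello(payload)
    # A Sentinel also receives the hellos it published itself; skip those.
    if hello.sentinel_runid != my_runid:
        known_sentinels[hello.sentinel_runid] = (hello.sentinel_ip, hello.sentinel_port)
```

For example, the Sentinel f43d3d4b... on 172.17.0.4 would call on_hello with a payload starting "172.17.0.3,26379,fbeaca3b..." and end up with that peer in its table, which matches the +sentinel lines in the startup log.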

The core principle

Judging whether the master is down

Sentinel distinguishes two kinds of master-down judgment: subjectively down (sdown) and objectively down (odown). Only when the master is objectively down does the master-replica switchover start.

Sentinel periodically sends PING commands to check its network connection to the master and replicas and so determine each instance's state. If the master or a replica does not reply to PING in time, Sentinel marks it as subjectively down.

The PING timeout is set by the following parameter (default 30 seconds):

sentinel down-after-milliseconds mymaster 30000

If a replica is subjectively down, Sentinel simply marks it as such, because a replica going offline usually has little impact and the cluster keeps serving requests. If the master is subjectively down, however, Sentinel does not start the switchover immediately, because the judgment might be wrong. A misjudgment means the master is actually fine, but heavy load or congestion on the cluster network made Sentinel think it was down. Once a switchover starts, a new master must be selected, data must be resynchronized between master and replicas, and clients must be told to connect to the new master, which is very expensive. So Sentinel needs to rule out misjudgments and make them as rare as possible.

Misjudgments are reduced by having multiple Sentinel instances decide together: only when at least a specified number of Sentinels consider the master subjectively down is it marked objectively down, meaning its failure is an objective fact.

As soon as any Sentinel judges the master subjectively down, it sends the is-master-down-by-addr command to the other Sentinels, which reply Y (yes) or N (no) based on their own connection to the master. Once a Sentinel collects the required number of yes votes, it marks the master objectively down.

The number of yes votes required is the quorum value in sentinel monitor. For example, with 3 Sentinels and quorum set to 2, a Sentinel needs 2 yes votes to mark the master objectively down: its own vote and one from another Sentinel.
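The subjective-down check and the different reactions for replicas and masters can be sketched in Python as follows. This is a simplified model under stated assumptions: it ignores details such as which reply types count as valid, and react_to_sdown with its return strings is a hypothetical helper, not Sentinel code.

```python
def is_subjectively_down(now_ms: int, last_valid_reply_ms: int,
                         down_after_ms: int = 30000) -> bool:
    """SDOWN: no valid PING reply for longer than down-after-milliseconds."""
    return now_ms - last_valid_reply_ms > down_after_ms


def react_to_sdown(role: str, sdown: bool) -> str:
    """Replicas are only flagged; a master SDOWN triggers a query to the other Sentinels."""
    if not sdown:
        return "ok"
    if role == "slave":
        return "mark +sdown only"
    return "mark +sdown, then ask other Sentinels with is-master-down-by-addr"


print(react_to_sdown("master", is_subjectively_down(100_000, 60_000)))
```

With the default 30000 ms, an instance silent for 40 seconds is subjectively down, while one silent for 20 seconds is not.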

sentinel monitor <master-name> <ip> <redis-port> <quorum>
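A minimal Python sketch of the quorum check, assuming a Sentinel counts its own subjective-down opinion as one vote plus each peer that answered Y. The peer names s2 and s3 are hypothetical, and the function illustrates the rule rather than reproducing Sentinel's implementation.

```python
from typing import Dict


def is_objectively_down(self_sdown: bool, peer_replies: Dict[str, bool], quorum: int) -> bool:
    """Count this Sentinel's own SDOWN opinion plus every peer that replied Y."""
    if not self_sdown:
        return False  # only a Sentinel that already sees SDOWN asks its peers
    votes = 1 + sum(1 for down in peer_replies.values() if down)
    return votes >= quorum


# 3 Sentinels, quorum 2: one Y from a peer is enough.
print(is_objectively_down(True, {"s2": True, "s3": False}, quorum=2))
```

With quorum 2 in a three-Sentinel group, one agreeing peer is enough, matching the example in the text; if both peers answer N, the master stays only subjectively down.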

The process of judging that the master is down is as follows:

Leader election

At around the same time, several Sentinels in the cluster may judge the master objectively down, so one Sentinel must be chosen to perform the switchover. A Sentinel that has judged the master objectively down sends commands to the other Sentinels asking them to vote for it, indicating that it wants to perform the switchover itself. This vote is the Leader election, and the Sentinel that finally performs the switchover is called the Leader.

During voting, a Sentinel that has judged the master objectively down first votes for itself and then asks the other Sentinels for their votes. A Sentinel that has not yet voted votes yes; otherwise it votes no. To become Leader, a Sentinel must meet both conditions: it gets a majority of the votes (majority = N/2 + 1, where N is the number of Sentinels and the division rounds down), and the number of votes it receives is >= quorum.

For example, with 5 Sentinels, majority = 3. If quorum = 2, a Sentinel needs at least 3 votes to become Leader; if quorum = 5, it needs all 5 votes.
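The two conditions combine into a single threshold, the larger of the majority and the quorum. A short Python sketch of that arithmetic (function names are hypothetical):

```python
def votes_needed(num_sentinels: int, quorum: int) -> int:
    """Votes a candidate needs: a majority of all Sentinels and at least quorum."""
    majority = num_sentinels // 2 + 1
    return max(majority, quorum)


def wins_election(votes: int, num_sentinels: int, quorum: int) -> bool:
    return votes >= votes_needed(num_sentinels, quorum)


for n, q in [(5, 2), (5, 5), (3, 2), (2, 1)]:
    print(n, q, votes_needed(n, q))
```

The (2, 1) case shows why two Sentinels are not enough: the threshold is 2, so if one Sentinel dies, the survivor can collect only its own vote and no Leader is elected.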

If no Sentinel gets enough votes, the round produces no Leader, and the Sentinels wait for a while before voting again. The wait is twice the failover timeout, which is configured as follows (default 180 seconds):

sentinel failover-timeout mymaster 180000

So whether a Sentinel cluster can complete the vote depends largely on election messages propagating normally and quickly over the network. If the network is under heavy load or briefly congested, no Sentinel may get a majority; only when the network is healthy can a Sentinel reliably collect a majority of the votes.

The number of Sentinels

As mentioned earlier, a Sentinel cluster needs at least 3 instances to work properly. With only 2 instances, a Sentinel needs 2 votes to become Leader. The election can complete normally, but if one Sentinel goes down there are not enough votes and no switchover can happen. So 2 Sentinel instances cannot make the Sentinel cluster highly available, and we usually configure at least 3.

However, more Sentinel instances is not always better. Judging subjective down and electing the Sentinel Leader both require communicating with the other nodes, so more instances means more messages. Multiple Sentinels are also spread across different machines, and the more nodes there are, the greater the chance that one of those machines fails. These problems affect Sentinel communication and elections; when they occur, elections take longer, and so does the master-replica switchover.

Selecting a new master

After the Sentinel Leader is elected, it starts the master-replica switchover, whose first step is selecting a new master.

The rules for selecting the new master are:

1. Network status

First, Sentinel checks each replica's network condition. If a replica has been disconnected from the master for longer than down-after-milliseconds * 10 milliseconds, its network is considered unreliable and it is not suitable as the master, so it is filtered out.

2. Replica priority

Next, the remaining replicas are sorted by priority (replica-priority): the lower the value, the higher the priority. If one replica has the highest priority, it becomes the new master. When servers have different hardware, we can give higher priority to instances on better-equipped servers.

The priority defaults to 100, and a lower value means a higher priority. As the comments below note, do not set it to 0: a priority of 0 means the replica can never be promoted to master and will never be selected by Sentinel.

# The replica priority is an integer number published by Redis in the INFO
# output. It is used by Redis Sentinel in order to select a replica to promote
# into a master if the master is no longer working correctly.
#
# A replica with a low priority number is considered better for promotion, so
# for instance if there are three replicas with priority 10, 100, 25 Sentinel
# will pick the one with priority 10, that is the lowest.
#
# However a special priority of 0 marks the replica as not able to perform the
# role of master, so a replica with priority of 0 will never be selected by
# Redis Sentinel for promotion.
#
# By default the priority is 100.
replica-priority 100

3. Replication offset

If the remaining replicas have the same priority, the next step compares how far each replica's replication has progressed relative to the old master (the offset): the further a replica has replicated, the higher its priority.

Both the master and the replicas maintain an offset. When the master writes N bytes of commands, its offset (master_repl_offset) increases by N; when a replica receives N bytes of data, its offset (slave_repl_offset) also increases by N. The replica whose offset is closest to the master's becomes the new master.

4. Compare run ID

Finally, if priority and replication progress are the same, the replicas are sorted by run ID, and the replica with the smallest ID becomes the new master.

That is the whole master selection process:
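The four rules can be sketched in Python as one filter followed by one sort key. This follows the simplified rules as described here (for example, the disconnection window is just down-after-milliseconds * 10); the Replica fields and run IDs are hypothetical and are not Sentinel's internal structures.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Replica:
    run_id: str
    priority: int         # replica-priority; 0 means never promote
    repl_offset: int      # slave_repl_offset
    disconnected_ms: int  # how long the link to the old master has been down


def select_new_master(replicas: List[Replica], down_after_ms: int) -> Optional[Replica]:
    # Rule 1 (plus priority 0): drop replicas that may never be promoted
    # or whose link to the old master has been down too long.
    candidates = [
        r for r in replicas
        if r.priority != 0 and r.disconnected_ms <= down_after_ms * 10
    ]
    if not candidates:
        return None
    # Rules 2-4: lowest priority value, then largest offset, then smallest run ID.
    return min(candidates, key=lambda r: (r.priority, -r.repl_offset, r.run_id))


replicas = [
    Replica("bbb", 100, 5000, 0),
    Replica("aaa", 100, 5000, 0),
    Replica("ccc", 10, 4000, 0),
    Replica("ddd", 1, 9000, 10**9),  # best priority, but disconnected far too long
]
print(select_new_master(replicas, down_after_ms=30000))
```

Encoding rules 2 to 4 as a tuple key means each later rule only breaks ties left by the earlier ones, which is exactly the order described above; negating the offset turns "largest offset wins" into a minimum.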

Notifying clients

Sentinels form a cluster through pub/sub, and through the INFO command they obtain the replicas' connection information, so they can also connect to and monitor the replicas. After a switchover, clients also need the new master's connection information, so Sentinel must tell clients about the new master as well.

Information between Sentinel and clients is also synchronized via pub/sub, but in this case clients subscribe to messages published by the Sentinel itself. Sentinel provides many channels, and each one carries a different key event in the switchover process.

Once connected to a Sentinel, a client can subscribe to these events. When the switchover completes, the client receives the +switch-master event, from which it gets the address and port of the new master.

With these pub/sub event notifications, a client not only gets the new master's connection information after the switchover but can also follow every important event along the way, so it knows which stage the switchover has reached.
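According to the Redis Sentinel documentation, a +switch-master message carries the master name followed by the old IP and port and the new IP and port. Below is a minimal Python sketch of the client-side parsing; the SwitchMaster type and function name are hypothetical.

```python
from typing import NamedTuple


class SwitchMaster(NamedTuple):
    master_name: str
    old_ip: str
    old_port: int
    new_ip: str
    new_port: int


def parse_switch_master(message: str) -> SwitchMaster:
    """Parse '<master name> <oldip> <oldport> <newip> <newport>' from +switch-master."""
    name, old_ip, old_port, new_ip, new_port = message.split()
    return SwitchMaster(name, old_ip, int(old_port), new_ip, int(new_port))


event = parse_switch_master("mymaster 172.17.0.2 6379 172.17.0.4 6379")
print(f"reconnect {event.master_name} to {event.new_ip}:{event.new_port}")
```

A client would subscribe on any Sentinel (for example, SUBSCRIBE +switch-master in redis-cli -p 26379) and pass each message payload to a parser like this before reconnecting.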

Configuration epoch

When a Sentinel performs a switchover, it obtains an epoch (version number) for the new master configuration, and the epoch of every switchover is unique. If the first elected Sentinel fails to complete the switchover, the other Sentinels wait for failover-timeout, then take over and continue the switchover with a new epoch.

After a switchover, the Sentinel generates the latest master configuration and propagates it to the other Sentinels through pub/sub. This is where the epoch matters: all messages are published and received on one channel, so when a Sentinel completes a new switchover, the new master configuration carries the new epoch, and the other Sentinels update their own master configuration according to which epoch is larger.
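The "larger epoch wins" rule can be sketched in a few lines of Python. MasterConfig and merge_config are hypothetical names, and the sketch leaves out everything else Sentinel does when it adopts a new configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MasterConfig:
    ip: str
    port: int
    config_epoch: int


def merge_config(local: MasterConfig, received: MasterConfig) -> MasterConfig:
    """Adopt a received master configuration only if its epoch is newer."""
    return received if received.config_epoch > local.config_epoch else local


old = MasterConfig("172.17.0.2", 6379, 0)
new = MasterConfig("172.17.0.4", 6379, 1)
print(merge_config(old, new))
```

Because the comparison is strictly greater-than, a stale message carrying an older epoch that arrives late on the shared channel can never overwrite a newer configuration.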

Data loss

Master-replica replication in Redis can lose data in two ways:

1. Asynchronous replication

The master replicates data to the replicas asynchronously, so if the master goes down before some data has been synchronized, that data is lost.

2. Split brain

If the master's server loses its normal network connection and cannot reach the replicas while the master itself keeps running, Sentinel will consider the master down and elect a new one. The cluster then has two masters, which is called "split brain". Clients that have not yet switched to the new master keep writing to the old one. When the old master recovers, it becomes a replica of the new master, its data is cleared, and it resynchronizes from the new master, so the data written to it in the meantime is lost.

Two settings can reduce data loss caused by asynchronous replication and split brain:

# default 0 (feature disabled)
min-replicas-to-write 1
# default 10
min-replicas-max-lag 10

Together, these settings mean that the master accepts writes only while at least 1 replica (min-replicas-to-write) has a replication lag of no more than 10 seconds (min-replicas-max-lag); otherwise it rejects all write requests.

So if every replica falls more than 10 seconds behind the master (or disconnects), the master stops accepting client writes, and at most about 10 seconds of data can be lost.
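The write-acceptance rule can be modeled in Python as below. The lag values are hypothetical, and the default arguments mirror the example settings above, not Redis's own defaults (where min-replicas-to-write is 0).

```python
from typing import List


def master_accepts_writes(replica_lags_s: List[int],
                          min_replicas_to_write: int = 1,
                          min_replicas_max_lag: int = 10) -> bool:
    """Writes are allowed only if enough replicas have lag <= min-replicas-max-lag."""
    if min_replicas_to_write == 0:
        return True  # feature disabled
    good = sum(1 for lag in replica_lags_s if lag <= min_replicas_max_lag)
    return good >= min_replicas_to_write


print(master_accepts_writes([3, 25]))   # one replica is still within 10 s
print(master_accepts_writes([15, 25]))  # every replica is too far behind
```

In a split brain, the isolated old master sees no healthy replicas, so it starts rejecting writes after about min-replicas-max-lag seconds, which bounds how much data clients can write to it before it is demoted.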

Cluster disaster recovery drill

The following tests check whether, when the master goes down, Sentinel can elect a new master and switch over to it.

Master offline analysis

First, on one of the Sentinel instances, check the current master (172.17.0.2):

Now kill the master process and delete its PID file:

Then check the Sentinel log.

First, the log shows the current Sentinel's ID:

# Sentinel ID is 018048f0adc0523b4b13d8ddde04c8882bb3d9af

After the master is killed, the Sentinel judges it subjectively down (+sdown):

# +sdown master mymaster 172.17.0.2 6379

Then a quorum of Sentinels consider the master down, and its state changes to objectively down (+odown):

# +odown master mymaster 172.17.0.2 6379 #quorum 2/2

Next, a new epoch is obtained:

# +new-epoch 1

Then comes the Leader vote: the current Sentinel votes for itself, and the other two Sentinels also vote for it:

# +vote-for-leader 018048f0adc0523b4b13d8ddde04c8882bb3d9af 1
# f43d3d4bca3f809be708c17e4c1c8951de5c6d9d voted for 018048f0adc0523b4b13d8ddde04c8882bb3d9af 1
# fbeaca3b7cc3a7fee7660dd2e239683021861d69 voted for 018048f0adc0523b4b13d8ddde04c8882bb3d9af 1

The new master is selected (172.17.0.4):

# +selected-slave slave 172.17.0.4:6379 172.17.0.4 6379 @ mymaster 172.17.0.2 6379

The old master leaves the objectively down state (-odown):

# -odown master mymaster 172.17.0.2 6379

The remaining replica is reconfigured to follow the new master:

* +slave-reconf-inprog slave 172.17.0.3:6379 172.17.0.3 6379 @ mymaster 172.17.0.2 6379
* +slave-reconf-done slave 172.17.0.3:6379 172.17.0.3 6379 @ mymaster 172.17.0.2 6379

The switch to the new master is complete:

# +switch-master mymaster 172.17.0.2 6379 172.17.0.4 6379

So the log shows the whole Redis failover process, and the Sentinels now report that the master has been switched successfully.

The old master comes back online

Restart the old master:

After it starts, the Sentinel log shows that the old master, now registered as a replica, is no longer subjectively down:

# -sdown slave 172.17.0.2:6379 172.17.0.2 6379 @ mymaster 172.17.0.4 6379

Then, checking on the new master (172.17.0.4), we can see that the old master (172.17.0.2) has become a replica of the new master.

This confirms that the Sentinel cluster works properly: when the master goes down, it elects a new master, completes the switchover, and notifies clients of the new master. When the old master comes back online, it automatically becomes a replica of the new master.

边栏推荐

- Specification for self test requirements of program developers

- Zabix5.0-0 - agent2 monitoring MariaDB database (Linux based)

- 电子元器件行业B2B电商市场模式、交易能力数字化趋势分析

- Erc-721 Standard Specification

- Eight recommended microservice testing tools

- On software requirement analysis

- NVM download, installation and use

- Software testing methods: a short guide to quality assurance (QA) models

- Implementation of pure three-layer container network based on BGP

- Tencent cloud TCS: an application-oriented one-stop PAAS platform

猜你喜欢

SQL basic tutorial (learning notes)

Ten excellent business process automation tools for small businesses

Specification for self test requirements of program developers

NVM download, installation and use

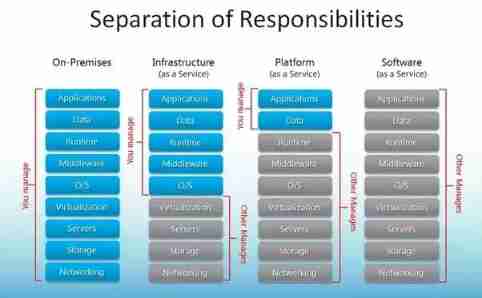

Cloud service selection of enterprises: comparative analysis of SaaS, PAAS and IAAs

How can programmers reduce bugs in development?

13 ways to reduce the cost of cloud computing

Regression testing strategy for comprehensive quality assurance system

Top ten popular codeless testing tools

Software testing methods: a short guide to quality assurance (QA) models

随机推荐

专有云TCE COS新一代存储引擎YottaStore介绍

Quickly build MySQL million level test data

How to decompile APK files

Open up the construction of enterprise digital procurement, and establish a new and efficient service mode for raw material enterprises

Yum to install warning:xxx: header V3 dsa/sha1 signature, key ID 5072e1f5: nokey

持续助力企业数字化转型-TCE获得国内首批数字化可信服务平台认证

Use BPF to count network traffic

视频平台如何将旧数据库导入到新数据库?

Nine practical guidelines for improving responsive design testing

How to select the best test cases for automation?

Devops in digital transformation digital risk

[can you really use es] Introduction to es Basics (I)

Top ten popular codeless testing tools

NVM download, installation and use

Tiktok Kwai, e-commerce enters the same river

C language - structure II

EasyPlayer流媒体播放器播放HLS视频,起播速度慢的技术优化

High quality defect analysis: let yourself write fewer bugs

Seven strategies for successfully integrating digital transformation

How much does it cost to develop a small adoption program similar to QQ farm?