
Experimental reproduction of variational image compression with a scale hyperprior

2022-07-02 01:33:00 【Xiao Yao.】

Preface

This article builds on END-TO-END OPTIMIZED IMAGE COMPRESSION. The main change is that a hyperprior network is added to handle the side information. For the environment setup and the background, see END-TO-END OPTIMIZED IMAGE COMPRESSION.

GitHub address: github

1、 Relevant commands

(1) Training

python bmshj2018.py -V train

As before, to make debugging easier, the arguments are added to launch.json:

{
    // Use IntelliSense to learn about possible attributes.
    // Hover to view descriptions of existing attributes.
    // For more information, visit: https://go.microsoft.com/fwlink/?linkid=830387
    "version": "0.2.0",
    "configurations": [
        {
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "justMyCode": true,
            // bmshj2018
            "args": ["--verbose", "train"] // Training
        }
    ]
}

2、 Data sets

if args.train_glob:
    train_dataset = get_custom_dataset("train", args)
    validation_dataset = get_custom_dataset("validation", args)
else:
    train_dataset = get_dataset("clic", "train", args)
    validation_dataset = get_dataset("clic", "validation", args)
validation_dataset = validation_dataset.take(args.max_validation_steps)

If a dataset path is given (args.train_glob), that dataset is used; otherwise the default CLIC dataset is used.
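The training walk-through in section 3 notes that images are cropped to 256x256 and that, for CLIC, images are filtered by size first. The sketch below illustrates that filter-then-crop logic in plain Python, without TensorFlow. The helper names (`is_large_enough`, `random_crop_box`) and the sample shapes are hypothetical, and both the three-channel check and the reading of "larger than" as "at least" are assumptions rather than something taken from the script.

```python
import random

PATCH = 256  # patch size used for training, as stated in the walk-through


def is_large_enough(height, width, channels, patch=PATCH):
    """Keep only images that can hold a full patch (channel check is an assumption)."""
    return height >= patch and width >= patch and channels == 3


def random_crop_box(height, width, patch=PATCH, rng=random):
    """Return (top, left, bottom, right) of a random patch inside the image."""
    top = rng.randint(0, height - patch)    # randint is inclusive on both ends
    left = rng.randint(0, width - patch)
    return top, left, top + patch, left + patch


# Hypothetical image shapes: (height, width, channels)
shapes = [(512, 768, 3), (200, 300, 3), (256, 256, 3), (400, 400, 1)]

# Step 1: filter out images that are too small or not three-channel.
kept = [s for s in shapes if is_large_enough(*s)]

# Step 2: pick one random 256x256 crop box per remaining image.
boxes = [random_crop_box(h, w) for h, w, _ in kept]

for shape, box in zip(kept, boxes):
    print(shape, "->", box)
```

An image that is exactly 256x256 has only one possible crop box, the whole image.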

3、 Walking through the training process

The code is largely built on bls2017.py, so the overall flow is the same as in bls2017. Here I briefly walk through the training process of this file.

Instantiate objects

init initializes some parameters: those required by the two entropy models, LocationScaleIndexedEntropyModel and ContinuousBatchedEntropyModel (the models themselves are described in the tfc entropy model documentation). Specifically, the parameters are scale_fn and the given num_scales.

It also sets up the nonlinear analysis transform, the nonlinear synthesis transform, the hyperprior nonlinear analysis transform, the hyperprior nonlinear synthesis transform, the addition of uniform noise, the prior probability, and so on.
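To make "add uniform noise" and "obtain the prior probability" more concrete, here is a minimal plain-Python sketch of the idea behind a scale-based entropy model. It assumes a zero-mean Gaussian with a per-element scale, integrated over a unit-width bin. The function names are hypothetical and this is not the tfc implementation, which works on tensors and also bounds the likelihood from below.

```python
import math
import random


def add_uniform_noise(values, rng=random):
    """Training-time stand-in for rounding: add noise drawn from U(-0.5, 0.5)."""
    return [v + rng.uniform(-0.5, 0.5) for v in values]


def gaussian_cdf(x, scale):
    """CDF of a zero-mean Gaussian with standard deviation `scale`."""
    return 0.5 * (1.0 + math.erf(x / (scale * math.sqrt(2.0))))


def likelihood(value, scale):
    """Probability mass of the unit-width bin centred on `value`."""
    return gaussian_cdf(value + 0.5, scale) - gaussian_cdf(value - 0.5, scale)


def estimated_bits(values, scales):
    """Sum of -log2(likelihood) over all elements.

    Note: far in the tails the likelihood can underflow to 0; a real
    implementation would clamp it to a small positive bound first.
    """
    return sum(-math.log2(likelihood(v, s)) for v, s in zip(values, scales))


# Hypothetical latent values and predicted scales.
y = [0.0, 1.0, -2.0]
scales = [1.0, 1.0, 2.0]

y_noisy = add_uniform_noise(y)                # what training would see
y_hat = [float(round(v)) for v in y]          # what compression would see
print("noisy latents:", y_noisy)
print("estimated bits:", estimated_bits(y_hat, scales))
```

A value near zero with a small predicted scale costs few bits, while the same value with a large scale costs more, which is what lets the hyperprior reduce the bit count of y.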

call runs the computation through the two entropy models:

(1) x -> y (nonlinear analysis transform)

(2) y -> z (hyperprior nonlinear analysis transform)

(3) z -> z_hat and the side-information bit count (ContinuousBatchedEntropyModel)

(4) y -> y_hat and its bit count (LocationScaleIndexedEntropyModel, using the scales predicted from z_hat by the hyperprior synthesis transform)

(5) Compute bpp (bpp = bits / pixels, where the number of bits is the sum of the two bit counts above), mse, and loss
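Step (5) can be sketched in a few lines of plain Python. The weighting `loss = bpp + lmbda * mse` is the usual rate-distortion form and is an assumption here, since the text above only lists the three quantities. The function name, the `lmbda` value, and the sample numbers are hypothetical.

```python
def rate_distortion(bits_y, bits_z, original, reconstruction,
                    height, width, lmbda=0.01):
    """Return (loss, bpp, mse) for one image.

    bits_y / bits_z: bit counts from the two entropy models.
    original / reconstruction: flattened pixel values of equal length.
    lmbda: trade-off weight between rate and distortion (assumed value).
    """
    num_pixels = height * width
    bpp = (bits_y + bits_z) / num_pixels          # bits per pixel
    mse = sum((a - b) ** 2
              for a, b in zip(original, reconstruction)) / len(original)
    loss = bpp + lmbda * mse                      # assumed rate-distortion form
    return loss, bpp, mse


# Hypothetical 2x2 single-channel image, flattened.
original = [10.0, 20.0, 30.0, 40.0]
reconstruction = [12.0, 18.0, 30.0, 44.0]

loss, bpp, mse = rate_distortion(bits_y=6.0, bits_z=2.0,
                                 original=original,
                                 reconstruction=reconstruction,
                                 height=2, width=2)
print(f"bpp={bpp} mse={mse} loss={loss:.4f}")
```

A larger `lmbda` puts more weight on distortion, so the trained model spends more bits to get a lower mse.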

The nonlinear analysis transform is a CNN convolution process; its zero-padding step is there to keep the input and output image sizes consistent.

model.compile

model.compile configures the training setup: it sets the optimizer used for gradient descent and tracks the weighted averages of bpp, mse, and loss.

Filtering and cropping the dataset

Check whether a dataset path was given through the arguments.

Dataset path given: crop directly to 256x256 (all images are cropped to 256x256 before being fed to the network for training; compressing an image does not change its size).

Dataset path not given: use the CLIC dataset, keep only three-channel images larger than 256x256, and then crop them.

Split the data into a training dataset and a validation dataset.

model.fit

Pass in the training dataset, set the related parameters (epochs, etc.), and train.

The call retval = super().fit(*args, **kwargs) is what leads into the model's train_step (the wrapping here is really tight; from the code of this single file you cannot see how execution jumps there).

train_step

self.trainable_variables returns the set of trainable variables. Given these variables and the loss, the step runs forward propagation and backpropagates the error to update the parameters, then updates the loss, bpp, and mse metrics.

def train_step(self, x):
    # Forward pass, recorded on the tape so gradients can be computed.
    with tf.GradientTape() as tape:
        loss, bpp, mse = self(x, training=True)
    variables = self.trainable_variables
    # Backward pass and parameter update.
    gradients = tape.gradient(loss, variables)
    self.optimizer.apply_gradients(zip(gradients, variables))
    # Update the running metrics reported during training.
    self.loss.update_state(loss)
    self.bpp.update_state(bpp)
    self.mse.update_state(mse)
    return {m.name: m.result() for m in [self.loss, self.bpp, self.mse]}

The training step is then repeated until the termination condition is met (for example, the epoch count reaches 10000).
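Because fit hides the loop that calls train_step, a toy version in plain Python may help show the control flow. This is only a sketch under strong assumptions: `ToyModel` and `Mean` are hypothetical stand-ins, the model is a single scalar weight, and the gradient is written by hand instead of being recorded by a gradient tape. It mirrors the shape of the real step (forward pass, gradient, parameter update, metric update) and nothing more.

```python
class Mean:
    """Running mean, a stand-in for a Keras-style mean metric."""

    def __init__(self, name):
        self.name = name
        self.total = 0.0
        self.count = 0

    def update_state(self, value):
        self.total += value
        self.count += 1

    def result(self):
        return self.total / self.count if self.count else 0.0


class ToyModel:
    """Fits y = w * x with squared error and plain gradient descent."""

    def __init__(self, learning_rate=0.05):
        self.w = 0.0
        self.learning_rate = learning_rate
        self.loss = Mean("loss")

    def train_step(self, batch):
        n = len(batch)
        # Forward pass: mean squared error over the batch.
        loss = sum((self.w * x - y) ** 2 for x, y in batch) / n
        # Backward pass: d(loss)/dw, derived analytically.
        grad = sum(2.0 * (self.w * x - y) * x for x, y in batch) / n
        # Optimizer step.
        self.w -= self.learning_rate * grad
        # Metric update, then report the running values.
        self.loss.update_state(loss)
        return {self.loss.name: self.loss.result()}

    def fit(self, batches, epochs):
        """Repeat train_step over all batches until `epochs` is reached."""
        logs = {}
        for _ in range(epochs):
            for batch in batches:
                logs = self.train_step(batch)
        return logs


# Hypothetical data generated from y = 2 * x.
batches = [[(1.0, 2.0), (2.0, 4.0)], [(3.0, 6.0)]]
model = ToyModel()
logs = model.fit(batches, epochs=50)
print("w =", model.w, "logs =", logs)
```

Unlike Keras, this sketch never resets the metric between epochs, so the reported loss is the mean over the whole run.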

4、 Compress a picture

python bmshj2018.py [options] compress original.png compressed.tfci

To debug this through launch.json, use:

"args": ["--verbose","compress","./models/kodak/kodim01.png", "./models/kodim01.tfci"] // Compress a picture

Replace the paths with your own.
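Once a compressed file exists, its actual bit rate can be checked against the bpp reported during training. The sketch below is a small helper for that, assuming the image dimensions are known. `file_bpp` is a hypothetical name, the path is the one from the launch.json example above, and 768x512 is the size of the Kodak test images.

```python
import os


def file_bpp(compressed_path, height, width):
    """Bits per pixel of a compressed file for an image of the given size."""
    num_bits = os.path.getsize(compressed_path) * 8   # file size in bits
    return num_bits / (height * width)


# Path taken from the debugging example; adjust it to your own setup.
path = "./models/kodim01.tfci"
if os.path.exists(path):
    print("bpp:", file_bpp(path, height=512, width=768))
else:
    print("compressed file not found, run the compress command first")
```

This measures the real file size, including any header bytes, so it will typically be slightly higher than the bpp estimated during training.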

边栏推荐

- 企业应该选择无服务器计算吗?

- 机器学习基本概念

- 基于SSM实现微博系统

- 6-2漏洞利用-ftp不可避免的问题

- 6-3 vulnerability exploitation SSH environment construction

- [IVX junior engineer training course 10 papers to get certificates] 09 chat room production

- Edge computing accelerates live video scenes: clearer, smoother, and more real-time

- 站在新的角度来看待产业互联网,并且去寻求产业互联网的正确方式和方法

- [IVX junior engineer training course 10 papers] 06 database and services

- 【疾病检测】基于BP神经网络实现肺癌检测系统含GUI界面

猜你喜欢

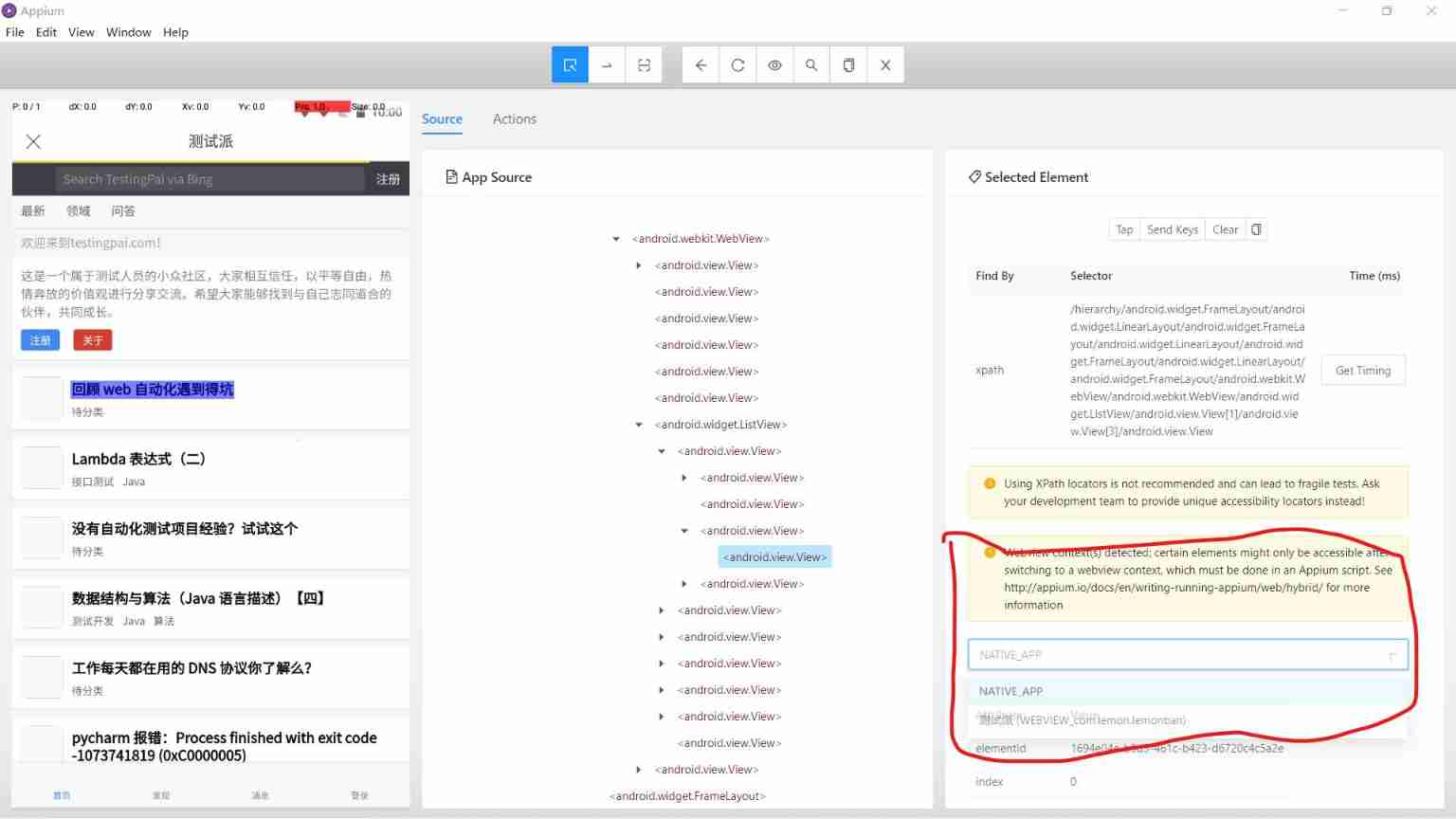

Appium inspector can directly locate the WebView page. Does anyone know the principle

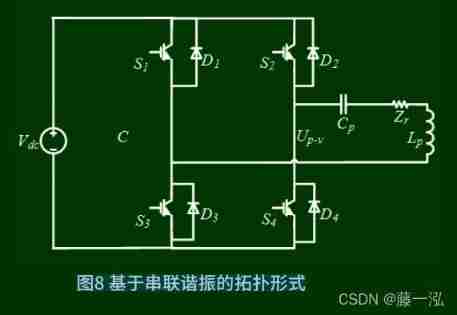

II Basic structure of radio energy transmission system

ACM tutorial - quick sort (regular + tail recursion + random benchmark)

![[IVX junior engineer training course 10 papers to get certificates] 0708 news page production](/img/ad/a1cb672d2913b6befd6d8779c993ec.jpg)

[IVX junior engineer training course 10 papers to get certificates] 0708 news page production

![[IVX junior engineer training course 10 papers] 04 canvas and a group photo of IVX and me](/img/b8/31a498c89cf96567640677e859df4e.jpg)

[IVX junior engineer training course 10 papers] 04 canvas and a group photo of IVX and me

Exclusive delivery of secret script move disassembly (the first time)

Liteos learning - first knowledge of development environment

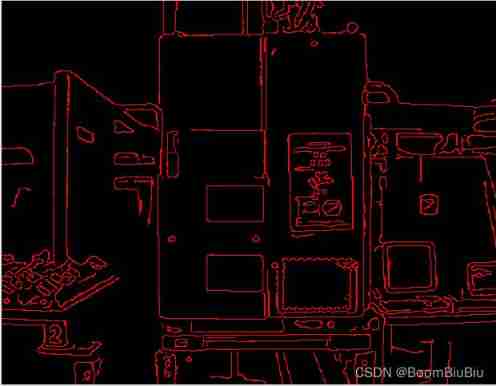

Edge extraction edges based on Halcon learning_ image. Hdev routine

Learning note 24 - multi sensor post fusion technology

Edge computing accelerates live video scenes: clearer, smoother, and more real-time

随机推荐

基于SSM实现微博系统

[dynamic planning] interval dp:p3205 Chorus

Liteos learning - first knowledge of development environment

Learning notes 25 - multi sensor front fusion technology

Sql--- related transactions

Data visualization in medical and healthcare applications

Game thinking 15: thinking about the whole region and sub region Services

学习笔记24--多传感器后融合技术

matlab 实现语音信号重采样和归一化,并播放比对效果

游戏思考15:全区全服和分区分服的思考

微信小程序中使用tabBar

Docker安装Oracle_11g

Docker installing Oracle_ 11g

首场“移动云杯”空宣会,期待与开发者一起共创算网新世界!

遷移雲計算工作負載的四個基本策略

2022年6月国产数据库大事记

matlab 使用 resample 完成重采样

Design and implementation of radio energy transmission system

[Chongqing Guangdong education] Tianshui Normal University universe exploration reference

Quatre stratégies de base pour migrer la charge de travail de l'informatique en nuage