
Neural Network (ANN)

2022-07-31 00:16:00 【A problem every day】

Algorithm Introduction

Concept

An artificial neural network is a massively parallel, interconnected network of simple adaptive units whose organization can simulate the interactive responses of a biological nervous system to real-world objects. In practical applications, 80% to 90% of artificial neural network models use the error back-propagation algorithm or one of its variants.

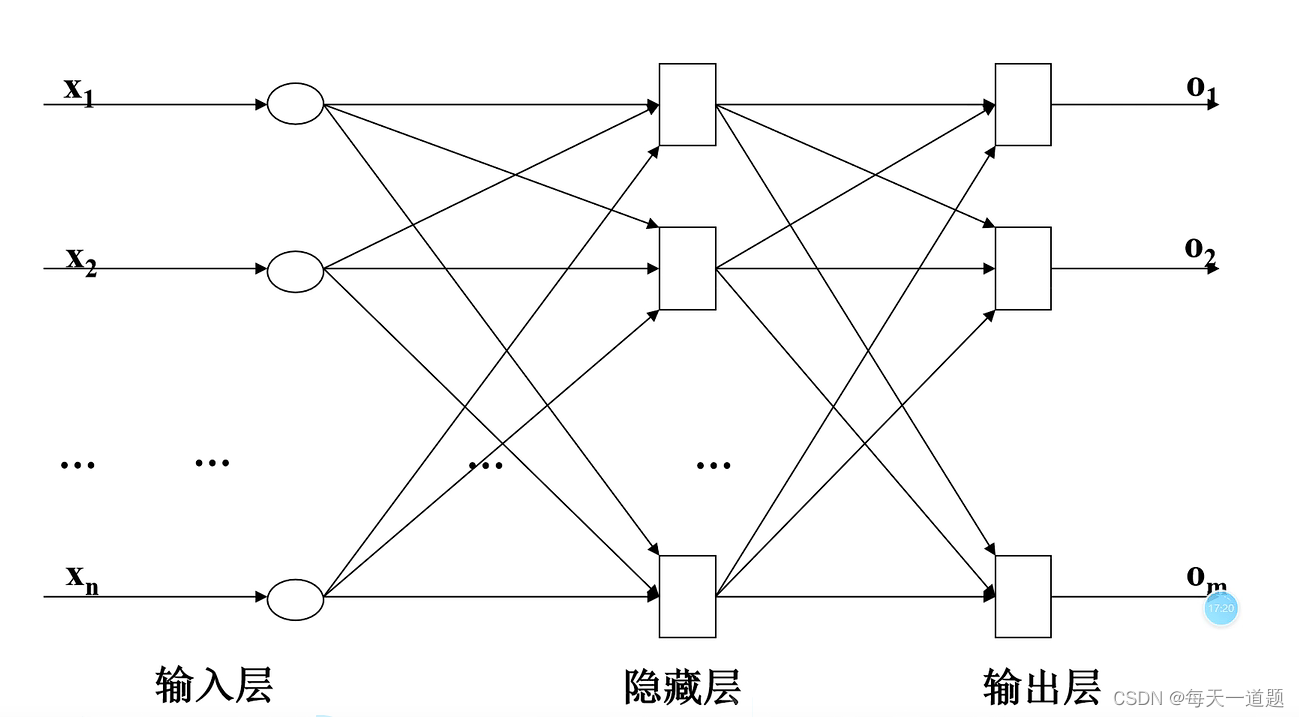

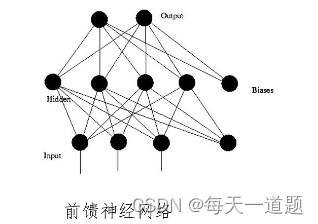

A typical structure of a neural network:

Uses

1. Fitting -> prediction.

2. Separation -> cluster analysis.

Neural network models and network structures

1. Neurons

The brain can be regarded as a neural network made up of more than 100 billion neurons.

The following is the working process of neurons:

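2. Neuronal information transmission

Information transmission and processing in a neuron is an electrochemical activity. The dendrites receive external stimuli through electrochemical action; activity in the cell body shows up as an axon potential, and when the axon potential reaches a certain value, a nerve impulse (action potential) is formed, which is then transmitted to other neurons through the axon terminals. From the standpoint of cybernetics, this process can be regarded as a multiple-input, single-output nonlinear system.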

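3. Model of an artificial neuron

$x_1, \dots, x_n$ are the input signals coming from other neurons.

$w_{ij}$ denotes the connection weight from neuron $j$ to neuron $i$.

In addition, each neuron has a threshold, also called a bias.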

The relationship between the input and output of a neuron:

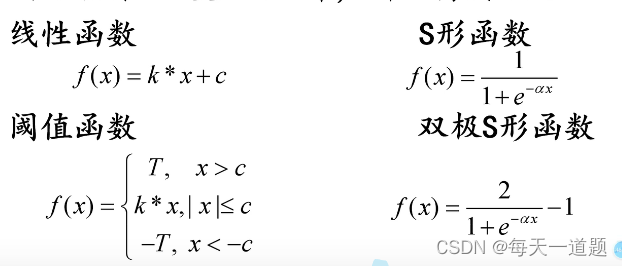
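The input/output relationship above can be sketched in a few lines of Python. Since the exact formula is only given in the figure, this sketch assumes the common form $y_i = f\left(\sum_{j} w_{ij} x_j - \theta_i\right)$, where the threshold $\theta_i$ is subtracted from the weighted sum and $f$ is the S-shaped (sigmoid) function; the symbol θ and the helper name `neuron_output` are illustrative assumptions.

```python
import math

def sigmoid(z: float) -> float:
    """Unipolar S-shaped (logistic) activation."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(x, w, theta, f=sigmoid):
    """Output of one artificial neuron i (hypothetical helper).

    x     -- input signals x_1..x_n from other neurons
    w     -- weights w_i1..w_in on those inputs (w_ij: from neuron j to neuron i)
    theta -- threshold (bias); subtracting it is an assumed convention
    f     -- activation function mapping the net input to the output
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    net = sum(w_j * x_j for w_j, x_j in zip(w, x)) - theta  # net activation
    return f(net)

# Example: three inputs; the net activation here is 0.4 + 0.1 - 0.1 - 0.2 = 0.2
y = neuron_output([1.0, 0.5, -1.0], [0.4, 0.2, 0.1], theta=0.2)
print(round(y, 4))
```

Passing a different `f` (for example a step function) turns the same helper into the classic threshold neuron.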

4. Activation functions

The activation function maps a neuron's net activation (its net input) to its output. Some commonly used activation functions are shown below. Because the input data and the expected values may not be of the same order of magnitude, an activation function is needed.

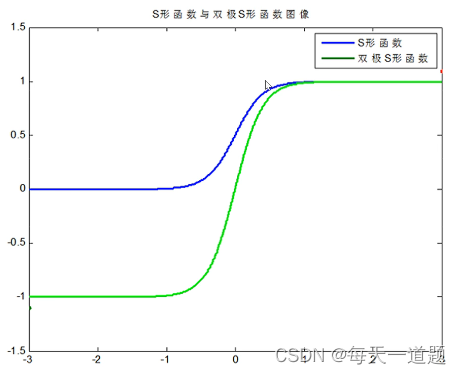

Graphs of the S-shaped (sigmoid) and bipolar S-shaped functions:

The derivatives of both the S-shaped and the bipolar S-shaped functions are continuous.
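As a sketch, the two S-shaped functions and their derivatives can be written as below. The exact formulas in the figure are not recoverable, so this assumes the standard unit-slope forms: $f(x) = 1/(1+e^{-x})$ with $f'(x) = f(x)\,(1-f(x))$, and $g(x) = (1-e^{-x})/(1+e^{-x})$ with $g'(x) = (1-g(x)^2)/2$. Expressing each derivative through the function value itself is what makes these functions convenient for the BP algorithm described later.

```python
import math

def sigmoid(x: float) -> float:
    """Unipolar S-shaped function, output in (0, 1); split by sign to avoid overflow."""
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_deriv(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # f'(x) = f(x) * (1 - f(x))

def bipolar_sigmoid(x: float) -> float:
    """Bipolar S-shaped function, output in (-1, 1).

    (1 - e^{-x}) / (1 + e^{-x}) equals tanh(x / 2); tanh is used for numerical stability.
    """
    return math.tanh(x / 2.0)

def bipolar_sigmoid_deriv(x: float) -> float:
    g = bipolar_sigmoid(x)
    return (1.0 - g * g) / 2.0  # g'(x) = (1 - g(x)^2) / 2

for x in (-2.0, 0.0, 2.0):
    print(f"x={x:+.1f}  f={sigmoid(x):.4f}  f'={sigmoid_deriv(x):.4f}  "
          f"g={bipolar_sigmoid(x):+.4f}  g'={bipolar_sigmoid_deriv(x):.4f}")
```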

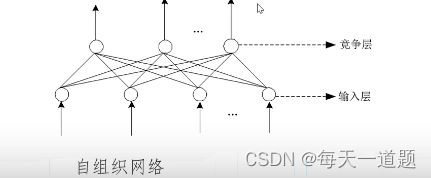

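5. Network models

Depending on how the neurons in the network are interconnected, network models are divided into:

Feedforward neural network: feedback signals exist only during training; during classification, data can only be passed forward until it reaches the output layer, and no output signal flows backward between layers.

Feedback neural network: a neural network with feedback connections from the output to the input; its structure is much more complicated than that of a feedforward network.

Self-organizing network: it automatically discovers the inherent patterns and essential properties in the samples and changes the network parameters and structure in a self-organizing, adaptive way.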
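In a feedforward network, signals flow strictly from the input layer toward the output layer during classification or prediction. A minimal sketch of that forward pass, assuming fully connected layers, a sigmoid activation in every layer, thresholds subtracted from the weighted sums, and weights stored as lists of rows (all names here are hypothetical):

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(x: Sequence[float], W: Matrix, theta: Sequence[float]) -> List[float]:
    """One fully connected layer: row i of W holds the weights into neuron i."""
    return [sigmoid(sum(w_ij * x_j for w_ij, x_j in zip(row, x)) - t)
            for row, t in zip(W, theta)]

def feedforward(x: Sequence[float], layers: Sequence[Tuple[Matrix, List[float]]]) -> List[float]:
    """Pass x through each (W, theta) layer in order; no signal flows backward."""
    out = list(x)
    for W, theta in layers:
        out = layer_forward(out, W, theta)
    return out

# Assumed toy network: 2 inputs -> 3 hidden neurons -> 1 output neuron
layers = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.1, -0.2, 0.0]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]
print(feedforward([1.0, 0.5], layers))
```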

6. Working states

A neural network operates in one of two states: learning and working.

Learning: a learning algorithm adjusts the connection weights between neurons so that the network output better matches the actual data.

Working: the connection weights between neurons stay fixed, and the network can be used as a classifier or to predict data.
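The two states can be illustrated with a single threshold neuron. In this sketch, the learning state uses the perceptron error-correction rule $\Delta w_j = \eta\,(d - y)\,x_j$ (a simple supervised rule chosen here for illustration; it is not the BP algorithm) to learn logical AND, and the working state then reuses the frozen weights for classification. The learning rate η = 0.1, the epoch limit, and the function names are assumptions.

```python
from typing import List, Tuple

def step(z: float) -> int:
    return 1 if z >= 0.0 else 0

def predict(w: List[float], theta: float, x: List[float]) -> int:
    """Working state: weights and threshold are only read, never changed."""
    return step(sum(wj * xj for wj, xj in zip(w, x)) - theta)

def learn(samples: List[Tuple[List[float], int]], eta: float = 0.1, max_epochs: int = 100):
    """Learning state: adjust weights from the error between desired and actual output."""
    n = len(samples[0][0])
    w, theta = [0.0] * n, 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, d in samples:
            e = d - predict(w, theta, x)
            if e != 0:
                errors += 1
                w = [wj + eta * e * xj for wj, xj in zip(w, x)]
                theta -= eta * e  # threshold behaves like a weight on a constant input of -1
        if errors == 0:  # every sample classified correctly: stop learning
            break
    return w, theta

and_samples = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
w, theta = learn(and_samples)                          # learning state
print([predict(w, theta, x) for x, _ in and_samples])  # working state
```

Because AND is linearly separable, the perceptron rule is guaranteed to stop changing the weights after finitely many corrections.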

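7. Learning methods

Learning methods are divided into supervised learning (learning with a teacher) and unsupervised learning (learning without a teacher).

Supervised learning: a training set is fed to the network, and the connection weights are adjusted according to the difference between the network's actual output and the expected output (e.g., the BP algorithm).

Unsupervised learning: the statistical properties contained in the sample set are extracted and stored in the network as the connection weights between neurons (e.g., the Hebb learning rule).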
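A sketch of the plain Hebb rule, assuming its textbook form $\Delta w_{ij} = \eta\, y_i\, x_j$: a weight grows whenever input $j$ and output $i$ are active together, and no desired output is involved. The single linear output neuron, the learning rate, and the toy samples are assumptions for illustration. Note that the plain Hebb rule lets weights grow without bound, which is why practical variants add normalization or decay.

```python
from typing import List

def hebb_train(samples: List[List[float]], w: List[float],
               eta: float = 0.1, epochs: int = 5) -> List[float]:
    """Unsupervised Hebbian learning for one linear neuron y = sum_j w_j * x_j (assumed model)."""
    w = list(w)  # do not modify the caller's list
    for _ in range(epochs):
        for x in samples:
            y = sum(wj * xj for wj, xj in zip(w, x))         # neuron output
            w = [wj + eta * y * xj for wj, xj in zip(w, x)]  # delta w_j = eta * y * x_j
    return w

# Inputs 0 and 1 are always active together; input 2 is almost always silent.
samples = [[1.0, 1.0, 0.0], [1.0, 1.0, 0.1], [0.9, 1.0, 0.0]]
w = hebb_train(samples, w=[0.1, 0.1, 0.1])
print([round(wj, 3) for wj in w])
```

The weights on the co-active inputs end up much larger than the weight on the silent input, so the sample statistics are stored in the connection weights.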

Steps to build and apply a neural network

(1) Determine the network structure

This includes the network topology and the choice of the function (activation function) used by each neuron.

(2) Determine the weights and thresholds

These are obtained through learning. In supervised learning, a known set of correct input and output data is used to adjust the weights and thresholds so that the network output deviates as little as possible from the ideal output.

(3) Working stage

A neural network whose weights and thresholds have been determined is used to solve practical problems; this process is also called simulation.

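BP Algorithm

A feedforward neural network trained with the BP (back-propagation) learning algorithm is called a BP neural network.

Basic principle of the BP algorithm: the error at the output is used to estimate the error of the layer directly preceding the output layer; that error is then used to estimate the error of the layer before it, and so on, layer by layer, until error estimates have been obtained for all the other layers.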
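To make the layer-by-layer error propagation concrete, here is a minimal BP sketch in pure Python: one hidden layer of sigmoid neurons, a linear output neuron, squared error $E = (y - t)^2/2$, and plain per-sample gradient descent. The layer size, learning rate, epoch count, the use of a bias $b$ (equal to minus the threshold), and the product task $y = x_1 x_2$ (the same task as the MATLAB code later in this post) are assumptions; this is not the training algorithm that MATLAB's `train` uses by default.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class BPNet:
    """n_in inputs -> n_hidden sigmoid neurons -> 1 linear output neuron (assumed architecture)."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]  # bias = -threshold
        self.W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = rng.uniform(-1, 1)

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.W1, self.b1)]
        y = sum(w * hi for w, hi in zip(self.W2, h)) + self.b2
        return h, y

    def train_step(self, x, target, lr):
        h, y = self.forward(x)
        # Output-layer error term: dE/dy for a linear output neuron.
        delta_out = y - target
        # Propagate the error one layer back, scaled by sigmoid'(net) = h * (1 - h).
        delta_hidden = [delta_out * w2 * hi * (1.0 - hi) for w2, hi in zip(self.W2, h)]
        # Apply the updates; delta_hidden was computed with the pre-update W2, as BP requires.
        self.W2 = [w - lr * delta_out * hi for w, hi in zip(self.W2, h)]
        self.b2 -= lr * delta_out
        for i, d in enumerate(delta_hidden):
            self.W1[i] = [w - lr * d * xi for w, xi in zip(self.W1[i], x)]
            self.b1[i] -= lr * d

    def mse(self, data):
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)

rng = random.Random(42)
data = []
for _ in range(150):
    x = [rng.random(), rng.random()]
    data.append((x, x[0] * x[1]))

net = BPNet(n_in=2, n_hidden=8)
print("MSE before:", round(net.mse(data), 5))
for _ in range(200):
    for x, t in data:
        net.train_step(x, t, lr=0.1)
print("MSE after: ", round(net.mse(data), 5))
```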

Prediction code (MATLAB)

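% Generate data: two random inputs in [0, 1] per sample; the target output is their product
input = rand(2, 200);
output = input(1, :) .* input(2, :);

% Training set (first 150 samples) and test set (remaining 50)
input_train = input(:, 1:150);
output_train = output(1:150);
input_test = input(:, 151:end);
output_test = output(151:end);

% Normalize the data (inputs to [0, 1], outputs to the default range [-1, 1])
[inputn, inputps] = mapminmax(input_train, 0, 1);
[outputn, outputps] = mapminmax(output_train);
inputn_test = mapminmax('apply', input_test, inputps);

% Build the BP neural network: [8, 7] means two hidden layers with 8 and 7 neurons
% (newff is obsolete in recent MATLAB releases; feedforwardnet is the recommended replacement)
net = newff(inputn, outputn, [8, 7]);

% Network parameters
net.trainParam.epochs = 1000;    % maximum number of training epochs
net.trainParam.lr = 0.01;        % learning rate; only used by gradient-descent training functions such as traingd (newff defaults to trainlm)
net.trainParam.goal = 0.000001;  % training goal (minimum error)

% Train the BP neural network
net = train(net, inputn, outputn);

% Test the BP neural network
an = sim(net, inputn_test);                      % simulate with the trained network
test_simu = mapminmax('reverse', an, outputps);  % de-normalize the predictions
err = test_simu - output_test;                   % prediction error (named err so the built-in error function is not shadowed)

% Compare expected values, predicted values, and errors
figure(1)
plot(output_test, 'bo-')
hold on
plot(test_simu, 'r*-')
plot(err, 's', 'MarkerFaceColor', 'b')
legend('Expected value', 'Predicted value', 'Error')
xlabel('Sample index'), ylabel('Value'), title('Test set: predicted vs. expected values'), set(gca, 'fontsize', 12)

% Compute error metrics
[~, len] = size(output_test);
MAE1 = sum(abs(err)) / len;  % mean absolute error
MSE1 = err * err' / len;     % mean squared error
RMSE1 = sqrt(MSE1);          % root mean squared error
disp('--------------- Error metrics ---------------')
disp(['Mean absolute error (MAE): ', num2str(MAE1)])
disp(['Mean squared error (MSE): ', num2str(MSE1)])
disp(['Root mean squared error (RMSE): ', num2str(RMSE1)])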
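For readers without MATLAB, the preprocessing and evaluation steps of the script can be sketched in plain Python. `minmax_fit`, `minmax_apply`, and `minmax_reverse` are hypothetical helpers that follow the linear mapping documented for `mapminmax`, $y = (y_{max}-y_{min})(x-x_{min})/(x_{max}-x_{min}) + y_{min}$, for a single row of data; unlike `mapminmax`, this sketch simply rejects constant rows. The metric functions use the standard definitions of MAE, MSE, and RMSE.

```python
import math
from typing import Dict, List

def minmax_fit(row: List[float], ymin: float = -1.0, ymax: float = 1.0) -> Dict[str, float]:
    """Record the settings needed to scale `row` into [ymin, ymax] (similar in spirit to mapminmax's ps)."""
    xmin, xmax = min(row), max(row)
    if xmax == xmin:
        raise ValueError("constant row cannot be min-max scaled in this sketch")
    return {"xmin": xmin, "xmax": xmax, "ymin": ymin, "ymax": ymax}

def minmax_apply(row: List[float], ps: Dict[str, float]) -> List[float]:
    scale = (ps["ymax"] - ps["ymin"]) / (ps["xmax"] - ps["xmin"])
    return [(x - ps["xmin"]) * scale + ps["ymin"] for x in row]

def minmax_reverse(row: List[float], ps: Dict[str, float]) -> List[float]:
    scale = (ps["xmax"] - ps["xmin"]) / (ps["ymax"] - ps["ymin"])
    return [(y - ps["ymin"]) * scale + ps["xmin"] for y in row]

def mae(pred: List[float], true: List[float]) -> float:
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)

def mse(pred: List[float], true: List[float]) -> float:
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true)

def rmse(pred: List[float], true: List[float]) -> float:
    return math.sqrt(mse(pred, true))

train = [0.2, 0.5, 0.9, 0.4]
ps = minmax_fit(train)               # default target range [-1, 1]
scaled = minmax_apply(train, ps)
restored = minmax_reverse(scaled, ps)
print(scaled, restored)

pred, true = [0.3, 0.5, 0.8], [0.25, 0.55, 0.9]
print(mae(pred, true), mse(pred, true), rmse(pred, true))
```

As in the script, the scaling settings are fitted on the training data only and then reused to transform test inputs and to map predictions back to the original units.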
