A Case Study of an apiserver Avalanche Caused by ServiceAccount

2022-06-24 07:09:00 【shawwang】

Background

A business ran a K8s 1.12 cluster with thousands of nodes. One day, after a burst of requests hit the master, the cluster suddenly became unavailable: a large number of components timed out when accessing the master, and restarting the master components did not restore service.

Troubleshooting

We started with the kube-apiserver logs and found a large number of entries for TokenReview creation requests, all timing out at 30s. We suspected that these requests were exhausting kube-apiserver's rate limit and thereby affecting other, normal requests.

The kube-apiserver monitoring confirmed this: the metric apiserver_current_inflight_requests{requestKind="mutating"} had indeed reached the max-mutating-requests-inflight value configured by the business, so the limit was being triggered.
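To illustrate why a full in-flight limit hurts unrelated writes, here is a minimal sketch of an in-flight limiter built on a buffered channel. It is not the kube-apiserver implementation; the type `inflightLimiter`, its methods, and the limit of 2 are hypothetical and chosen only to keep the example small.

```go
package main

import "fmt"

// inflightLimiter caps the number of concurrently running requests.
// A buffered channel acts as a counting semaphore. (Hypothetical type.)
type inflightLimiter struct {
	slots chan struct{}
}

func newInflightLimiter(max int) *inflightLimiter {
	return &inflightLimiter{slots: make(chan struct{}, max)}
}

// tryAcquire takes a slot without blocking. A false result means the
// limit is reached and the caller should reject the request.
func (l *inflightLimiter) tryAcquire() bool {
	select {
	case l.slots <- struct{}{}:
		return true
	default:
		return false
	}
}

// release frees a slot once a request has finished.
func (l *inflightLimiter) release() {
	<-l.slots
}

// inUse reports how many slots are currently held.
func (l *inflightLimiter) inUse() int {
	return len(l.slots)
}

func main() {
	limiter := newInflightLimiter(2) // assumed tiny limit for illustration

	// Two slow requests (think of TokenReview creations that hang
	// until their timeout) occupy every slot.
	fmt.Println("slow request 1 admitted:", limiter.tryAcquire())
	fmt.Println("slow request 2 admitted:", limiter.tryAcquire())

	// An unrelated write arriving now finds no free slot.
	fmt.Println("unrelated write admitted:", limiter.tryAcquire())

	// Only when a slow request finishes can other work get in.
	limiter.release()
	fmt.Println("after one release, admitted:", limiter.tryAcquire())
}
```

The point of the sketch is that the limit counts requests in progress, not requests per second: a small number of slow requests is enough to keep it full.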

Our first guess was that hitting the mutating limit had caused a large number of client retries, which triggered the avalanche. We had seen similar problems before, and raising the limit had been enough to recover. So we first raised max-mutating-requests-inflight and observed. After a while, the business was still seeing timeouts, and the logs still showed many Create TokenReview timeouts. It seemed the root cause could only be found by tracking down where the TokenReview creations were coming from.

TokenReview is a virtual resource that is created only when a token-related authentication request is made, so the priority was to find out which component was requesting authentication so frequently. The existing logs held no more useful information, so to identify the source of the requests we turned to the ultimate tool for inspecting kube-apiserver requests: auditing. With the relevant audit rules configured, we could easily collect statistics on the source and details of the requests.

After a period of observation, we found that the TokenReview requests came almost entirely from kubelets and were spread fairly evenly, with no obvious concentration. They looked like normal requests, which ruled out a burst of requests from a single node.

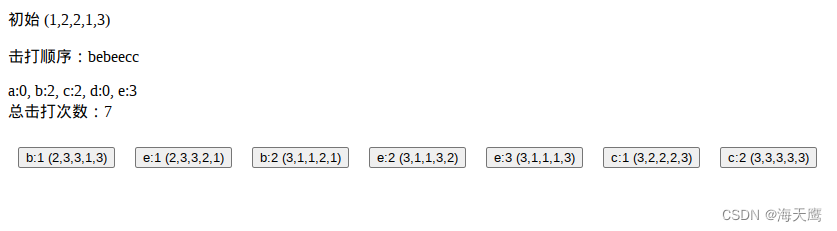

Under what circumstances does kubelet ask kube-apiserver to create a TokenReview? The K8s source code shows that when a client requests kubelet using ServiceAccount authentication, kubelet asks apiserver to authenticate the token by creating a TokenReview (the webhook method). When the TokenReview is created, kube-apiserver calls its built-in Authenticator. For token authentication, it checks basic auth, bearertoken, ServiceAccount token, bootstrap token and so on in sequence (the order can be seen in how the BuildAuthenticator function constructs the chain).

The Authenticator for ServiceAccount tokens relies on ServiceAccountTokenGetter, which fetches the secret through the loopback client. In K8s-1.12 this lookup is not cached, and the loopback client is rate limited to qps 50, burst 100 (SecureServingInfo.NewClientConfig, before K8s-1.17). During an avalanche this limit is easily hit, the stalled requests fill up max-mutating-requests-inflight, and other write operations are affected.

(Note: kube-apiserver's token authentication adds a local cache by default, with entries cached for 10s. kubelet's webhook token authentication also has a local cache, 2 minutes by default. Failed requests are retried with backoff. But when the cluster has thousands of nodes and the caches have expired, it is difficult to recover automatically once an avalanche has been triggered.)
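A rough back-of-the-envelope calculation shows why qps 50 and burst 100 are so easy to exceed. The sketch below assumes a simple token-bucket model that starts full and assumes all lookups arrive at the same moment (a worst case, for example after caches expire together); the node counts are hypothetical and `drainSeconds` is an illustrative helper, not Kubernetes code.

```go
package main

import "fmt"

// drainSeconds estimates how long the last of n simultaneous requests
// waits behind a token bucket that starts full with `burst` tokens and
// refills at `qps` tokens per second. The first `burst` requests pass
// immediately; the rest are admitted at a rate of qps.
func drainSeconds(n, qps, burst int) float64 {
	if n <= burst {
		return 0
	}
	return float64(n-burst) / float64(qps)
}

func main() {
	const qps, burst = 50, 100 // loopback client limits cited in the article
	const timeoutSeconds = 30  // request timeout seen in the logs

	// Hypothetical cluster sizes; one uncached lookup per node is assumed.
	for _, nodes := range []int{1000, 3000, 5000} {
		wait := drainSeconds(nodes, qps, burst)
		fmt.Printf("%d simultaneous lookups: last one waits about %.0fs, exceeds %ds timeout: %v\n",
			nodes, wait, timeoutSeconds, wait > timeoutSeconds)
	}
}
```

Under this model, at most 100 + 50 × 30 = 1600 lookups fit inside one 30s timeout window. Anything beyond that times out and is retried, which adds to the next window's load instead of relieving it.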

With the cause identified, how could we recover quickly? The K8s code showed that in this version the loopback client's rate limit is hardcoded, and no configuration option can change it. Changing it would mean switching to a different K8s version, which is a relatively large change.

Looking at it from another angle: if we could find out who was using a ServiceAccount to request the kubelets, could we solve the problem there? The audit logs showed that the requesting user was almost always the same ServiceAccount, system:serviceaccount:metrics-server. That explained everything: metrics-server collects monitoring data through kubelet, so it requests the kubelet on every node, and in a large cluster this easily triggers the rate limit of kube-apiserver's loopback client.

Solution

With the cause and the source identified, the problem was easy to solve: changing metrics-server's authentication method to certificate authentication or static token authentication resolves the problem temporarily.

In addition, K8s-1.17 removed the loopback client's rate limit, and from K8s-1.14 onward ServiceAccountTokenGetter first reads data from an informer and only falls back to requesting apiserver through the loopback client if that fails. Upgrading the cluster's master version is therefore the way to solve this problem fundamentally.

Appendix

Related discussions in the K8s community:

https://github.com/kubernetes/kubernetes/issues/71811

https://github.com/kubernetes/kubernetes/pull/71816
