当前位置:网站首页>pyspark --- count the mode of multiple columns and return it at once

pyspark --- count the mode of multiple columns and return it at once

2022-08-03 07:02:00 【WGS.】

df = ss.createDataFrame([{

'newid': 'DDD1', 'time_h': 10, 'city': '北京', 'model': '华为'},

{

'newid': 'DDD1', 'time_h': 10, 'city': '北京', 'model': '华为'},

{

'newid': 'DDD1', 'time_h': 20, 'city': '北京', 'model': '小米'},

{

'newid': 'DDD1', 'time_h': 2, 'city': '上海', 'model': '苹果'},

{

'newid': 'DDD1', 'time_h': 3, 'city': '青岛', 'model': '华为'},

{

'newid': 'www1', 'time_h': 20, 'city': '青岛', 'model': '华为'}])\

.select(*['newid', 'city', 'model', 'time_h'])

df.show()

df.createOrReplaceTempView('info')

sqlcommn = "select {}, count(*) as `cnt` from info group by {} having count(*) >= (select max(count) from (select count(*) count from info group by {}) c)"

strsql = """ select city, model, time_h from ({}) sub_city left join ({}) sub_model on 1=1 left join ({}) sub_time_h on 1=1 """.format(

sqlcommn.format('city', 'city', 'city'),

sqlcommn.format('model', 'model', 'model'),

sqlcommn.format('time_h', 'time_h', 'time_h')

)

df = ss.sql(strsql)

df.show()

all_modes_dict = {

}

cols = ['city', 'model', 'time_h']

modeCol = ['city_mode', 'model_mode', 'time_h_mode']

for c in modeCol:

all_modes_dict[c] = []

for row in df.select(['city', 'model', 'time_h']).collect():

for mc in range(len(modeCol)):

all_modes_dict.get(modeCol[mc]).append(row[cols[mc]])

for k, v in all_modes_dict.items():

all_modes_dict[k] = set(v)

# 只取一个

# all_modes_dict[k] = list(set(v))[0]

all_modes_dict

global all_modes_dict_bc

all_modes_dict_bc = sc.broadcast(all_modes_dict)

+-----+----+-----+------+

|newid|city|model|time_h|

+-----+----+-----+------+

| DDD1|北京| 华为| 10|

| DDD1|北京| 华为| 10|

| DDD1|北京| 小米| 20|

| DDD1|上海| 苹果| 2|

| DDD1|青岛| 华为| 3|

| www1|青岛| 华为| 20|

+-----+----+-----+------+

+----+-----+------+

|city|model|time_h|

+----+-----+------+

|北京| 华为| 10|

|北京| 华为| 20|

+----+-----+------+

{'city_mode': {'北京'}, 'model_mode': {'华为'}, 'time_h_mode': {10, 20}}

udfway to fill the mode

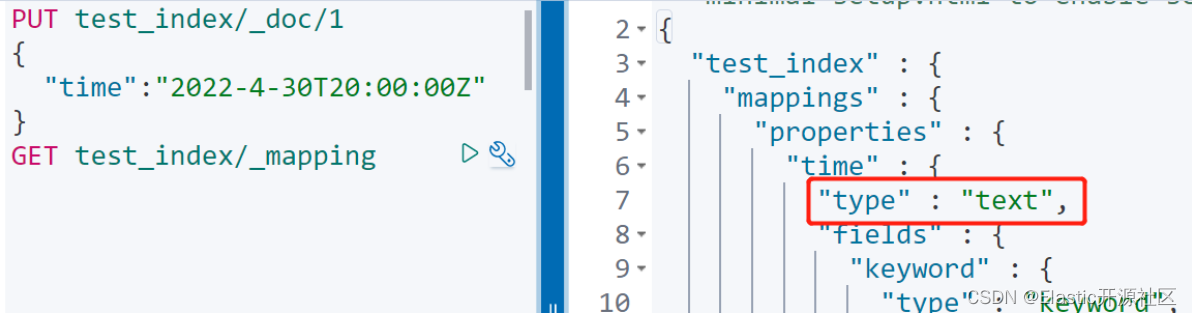

spark版本如果是2.4的话,多次调用这个sql和udfAn error will be reported if the mode is filled in the mode,可考虑用fillna方式

# UserDefinedFunction

def deal_na_udf(row_col, modekey):

if row_col is None or row_col == '':

row_col = all_modes_dict_bc.value[modekey]

return row_col

ludf = UserDefinedFunction(lambda row_col, modekey: deal_na_udf(row_col, modekey))

# # lambda udf

# ludf = fn.udf(lambda row_col, modekey: all_modes_dict_bc.value[modekey] if row_col is None or row_col == '' else row_col)

for i in range(len(colnames)):

df = df.withColumn(colnames[i], ludf(fn.col(colnames[i]), fn.lit(modeCol[i])))

finallway to fill the mode

# 将 空串 替换为 null

# # 方法1

# for column in df.columns:

# trimmed = fn.trim(fn.col(column))

# df = df.withColumn(column, fn.when(fn.length(trimmed) != 0, trimmed).otherwise(None))

# 方法2

df = df.replace(to_replace='', value=None, subset=['time_h', 'model', 'city'])

for i in range(len(colnames)):

df = df.fillna({

colnames[i]: all_modes_dict_bc.value[modeCol[i]]})

It can also be in the form of word frequency statistics:

rdds = df.select('city').rdd.map(lambda word: (word, 1)).reduceByKey(lambda a, b: a + b)\

.sortBy(lambda x: x[1], False).take(3)

# examples: [(Row(city=None), 2), (Row(city='北京'), 2), (Row(city='杭州'), 1)]

边栏推荐

猜你喜欢

随机推荐

零代码工具拖拽流程图

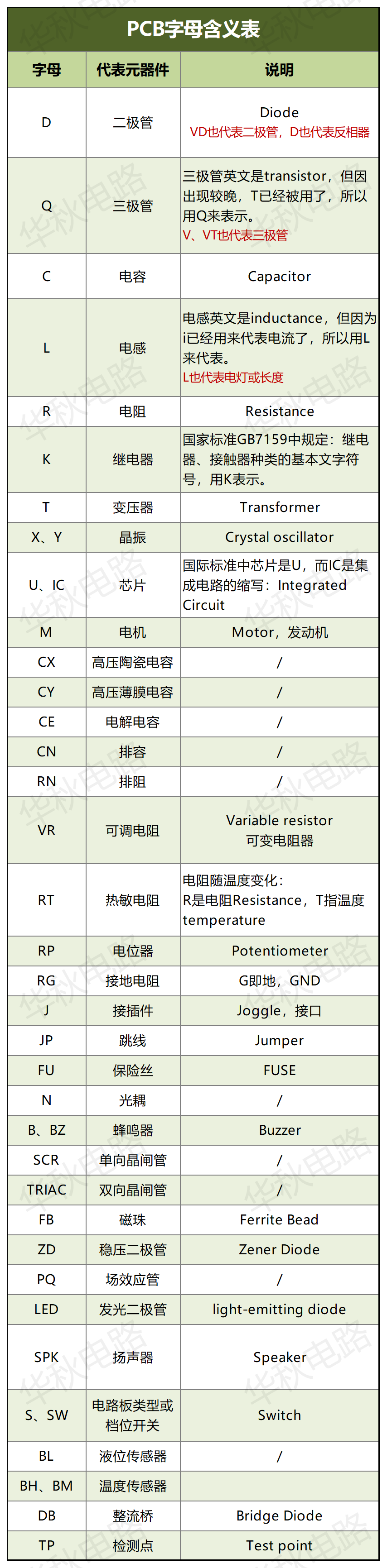

【干货分享】PCB 板变形原因!不看不知道

Charles抓包显示<unknown>解决方案

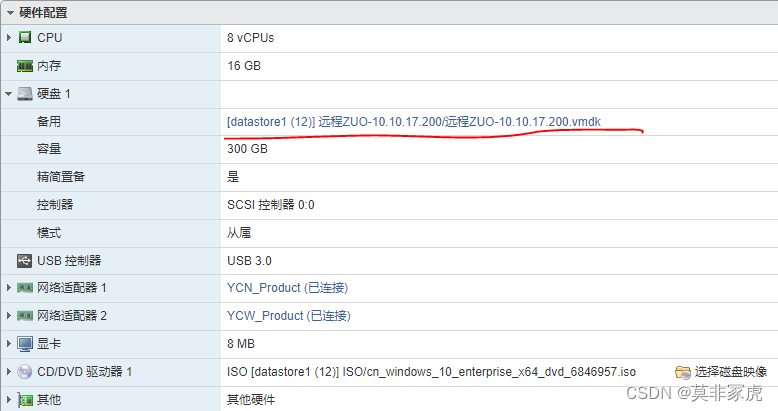

【OpenStack云平台】搭建openstack云平台

SQLServer2019安装(Windows)

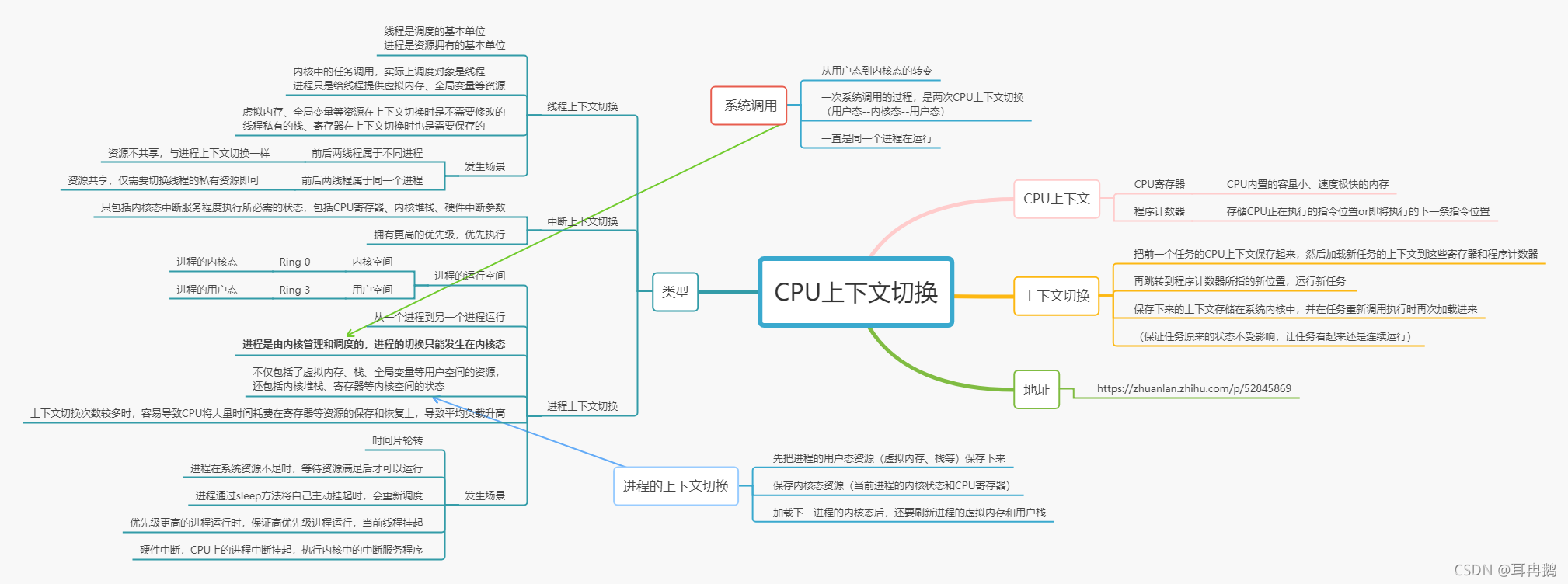

CPU上下文切换详解思维导图

【英语单词】常见深度学习中编程用到的英语词汇

【GIoU loss】GIoU loss损失函数理解

WinServer2012r2破解多用户同时远程登录,并取消用户控制

MySQL 数据库基础知识(系统化一篇入门)

C # program with administrator rights to open by default

Nvidia NX使用向日葵远程桌面遇到的问题

Oracle 数据库集群常用巡检命令

Chrome 配置samesite=none方式

在OracleLinux8.6的Zabbix6.0中监控Oracle11gR2

AR路由器如何配置Portal认证(二层网络)

计算机网络高频面试考点

【云原生 · Kubernetes】搭建Harbor仓库

TFS(Azure DevOps)禁止多人同时签出

【设计指南】避免PCB板翘,合格的工程师都会这样设计!