
Loops in TensorRT

2022-07-02 06:29:00 【Little Heshang sweeping the floor】


NVIDIA TensorRT supports loop-like constructs, which are useful for recurrent networks. TensorRT loops support scanning over input tensors, recurrent definitions of tensors, and both "scan outputs" and "last value" outputs.

1. Defining A Loop

A loop is defined by loop boundary layers:

- ITripLimitLayer specifies how many times the loop iterates.

- IIteratorLayer enables a loop to iterate over a tensor.

- IRecurrenceLayer specifies a recurrent definition.

- ILoopOutputLayer specifies an output of the loop.

Each boundary layer inherits from the class ILoopBoundaryLayer, which has a method getLoop() for getting its associated ILoop. The ILoop object identifies the loop: all loop boundary layers with the same ILoop belong to that loop.

The following figure depicts the structure of a loop and the data flow at its boundary. Loop-invariant tensors can be used directly inside the loop, as shown for FooLayer.

[Figure: loop structure and data flow at the loop boundary (loop.png)]

A loop can have multiple IIteratorLayer, IRecurrenceLayer, and ILoopOutputLayer instances, and at most two ITripLimitLayer instances, as explained later. A loop with no ILoopOutputLayer has no output and is optimized away by TensorRT.

The Layers for Flow-Control Constructs section of the NVIDIA TensorRT Support Matrix lists the TensorRT layers that may be used in the loop interior.

Interior layers are free to use tensors defined inside or outside the loop. The interior can contain other loops (see Nested Loops) and other conditional constructs (see Conditionals Nesting).

To define a loop, first create an ILoop object with the method INetworkDefinition::addLoop. Then add the boundary layers and interior layers. The rest of this section describes the features of the boundary layers, using loop to denote the ILoop* returned by INetworkDefinition::addLoop.

ITripLimitLayer supports both counted loops and while loops.

- loop->addTripLimit(t, TripLimit::kCOUNT) creates an ITripLimitLayer whose input t is a 0D INT32 tensor that specifies the number of loop iterations.

- loop->addTripLimit(t, TripLimit::kWHILE) creates an ITripLimitLayer whose input t is a 0D Bool tensor that specifies whether an iteration should occur. Typically t is either the output of an IRecurrenceLayer or a calculation based on that output.

A loop can have at most one limit of each kind.

IIteratorLayer supports iterating forward or backward over any axis.

- loop->addIterator(t) adds an IIteratorLayer that iterates over axis 0 of tensor t. For example, if the input is the matrix:

    2 3 5
    4 6 8

  the output is the 1D tensor {2, 3, 5} on the first iteration and {4, 6, 8} on the second iteration. It is invalid to iterate beyond the bounds of the tensor.

- loop->addIterator(t, axis) is similar, but the layer iterates over the given axis. For example, if axis=1 and the input is a matrix, each iteration delivers one column of the matrix.

- loop->addIterator(t, axis, reverse) is similar, but the layer produces its output in reverse order if reverse=true.
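The iteration order described above can be modeled on the host in plain C++. This is only a sketch of what the layer delivers per iteration, not TensorRT API: `iterateMatrix` and `IntMatrix` are hypothetical names, and the model assumes a rectangular 2D input.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using IntMatrix = std::vector<std::vector<int>>;

// Hypothetical host-side model of IIteratorLayer on a 2D tensor.
// Returns the slice delivered on each iteration, in iteration order.
std::vector<std::vector<int>> iterateMatrix(const IntMatrix& t, int axis = 0, bool reverse = false)
{
    assert(axis == 0 || axis == 1);
    assert(!t.empty());
    const std::size_t rows = t.size();
    const std::size_t cols = t[0].size();
    const std::size_t trips = (axis == 0) ? rows : cols;

    std::vector<std::vector<int>> slices;
    for (std::size_t step = 0; step < trips; ++step)
    {
        // With reverse=true the last slice along the axis is delivered first.
        const std::size_t idx = reverse ? trips - 1 - step : step;
        std::vector<int> slice;
        if (axis == 0)
        {
            slice = t[idx]; // one row per iteration
        }
        else
        {
            for (std::size_t r = 0; r < rows; ++r)
            {
                slice.push_back(t[r][idx]); // one column per iteration
            }
        }
        slices.push_back(slice);
    }
    return slices;
}
```

For the matrix in the example, `iterateMatrix(m)` yields the two rows in order, `iterateMatrix(m, 1)` yields three columns, and `iterateMatrix(m, 0, true)` yields the rows starting with {4, 6, 8}.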

ILoopOutputLayer supports three forms of loop output:

- loop->addLoopOutput(t, LoopOutput::kLAST_VALUE) outputs the last value of t, where t must be the output of an IRecurrenceLayer.

- loop->addLoopOutput(t, LoopOutput::kCONCATENATE, axis) outputs the concatenation of each iteration's input t. For example, if the input is a 1D tensor with value {a, b, c} on the first iteration and {d, e, f} on the second iteration, and axis=0, the output is the matrix:

    a b c
    d e f

  If axis=1, the output is:

    a d
    b e
    c f

- loop->addLoopOutput(t, LoopOutput::kREVERSE, axis) is similar, but reverses the order.

Both the kCONCATENATE and kREVERSE forms require a second input, a 0D INT32 shape tensor that specifies the length of the new output dimension. When the length is greater than the number of iterations, the extra elements contain arbitrary values. The second input, for example u, should be set with ILoopOutputLayer::setInput(1, u).
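The concatenation layouts above can be checked with a small host-side model. This sketch is not TensorRT API: `concatLoopOutput` and `CharMatrix` are hypothetical names, per-iteration values are assumed to be 1D and of equal length, and the output length is assumed to equal the number of iterations, so the padding case with arbitrary values is deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using CharMatrix = std::vector<std::vector<char>>;

// Hypothetical host-side model of kCONCATENATE (reverse=false) and
// kREVERSE (reverse=true). values[i] is the 1D tensor seen on iteration i.
CharMatrix concatLoopOutput(const std::vector<std::vector<char>>& values, int axis, bool reverse = false)
{
    assert(axis == 0 || axis == 1);
    assert(!values.empty());
    const std::size_t n = values.size();    // number of iterations
    const std::size_t k = values[0].size(); // length of each per-iteration value

    // axis=0 stacks iterations as rows; axis=1 stacks them as columns.
    CharMatrix out = (axis == 0) ? CharMatrix(n, std::vector<char>(k))
                                 : CharMatrix(k, std::vector<char>(n));
    for (std::size_t i = 0; i < n; ++i)
    {
        assert(values[i].size() == k);
        // In the reversed form, iteration i lands at position n-1-i.
        const std::size_t pos = reverse ? n - 1 - i : i;
        for (std::size_t e = 0; e < k; ++e)
        {
            if (axis == 0)
            {
                out[pos][e] = values[i][e];
            }
            else
            {
                out[e][pos] = values[i][e];
            }
        }
    }
    return out;
}
```

With the two iterations {a, b, c} and {d, e, f}, axis 0 gives a 2x3 matrix and axis 1 gives its 3x2 transpose, matching the layouts shown above.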

Finally, there is IRecurrenceLayer. Its first input specifies the initial output value, and its second input specifies the next output value. The first input must come from outside the loop; the second input usually comes from inside the loop. For example, the TensorRT analog of this C++ fragment:

    for (int32_t i = j; ...; i += k) ...

can be created with the following calls, where j and k are ITensor* and n is the INetworkDefinition:

    ILoop* loop = n.addLoop();
    // First input: the initial value j, defined outside the loop.
    IRecurrenceLayer* iRec = loop->addRecurrence(*j);
    // i is the value of the recurrence on the current iteration.
    ITensor* i = iRec->getOutput(0);
    // Interior computation: iNext = i + k.
    ITensor* iNext = n.addElementWise(*i, *k,
        ElementWiseOperation::kADD)->getOutput(0);
    // Second input: the value for the next iteration (the back edge).
    iRec->setInput(1, *iNext);

The second input to IRecurrenceLayer is the only case where TensorRT allows a back edge. If such inputs are removed, the remaining network must be acyclic.
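To make the data flow of the recurrence concrete, here is a host-side model in plain C++ of the counter loop `i = j; i += k` run for a fixed trip count. It is a sketch, not TensorRT API: `runRecurrence` and `RecurrenceTrace` are hypothetical names, and the last value is taken to be element n of the recurrence sequence, as the Formal Semantics section defines it for an n-trip loop.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical trace of a recurrence: the value seen on each iteration,
// plus the value a kLAST_VALUE loop output would report.
struct RecurrenceTrace
{
    std::vector<int32_t> perIteration;
    int32_t lastValue;
};

RecurrenceTrace runRecurrence(int32_t j, int32_t k, int32_t tripCount)
{
    assert(tripCount >= 0);
    RecurrenceTrace trace{};
    int32_t i = j; // first input: initial value from outside the loop
    for (int32_t trip = 0; trip < tripCount; ++trip)
    {
        trace.perIteration.push_back(i); // recurrence output on this iteration
        const int32_t iNext = i + k;     // interior element-wise add
        i = iNext;                       // second input: value for the next iteration
    }
    trace.lastValue = i; // element n of the sequence <j, j+k, j+2k, ...>
    return trace;
}
```

For example, `runRecurrence(3, 2, 4)` sees 3, 5, 7, 9 inside the loop and reports 11 as the last value; with a trip count of 0 the last value is simply the initial value.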

2. Formal Semantics

TensorRT has applicative semantics, meaning that there are no visible side effects other than engine inputs and outputs. Because there are no side effects, intuitions about loops from imperative languages do not always hold. This section defines the formal semantics of TensorRT's loop constructs.

The formal semantics is based on lazy sequences of tensors. Each iteration of a loop corresponds to an element in the sequence. The sequence for a tensor X inside the loop is denoted ⟨X_0, X_1, X_2, ...⟩. Elements of the sequence are evaluated lazily, that is, as needed.

The output of IIteratorLayer(X) is ⟨X[0], X[1], X[2], ...⟩, where X[i] denotes subscripting on the axis specified for the IIteratorLayer.

The output of IRecurrenceLayer(X,Y) is ⟨X, Y_0, Y_1, Y_2, ...⟩.

The input and output of an ILoopOutputLayer depend on the type of LoopOutput.

- kLAST_VALUE: The input is a single tensor X, and the output is X_n for an n-trip loop.

- kCONCATENATE: The first input is a tensor X, and the second input is a scalar shape tensor Y. The result is the concatenation of X_0, X_1, X_2, ..., X_{n-1}, with post-padding if necessary, to the length specified by Y. It is a runtime error if Y < n. Y is a build-time constant. Note the inverse relationship with IIteratorLayer: IIteratorLayer maps a tensor to a sequence of subtensors, while an ILoopOutputLayer with kCONCATENATE maps a sequence of subtensors to a tensor.

- kREVERSE: Similar to kCONCATENATE, but the output is in the reverse direction.

The value of n in the definitions of the ILoopOutputLayer output is determined by the loop's ITripLimitLayer:

- For counted loops, it is the iteration count, that is, the input to the ITripLimitLayer.

- For while loops, it is the least n such that X_n is false, where X is the sequence for the ITripLimitLayer's input tensor.
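The rule for while loops can be modeled by evaluating the condition sequence on demand and stopping at the first false element. This is a sketch in plain C++, not TensorRT API: `whileTripCount` is a hypothetical name, `cond(i)` stands in for element X_i of the condition sequence, and the `maxTrips` cap exists only so the sketch always terminates.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

// Hypothetical model of the trip count n of a kWHILE loop.
// cond(i) yields element X_i of the condition sequence, evaluated lazily.
int32_t whileTripCount(const std::function<bool(int32_t)>& cond, int32_t maxTrips)
{
    assert(maxTrips >= 0);
    for (int32_t n = 0; n < maxTrips; ++n)
    {
        if (!cond(n))
        {
            return n; // least n such that X_n is false
        }
    }
    return maxTrips; // safety cap of this sketch, not part of the semantics
}
```

A condition that is false from the start gives n = 0, so the loop body never runs; a condition `i < 3` gives n = 3.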

The output of a non-loop layer is the sequence-wise application of the layer's function. For example, for a two-input non-loop layer F, F(X,Y) = ⟨f(X_0, Y_0), f(X_1, Y_1), f(X_2, Y_2), ...⟩. If a tensor comes from outside the loop, that is, it is loop-invariant, its sequence is created by replicating the tensor.

3. Nested Loops

TensorRT infers the nesting of loops from the data flow. For example, if loop B uses values defined inside loop A, then B is considered to be nested inside A.

TensorRT rejects networks in which the loops are not cleanly nested, for example if loop A uses values defined in the interior of loop B and vice versa.

4. Limitations

A loop that refers to more than one dynamic dimension can take an unexpected amount of memory.

In a loop, memory is allocated as if every dynamic dimension took on the maximum value of any of those dimensions. For example, if a loop refers to two tensors with dimensions [4,x,y] and [6,y], memory for those tensors is allocated as if their dimensions were [4,max(x,y),max(x,y)] and [6,max(x,y)].
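The allocation rule can be illustrated with a short calculation in plain C++. This is a sketch under assumptions, not TensorRT API or its allocator: `Dim`, `Shape`, and `loopAllocationShapes` are hypothetical names, and the sketch simply takes the largest dynamic extent it is given without modeling where that extent comes from.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical description of one tensor dimension.
struct Dim
{
    int64_t value; // extent of the dimension
    bool dynamic;  // true if the extent is a dynamic dimension
};
using Shape = std::vector<Dim>;

// Returns the shapes that memory is sized for: every dynamic dimension is
// replaced by the maximum over all dynamic dimensions the loop refers to.
std::vector<std::vector<int64_t>> loopAllocationShapes(const std::vector<Shape>& tensors)
{
    int64_t maxDynamic = 0;
    for (const Shape& shape : tensors)
    {
        for (const Dim& d : shape)
        {
            assert(d.value >= 0);
            if (d.dynamic)
            {
                maxDynamic = std::max(maxDynamic, d.value);
            }
        }
    }

    std::vector<std::vector<int64_t>> result;
    for (const Shape& shape : tensors)
    {
        std::vector<int64_t> dims;
        for (const Dim& d : shape)
        {
            dims.push_back(d.dynamic ? maxDynamic : d.value);
        }
        result.push_back(dims);
    }
    return result;
}
```

With x = 10 and y = 3, the tensors [4,x,y] and [6,y] are sized as [4,10,10] and [6,10]: 400 and 60 elements instead of the 120 and 18 that the actual shapes need.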

The input to an ILoopOutputLayer with kLAST_VALUE must be the output of an IRecurrenceLayer.

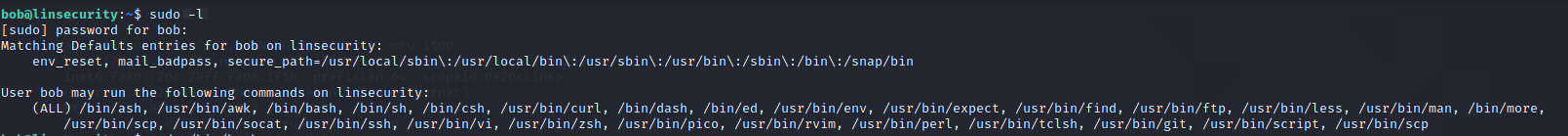

The loop API supports only FP32 and FP16 precision.

5. Replacing IRNNv2Layer With Loops

IRNNv2Layer was deprecated in TensorRT 7.2.1 and will be removed in TensorRT 9.0. Use the loop API to synthesize a recurrent subnetwork instead. For an example, see the method SampleCharRNNLoop::addLSTMCell in sampleCharRNN. The loop API lets you express general recurrent networks rather than being limited to the prefabricated cells in IRNNLayer and IRNNv2Layer.

For more information, see sampleCharRNN.

边栏推荐

- LeetCode 27. Removing Elements

- Ruijie ebgp configuration case

- When requesting resttemplate, set the request header, request parameters, and request body.

- Hydration failed because the initial UI does not match what was rendered on the server.问题原因之一

- sprintf_s的使用方法

- PgSQL学习笔记

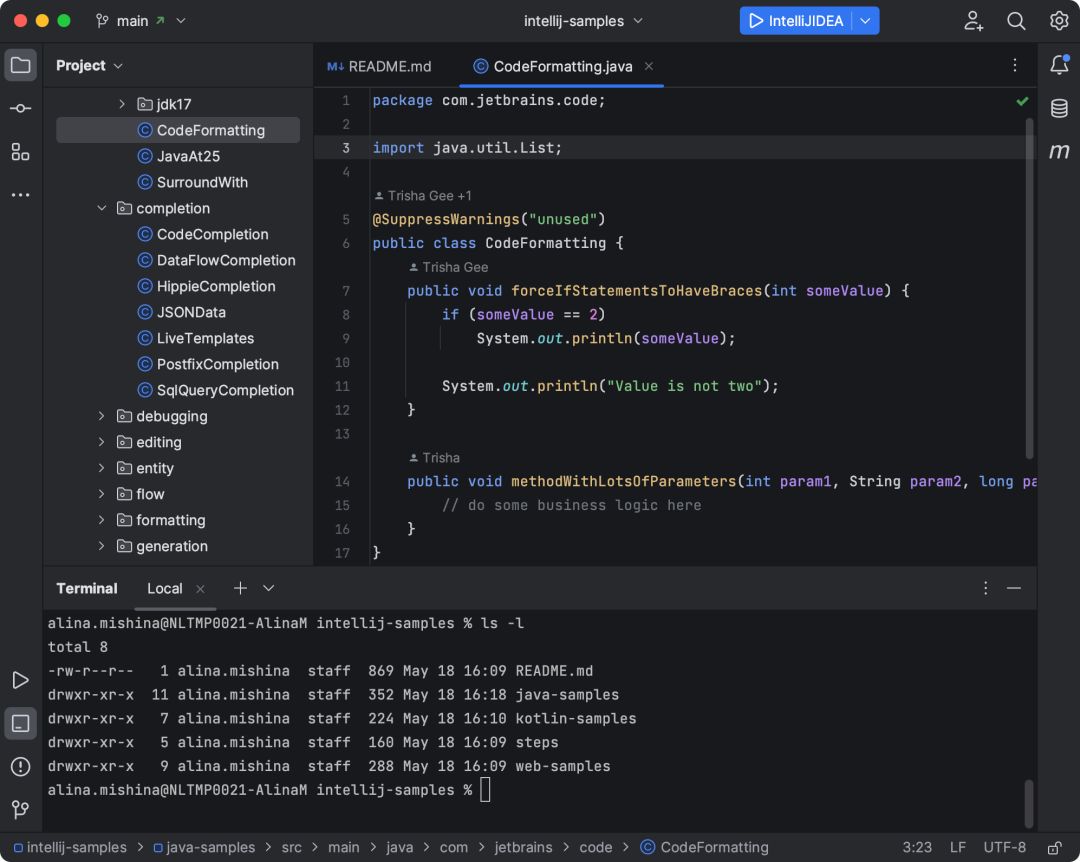

- IDEA公布全新默认UI,太清爽了(内含申请链接)

- Browser principle mind map

- Three suggestions for all students who have graduated and will graduate

- 深入了解JUC并发(一)什么是JUC

猜你喜欢

随机推荐

Redis---1.数据结构特点与操作

浅谈三点建议为所有已经毕业和终将毕业的同学

CUDA中内置的Vector类型和变量

20201002 VS 2019 QT5.14 开发的程序打包

Top 10 classic MySQL errors

qq邮箱接收不到jenkins构建后使用email extension 发送的邮件(timestamp 或 auth.......)

2020-9-23 QT的定时器Qtimer类的使用。

Linear DP (split)

Arduino Wire 库使用

Learn about various joins in SQL and their differences

Log (common log framework)

FE - Eggjs 结合 Typeorm 出现连接不了数据库

MySQL的10大经典错误

Vector types and variables built in CUDA

Codeforces Round #797 (Div. 3) A—E

Android - Kotlin 下使用 Room 遇到 There are multiple good constructors and Room will ... 问题

Is there a really free applet?

TensorRT中的循环

Does the assignment of Boolean types such as tag attribute disabled selected checked not take effect?

sprintf_s的使用方法