
08 Spark cluster construction

2022-08-01 16:35:00 【blue wind 9】

Foreword

Recently I have had a series of environment-setup requirements to deal with, so I am recording them here.

Three Spark nodes: 192.168.110.150, 192.168.110.151, 192.168.110.152

192.168.110.150 is master, 192.168.110.151 is slave01, and 192.168.110.152 is slave02
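The configuration files below refer to the nodes by name (`spark://master:7077`, and `slave01`/`slave02` in `workers`), so every machine must be able to resolve those names. The original does not say how this is done. A minimal sketch, assuming plain `/etc/hosts` entries on all three machines, is an idempotent append (`add_cluster_hosts` is a hypothetical helper):

```bash
#!/usr/bin/env bash
# Sketch: map the cluster hostnames to their IPs in a hosts file.
# Assumption: names are resolved through /etc/hosts; run as root on every node.
set -euo pipefail

# add_cluster_hosts is a hypothetical helper name.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in "192.168.110.150 master" \
               "192.168.110.151 slave01" \
               "192.168.110.152 slave02"; do
    # -q: quiet, -x: whole-line match, -F: fixed string (dots are literal);
    # append only when an identical line is not there yet, so reruns are safe
    grep -qxF "$entry" "$hosts_file" 2>/dev/null || echo "$entry" >> "$hosts_file"
  done
}
```

After sourcing the script, run `add_cluster_hosts` as root on each machine. It defaults to `/etc/hosts`, and running it a second time adds nothing.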

Passwordless SSH (mutual trust) is configured among all three machines
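start-all.sh starts each worker by logging in over SSH to the hosts listed in `conf/workers`, which is why this trust relationship matters. One way to set it up from master is sketched below, assuming root logins and the standard OpenSSH `ssh-keygen`/`ssh-copy-id` tools. `run`, `setup_ssh_trust`, and the `DRY_RUN` switch are hypothetical names introduced for illustration:

```bash
#!/usr/bin/env bash
# Sketch: passwordless SSH from master to all three nodes.
# start-all.sh logs in to every host listed in conf/workers over SSH.
# Assumptions: run as root on master; DRY_RUN=1 prints commands instead of running them.
set -euo pipefail

# Run a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_ssh_trust() {
  local key="$HOME/.ssh/id_rsa"
  # Create an RSA key pair with an empty passphrase if none exists yet
  if [ ! -f "$key" ]; then
    run ssh-keygen -t rsa -N "" -f "$key"
  fi
  local host
  for host in master slave01 slave02; do
    # Appends the public key to the remote ~/.ssh/authorized_keys;
    # asks for that host's password once
    run ssh-copy-id -i "$key.pub" "root@$host"
  done
}
```

`DRY_RUN=1 setup_ssh_trust` only prints the commands; without `DRY_RUN` they are executed. Copying the key to master itself is not required by start-all.sh, but it matches the "all three machines" setup described here.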

The Spark version is spark-3.2.1-bin-hadoop2.7

Spark cluster setup


1. Basic environment preparation

Install the JDK on 192.168.110.150, 192.168.110.151, and 192.168.110.152, and upload the Spark installation package to each of them

The installation package comes from the official Apache Spark Downloads page
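The upload itself is left implicit above. Assuming the tarball from that page (`spark-3.2.1-bin-hadoop2.7.tgz`) sits in the current directory on master and SSH trust is already in place, unpacking it on master and pushing it to both workers might look like this. The JDK installation is not covered, and `distribute_spark` and `DRY_RUN` are hypothetical names:

```bash
#!/usr/bin/env bash
# Sketch: unpack the Spark tarball on master and copy it to both workers.
# Assumptions: spark-3.2.1-bin-hadoop2.7.tgz is in the current directory on master,
# passwordless SSH as root already works, and DRY_RUN=1 only prints the commands.
set -euo pipefail

INSTALL_DIR=/usr/local/ProgramFiles
TARBALL=spark-3.2.1-bin-hadoop2.7.tgz

# Run a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

distribute_spark() {
  # Local install on master
  run mkdir -p "$INSTALL_DIR"
  run tar -xzf "$TARBALL" -C "$INSTALL_DIR"
  local host
  for host in slave01 slave02; do
    run ssh "root@$host" "mkdir -p $INSTALL_DIR"
    run scp "$TARBALL" "root@$host:$INSTALL_DIR/"
    # Unpack on the worker so its layout matches master's
    run ssh "root@$host" "tar -xzf $INSTALL_DIR/$TARBALL -C $INSTALL_DIR"
  done
}
```

Keeping the same path on every node matters: start-all.sh launches each worker using master's SPARK_HOME path on the remote host.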

2. Spark configuration adjustment

Copy the following three configuration files from their templates, adjust them, and then scp them to slave01 and slave02
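Once the three files shown below have been edited on master, the final scp step can be scripted as one copy per worker. A sketch, assuming the Spark directory used throughout this post and root SSH trust (`sync_conf` and `DRY_RUN` are hypothetical):

```bash
#!/usr/bin/env bash
# Sketch: push the three edited config files from master to both workers.
# Assumptions: run on master with passwordless SSH as root; DRY_RUN=1 only prints.
set -euo pipefail

SPARK_DIR=/usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7
CONF_FILES=(spark-defaults.conf spark-env.sh workers)

# Run a command, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

sync_conf() {
  local paths=() f host
  for f in "${CONF_FILES[@]}"; do
    paths+=("$SPARK_DIR/conf/$f")
  done
  # One scp per worker copies all three files into the same conf/ directory
  for host in slave01 slave02; do
    run scp "${paths[@]}" "root@$host:$SPARK_DIR/conf/"
  done
}
```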

```
root@master:/usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7# cp conf/spark-defaults.conf.template conf/spark-defaults.conf
root@master:/usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7# cp conf/spark-env.sh.template conf/spark-env.sh
root@master:/usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7# cp conf/workers.template conf/workers
```

Update workers

```
# A Spark Worker will be started on each of the machines listed below.
slave01
slave02
```

Update spark-defaults.conf

```
spark.master                     spark://master:7077
# spark.eventLog.enabled           true
# spark.eventLog.dir               hdfs://namenode:8021/directory
spark.serializer                 org.apache.spark.serializer.KryoSerializer
spark.driver.memory              1g
# spark.executor.extraJavaOptions  -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"
```

Update spark-env.sh

```bash
export JAVA_HOME=/usr/local/ProgramFiles/jdk1.8.0_291
export HADOOP_HOME=/usr/local/ProgramFiles/hadoop-2.10.1
export HADOOP_CONF_DIR=/usr/local/ProgramFiles/hadoop-2.10.1/etc/hadoop
export SPARK_DIST_CLASSPATH=$(/usr/local/ProgramFiles/hadoop-2.10.1/bin/hadoop classpath)
export SPARK_MASTER_HOST=master
export SPARK_MASTER_PORT=7077
```

3. Start the cluster

On the master machine, run start-all.sh

```
root@master:/usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7# ./sbin/start-all.sh
starting org.apache.spark.deploy.master.Master, logging to /usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.master.Master-1-master.out
slave01: starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-slave01.out
slave02: starting org.apache.spark.deploy.worker.Worker, logging to /usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7/logs/spa…
```

4. Test the cluster
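Besides the web UI, a quick scripted check is possible before submitting anything. The sketch below assumes the master web UI runs on its default port 8080 and exposes its state as JSON under `/json/` with an `aliveworkers` field, as recent Spark versions do; treat both as assumptions to confirm against your installation. `check_workers` and `alive_workers` are hypothetical helpers:

```bash
#!/usr/bin/env bash
# Sketch: check that both workers registered with the standalone master.
# Assumptions: the master web UI uses the default port 8080 and serves its state
# as JSON at /json/ with an "aliveworkers" count; verify this for your Spark version.
set -euo pipefail

# Print the "aliveworkers" number from master JSON read on stdin
alive_workers() {
  sed -n 's/.*"aliveworkers"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

check_workers() {
  local expected="${1:-2}" count
  count="$(curl -fsS "http://master:8080/json/" | alive_workers)" || {
    echo "master web UI not reachable" >&2
    return 1
  }
  if [ "$count" = "$expected" ]; then
    echo "OK: $count workers alive"
  else
    echo "expected $expected alive workers, got '${count}'" >&2
    return 1
  fi
}
```

With slave01 and slave02 both started, `check_workers 2` is expected to print an OK line.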

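Submit the SparkPi example with 1000 slices using spark-submit. No --master option is needed, because spark.master is already set to spark://master:7077 in spark-defaults.conf.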

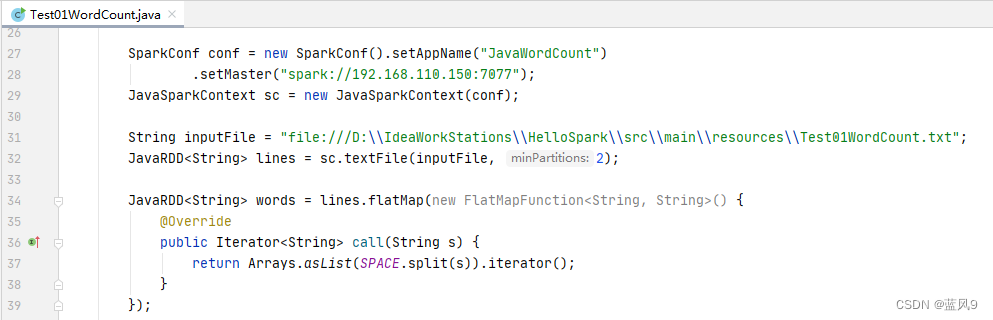

```bash
spark-submit --class org.apache.spark.examples.SparkPi /usr/local/ProgramFiles/spark-3.2.1-bin-hadoop2.7/examples/jars/spark-examples_2.12-3.2.1.jar 1000
```

Java driver submitting the Spark job

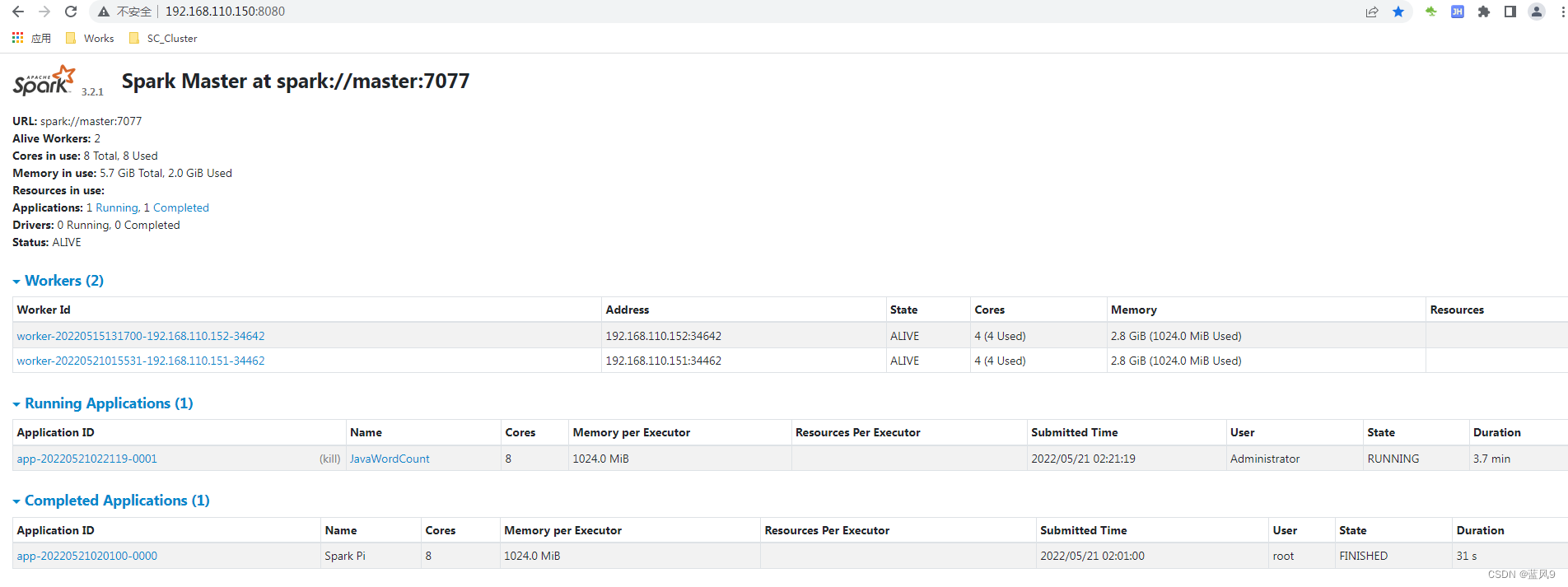

Spark web UI monitoring page
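The `1000` at the end of the spark-submit command is SparkPi's slice count, not an iteration count. In the SparkPi example source shipped with Spark, n = min(100000 × slices, Int.MaxValue); the range `1 until n` is spread over `slices` partitions, each element draws a random point in the square [-1, 1] × [-1, 1], and the job prints 4 × (points inside the unit circle) / (n - 1). With 1000 slices, n = 100,000 × 1000 = 100,000,000, well below Int.MaxValue (2,147,483,647), so roughly 10^8 points are sampled across 1000 tasks. The same estimator can be tried locally with awk; `estimate_pi` is a hypothetical helper, not part of Spark:

```bash
#!/usr/bin/env bash
# Sketch: the Monte Carlo estimator behind SparkPi, run locally in awk.
# Each "slice" contributes 100000 samples, mirroring SparkPi's sizing;
# everything runs in one process here, so keep the slice count small.
set -euo pipefail

estimate_pi() {
  local slices="${1:-2}"
  local seed="${2:-42}"
  awk -v slices="$slices" -v seed="$seed" 'BEGIN {
    srand(seed)
    n = 100000 * slices          # SparkPi also caps n at Int.MaxValue
    inside = 0
    for (i = 1; i < n; i++) {    # same element count as Scala 1 until n
      x = rand() * 2 - 1         # uniform in [-1, 1)
      y = rand() * 2 - 1
      if (x * x + y * y <= 1) inside++
    }
    printf "%.4f\n", 4 * inside / (n - 1)
  }'
}
```

`estimate_pi 10` prints a value close to 3.14; the cluster job does the same work, only split into 1000 partitions across the workers.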

End

边栏推荐

猜你喜欢

Using Canvas to achieve web page mouse signature effect

MySQL INTERVAL 关键字指南

LeetCode Week 303

清华教授发文劝退读博:我见过太多博士生精神崩溃、心态失衡、身体垮掉、一事无成!...

软件测试谈薪技巧:同为测试人员,为什么有人5K,有人 20K?

2022 Strong Net Cup CTF---Strong Net Pioneer ASR wp

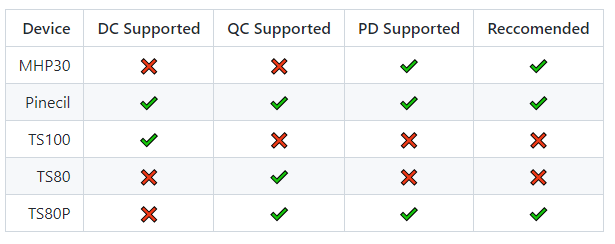

便携烙铁开源系统IronOS,支持多款便携DC, QC, PD供电烙铁,支持所有智能烙铁标准功能

HashCode technology insider interview must ask

美国弗吉尼亚大学、微软 | Active Data Pattern Extraction Attacks on Generative Language Models(对生成语言模型的主动数据模式提取攻击)

使用Canvas实现网页鼠标签名效果

随机推荐

主流定时任务解决方案全横评

年化收益高的理财产品

测试技术|白盒测试以及代码覆盖率实践

软件测试谈薪技巧:同为测试人员,为什么有人5K,有人 20K?

06 redis 集群搭建

mysql源码分析——聚簇索引

怎么安装汉化包(svn中文语言包安装)

C#的FTP帮助类

二分练习题

Winform message prompt box helper class

使用Canvas 实现手机端签名

Path helper class for C#

京东软件测试面试题,仅30题就已经拯救了50%的人

[Dark Horse Morning Post] Hu Jun's endorsement of Wukong's financial management is suspected of fraud, which is suspected to involve 39 billion yuan; Fuling mustard responded that mustard ate toenails

5年测试,只会功能要求17K,功能测试都敢要求这么高薪资了?

沈腾拯救暑期档

重庆银河证券股票开户安全吗,是正规的证券公司吗

Ant discloses the open source layout of core basic software technology for the first time

AI艺术‘美丑’不可控?试试 AI 美学评分器~

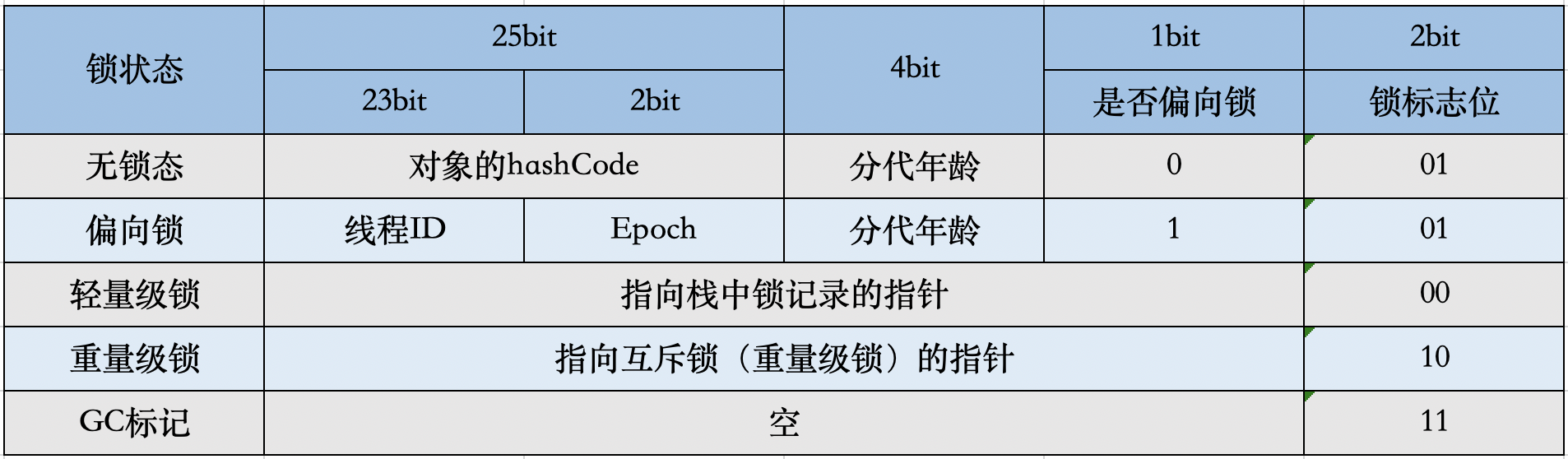

Synchronized原理