[Deep Learning] Data Preparation: Loading a Custom Image Segmentation Dataset in PyTorch

2022-07-29 07:41:00 【Fish and jade meet rain】

I. Reference material

For the overall methodological framework, refer to my earlier article.

Other details:

1. A detailed explanation of two ways to load your own image dataset in PyTorch

2. Converting a four-channel RGBA png to a three-channel RGB jpg with PIL

3. Converting images read with cv2, plt, and Image to tensor format

II. Problem definition

Suppose I have 20 images and have produced 30 label images for them with labelme. We place them in two folders, train and train_label, and name the files so that each image corresponds to its label.

How do we load these images into a deep learning pipeline as a training set and a test set in which every sample is matched with its label?

III. Implementation process

Note that there is more than one way to write this code; what follows is only one approach. If you know a better one, feel free to leave a message in the comment section below.

The key part is subclassing the Dataset class and overriding its __len__ and __getitem__ methods. The __len__ method returns the number of samples in the dataset, and __getitem__ returns the sample at a given index.
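To make the two methods concrete, here is a minimal sketch of a Dataset subclass. It is not the dataset used in this article: ToyDataset and its random in-memory tensors are hypothetical and only illustrate which methods a map-style dataset has to provide.

```python
import torch
from torch.utils.data import Dataset


class ToyDataset(Dataset):
    """Hypothetical in-memory dataset used only to illustrate the protocol."""

    def __init__(self, n_samples=5):
        # Fake "images" (1 x 4 x 4) and fake binary "masks" of the same size.
        self.images = torch.rand(n_samples, 1, 4, 4)
        self.masks = (self.images > 0.5).float()

    def __len__(self):
        # Number of samples; DataLoader and random_split rely on this.
        return self.images.shape[0]

    def __getitem__(self, index):
        # One (image, mask) pair for the given integer index.
        return self.images[index], self.masks[index]


toy = ToyDataset()
image, mask = toy[0]
print(len(toy), tuple(image.shape), tuple(mask.shape))
```

Anything that implements these two methods can be passed to DataLoader, which is why the real dataset below only needs to decide how a file index is turned into an image tensor and a label tensor.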

Step 1: Generate the file index lists

In the directory that contains the train and train_label folders, create a Python file that collects the names of all images and writes them to index txt files.

import os

path = os.getcwd()  # absolute path of the current working directory


def make_txt(root, file_name):
    # List the files in root/file_name and write one name per line to root/file_name.txt
    folder = os.path.join(root, file_name)
    # Sort the names so that both index files list matching files in the same order
    data = sorted(os.listdir(folder))
    with open(os.path.join(root, file_name + '.txt'), 'w') as f:
        for line in data:
            f.write(line + '\n')
    print('success')


# Call the function to generate the txt index for each of the two folders
make_txt(path, file_name='train')
make_txt(path, file_name='train_label')

output:

success

success

This produces the two index files, train.txt and train_label.txt.
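Since the dataset later pairs line i of train.txt with line i of train_label.txt, it can be worth checking that the two lists really line up. The following is a minimal sketch, assuming that an image and its label share the same file name apart from the extension (an assumption; your labelme export may use a different naming scheme). The helper check_pairs is hypothetical and not part of the original code.

```python
import os


def check_pairs(image_names, label_names):
    """Return (image, label) pairs, or raise if the two lists do not line up."""
    images = sorted(image_names)
    labels = sorted(label_names)
    if len(images) != len(labels):
        raise ValueError(f"{len(images)} images but {len(labels)} labels")
    pairs = []
    for img, lab in zip(images, labels):
        # Compare names without the extension, e.g. "001.jpg" vs "001.png".
        if os.path.splitext(img)[0] != os.path.splitext(lab)[0]:
            raise ValueError(f"mismatched pair: {img} / {lab}")
        pairs.append((img, lab))
    return pairs


pairs = check_pairs(["002.jpg", "001.jpg"], ["001.png", "002.png"])
print(pairs)  # [('001.jpg', '001.png'), ('002.jpg', '002.png')]
```

Running such a check once before training catches a missing or misnamed label early, instead of silently training on mismatched pairs.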

Step 2: Subclass Dataset

Based on the index files, load the images and labels by subclassing Dataset.

import os

from PIL import Image
from torch.utils.data import Dataset, DataLoader
import torchvision.transforms as trans

trans1 = trans.ToTensor()  # PIL.Image -> float tensor of shape (C, H, W)


class MyDataset(Dataset):
    def __init__(self, path, transform=None):
        super(MyDataset, self).__init__()
        self.path = path
        self.image_path = os.path.join(path, 'train.txt')
        self.label_path = os.path.join(path, 'train_label.txt')

        # Build the full path of every image from the index file
        with open(self.image_path, 'r') as f:
            imgs = [os.path.join(path, 'train', line.rstrip()) for line in f]

        # Build the full path of every label image in the same way
        with open(self.label_path, 'r') as f2:
            labels = [os.path.join(path, 'train_label', line.rstrip()) for line in f2]

        self.img = imgs
        self.label = labels
        self.transform = transform

    def __len__(self):
        return len(self.label)

    def __getitem__(self, item):
        # self.img[item] and self.label[item] are file paths (str)
        img = Image.open(self.img[item]).convert('1')  # 1-bit black-and-white image
        img = trans1(img)
        if self.transform is not None:
            # The transform receives a tensor here, not a PIL.Image
            img = self.transform(img)
        label = Image.open(self.label[item]).convert('1')
        label = trans1(label)
        # Alternative if the label is loaded as a NumPy array instead:
        # label = torch.from_numpy(label)
        # label = label.to(torch.float32)
        return img, label

Instantiate MyDataset and split the data to obtain the training set and the test set.

import torch

path = os.getcwd()
data = MyDataset(path, transform=None)

# 70% of the samples for training, the rest for testing
train_size = int(len(data) * 0.7)
test_size = len(data) - train_size
train_dataset, test_dataset = torch.utils.data.random_split(data, [train_size, test_size])
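By default random_split draws a new random permutation on every run, so the training and test sets change between runs. If a reproducible split is wanted, a seeded generator can be passed. A minimal sketch: the list samples is a hypothetical stand-in for MyDataset, and the seed 0 is arbitrary.

```python
import torch
from torch.utils.data import random_split

samples = list(range(20))  # hypothetical stand-in for a dataset with 20 samples
train_size = int(len(samples) * 0.7)
test_size = len(samples) - train_size


def split(seed):
    # A fixed seed makes the random permutation, and so the split, repeatable.
    generator = torch.Generator().manual_seed(seed)
    return random_split(samples, [train_size, test_size], generator=generator)


train_a, test_a = split(0)
train_b, test_b = split(0)
print(len(train_a), len(test_a))
print(list(train_a.indices) == list(train_b.indices))
```

The two subsets never overlap, and together they cover every index of the original dataset exactly once.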

Step 3: Use DataLoader to build the batch iterators for training

train_loader = DataLoader(train_dataset, batch_size=4, shuffle=True, drop_last=False, num_workers=0)
test_loader = DataLoader(test_dataset, batch_size=4, shuffle=False, drop_last=False, num_workers=0)
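With batch_size=4 and drop_last=False, the last batch of an epoch may hold fewer than 4 samples. The arithmetic can be sketched as follows. The helpers num_batches and last_batch_size are hypothetical, and the sample counts assume a dataset of 20 samples split 70/30 (14 for training, 6 for testing).

```python
import math


def num_batches(n_samples, batch_size, drop_last=False):
    """Number of batches per epoch for a map-style dataset."""
    if drop_last:
        return n_samples // batch_size
    return math.ceil(n_samples / batch_size)


def last_batch_size(n_samples, batch_size):
    """Size of the final batch when drop_last=False."""
    remainder = n_samples % batch_size
    return remainder if remainder else batch_size


print(num_batches(14, 4), last_batch_size(14, 4))  # 4 2
print(num_batches(6, 4), last_batch_size(6, 4))    # 2 2
print(num_batches(14, 4, drop_last=True))          # 3
```

Code that consumes a batch should therefore not hard-code the batch dimension; indexing into the batch or reading its shape is safer.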

After reading a batch from the iterator, check the result visually.

How can two images be drawn side by side with plt?

from matplotlib import pyplot as plt

for i, (images, GT) in enumerate(test_loader):
    print(i)
    print(GT.shape)  # (batch, 1, H, W)
    # Show the first image of the batch and its label side by side
    plt.subplot(121)
    plt.imshow(images[0].squeeze(0).numpy(), cmap='gray')
    plt.subplot(122)
    plt.imshow(GT[0].squeeze(0).numpy(), cmap='gray')
    plt.show()

边栏推荐

- 【暑期每日一题】洛谷 P6500 [COCI2010-2011#3] ZBROJ

- MySQL 45 讲 | 07 行锁功过:怎么减少行锁对性能的影响?

- Better performance and simpler lazy loading of intersectionobserverentry (observer)

- Meeting notice of OA project (Query & whether to attend the meeting & feedback details)

- MySQL 45讲 | 08 事务到底是隔离的还是不隔离的?

- [daily question in summer] Luogu p6408 [coci2008-2009 3] pet

- Embroidery of little D

- 【暑期每日一题】洛谷 P7760 [COCI2016-2017#5] Tuna

- Multi thread shopping

- [summer daily question] Luogu p7760 [coci2016-2017 5] tuna

猜你喜欢

![[MySQL] - [subquery]](/img/81/0880f798f0f41724fd485ae82d142d.png)

[MySQL] - [subquery]

美智光电IPO被终止:年营收9.26亿 何享健为实控人

Analyze the roadmap of 25 major DFI protocols and predict the seven major trends in the future of DFI

![【暑期每日一题】洛谷 P7760 [COCI2016-2017#5] Tuna](/img/9a/f857538c574fb54bc1accb737d7aec.png)

【暑期每日一题】洛谷 P7760 [COCI2016-2017#5] Tuna

Leetcode buckle classic problem -- 4. Find the median of two positively ordered arrays

Monitor the bottom button of page scrolling position positioning (including the solution that page initialization positioning does not take effect on mouse sliding)

Sort out the two NFT pricing paradigms and four solutions on the market

207. Curriculum

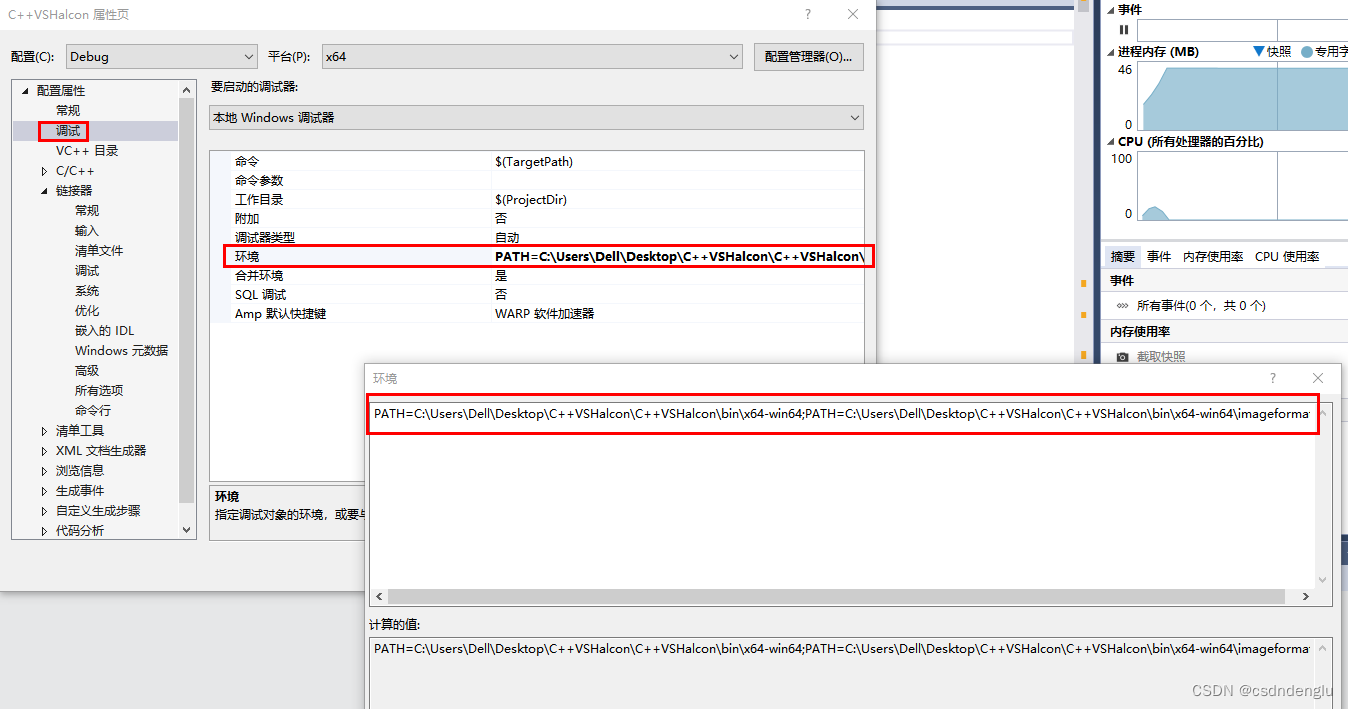

Halcon installation and testing in vs2017, DLL configuration in vs2017

How does MySQL convert rows to columns?

随机推荐

Calculate program run time demo

Popular cow G

功能自动化测试实施的原则以及方法有哪些?

Sqlmap (SQL injection automation tool)

Access数据库引入datagridview数据后,显示错误

logback appender简介说明

小D的刺绣

cs61abc分享会(六)程序的输入输出详解 - 标准输入输出,文件,设备,EOF,命令行参数

MySQL 45讲 | 08 事务到底是隔离的还是不隔离的?

状态机dp(简单版)

【暑期每日一题】洛谷 P7760 [COCI2016-2017#5] Tuna

【暑期每日一题】洛谷 P6336 [COCI2007-2008#2] BIJELE

IonIcons图标大全

Description of rollingfileappender attribute in logback

Halcon installation and testing in vs2017, DLL configuration in vs2017

[summer daily question] Luogu p7760 [coci2016-2017 5] tuna

程序的静态库与动态库的区别

Use custom annotations to verify the size of the list

Log4qt memory leak, use of heob memory detection tool

【暑期每日一题】洛谷 P6320 [COCI2006-2007#4] SIBICE