当前位置:网站首页>Neural network learning (2) introduction 2

Neural network learning (2) introduction 2

2022-07-26 18:33:00 【@[email protected]】

Catalog

4.1 Other regularization methods

1. Preface

One reason why deep learning is difficult to achieve the maximum effect in the field of big data is , Training on a huge data set is slow . The optimization algorithm can help us train the model quickly , Improve computational efficiency . Next, let's see what methods can solve the problems we just encountered or similar problems

1. Equipment problems ----- adopt gpu Improve training speed

2. optimization algorithm ---- Reduce the gradient and disappear , Gradient explosion , Local optimum problem ( The exponential increase or decrease in the gradient function is called Gradient explosion perhaps The gradient disappears . )

2. Data set partitioning

First of all, we have a simple review of the data set division involved in machine learning

Training set (train set): The algorithm or model is simulated with the training set Training The process ;

Verification set (development set): Using validation sets ( Also known as simple cross validation set ,hold-out cross validation set) Conduct Cross validation , Choose the best model ;----- Generally omit

Test set (test set): Finally, the model is tested by test set , Evaluate learning methods .

stay Small data volume Era , Such as 100、1000、10000 The size of the amount of data , The dataset can be divided according to the following scale :

In the case of no verification set :70% / 30%

If there is a verification set :60% / 20% / 20%

And in today's Big data era , The size of the data set owned may be millions , So the proportion of verification and test sets tends to be smaller .

100 Ten thousand data :98% / 1% / 1%

Millions of data :99.5% / 0.25% / 0.25%

These ratios can be selected according to the data set .

3. Deviation and variance

“ deviation - Variance decomposition ”(bias-variance decomposition) It is an important tool to explain the generalization performance of learning algorithms .

Generalization error can be decomposed into deviation 、 Variance and noise , Generalization performance By The ability to learn algorithms 、 Sufficiency of data as well as The difficulty of the learning task itself Jointly decided .

deviation : The deviation between the expected prediction and the real result of the learning algorithm is measured , Immediately drew Learning the fitting ability of the algorithm itself The smaller the better. -- There is an under fitting problem

variance : It measures the change of learning performance caused by the change of training set of the same size , Immediately drew The impact of data disturbance -- There have been fitting problems

noise : It expresses the expectation that any learning algorithm can achieve in the current task Lower bound of generalization error , Immediately drew The difficulty of learning the problem itself .

So the deviation 、 What is the relationship between variance and the division of our data set ?

1、 The error rate of the training set is small , And the validation set / The test set has a high error rate , It shows that the model has large variance , There may be over fitting

2、 The error rates of training set and test set are large , And the two are similar , It shows that there is a large deviation in the model , There may be a lack of fit

3、 The error rates of training set and test set are small , And the two are similar , It shows that the variance and deviation are small , This model works better .

So we finally conclude , Variance generally refers to the data model , Whether the disturbance of unknown data can be predicted accurately . and The deviation indicates that the error is large in the training set , Basically, there is no good effect in the test set .

So if our model has large variance or large deviation at the same time , How to solve it ?

resolvent :

For high square difference ( Over fitting ), There are several ways :

Get more data , So that the training can cover all possible situations

Regularization (Regularization)

Find a more suitable network structure ---- There are too many neurons , A little less

For high deviations ( Under fitting ), There are several ways :

Expand the scale of the network , Such as adding hidden layers or the number of neurons

Looking for the right network architecture , Use a larger network structure , Such as AlexNet

Train longer

Keep trying , Until a low deviation is found 、 Low variance framework .

4 Regularization

Regularization , That is to add a regularization term to the loss function ( Penalty item ), The complexity of the penalty model , Prevent over fitting of network

Logically regressive L1 And L2 Regularization :

Add a term to the loss function , So actually, the gradient descent is to reduce the size of the loss function , about L2 perhaps L1 Generally speaking, it is necessary to reduce the size of the regular term , Then it will reduce W The size of the weight . This is our intuitive feeling .

4.1 Other regularization methods

Early stop method (Early Stopping)

Data to enhance

Data to enhance

Data to enhance

Finger pass shear 、 rotate / Reflection / Flip transformation 、 Zoom transform 、 Translation transformation 、 Scale transformation 、 Contrast conversion 、 Noise disturbance 、 Color change And one or more ways to enhance the transformation of combined data To increase the size of the dataset .

Even if the convolutional neural network is placed in different directions , Convolution neural network for translation 、 visual angle 、 Size or illumination ( Or a combination of the above ) Keep invariance , Will be considered an object .

We need to reduce the number of irrelevant features in the dataset . For the car type classifier above , You just need to flip the photos in the existing dataset horizontally , Orient the car to the other side . Now? , Training neural networks with new data sets , By enhancing the dataset , It can prevent the neural network from learning irrelevant patterns , Improve the effect .

版权声明

本文为[@[email protected]]所创,转载请带上原文链接,感谢

https://yzsam.com/2022/207/202207261736095231.html

边栏推荐

- [kitex source code interpretation] service discovery

- ECS MySQL prompt error

- 链表-倒数最后k个结点

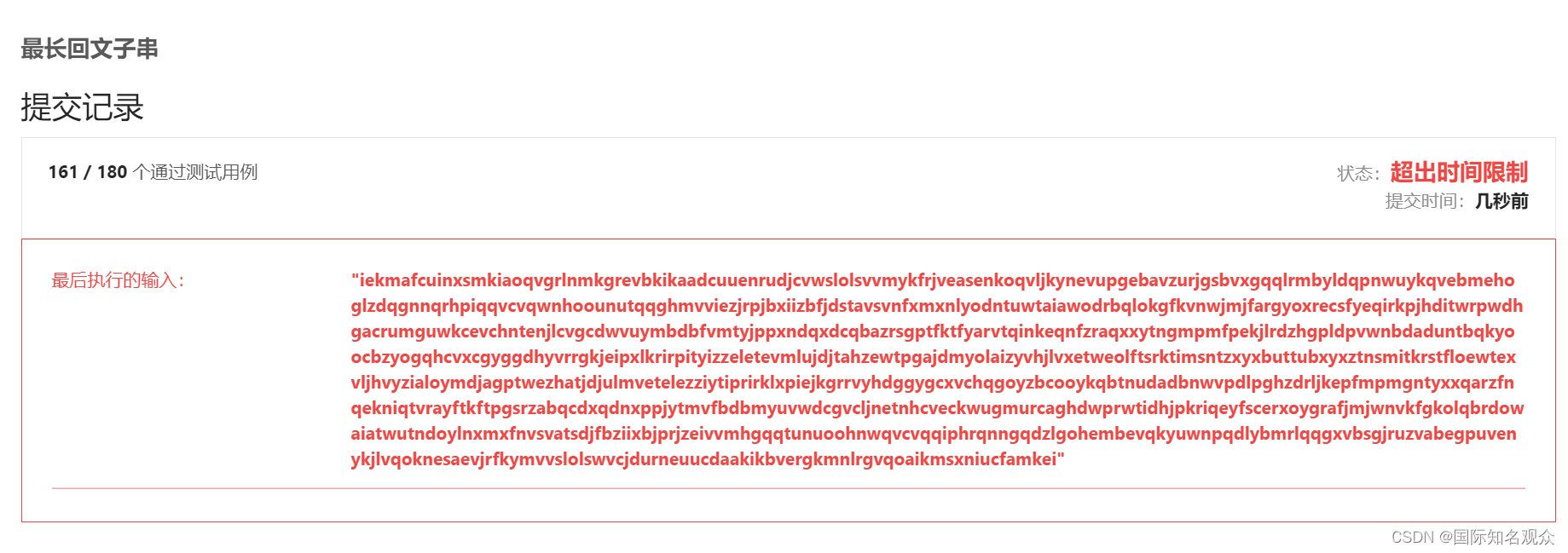

- LeetCode50天刷题计划(Day 5—— 最长回文子串 10.50-13:00)

- 自动化测试工具-Playwright(快速上手)

- 深入理解为什么不要使用System.out.println()

- 文件上传下载测试点

- PS_ 1_ Know the main interface_ New document (resolution)_ Open save (sequence animation)

- 剑指offer 连续子数组的最大和(二)

- 神经网络学习(2)前言介绍二

猜你喜欢

8.2 some algebraic knowledge (groups, cyclic groups and subgroups)

Meta Cambria手柄曝光,主动追踪+多触觉回馈方案

ssm练习第四天_获取用户名_用户退出_用户crud_密码加密_角色_权限

菜鸟 CPaaS 平台微服务治理实践

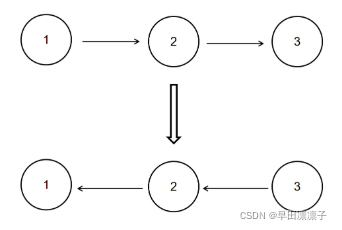

链表-反转链表

Rookie cpaas platform microservice governance practice

Hello World

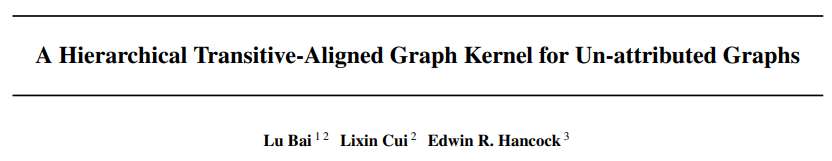

ICML 2022(第四篇)|| 图分层对齐图核实现图匹配

LeetCode50天刷题计划(Day 4—— 最长回文子串 14.00-16:20)

offer-集合(1)

随机推荐

14.梯度检测、随机初始化、神经网络总结

Kindergarten system based on SSM

PS_1_认识主界面_新建文档(分辨率)_打开保存(序列动画)

The first day of Oracle (review and sort out the common knowledge points of development)

SQL判断某列中是否包含中文字符、英文字符、纯数字,数据截取

详解 gRPC 客户端长连接机制实现

China polyisobutylene Market Research and investment value report (2022 Edition)

Are you suitable for automated testing?

[brother hero July training] day 25: tree array

效率提升98%!高海拔光伏电站运维巡检背后的AI利器

Linked list - the first common node of two linked lists

云服务器mySQL提示报错

ICML 2022(第四篇)|| 图分层对齐图核实现图匹配

How to switch nodejs versions at will?

How to assemble a registry

Some tips for printing logs

Redis持久化RDB/AOF

SQL determines whether a column contains Chinese characters, English characters, pure numbers, and data interception

百度飞桨EasyDL X 韦士肯:看轴承质检如何装上“AI之眼”

LeetCode_ 1005_ Maximized array sum after K negations